It turns out Google’s neural network is obsessed with dogs for a reason.

July 23, 2015

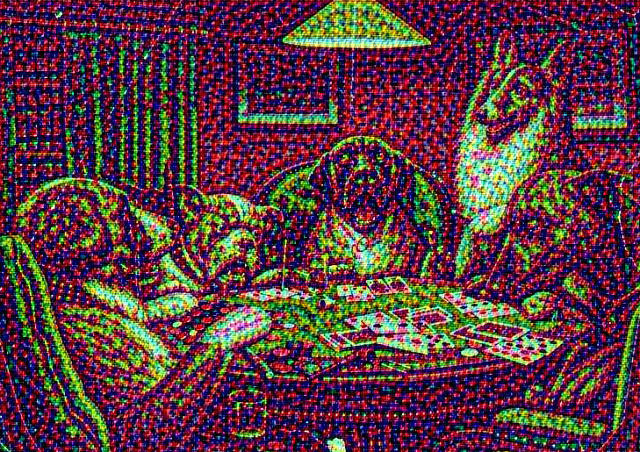

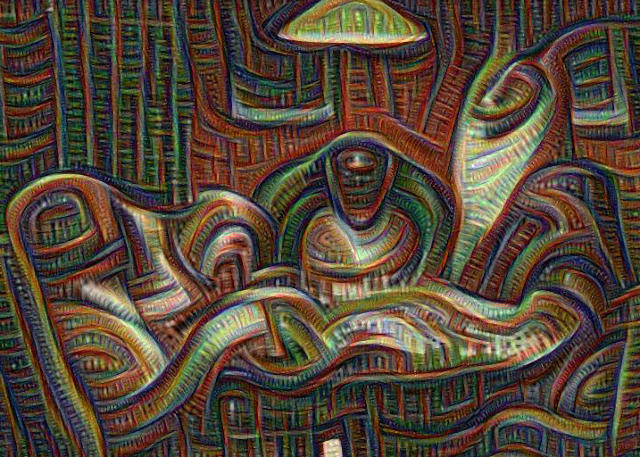

We’ve all been getting a kick out of what artists and developers have been doing with Google’s Deep Dream, the neural-net-powered hallucination AI. Now you can play with it yourself, thanks to the Dreamscope web app. Just upload a picture, choose one of 16 different filters, and turn that image into a hallucinogenic nightmare of infinitely repeating dog eyes.

Which probably has you wondering: what’s up with all those dog eyes anyway? Why does every single picture Deep Dream coughs up look, to a lesser or greater extent, like Seth Brundle from The Fly stuffed his teleport pod full of dogs and then flipped the switch? As it turns out, there’s a pretty simple answer.

As you may know, Google’s Deep Dream runs on the same kind of neural network that powers Google Photos’ ability to identify pictures by their content. Essentially, the network emulates the neurons in the human brain, with individual artificial neurons “firing” whenever they see a part of the picture they think they recognize. Deep Dream’s trippy results come from giving it an initial image and then starting a feedback loop, so that the network begins trying to recognize what it recognizes in what it recognizes. It’s the equivalent of asking Deep Dream to draw a picture of what it thinks a cloud looks like, then draw a picture of what that picture looks like, forever.
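To make the feedback loop a little more concrete, here is a toy sketch in Python. It is not Google’s code: real Deep Dream runs gradient ascent on the activations of a whole layer of a deep convolutional network, while this example swaps in a single hypothetical “feature detector” (a fixed weight vector followed by a ReLU) and a four-pixel “image.” The names `detector`, `detector_grad`, `dream`, and the `dog_eye` pattern are all made up for illustration. Each pass nudges the image toward whatever the detector already responds to, then feeds the result back in.

```python
# Toy illustration of a Deep Dream-style feedback loop (NOT Google's implementation).
# Assumption: the "network" is one hypothetical feature detector, ReLU(w . x),
# standing in for the many learned detectors inside a real image classifier.


def detector(image, weights):
    """Activation of the hypothetical detector: how strongly it 'fires' on the image."""
    score = sum(w * x for w, x in zip(weights, image))
    return max(0.0, score)


def detector_grad(image, weights):
    """Gradient of the activation with respect to each input pixel."""
    score = sum(w * x for w, x in zip(weights, image))
    # A ReLU passes gradient through only while the unit is firing.
    return list(weights) if score > 0 else [0.0] * len(weights)


def dream(image, weights, steps=10, step_size=0.1):
    """Repeatedly push the image toward what the detector responds to (gradient ascent)."""
    image = list(image)
    for _ in range(steps):
        grad = detector_grad(image, weights)
        # Amplify whatever the detector already "sees", then loop on the new image.
        image = [x + step_size * g for x, g in zip(image, grad)]
    return image


if __name__ == "__main__":
    dog_eye = [1.0, -1.0, 1.0, -1.0]  # hypothetical pattern the unit was "trained" on
    photo = [0.2, 0.0, 0.1, 0.0]      # an input with only a faint resemblance to it
    print("before:", detector(photo, dog_eye))
    print("after: ", detector(dream(photo, dog_eye), dog_eye))
```

Because the toy unit only passes gradient while it is firing, an input it doesn’t respond to at all is left unchanged; the loop can only exaggerate patterns the detector already half-sees. Scale that up to a network full of detectors tuned to dogs and every run tends to drift toward more dogs.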

Where do the dogs come in? This Reddit thread offers some insight. A neural network’s ability to recognize what’s in an image comes from being trained on an initial data set. In Deep Dream’s case, that data set comes from ImageNet, a database of 14 million human-labeled images built by researchers at Stanford and Princeton. But Google didn’t use the entire database. Instead, it used a smaller subset of ImageNet released in 2012 for a competition… a subset that contained “fine-grained classification of 120 dog sub-classes.”
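For a sense of proportion, a quick back-of-the-envelope calculation helps. It assumes the 2012 competition subset is the standard ILSVRC 2012 classification label set of 1,000 categories, a figure not given in this article; the 120 comes from the quote above.

```python
# Back-of-the-envelope share of dog labels in the 2012 competition subset.
# Assumption: the subset uses the 1,000-category ILSVRC 2012 classification label set.
TOTAL_CLASSES = 1000
DOG_CLASSES = 120  # "fine-grained classification of 120 dog sub-classes"

dog_share = DOG_CLASSES / TOTAL_CLASSES
print(f"{dog_share:.0%} of the labels are dog breeds")  # prints "12% of the labels are dog breeds"
```

In other words, roughly one category in eight is a kind of dog, so a large slice of what the network learned to tell apart is dogs.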

In other words, Google’s Deep Dream sees dog faces everywhere because it was literally trained to see dog faces everywhere. Just imagine how much the internet would be freaking out about Deep Dream right now if it had been trained on a database that included fine-grained classification of LOLCATS instead.

[via Rhizome]
