When it comes to AI, will we learn from past mistakes?

Welcome to AI Decoded, Fast Company’s weekly LinkedIn newsletter that breaks down the most important news in the world of AI. I’m Mark Sullivan, a senior writer at Fast Company covering emerging tech, AI, and tech policy.

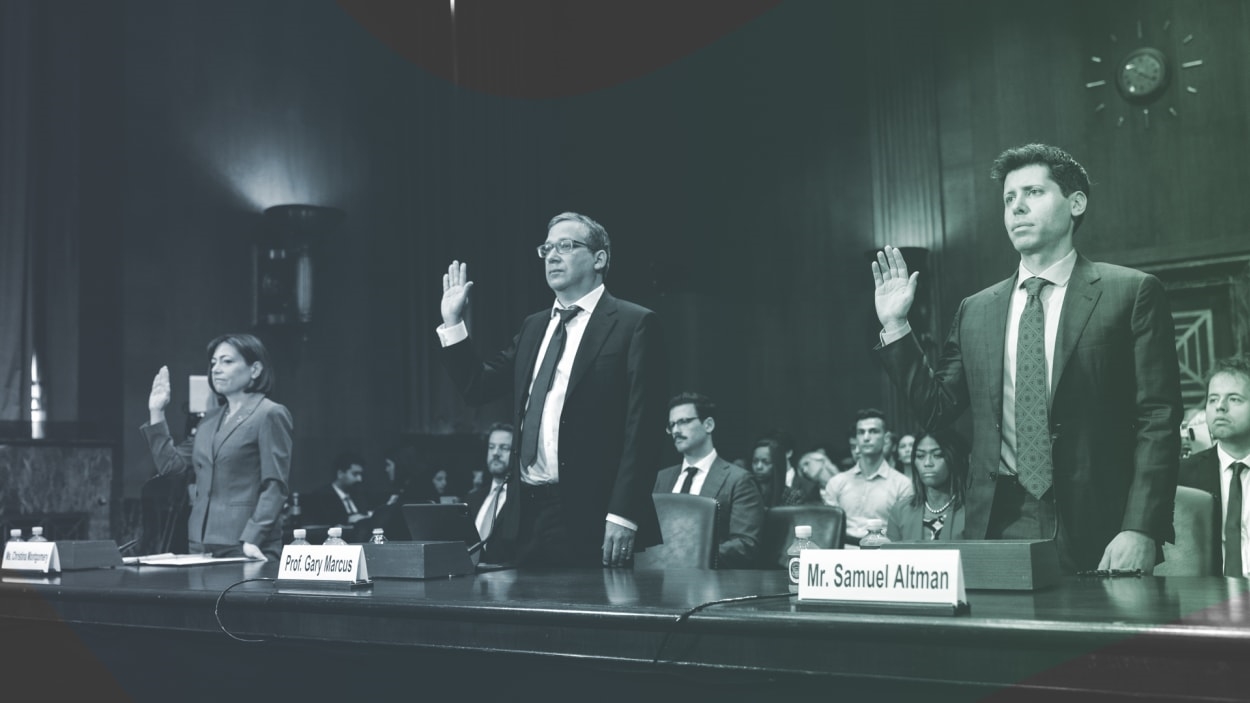

This week, I’m looking at the D.C. debut of perhaps the most important person in AI today, OpenAI CEO Sam Altman. He’s been engaging with Congress at a time when calls for regulation of large language models, like the one that powers ChatGPT, are growing louder.

If a friend or colleague shared this newsletter with you, you can sign up to receive it every week here. And if you have comments on this issue and/or ideas for future ones, drop me a line at sullivan@fastcompany.com.

Mr. Altman goes to Washington

OpenAI CEO Sam Altman testified in front of the Senate Judiciary subcommittee on privacy and technology for the first time Tuesday. This comes roughly six months after the release of its first widely available product, ChatGPT. For reference, Mark Zuckerberg didn’t make his congressional debut until 2018, 14 years after the launch of Facebook—at which point, the social media platform was embroiled in a data-sharing scandal.

There’s a good reason for Altman’s accelerated timeline. Lawmakers are painfully aware of the profound success Facebook had in stiff-arming regulators over the years, and of the government’s failure to protect the public from the platform’s abuses. Now, many of those legislators are keen to make sure the same thing doesn’t happen again with AI. That’s why they want to talk to Altman, early and often.

Altman suggested to the Senate subcommittee the formation of an AI-focused agency (not a new idea). He said this agency should issue licenses to companies wanting to build large language models, complete with strict safety requirements, and a requirement that any models pass certain tests before they’re released to the public. Give him credit for not shying away from a few difficult questions, like how AI companies should respect the copyrights on web content they vacuum up to train their models (he said content owners should “get significant upside benefit”) and whether AI companies should get Section 230’s legal safe harbor from lawsuits over AI-generated content (he said they shouldn’t).

Still, there were lots of missed opportunities. There were no questions about how exactly companies might clearly label AI-generated content, and there was barely any discussion around whether AI companies need to be more transparent on the data used to train AI models. I suspect Altman will be called back to testify, perhaps numerous times, as Washington gets closer to understanding the unique privacy, misinformation, and safety threats large language models might pose.

Google’s new generative AI-powered offerings

The last major salvo in the generative AI arms race came last week when Google used its annual I/O developer event to announce a flurry of new generative AI tools. Google has been under a lot of pressure to counter the generative AI developments of Microsoft, whose products are powered by OpenAI models.

The centerpiece of Google’s generative AI announcements is a new and more powerful large language model called PaLM 2, which the company says is already the brains behind 25 new products and features. “[It’s] obviously important for us to get this in the hands of a lot of people,” said Zoubin Ghahramani, VP of Google DeepMind, who leads the research team that built the PaLM 2 models. He said PaLM 2 is smarter and more trustworthy than its predecessor. “We’ve actually trained PaLM 2 deeper and longer on a lot of data.”

Importantly, PaLM 2 brings new powers to Google’s Bard chatbot, which so far has failed to measure up to its ChatGPT rival. PaLM 2’s multilingual powers will be on full display with Bard, which is now available to millions of users in English, Japanese, and Korean.

Also on the roadmap are “extensions,” which are Bard’s answer to OpenAI’s “plugins” for ChatGPT. Extensions, like plugins, are a way for users to access the functionality and data from other apps from within the Bard environment. There will be extensions for Google apps including Docs and Gmail, meaning users can generate ideas from documents and emails within Bard, and then easily flesh out those ideas within the apps. Interestingly, there will also be extensions from other companies to integrate with Bard. The first one, Google says, will be Adobe Firefly, which will give Bard users the ability to generate original images based on text prompts issued to the chatbot.

Not to be upstaged, OpenAI announced that it’s now moving its plugins out of limited beta and making them available to all ChatGPT Plus subscribers. It’s also offering more third-party plugins in its “Plugins Store” this week, confirming my suspicions that the AI giant is indeed having its “App Store” moment.

Anthropic’s Claude gets a longer memory

Earlier this week, Anthropic released a new version of Claude, its large language model-powered chatbot that has a lot more memory than ChatGPT. Chatbots can only devote so much memory to the conversations it has with users, and it can only intake so much data in the way of prompts. For example, if you’re an author and you want help from a chatbot on finishing a novel, you can only fill ChatGPT’s brain with about 3,000 words of your manuscript. With the new version of Claude you can input 75,000 words of text. That’s enough room for a proper novel (albeit one on the shorter side). In fact, the Anthropic team used a famous novel to test Claude.

“[W]e loaded the entire text of The Great Gatsby into Claude-Instant (72K tokens) and modified one line to say, Mr. Carraway was “a software engineer that works on machine learning tooling at Anthropic,” Anthropic researchers wrote in a blog post. “When we asked the model to spot what was different, it responded with the correct answer in 22 seconds.”

(17)