Don’t use Ada Lovelace as a stand-in for all of computing history’s remarkable women

May 28, 2024

Celebrating Charles Babbage’s collaborator is fine, but it’s not a substitute for recognizing later pioneers.

Excerpted from Beyond Eureka! The Rocky Roads to Innovating by Marylene Delbourg-Delphis, with a foreword by Guy Kawasaki (Georgetown University Press).

Lord Byron’s daughter, Ada Lovelace (1815–52), is often called the first computer programmer because she described an algorithm for computing the Bernoulli numbers, and she is lauded for the notes she added to her translation of Luigi Federico Menabrea’s “Notions sur la machine analytique de Charles Babbage.” She was undoubtedly extraordinarily gifted, but it may be a stretch to retrospectively credit her with the advent of computer programming. Babbage also wrote multiple algorithms, and his computing machine never worked. So his efforts, like Ada Lovelace’s, are largely a false start for a computing genre that we view through contemporary eyes. The machine was a failed attempt to expand on mechanical calculators, the first of which, Blaise Pascal’s Pascaline, had been produced and used in the mid-seventeenth century.

Lovelace can be seen as a visionary who anticipated that a machine could do more than crunch numbers: it could manipulate symbols according to rules and create new data (as when she wrote that “the engine might compose elaborate and scientific pieces of music of any degree”), thus advancing the idea of a transition from calculation to computation. Yet here again, we must avoid reinterpreting the past in light of the present. Her remarks may in fact be no more than a restaging of a dream that was very famous at the time: Leibniz’s idea of a “characteristica universalis,” that is, the quest for a universal symbolic language that would not only coordinate human knowledge but also operate as an “ars inveniendi,” an art of inventing, a generative AI of sorts.

However fascinating Ada Lovelace was, emphasizing her exceptionality may ultimately tokenize her and bestow on her a celebrity that relegates her to tabloid tragedy. It’s hard to know how her career would have turned out, given that she died of uterine cancer at thirty-six. Meanwhile, we also create a new form of historical imbalance and overlook women who advanced the women’s cause much further, like the mathematician and astronomer Mary Somerville. Somerville was Lovelace’s mathematics tutor and introduced her to Babbage as well as to numerous other scientists and inventors, including Charles Wheatstone, who created the first commercial electric telegraph, the Cooke and Wheatstone telegraph, in 1837. She and Caroline Herschel were the first women to become members of the Royal Astronomical Society. She enjoyed a high reputation as a scientist, especially after the publication in 1834 of her second book, “On the Connexion of the Physical Sciences,” which was reviewed by the celebrated polymath William Whewell, and she remained engaged in scientific debates throughout her life. She was also the first person to sign a major petition for female suffrage, initiated in 1868 by John Stuart Mill, one of the most influential political economists of the time.

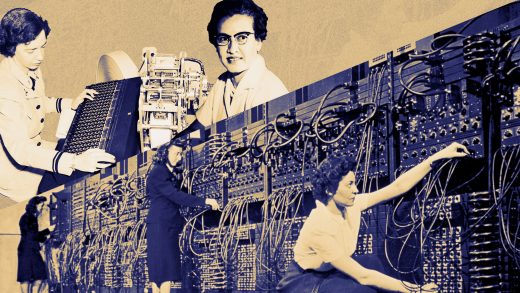

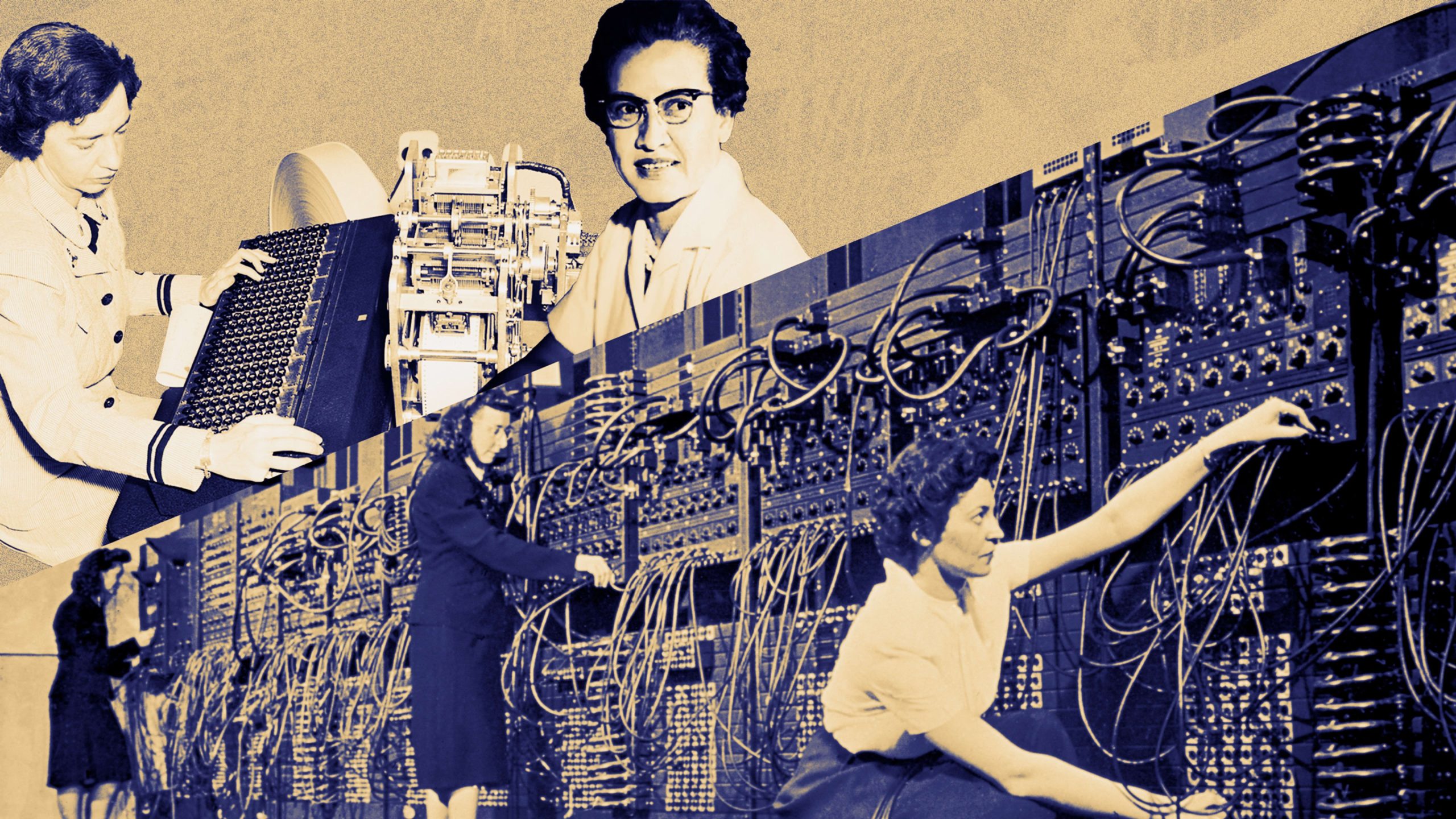

Women in the early days of modern computing

From the point of view of innovation, the women of the ENIAC, who worked out how to program the first general-purpose electronic digital computer to be used successfully, are far more relevant. They weren’t the only pioneers: Grace Hopper, who held a PhD in mathematics from Yale and was a member of the US Naval Reserve, began her computing career in 1944, when she joined a team led by Howard Aiken working on the Harvard Mark I, a machine used during World War II to make calculations for the Manhattan Project. So how is it that women didn’t take over a field in which they had played such a considerable initial role?

The short answer would be sexism, but this label obscures the actual mechanisms and circumstances that breed unfairness and oblivion. Most of these women were categorized under the older concept of “human computer,” which predated modern computing. Human computers were hired to perform research analysis and calculations on the vast amounts of data that had started to accumulate in the mid-1800s, especially in astronomy and navigation. By the 1870s, enough women had been trained in mathematics to become “human computers.” What initially made them attractive was that they were a cheaper workforce and were perceived as more precise and conscientious than men. That’s why Edward Pickering employed an all-female group that became known as the Harvard Observatory computers. The twentieth century carried over this old habit. Women like Barbara Canright, who joined California’s Jet Propulsion Laboratory in 1939 as its first female human computer, and many others at NASA did work that had life-and-death consequences, but their status didn’t change. The same goes for the women of the ENIAC.

As remarkable as they were, these women didn’t have the status of scientists or engineers. Their position was closer to clerical work, a field that had exploded after the 1920s. They were classified as clerical workers (at a “subprofessional” grade, as Janine Solberg has highlighted). When World War II came, the need for human computers flared up but didn’t trigger a change in job classification, especially since there was no real shortage of talent. The Moore School of Electrical Engineering at the University of Pennsylvania (where the ENIAC was developed), funded by the US Army, hired on a massive scale, especially women (about two hundred, i.e., 50% of its human computers), because many young American men were fighting overseas. While it’s true that the women of the ENIAC did more than what was expected of traditional human computers, creating new methods to manage the data on which they had to perform calculations and thereby inventing programming, the institutional framework in which they operated was not going to grant them special recognition. Exceptional employees do not change job classifications.

Their bosses could have been the ones to request a reclassification. They didn’t. Why? People are products of their environments, no matter how remarkable they may be. John Mauchly and Presper Eckert, the two main inventor-innovators behind the ENIAC, were no exception. They were conditioned to view women within preexisting ideological frameworks. Eckert had studied at Penn’s Moore School of Electrical Engineering, which admitted women as undergraduates only in 1954. Mauchly had earned his PhD in physics from Johns Hopkins in 1932, at a time when women there were exotic exceptions. Consider that when the future Nobel laureate in physics Maria Goeppert Mayer came to Johns Hopkins with her husband, Joseph Mayer, she was given only an assistantship in the Chemistry Department.

Female “human computers” were innovators in that they built the software for a major hardware innovation, electronic computers, but in status they remained something like what the couture industry called “petites mains” (little hands), the seamstresses who sewed priceless dresses and stitched fancy beads. Women had always sewn, yet Charles Frederick Worth, an Englishman who established his fashion house in Paris in 1858, is considered the father of haute couture (and we forget that his wife, Marie Vernet, was the first professional model and thus powered the diffusion of his creations). Women had cooked for centuries, but the role of “chef” was appropriated by men. The history of female human computers is the carryover of a centuries-old opposition between the “hand” and the “head.”

So, when electronic computers came about, a device called a “computer” collided with a job also called “computer”: the same word covered two different concepts belonging to different layers of the kairos, with two different histories. Female “human computers” belonged to a linguistic construct in which being acknowledged as an innovator would have been an oxymoron. As Janet Abbate has reminded us, programming was later repositioned as “software engineering,” within engineering, a domain where men were kings.

Although quite a few female human computers did continue as programmers, the repositioning of the genre as engineering eventually excluded them, because companies were starting to look for “engineers,” and very few women held that title in the 1950s and 1960s. It’s also likely that the few women who had graduated in electrical engineering, no easy feat, were hardly thrilled at the idea of applying for jobs they had long seen as destined for human computers. Language frames the way we see opportunities and people.

It’s because the term “human computer” has fallen into disuse that we can evaluate the actual content of what the women of the ENIAC and many others did (like Katherine Johnson, who calculated trajectories for the first American human spaceflight in 1961 and worked on the first moon landing in 1969), and that we can legitimately reintegrate these women into the mainstream history of innovation. Sometimes people are forgotten because they are left out of the linguistic structures that shape the kairos at any given time.

Unlike Ada Lovelace, the female human computers fully belong to the general history of computer innovation. The role of women in the creation of software is not a footnote written for women by women, but a keystone moment in the history of the genre. However, as we pay homage to these women, we may not want to lose sight of the fact that the goal is color and gender neutrality, not the formation of identity-based enclaves that might ultimately continue to rubber-stamp biases or even reinstitutionalize them in a different way. So as much as we want to eulogize history makers, we must still look at what reality shows today. Major tech companies may claim that they “take diversity seriously,” but for all the data-centricity they display when selling us advertising, they’re nowhere close to showing comparable effectiveness on diversity.
