2017 laid the foundation for faster, smarter AI in 2018

“AI is like the Wild West right now,” Tim Leland, Qualcomm’s head of graphics, told me earlier this month when the company unveiled its latest premium mobile chipset. The Snapdragon 845 was designed to handle AI computing tasks better. It’s the latest product of the tech industry’s obsession with artificial intelligence. No company wants to be left behind, and whether it’s by optimizing their hardware for AI processing or using machine learning to speed up tasks, every major brand has invested heavily in artificial intelligence. But even though AI permeated all aspects of our lives in 2017, the revolution is only just beginning.

This might be a helpful time to clarify that AI is often a catch-all term for an assortment of different technologies. There’s artificial intelligence in our digital assistants like Siri, Alexa, Cortana and the Google Assistant. You’ll find artificial intelligence in software like Facebook’s Messenger chatbots and Gmail’s auto-replies. AI is defined as “intelligence displayed by machines” but also refers to situations when computers do things without human instructions. Then there’s machine learning, which is when computers teach themselves how to perform tasks that humans do. For example, an MIT face-recognition system recently learned how to identify people the same way humans do without any help from its creators.

It’s important not to confuse these ideas: machine learning is a subset of artificial intelligence. Let’s use the term machine learning when we’re talking specifically about concepts like neural networks and tools like Google’s TensorFlow library, and AI to refer to the bots, devices and software that perform tasks they’ve learned.

Still with me? Good. This year, AI got so smart that computers beat humans at poker and Go, earned a perfect Ms. Pac-Man score and even kept up with veteran Super Smash Bros. players. People started using AI in medicine to predict diseases and other medical conditions, and on social networks to spot suicidal users. AI also began to compose music and write movie scripts.

Everywhere you look, there’s someone trying to add AI to something. And it’s all facilitated by neural networks that Google, Microsoft and their peers continued to invest in this year, acquiring AI startups and launching or expanding AI divisions. Machine learning has progressed quickly, and it’s going to continue improving next year.

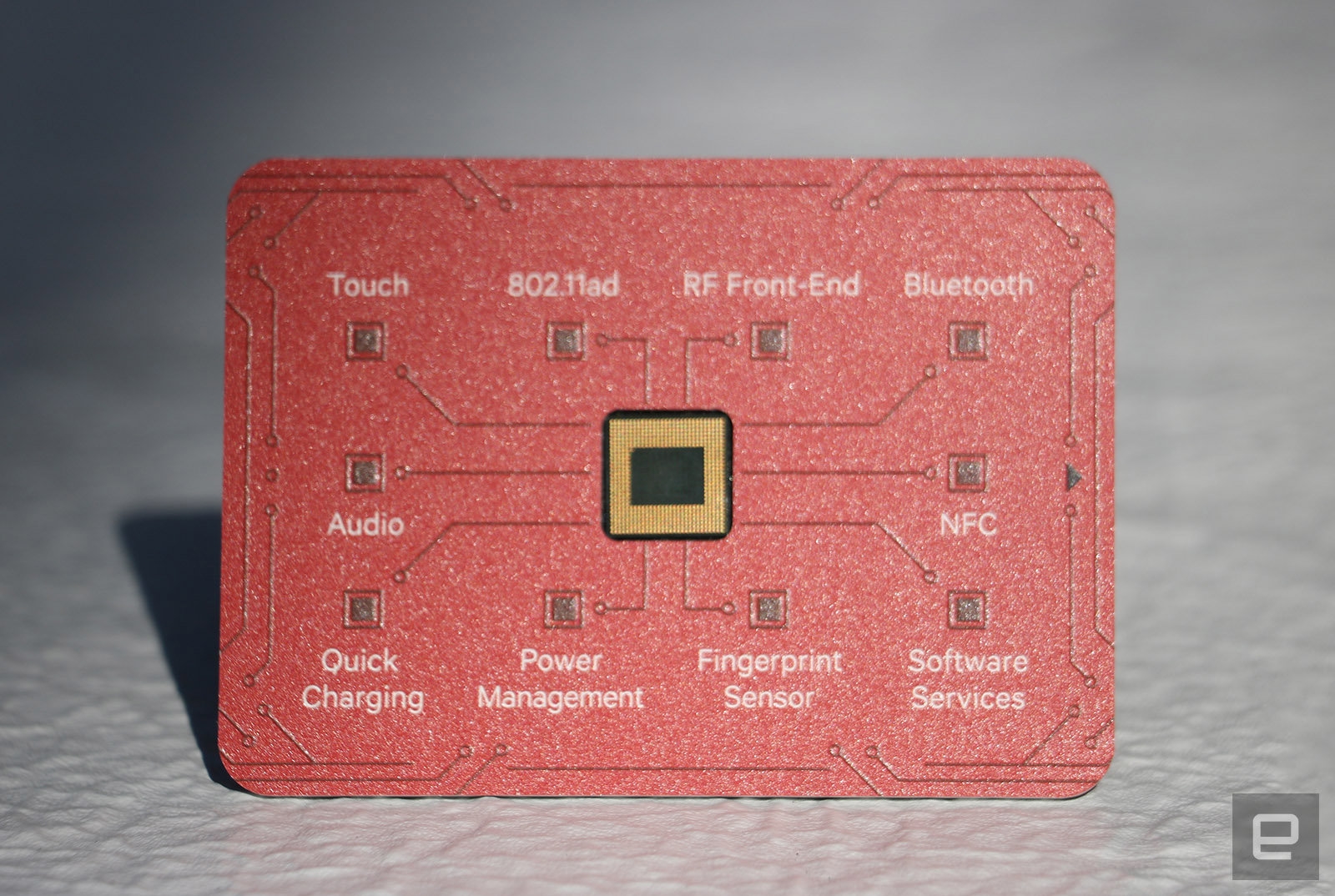

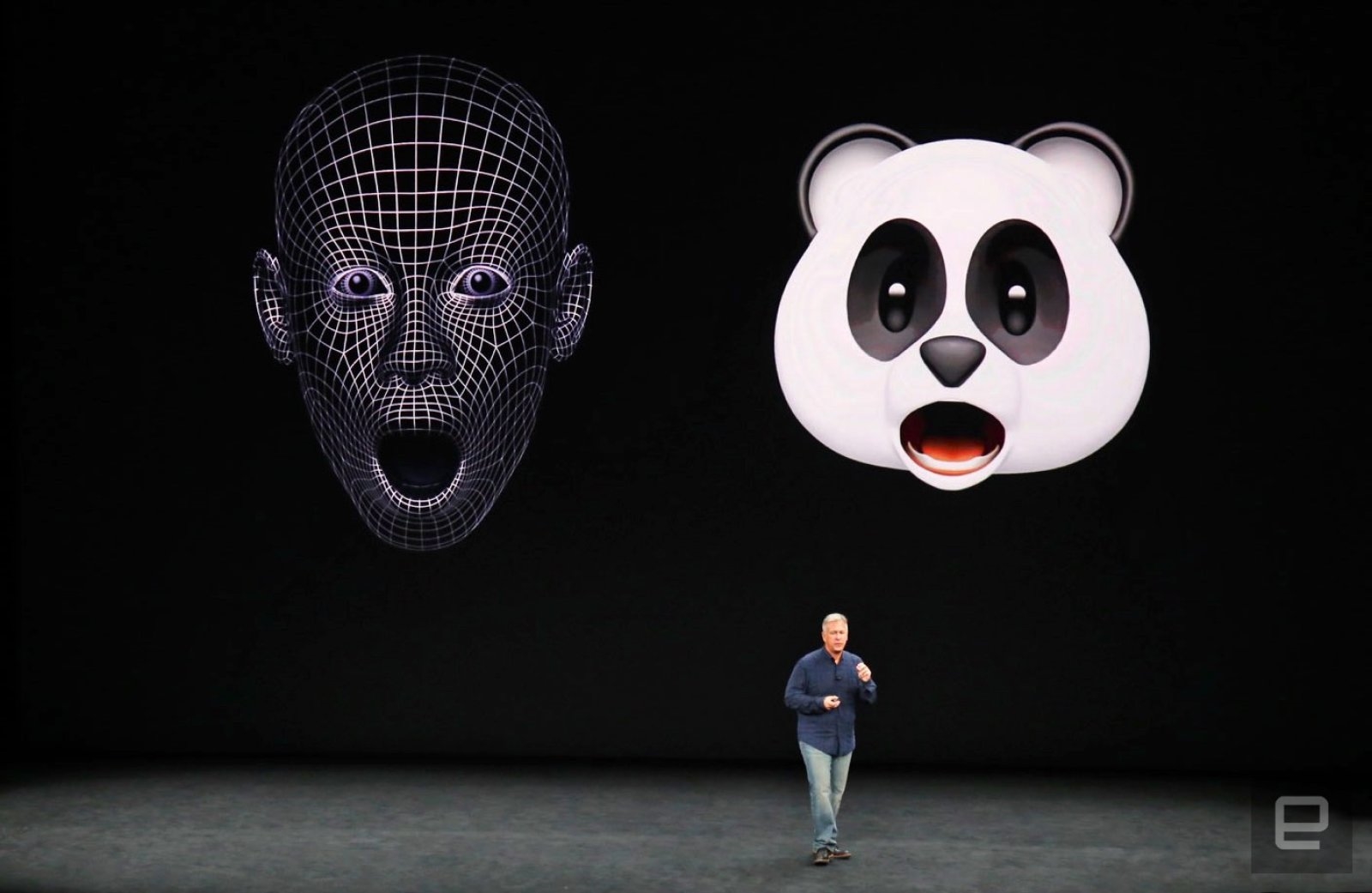

One of the biggest developments as we head into 2018 is the shift from running machine-learning models in the cloud to running them on your phone. This year, Google, Facebook and Apple launched mobile versions of their machine-learning frameworks, letting developers speed up AI-based tasks in their apps. Chip makers also rushed to design mobile processors for machine learning. Huawei, Apple and Qualcomm all tuned their latest chipsets this year to better manage AI-related workloads by offering dedicated “neural” cores. But barring a few examples like Face ID on the iPhone X and Microsoft Translator on the Huawei Mate 10 Pro, we haven’t yet seen concrete examples of the benefits of chips tuned for AI.

Basically, AI has been improving for years, but it’s mostly been cloud-based. Take an image-recognition system, for example. At first, it might only be able to distinguish between men and women who look drastically different. But as the program continues training on more pictures in the cloud, it can get better at telling individuals apart, and those improvements get sent to your phone. In 2018, we’re poised to put true AI processing in our pockets. Being able to execute models on mobile devices not only makes AI faster, it also keeps your data on your phone instead of sending it to the cloud, which is better for your privacy.

It’s clear the industry is laying the groundwork to make our smartphones and other devices capable of learning on their own, both to improve things like translation and image recognition and to provide even greater personalization. But as the available hardware gets better at handling machine-learning computations, developers are still trying to find the best ways to add AI to their apps. No one in the industry really knows yet what the killer use case will be.

Eventually, every industry and every aspect of our lives — from shopping in a mall to riding a self-driving car — will be transformed through AI. Stores will know our tastes, sizes and habits and use that information to serve us deals or show us where to find what we might be looking for. When you walk in, the retailer will know (either by recognizing your face or your phone) who you are, what you’ve bought in the past, what your allergies are, whether you’ve recently been to a doctor and what your favorite color is. The system’s AI will learn what you tend to buy at specific times of the year and recommend similar or competing products to you, showing the information on store displays or tablets on shelves.

Cars will be smart enough to avoid obstructions and use machine learning to better recognize dangers and navigate around hazards. Even your doctors will soon rely on AI to classify X-rays, MRI scans and other medical images, cutting down the time involved in diagnosing a patient.

AI is already prevalent in image recognition, and it will soon become even more pervasive. Home-security cameras are already getting better at distinguishing between individual humans, dogs, cats and cars. Don’t be surprised if this is ultimately used in law enforcement to sift through traffic- and other public-camera footage to look for potential criminals or missing persons.

The digital assistants that we talk to through phones and smart speakers will not only get faster and converse more naturally by learning from our conversations, they’ll also better anticipate our needs to offer the things we want when we want them. When you walk into your home after work, your lights will come on, your thermostat will turn the temperature up and your favorite winding-down music will start playing.

Sure, this already happens, but the existing method relies on triggers you’ve set based on your location or the time of day. In the future, AI will know how to adjust everything in your home just the way you like it while accounting for external factors. For example, if it’s a hot day, your digital assistant can turn up the air conditioning without your input after detecting temperature changes outside. All these automations could eventually make the world of Black Mirror a reality.
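To make the difference between a hand-set trigger and a learned behavior concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not how any shipping assistant works: the names `fixed_trigger` and `LearnedSetpoint`, the temperatures and the choice of a simple least-squares line are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


def fixed_trigger(hour: int, at_home: bool) -> Optional[float]:
    """Today's approach: a rule the user set once, keyed on time and location.

    Returns a thermostat setpoint in Celsius, or None if the rule does not fire.
    """
    if at_home and hour >= 18:
        return 22.0  # hypothetical value the user typed in once
    return None


@dataclass
class LearnedSetpoint:
    """Hypothetical learner: fits setpoint = a + b * outside_temp by least squares.

    Each observation pairs the outside temperature with the setpoint the
    user actually chose, so predictions follow the user's habits.
    """
    history: List[Tuple[float, float]] = field(default_factory=list)

    def observe(self, outside_c: float, chosen_c: float) -> None:
        self.history.append((outside_c, chosen_c))

    def predict(self, outside_c: float) -> Optional[float]:
        n = len(self.history)
        if n == 0:
            return None  # nothing learned yet
        mean_x = sum(x for x, _ in self.history) / n
        mean_y = sum(y for _, y in self.history) / n
        var_x = sum((x - mean_x) ** 2 for x, _ in self.history)
        if var_x == 0:
            return mean_y  # no variation in outside temperature; use the average
        cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in self.history)
        slope = cov_xy / var_x
        return mean_y + slope * (outside_c - mean_x)


# Made-up history: the user picks cooler settings on hotter days.
model = LearnedSetpoint()
for outside_c, chosen_c in [(10.0, 22.0), (20.0, 21.0), (30.0, 20.0)]:
    model.observe(outside_c, chosen_c)

print(fixed_trigger(hour=19, at_home=True))  # the rule ignores the weather
print(model.predict(35.0))                   # the learner extrapolates to a hotter day
```

With this made-up history, the fixed rule always returns the same setpoint, while the learner suggests a cooler one (about 19.5 degrees) for a 35-degree day it has never seen. A real assistant would presumably weigh many more signals than a single outdoor reading.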

In 2017, the AI takeover gained momentum, but the most compelling use cases were confined to controlled, experimental environments. Next year, we’ll start to see more powerful AI emerge that might actually change the way we live. It might not happen right away, but soon AI will run our lives — for better and worse.

