4 ways Google’s newest AI breakthrough will improve your search results

Last May, at Google’s I/O developer conference, the company previewed a new AI algorithm it had been working on, known as MUM. Short for Multitask Unified Model, the technology builds on an earlier Google algorithm, BERT (Bidirectional Encoder Representations from Transformers), which had already given Google’s search a radically more sophisticated understanding of the web pages it pulls together. At I/O, Google said that MUM was 1,000 times more powerful than BERT, and told audiences to stay tuned for more news.

Rather than improving search in any one way, MUM is an ingredient that Google can call on to create a range of new experiences. “What’s really exciting about it is the new things it unlocks,” says VP of Search Liz Reid. “It’s not just that it’s a lot more powerful than BERT. We’ve trained it on 75 different languages, and it has much more of an understanding of connections and concepts.”

Now, at a streaming event called Search On, Google has shown off some of the specific features that MUM is letting it build, some of which are due to arrive in the coming weeks and months:

The questions behind the questions. MUM’s deeper understanding of how topics intersect will help Google deliver search results that are likely to contain useful information even when they don’t squarely reflect the words in a query. “You can type in, ‘When do I plant tomatoes in California?’” says Reid. “Well, behind that, maybe you’re trying to plant a garden for the first time in California, or it’s your first time planting tomatoes.”

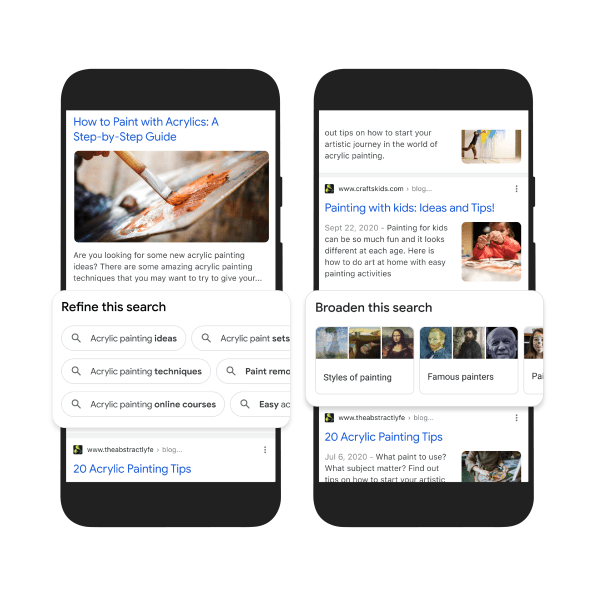

One topic, many aspects. Google is using MUM to let people begin with a simple search—maybe on a subject they’re just beginning to investigate—and then browse through links to material covering a variety of related areas. For example, MUM has helped the search engine divvy up the broad subject of acrylic painting into more than 350 subtopics, ranging from the tools you can use to do the painting to how to clean up when you’re done. Already, MUM is helping power a more visual search results page for some topics that weaves together articles, images, and videos.

Pictures and words. Rather than just understanding information in text form, MUM is capable of comprehending images, video, and audio. That multimedia savvy comes into play in a new feature Google is building for its Google Lens search tool, which lets you initiate a search by aiming your smartphone camera at something in the real world. Thanks to MUM, you’ll be able to snap an image, then supplement it with text to create a search that might have been impossible to express with pictures or words alone. One of Google’s examples: taking a photo of a patterned shirt, then using text to convey that what you’re really looking for are socks with a similar pattern. Or if you want to repair your bike but aren’t sure about the name of a component, you can capture an image and type “how to fix.”

Videos decoded. It’s long been tough for a search engine to understand video with anything like the precision with which it can parse text-based content. Google will call on MUM to help it detect that multiple videos relate to a concept even if they’re not linked by usage of precisely the same term, allowing it to group videos that reflect a concept such as “macaroni penguin’s life story” regardless of whether they use that phrase. This feature will work for YouTube videos shown in Google search results; Google is looking at ways to incorporate videos hosted elsewhere.

Google has been criticized for introducing search features, such as boxes at the top of the page that provide a direct answer to a query, that tend to keep users on its pages rather than sending them to worthy material elsewhere on the web. The company has pushed back on some of that criticism. And according to Reid, one of MUM’s benefits is that it helps Google expose useful sites that might otherwise be buried in results. “A lot of these features are designed not [for] cases where users are coming for a quick answer, but helping people with much more broad exploration,” she says. “And in those, there’s never going to be a single right answer.”

Bias, and how to mitigate it

Google’s BERT algorithm was a landmark in teaching machines to understand written language. And it didn’t just improve Google search: Other companies learned from it and created their own variants, such as Facebook’s RoBERTa, which the social network uses to identify hate speech.

But for all their power, research has shown that BERT and other algorithms inspired by it can perpetuate biases, racial and otherwise, reflected in the text used to train them. This conundrum was among the topics discussed in the Google research paper that sparked the internal dispute leading to AI ethics co-lead Timnit Gebru’s controversial departure from the company in 2020.

Reid acknowledges that MUM carries its own risks. “Any time you’re training a model based on humans, if you’re not thoughtful, you’ll get the best and worst parts,” she says. She emphasizes that Google uses human raters to analyze the data used to train the algorithm and then assess the results, based on extensive published guidelines. “Our raters help us understand what is high quality content, and that’s what we use as the basis,” she says. “But even after we’ve built the model, we do extensive testing, not just on the model overall, but trying to look at slices so that we can ensure that there is no bias in the system.” The importance of this step is one reason why Google isn’t deploying all its MUM-infused features today.

Beyond anticipating how MUM could go awry and working to prevent it before that happens, Google’s search team has plenty of opportunities to use the technology both to solve existing problems with search and to create all-new experiences. The challenge, Reid says, is to “dream about what’s possible. That’s the fun part, but also why we’re early on with MUM. It’s not that we figured out everything we want to do, and we just have to do it. We’re still trying to figure out all different ways it can be useful.”
