5 Lessons to Learn from the Fastly and Akamai Outages

Fastly, one of the internet’s many critical components, experienced an outage on June 8th that took some of the most prominent websites online, well, offline. Then, less than two weeks later, Akamai, one of the largest global content delivery networks, also stumbled, taking out online systems for airlines, banks, and stock exchanges worldwide.

In light of these recent outages, it’s important to remember that failures will happen, and that their real value is the opportunity to learn. So what can you learn from these examples? Here are five lessons from the recent outages and actions you can take to ensure you have a fast website that provides a reliable digital experience even when infrastructure partners fail.

Lesson 1: Everything fails eventually. Have a backup plan.

I was told as a young engineer: “If you design software with the expectation that every dependency will fail at some point, you’ll never be disappointed, regardless of the outcome.”

The recent failures certainly bring this lesson home, but it is easy to forget how routine failure is. Although the scope and magnitude of the Fastly and Akamai outages drew headlines, smaller failures happen across the internet constantly. For example, in the week of June 14 – 20, 2021, there were 427 network outages, 352 internet service provider outages, and 23 outages in the public cloud.

It’s more important than ever to recognize the inescapable fact of the internet: everything fails eventually. To limit the impact, reliability engineers implement redundancy wherever it is financially and operationally possible.

An Online Infrastructure

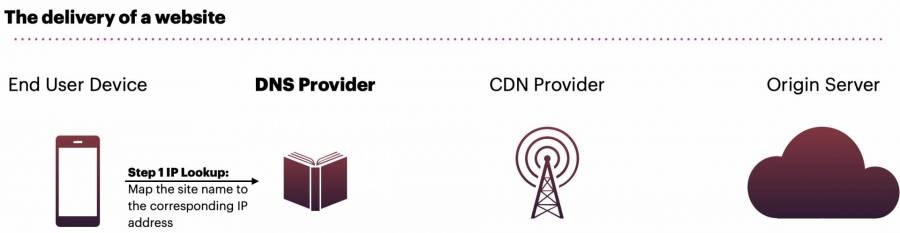

When users access an online store, a delicate handoff occurs between multiple providers of core infrastructure. So first, let’s take a quick look at the main infrastructure layers involved in accessing an online store and identify the opportunities and costs of protecting each one against failures outside your control. The first layer is the Domain Name System (DNS).

The Domain Name System (DNS)

DNS is responsible for translating a website’s name (the domain a customer types into the browser) to its underlying Internet Protocol (IP) address. Think of DNS as the internet’s global phone book.

DNS is distributed, with 13 core Root Servers providing the backbone database and thousands of copies replicated globally across multiple geographic locations. In addition, every website has a DNS provider responsible for mapping the site’s name to its current IP address.

As these values may change over time, every DNS record carries a Time to Live (TTL) that tells resolvers how long they may cache an answer before asking again. A shorter TTL means changes reach users sooner, at the cost of more lookups. If a DNS record does not exist for a website, the end user is left facing an error page stating, “Server Not Found/Webpage Not Available.” (There are many other reasons to get an error page as well.)
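To make the TTL idea concrete, here is a minimal sketch of a resolver-side cache that reuses an answer only until its TTL runs out. The names `DnsRecord` and `TtlCache`, the domain, and the addresses are hypothetical and exist only for illustration; real resolvers are considerably more involved.

```typescript
// Hypothetical sketch: a cache that honors each record's Time to Live (TTL).
interface DnsRecord {
  address: string;    // IP address the name maps to
  ttlSeconds: number; // how long a resolver may reuse this answer
}

class TtlCache {
  private entries = new Map<string, { record: DnsRecord; expiresAtMs: number }>();

  // Store an answer together with the moment it stops being reusable.
  put(hostname: string, record: DnsRecord, nowMs: number): void {
    this.entries.set(hostname, {
      record,
      expiresAtMs: nowMs + record.ttlSeconds * 1000,
    });
  }

  // Return the cached answer, or undefined once the TTL has elapsed
  // (at which point a resolver would ask the DNS provider again).
  get(hostname: string, nowMs: number): DnsRecord | undefined {
    const entry = this.entries.get(hostname);
    if (entry === undefined) return undefined;
    if (nowMs >= entry.expiresAtMs) {
      this.entries.delete(hostname);
      return undefined;
    }
    return entry.record;
  }
}

const resolverCache = new TtlCache();
resolverCache.put("shop.example", { address: "203.0.113.10", ttlSeconds: 300 }, 0);
console.log(resolverCache.get("shop.example", 299000)); // still served from cache
console.log(resolverCache.get("shop.example", 300000)); // undefined: time to look it up again
```

The practical consequence for outage planning: with a 300-second TTL, a DNS change made during an incident can take up to five minutes to reach users whose resolvers cached the old answer.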

For many companies, DNS functionality is provided by a single company — leaving companies exposed to DNS lookup failures if that provider has a material outage. Implementing redundancy for DNS does not cost much, as DNS services are a few hundred dollars annually at most — but there is a high operational cost, as multiple providers must be updated simultaneously about any backend changes.

As is common in the modern age, a bevy of companies provide offerings to automate this process, and it’s often money well spent.

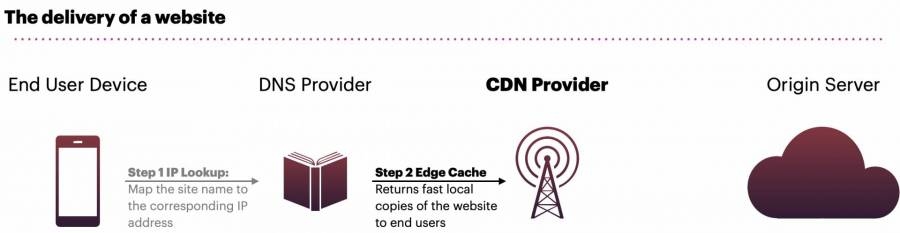

The Edge Network / Content Delivery Network

The next level is the “edge” network, often a Content Delivery Network (CDN).

This is the layer where our high-profile failures (Fastly and Akamai) took place. CDNs help websites load faster by reducing the physical distance between your content and the user. Using a global fleet of servers, CDNs enable users worldwide to view the same high-quality content without slow loading times. Unlike DNS redundancy, where the financial cost is minimal, implementing multiple redundant CDNs is expensive.

For this reason, CDN redundancy is often employed only by enterprise customers and large eCommerce brands. In most cases, the CDN redundancy is implemented at the DNS layer itself. However, for smaller companies, ensuring you have up-to-date IP addresses for your origin server (your web host itself) can save the day if you have DNS separated from your CDN provider.

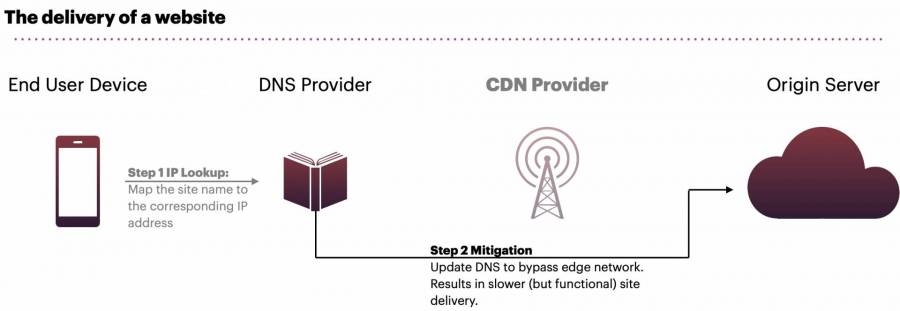

CDN Failure Mitigation

When CDNs fail, engineers can update DNS records to have users bypass the CDN altogether. This gives customers a functional (but slower) experience. During the Fastly outage, many companies sidestepped the impact by simply redirecting users to either their web server or backup CDN provider.
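The decision behind that DNS update can be sketched as a simple preference list: point traffic at the first endpoint that is still passing health checks. This is only an illustration; the `Endpoint` shape, the hostnames, and the threshold of three failed checks are assumptions, not any provider’s actual API.

```typescript
// Hypothetical sketch: pick where DNS should point based on health checks.
interface Endpoint {
  label: string;               // e.g. "primary-cdn", "backup-cdn", "origin"
  hostname: string;            // where the DNS record would point
  consecutiveFailures: number; // failed health checks in a row
}

// Assumed threshold: a few failures in a row before an endpoint is written off,
// so that a single dropped check does not trigger a failover.
const FAILURE_THRESHOLD = 3;

function isHealthy(endpoint: Endpoint): boolean {
  return endpoint.consecutiveFailures < FAILURE_THRESHOLD;
}

// Endpoints are listed in order of preference; the first healthy one wins.
function chooseDnsTarget(preferenceOrder: Endpoint[]): Endpoint | undefined {
  return preferenceOrder.find(isHealthy);
}

const endpoints: Endpoint[] = [
  { label: "primary-cdn", hostname: "cdn-a.shop.example", consecutiveFailures: 5 },
  { label: "backup-cdn", hostname: "cdn-b.shop.example", consecutiveFailures: 0 },
  { label: "origin", hostname: "origin.shop.example", consecutiveFailures: 0 },
];

console.log(chooseDnsTarget(endpoints)?.label); // prints backup-cdn
```

Keeping the origin as the last entry mirrors the fallback described above: a slower but functional experience is better than none.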

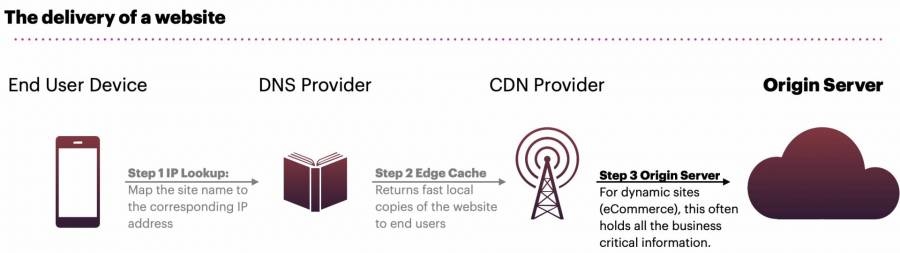

The Origin Web Server

The origin is either a hosted content management system (Magento, WordPress, etc.) or a platform (Shopify, Kinsta, etc.). This is the traditional place where IT deploys redundancy, with backups and load balancing often already in place.

An important lesson from the Fastly outage: ensure your web servers can operate at a capacity that serves all customers if necessary. If you are forced to bypass the CDN, your web servers will be responsible for serving all the traffic. CDNs often cache between 60% and 95% of all web requests, so if you need to bypass this provider due to an outage, can your web server keep up with 10x the site traffic?
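The arithmetic behind that 10x figure is worth spelling out. If the CDN answers a fraction h of requests from cache, the origin normally sees only 1 - h of the traffic, so bypassing the CDN multiplies origin load by 1 / (1 - h). The function name below is hypothetical, and the model assumes total traffic stays the same during the bypass.

```typescript
// Sketch: how much more traffic the origin sees when the CDN is bypassed.
// cacheHitRatio is the fraction of requests the CDN normally serves itself.
function originLoadMultiplier(cacheHitRatio: number): number {
  if (!(cacheHitRatio >= 0 && cacheHitRatio < 1)) {
    throw new RangeError("cacheHitRatio must be at least 0 and below 1");
  }
  return 1 / (1 - cacheHitRatio);
}

// Round to one decimal place for display.
function roundTo1(value: number): number {
  return Math.round(value * 10) / 10;
}

console.log(roundTo1(originLoadMultiplier(0.6)));  // 2.5
console.log(roundTo1(originLoadMultiplier(0.9)));  // 10
console.log(roundTo1(originLoadMultiplier(0.95))); // 20
```

So the 10x in the question above corresponds to a 90% cache hit ratio; at the top of the quoted range, the origin would face roughly 20 times its usual load.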

Lesson 2: Understand your third-party dependencies.

When critical infrastructure fails and takes a site offline — you have a fatal failure. For example, if you have a first-party dependency on a provider, and that provider fails—your support team is hit with a barrage of angry customers (assuming they can reach you).

But what about third-party dependencies, those critical services woven into every online store? The blast radius of the Fastly outage extended beyond the sites that went offline, as it also took out hundreds of SaaS companies. Around the globe, marketing and development teams had blackouts in analytics data, failures in email follow-up campaigns, and more esoteric impacts, such as failures to calculate shipping costs for some regions.

Third-party dependencies also show up in the user experience, such as external JavaScript (jQuery, D3.js) failing to load and leaving the page rendered incorrectly. These non-fatal failures often cause the biggest headache, as users think the site is operational, but some components (e.g., clicking buttons) don’t work.

Analyzing your infrastructure dependencies

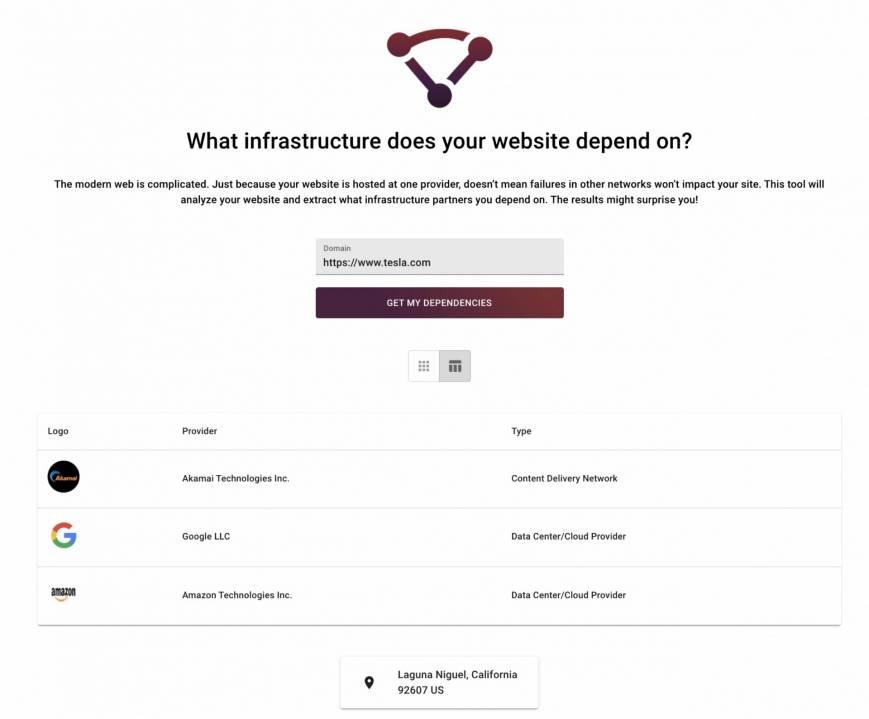

This free online tool provides a way to analyze the infrastructure needs of any website. Using Tesla.com as an example, we can see that there are dependencies on Akamai, Google, and Microsoft. Each one of these providers plays a critical role in the Tesla experience.

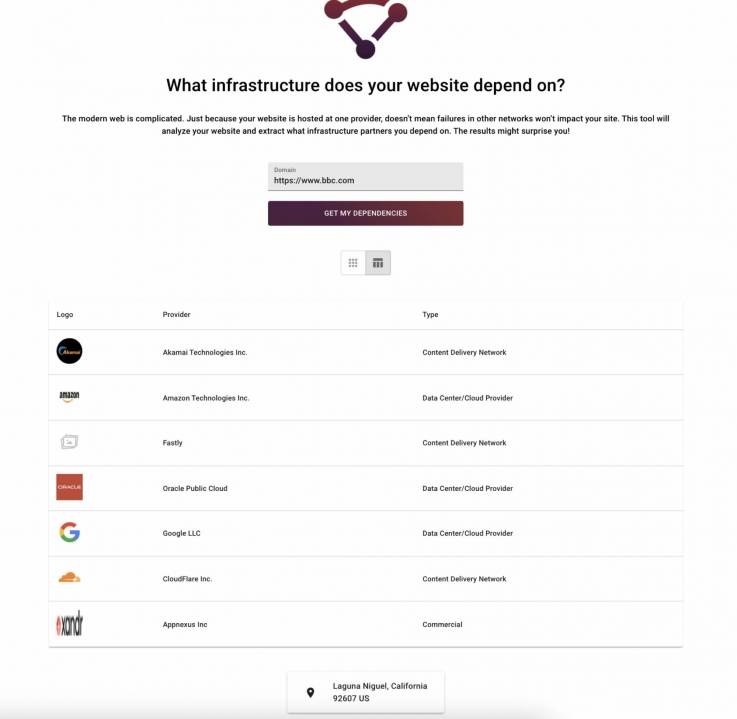

For larger sites, especially those that employ regional content delivery, the web of dependencies can be extensive (and vary by the user’s location!). For example, looking at BBC.com, we can see dependencies on three CDN providers, three cloud providers, and a direct hosted advertising network. That’s a significant amount of infrastructure to oversee.

Fatal Failures vs. Non-Fatal Failures

The solution reliability engineers employ here is to make as many failures fatal as possible. At first, this might seem counterintuitive, but a partially working site may indeed be more damaging than one that is hard down. Furthermore, fatal failures are the easiest to debug as the failure is explicit—the system itself stops.

Non-fatal failures, on the other hand, are often “Heisenbugs,” i.e., notoriously tricky issues that may be transient and never truly root-caused because overall, the system continues operating.

In light of this, reliability engineers push toward making failures explicit and minimizing the blast radius of dependencies by self-hosting as many services as possible.

For example, when an essential piece of JavaScript is needed to load some functionality, hosting this “on origin” (your webserver) is faster and more transparent. In addition, in the ever-increasing push towards privacy, hosting assets (fonts, JavaScript, images, etc.) “on origin” minimizes data sharing with external providers.

The key takeaway: When possible, streamline and host your dependencies on your infrastructure — and may all your failures be fatal.

Lesson 3: If you don’t measure, you won’t know.

For explicit fatal failures, the monitoring challenge is simple: is the website up? But what if just some parts of the website are broken, or worse, so slow they seem broken? Would you even know? Modern websites are surprisingly complex. The average website needs 73 network requests to load. That’s 73 different network calls, often to dozens of separate networks. When outages happen, they might only impact one of those requests, but maybe it’s a critical one (think: credit card validation).

To make matters worse, site speed is not deterministic. For example, sites that load personalized content or ads may experience vastly different performance characteristics from user to user, region to region, or device to device. Complex systems require robust monitoring, and there has never been a better time to implement it than now.

If it’s not Real User Monitoring, it’s, by definition, Fake User Monitoring.

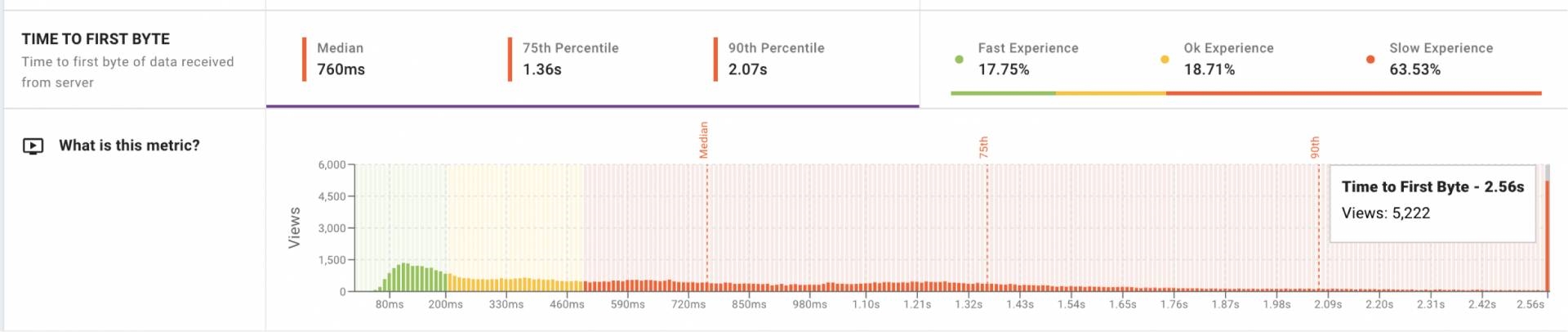

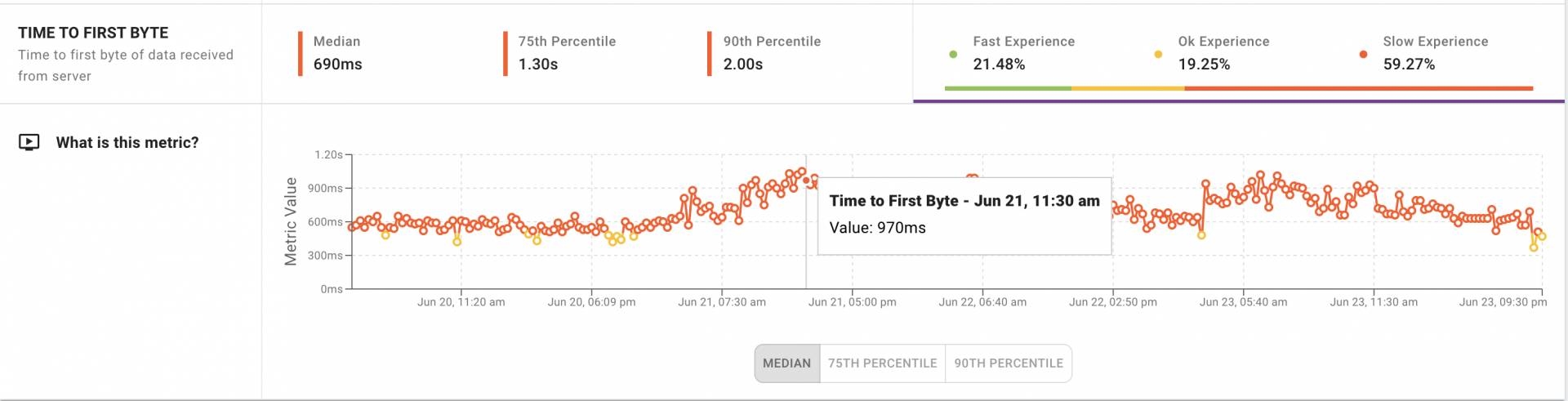

The only way to know how your site performs for your users is to measure performance while they load and interact with it. This type of measurement is commonly referred to as Real User Monitoring, and in the world of eCommerce, this is the only monitoring worth looking at.

When explicit failures happen, like the failure of a third-party component to load, Real User Monitoring systems provide detailed views of what content failed, on what device, and which network or infrastructure partner it came from.

For implicit failures, where a third party may be in a degraded state and thus serving content more slowly, Real User Monitoring is the canary in the coal mine that provides accurate and actionable data to reliability engineers on what’s going on.
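As a rough illustration of the kind of roll-up a Real User Monitoring system performs, the sketch below groups per-request samples by host and surfaces the hosts that are failing or slow. The `ResourceSample` shape is an assumption made for this example; in a browser, similar data could be derived from `performance.getEntriesByType("resource")`, though deciding whether a request failed takes more work than shown here.

```typescript
// Hypothetical sketch: summarize resource timings per infrastructure partner.
interface ResourceSample {
  url: string;        // full URL of the requested asset
  durationMs: number; // time the browser spent fetching it
  failed: boolean;    // true if the request errored or never completed
}

interface HostSummary {
  host: string;
  requests: number;
  failures: number;
  avgDurationMs: number;
}

// Extract the host (including a port, if present) without relying on browser APIs.
function hostOf(url: string): string {
  const withoutScheme = url.replace(/^[a-z][a-z0-9+.-]*:\/\//i, "");
  const [host = ""] = withoutScheme.split(/[/?#]/);
  return host.toLowerCase();
}

// Group samples by host, then sort so failing hosts come first and,
// among equally failing hosts, the slowest comes first.
function summarizeByHost(samples: ResourceSample[]): HostSummary[] {
  const totals = new Map<string, { requests: number; failures: number; totalMs: number }>();
  for (const sample of samples) {
    const host = hostOf(sample.url);
    const running = totals.get(host) ?? { requests: 0, failures: 0, totalMs: 0 };
    running.requests += 1;
    if (sample.failed) running.failures += 1;
    running.totalMs += sample.durationMs;
    totals.set(host, running);
  }
  const summaries: HostSummary[] = [];
  totals.forEach((t, host) => {
    summaries.push({
      host,
      requests: t.requests,
      failures: t.failures,
      avgDurationMs: t.totalMs / t.requests,
    });
  });
  return summaries.sort(
    (a, b) => b.failures - a.failures || b.avgDurationMs - a.avgDurationMs,
  );
}
```

Sorting by failures first and average duration second puts both the explicit failures and the degraded-but-working partners at the top of the list.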

In an eCommerce world, where site speed is critical to business success, Real User Monitoring provides the flight data recorder that engineers and business leaders need to optimize the store. This is even more important today, where even a tenth of a second slowdown in page load time can result in an 8.4% drop in conversion rate and a 9.2% decrease in average order value.

Lesson 4: Error messages matter.

When sites have a fatal failure, the “site unavailable” page rears its head. However, if the failure is more pronounced or further up the delivery chain (as was the case with Fastly), the error page might be something even less user-friendly.

Error pages are often overlooked as a potential source of customer outreach. Yet, a well-crafted error page can turn a frustrated, lost customer into a future potential sales opportunity.

Making great error pages

Error pages offer an opportunity to convey important information to your customer. Great error pages have three key attributes:

- Acknowledge: This is your error, not the customer’s. It’s critical to acknowledge this and offer links to support services, social updates, and status pages. The key is to ensure the customer knows this is a temporary failure and that you will be back online soon.

- Apologize: For wasting the customer’s time. Nobody arrives at your website for free; you’ve paid for the visit with either advertising dollars or marketing effort. Now that the customer is at the site, you have failed to deliver the value you offered. Please take a moment to convey that their visit matters to you.

- Award: Just because your site is offline doesn’t mean the relationship has to end here. Offer the customer a discount if they provide you an email. Error pages can also redirect to third-party websites that (hopefully) are not offline. Use this moment to regain customer trust and move the sale process forward.

When done correctly, error pages can be superheroes—and give your support teams (who are already dealing with other issues) some cover. When eCommerce sites are down, we also likely have lost our tracking and metrics capabilities, so capturing that user email and following up with additional offers might save the day.

Lesson 5: Client-side technology offers advanced protection.

When it comes to failure prevention, we often look at things we can implement at the infrastructure or server level. But what if the failure is the user’s network (cable’s out)? What if your site is so big that you can’t track every infrastructure partner? What if your IT team is months behind on implementing your last infrastructure request? Is it time to give up? Nope, it’s time to look at client-side solutions.

Client-side performance solutions are pieces of code that you ship with your website but that run directly in the user’s browser, like a guardian angel watching over the page load. Over the past decade, the web has gained some powerful yet often overlooked client-side solutions, none more potent than Service Workers.

At your Service (Workers)

The Service Worker API was initially designed to facilitate offline browsing of a website, specifically Gmail. When Gmail first came out, people used it on early smartphones. When they went into the subway (where network connectivity is zero), they couldn’t use the site. Obviously, an email client that couldn’t work offline was a buzzkill.

To fix this, the Google team developed a feature in the browser that would allow the browser itself to have some control over a website, even if the network was down.

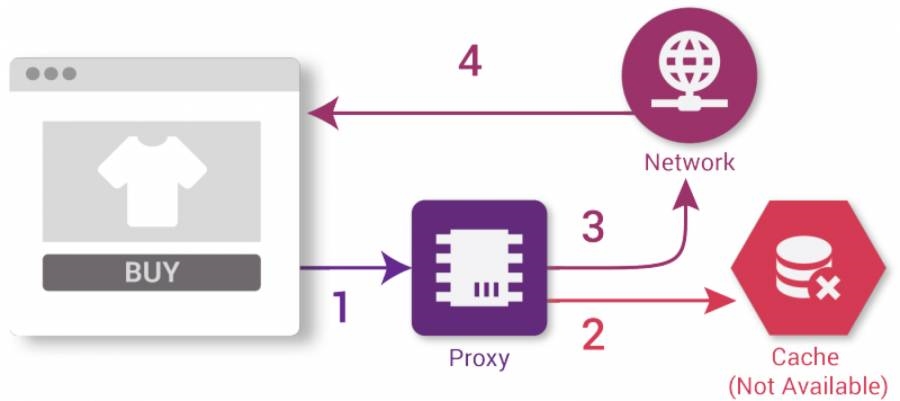

They called the new feature Service Workers, as they are a form of code that can run in the browser (do work) but aren’t dependent on external network services. Service Workers act as a proxy between the website, the browser, and the network, and give developers the ability to store data on the device and respond to requests from inside the browser. In many ways, they are infrastructure-level ideas that run directly on the user’s device.

Service Workers can intercept the network requests the browser sends and take action based on whether the network is available or whether endpoints are responding quickly, for example by returning a locally cached copy of the site in the event of a server error. In advanced cases, they can enable client-side caching that makes the site both more reliable and faster.
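The fallback behavior described here can be sketched as a “network first, cache as backup” routine. To keep the example runnable outside a browser, it uses small stand-in interfaces (`ResponseLike`, `CacheLike`) and a hypothetical `networkFirst` function rather than the real browser types; the comment at the end outlines how it might be wired into an actual Service Worker.

```typescript
// Hypothetical stand-ins for the browser's Response and Cache objects.
interface ResponseLike {
  ok: boolean; // true for a successful (2xx) response
}

interface CacheLike<R> {
  match(url: string): Promise<R | undefined>;
  put(url: string, response: R): Promise<void>;
}

// Try the network first; fall back to the cached copy on a server error
// or when the network request fails outright.
async function networkFirst<R extends ResponseLike>(
  url: string,
  fetchFn: (url: string) => Promise<R>,
  cache: CacheLike<R>,
): Promise<R> {
  try {
    const fresh = await fetchFn(url);
    if (fresh.ok) {
      await cache.put(url, fresh); // remember the last good copy
      return fresh;
    }
    // Server error: prefer a cached copy, otherwise pass the error through.
    return (await cache.match(url)) ?? fresh;
  } catch (networkError) {
    const cached = await cache.match(url);
    if (cached !== undefined) return cached;
    throw networkError; // nothing cached, so the failure stays visible
  }
}

// In a real Service Worker, this logic would sit inside a "fetch" event
// listener and be passed to event.respondWith(...), using a cache obtained
// from caches.open(...). Real Response objects must be cloned before being
// stored, because a response body can only be read once.
```

Note that when nothing is cached, the failure is passed through rather than hidden, which keeps it explicit.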

How Service Workers can help in times of peril:

Caching client-side:

The number-one benefit is the ability to store data, including those pesky third-party resources, on the device itself. A dedicated client-side cache will dramatically speed up a working website and provide some level of protection when individual assets are failing. If the cache is advanced enough, you may be able to reduce your returning customer’s dependencies on your network-based infrastructure by 70% or more.

Client-side failover:

As we discussed, implementing multi-CDN solutions can be costly and requires a certain level of operational expertise. However, with a Service Worker, you can implement client-side failover that is both automatic and operationally simple.

For example, a client-side failover rule might say, “if foo.com is unavailable, or not responding in one second or less, then automatically try backup.foo.com.” All the benefits of advanced infrastructure level failover, with minimal effort.
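That rule translates fairly directly into code. The sketch below tries each host in order and moves on if a host errors or takes longer than the timeout. The function names `withTimeout` and `fetchWithFailover` are hypothetical, and the fetch function is passed in as a parameter so the logic can be exercised without a browser.

```typescript
// Reject if the given promise does not settle within `ms` milliseconds.
function withTimeout<T>(promise: Promise<T>, ms: number): Promise<T> {
  return new Promise<T>((resolve, reject) => {
    const timer = setTimeout(() => reject(new Error(`timed out after ${ms} ms`)), ms);
    promise.then(
      (value) => { clearTimeout(timer); resolve(value); },
      (error) => { clearTimeout(timer); reject(error); },
    );
  });
}

// Try each host in order; return the first successful response.
// The default timeout mirrors the "one second or less" rule above.
async function fetchWithFailover<R extends { ok: boolean }>(
  path: string,
  hosts: string[],
  fetchFn: (url: string) => Promise<R>,
  timeoutMs = 1000,
): Promise<R> {
  let lastError: unknown = new Error("no hosts configured");
  for (const host of hosts) {
    try {
      const response = await withTimeout(fetchFn(`https://${host}${path}`), timeoutMs);
      if (response.ok) return response;
      lastError = new Error(`${host} responded with an error status`);
    } catch (error) {
      lastError = error; // network failure or timeout: try the next host
    }
  }
  throw lastError;
}

// Usage inside a Service Worker might look like:
//   fetchWithFailover("/app.js", ["foo.com", "backup.foo.com"], (url) => fetch(url));
```

One caveat of this sketch: a timed-out request is abandoned rather than cancelled, so a production version would likely also abort the slow request.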

Client-side data buffering:

Most solutions that implement advanced client-side functionality include client-side performance metrics (Real User Monitoring). We can also continue capturing marketing data such as Google Analytics events and store them client-side for later transmission when the site is back online. No more data loss!
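A minimal sketch of this buffering idea follows: events are queued locally and only dropped from the queue once a send succeeds. The `EventBuffer` class, the `AnalyticsEvent` shape, and the 500-event cap are assumptions for illustration. This version keeps events in memory only; an implementation meant to survive page reloads would need persistent browser storage such as IndexedDB.

```typescript
// Hypothetical sketch: hold analytics events until they can be delivered.
interface AnalyticsEvent {
  name: string;
  timestampMs: number;
}

class EventBuffer {
  private pending: AnalyticsEvent[] = [];
  private readonly send: (batch: AnalyticsEvent[]) => Promise<void>;
  private readonly maxSize: number;

  constructor(send: (batch: AnalyticsEvent[]) => Promise<void>, maxSize = 500) {
    this.send = send;
    this.maxSize = maxSize;
  }

  // Queue an event; once the cap is exceeded, the oldest event is dropped.
  record(event: AnalyticsEvent): void {
    this.pending.push(event);
    if (this.pending.length > this.maxSize) this.pending.shift();
  }

  // Attempt delivery. Returns how many events were sent (0 if still offline).
  async flush(): Promise<number> {
    if (this.pending.length === 0) return 0;
    const batch = this.pending;
    this.pending = [];
    try {
      await this.send(batch);
      return batch.length;
    } catch {
      // Delivery failed: put the batch back ahead of anything recorded meanwhile.
      this.pending = batch.concat(this.pending).slice(-this.maxSize);
      return 0;
    }
  }

  get size(): number {
    return this.pending.length;
  }
}
```

Calling `flush()` when connectivity returns, or on a timer, drains the queue in the original order.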

Advanced offline error messages:

What’s better than an error page? How about a full offline error site? Service Workers were designed to allow websites to work, at least partially, offline. A customer may not be able to complete checkout, but you can still serve a few top product pages and a client-side version of an error response that acknowledges, apologizes, and awards. Combined with client-side caches and data buffering, you might be able to allow a customer to “start to shop” while the website comes back online in the background.

Learning from failure

As they say, “to err is human, but to error is software.” As the world trends toward online-first marketplaces, it’s even more vital to learn from failure. The techniques and best practices outlined here give a glimpse into how we can all build robust, performant, and user-centric digital experiences, even in failure.

Image Credit: blue bird; pexels; thank you!

The post 5 Lessons to Learn from the Fastly and Akamai Outages appeared first on ReadWrite.
