5 Questions To Ask Yourself Before Writing Code

— June 19, 2019

Writing code is like finding your path through a dark forest. Behind each tree can lurk a monster, a treasure or a pit. Or there is no path at all. You don’t know until you look. For me, this attitude encapsulates the essence of writing software empirically.

A developer culture is one where this kind of thinking is enabled and encouraged. It values an empirical approach to every level of development. It encourages the active exploration of ways to shorten the feedback-loop even further and deliver value even faster.

I know this sounds very nice in theory. But how does it translate into the practice of writing code? In this post, I share five questions (or cognitive strategies) that I find helpful to ask before starting to develop a feature, build a piece of functionality or fix a bug. And to focus this post a bit more on the ‘How’, I will assume that the feature you’re building is actually valuable and relevant in the first place and requires at least some amount of code. Whether it is valuable and relevant is obviously a vital question to answer before even considering the ‘How’.

1. “What is the purpose, really?”

I strongly believe that you shouldn’t write code before you at least understand the purpose of what you intend to create. Although this might seem obvious, a lot of developers practice a style of “Gung-Ho”-coding that leaves little time for this. And this includes me. In my eagerness to write code, I often forgot to take a step back and look at the big picture first.

It’s a bit like starting to write a book without first having at least a basic sense of the story you want to tell. Or indeed, venturing into a dark forest without first considering why. If you don’t take this step back, you will end up writing a lot of code that isn’t necessary or useful.

Now, I’m not suggesting you should analyze until you have every detail nailed down. Writing code, even fixing a simple bug, is complex work and involves loads of assumptions and setbacks that you can only discover and validate as you do the work. You don’t know what’s behind the tree until you look. So you have to allow yourself to explore how to achieve the purpose as you write the code.

Before writing code, I always want to make sure I can comfortably and easily complete the following two sentences in layman’s terms:

- “This feature/Product Backlog Item/code exists in order to …”;

- “I will know that I’ve implemented it successfully when I …”;

This is also an excellent reason why pairing up with someone else is so valuable, as it allows you to actually have this conversation. And if you don’t have that opportunity, you can always explain it to your plant, cat or rubber duck. The mere act of explaining it to someone else (even if that someone doesn’t exist) helps your mind make sense of things.

Once you have an understanding of the purpose, meaning what it is that you intend to achieve, you can start thinking about the steps that you know of right now that are required to achieve that purpose.

2. “What steps are involved in achieving that purpose?”

In order to discover the steps required for a purpose, I find it helpful to tell or draw the story of a feature in terms of its timeline: “First … Then … After which … Followed by …. Finally … (and so on)”. Again, this is something that works best when you pair up with someone.

For example, we recently implemented a feature that automated the process of editing a video to have a nice intro and outro (from a set of variations) and uploading it to YouTube. Our initial understanding of the timeline was:

- First, a user should be able to upload a video through a web-based form (Angular) for further processing;

- Then we have to pick one of five intros and one of four outros;

- After which we have to merge the uploaded video with the intro (before) and the outro (after);

- Which is followed by uploading the merged video to YouTube using an API token;

- Finally, we have to inform the user whether the merge and upload were successful (or not);

Additional steps emerged while writing the code, such as:

- First, an upload has to be registered with YouTube to receive a URL to upload the video to;

- Then we have to upload the video to that URL;

- After which, we have to clean up temporary files left over by the merge to keep the server from clogging up;
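To make the timeline idea concrete, here is a minimal sketch in Python (not the C# we actually used) that models each step as a small function and runs them in order. The `Job` class, the `make_step` helper and the step names are hypothetical placeholders for illustration; a real step would do actual work instead of only recording that it ran.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Job:
    """State handed from one step to the next (field names are illustrative)."""
    source_video: str
    history: List[str] = field(default_factory=list)


Step = Callable[[Job], Job]


def make_step(name: str) -> Step:
    """Create a placeholder step that only records that it ran."""
    def step(job: Job) -> Job:
        job.history.append(name)
        return job
    return step


# "First ... Then ... After which ... Followed by ... Finally ..."
TIMELINE: List[Step] = [
    make_step("receive upload"),
    make_step("pick intro and outro"),
    make_step("merge intro, video and outro"),
    make_step("register upload with YouTube"),
    make_step("upload merged video to the returned URL"),
    make_step("clean up temporary files"),
    make_step("notify user"),
]


def run(job: Job, steps: List[Step]) -> Job:
    """Run the steps in order, passing the job along the timeline."""
    for step in steps:
        job = step(job)
    return job


if __name__ == "__main__":
    finished = run(Job("talk.mp4"), TIMELINE)
    print(" -> ".join(finished.history))
```

Because every step has the same shape, a slice such as `TIMELINE[:3]` can be run on its own, which mirrors the idea that some steps can be built and shipped before the others exist.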

As you can see, thinking about a feature in terms of its timeline helps to break down a larger piece of functionality into a series of connected bits of functionality. Some of these could even be implemented on their own, while others can be skipped completely. And this is where the next question becomes invaluable.

3. “What steps are most critical to the purpose and nag you the most?”

In a “Gung-Ho”-world, we would go ahead and start writing code. But writing code is fraught with unpredictable problems that can heavily impact the feasibility and the time it takes to write it. Inspired by the Cynefin Framework, it is helpful to write small bits of code that act as “probes”, each illuminating as much of the dark forest as possible.

In order to find what lurks in the darkness of the code you still have to write, it is helpful to consider which steps nag you the most. Behind which tree is it most likely you will find a monster or a pit? These are the steps that I often find myself revisiting on autopilot while taking a shower or before trying to fall asleep.

In my example, we found ourselves thinking about two things most of the time: how do you merge three videos with C# code on Linux? And how do you upload a (large) video to YouTube? We considered the first to be the most critical. If we couldn’t pull that off within a short period of time and with relatively few lines of code, the amount of effort involved in the entire feature probably wouldn’t be worth it.

As it turned out, it took only two hours to write a prototype that could run ‘ffmpeg’ and merge three videos into one. Initially, this was just a simple console application with about 100 lines of code (way too long). But once we knew that the code worked, we started to refactor it out into smaller, specialized classes.
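A probe like this can be very small. The sketch below is written in Python rather than C#, and it is not our original prototype. It assumes that ‘ffmpeg’ is installed and on the PATH, and that the three files share the same codecs, so that ffmpeg’s concat demuxer can join them without re-encoding. The function names are made up for illustration.

```python
import subprocess
import tempfile
from pathlib import Path
from typing import List, Sequence


def build_concat_list(parts: Sequence[str]) -> str:
    """Return the text of a concat list file: one `file '<path>'` line per part.

    Paths containing a single quote are not escaped in this sketch.
    """
    return "".join(f"file '{Path(p).resolve().as_posix()}'\n" for p in parts)


def build_ffmpeg_command(list_file: str, output: str) -> List[str]:
    """Build the ffmpeg call without running it, so it is easy to inspect."""
    return [
        "ffmpeg",
        "-y",            # overwrite the output file if it already exists
        "-f", "concat",  # read the input as a concat list
        "-safe", "0",    # allow absolute paths in the list file
        "-i", list_file,
        "-c", "copy",    # copy the streams instead of re-encoding them
        output,
    ]


def merge(intro: str, video: str, outro: str, output: str) -> None:
    """Merge intro + video + outro into one file (assumes ffmpeg is installed)."""
    with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False) as handle:
        handle.write(build_concat_list([intro, video, outro]))
    try:
        subprocess.run(build_ffmpeg_command(handle.name, output), check=True)
    finally:
        Path(handle.name).unlink()  # remove the temporary list file


if __name__ == "__main__":
    # Print the command only; call merge(...) to actually run ffmpeg.
    print(" ".join(build_ffmpeg_command("parts.txt", "merged.mp4")))
```

Keeping the command builder separate from the call that runs it means the riskiest part, whether ffmpeg accepts the inputs at all, can be tried by hand before any more code is written around it.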

Probes don’t have to involve writing code. For uploading a video to YouTube, we were most worried about authentication and the complexity of YouTube’s API, and not so much about writing C# code to talk with their REST-API. So instead we fired up Postman to talk directly to their API and get a sense of what was involved. When we got that working, it was easy to translate the message-exchange into C# code that did the same thing.
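Translated into code, the exchange boils down to two requests: one to register the upload and receive a URL, and one to send the video to that URL. The sketch below is in Python rather than C#. The endpoint and headers reflect my understanding of the resumable-upload flow in the YouTube Data API and should be checked against the current documentation. The function names, the metadata values and the choice to read the whole video into memory are simplifications for illustration.

```python
import json
import urllib.request

# Assumed endpoint for starting a resumable upload; verify against the API docs.
UPLOAD_ENDPOINT = (
    "https://www.googleapis.com/upload/youtube/v3/videos"
    "?uploadType=resumable&part=snippet,status"
)


def build_register_request(token: str, title: str, size: int) -> urllib.request.Request:
    """Step 1: announce the upload and its metadata to receive an upload URL."""
    body = json.dumps(
        {"snippet": {"title": title}, "status": {"privacyStatus": "private"}}
    ).encode("utf-8")
    return urllib.request.Request(
        UPLOAD_ENDPOINT,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json; charset=UTF-8",
            "X-Upload-Content-Type": "video/*",
            "X-Upload-Content-Length": str(size),
        },
    )


def build_upload_request(upload_url: str, token: str, video: bytes) -> urllib.request.Request:
    """Step 2: send the video bytes to the URL returned by step 1."""
    return urllib.request.Request(
        upload_url,
        data=video,
        method="PUT",
        headers={"Authorization": f"Bearer {token}", "Content-Type": "video/*"},
    )


def upload_video(token: str, title: str, video: bytes) -> int:
    """Run both steps against the real API and return the final HTTP status."""
    register = build_register_request(token, title, len(video))
    with urllib.request.urlopen(register) as response:
        upload_url = response.headers["Location"]  # where the video should go
    with urllib.request.urlopen(build_upload_request(upload_url, token, video)) as response:
        return response.status


if __name__ == "__main__":
    request = build_register_request("TOKEN", "My talk", 1024)
    print(request.get_method(), request.full_url)
```

Building the requests separately from sending them makes it easy to compare each one with what worked in Postman before pointing the code at the real API.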

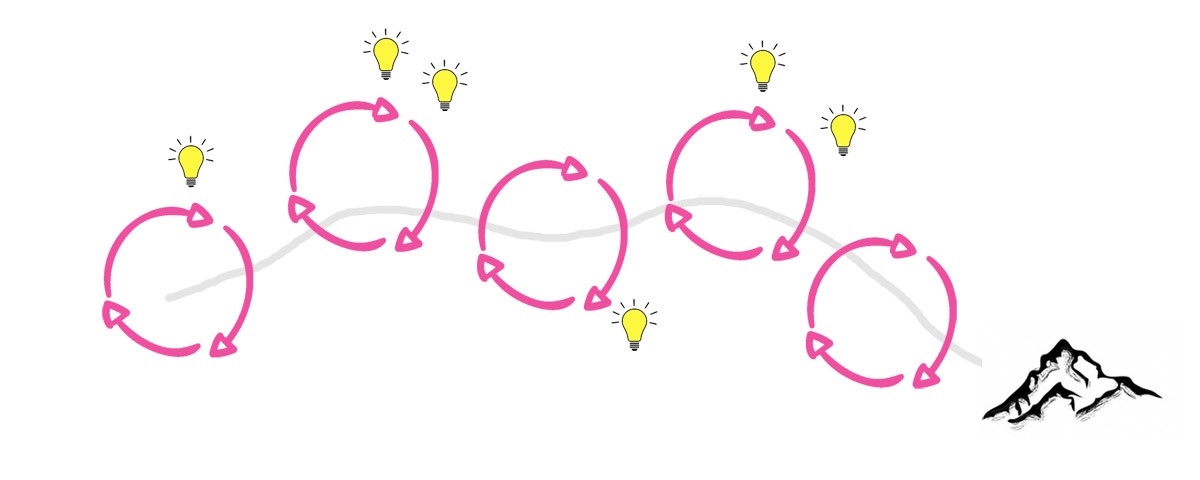

You’ll notice that each of these probes is essentially a short feedback loop. And this leads to the next question.

4. “What is the smallest piece of functionality that you can deploy to production to achieve (part of) the purpose?”

It’s tempting to keep running local probes. But one of the most important probes we have for illuminating the “dark forest” of the code we still have to write is to actually deploy something to a production-environment and make it available to users. This is the most realistic, most complete feedback-loop we can achieve. And the faster we do it, the faster we learn about what else is necessary.

So I always ask myself which steps represent the smallest piece of well-designed, high-quality functionality that can be deployed to production.

For my example, the purpose of the feature was to automate a manual process. The most tedious part of that process was the merging of three videos, which required specialized video-editing software on the laptops of users. So we started by first building a form that allowed users to upload and submit a video for merging. Because this process took quite some time, we made it asynchronous. When the merge was done, users received an email with a link to download the merged video. From here, the user still had to manually upload the merged video to YouTube. But this already resolved 75% of the manual work. We implemented the rest later. Building this first part required about 4–5 hours, after which value was delivered to users and a lot was learned about what else was needed.

Once we had deployed this first full-cycle probe, we started to refactor the codebase. The (working) code was separated into more specialized classes and projects. Code that was initially duplicated for the purpose of rapid development was removed or moved into shared NuGet-packages. We also improved the architecture by pulling the code for merging videos into its own microservice. It connected with the other services through a message queue, which made it more robust to intermittent failures. Every commit on the Git-repository was automatically deployed to production, provided all tests passed. Each iteration of refactoring took at most one hour, followed by an automated deployment of a couple of minutes. In the rare instances where we noticed unusual behavior in the microservice, a fix was deployed soon after.
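To illustrate why a queue helps with intermittent failures, here is a minimal sketch in Python. It uses an in-process `queue.Queue` as a stand-in for a real message broker, and the names `TransientError`, `process_with_retry` and `drain` are made up for this example. Our actual microservice was written in C# and looked different.

```python
import queue
import time
from typing import Callable


class TransientError(Exception):
    """A failure that may succeed when tried again (e.g. a brief outage)."""


def process_with_retry(
    job: str,
    handler: Callable[[str], None],
    attempts: int = 3,
    delay: float = 0.0,
) -> bool:
    """Try the handler up to `attempts` times; return True when it succeeded."""
    for attempt in range(1, attempts + 1):
        try:
            handler(job)
            return True
        except TransientError:
            if attempt < attempts:
                time.sleep(delay * attempt)  # wait a little longer each time
    return False


def drain(
    jobs: "queue.Queue[str]",
    handler: Callable[[str], None],
    notify: Callable[[str, bool], None],
    attempts: int = 3,
) -> None:
    """Process every queued job and report the outcome for each one."""
    while True:
        try:
            job = jobs.get_nowait()
        except queue.Empty:
            return
        succeeded = process_with_retry(job, handler, attempts)
        notify(job, succeeded)  # e.g. send the email with the download link
        jobs.task_done()


if __name__ == "__main__":
    pending: "queue.Queue[str]" = queue.Queue()
    pending.put("talk.mp4")
    drain(pending, lambda job: None, lambda job, ok: print(job, "ok" if ok else "failed"))
```

The web form only has to put a job on the queue and return. Whether the merge succeeds on the first or the third attempt is then invisible to the user, who is notified once the outcome is known.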

Now, this is just an example. The steps are entirely contextual and dependent on the feature and the application. But there are some commonalities that I think apply everywhere:

- What is the smallest bit of functionality that we can safely deploy to production?

- What is the simplest-yet-flexible architecture that allows us to achieve the purpose?

- When this functionality is successfully deployed, what is the first thing we should refactor to improve the code and/or the architecture?

- In case of disaster, how can we recover as quickly as possible?

5. “How can we make our feedback-loop even simpler, faster and more robust?”

It is immediately obvious that this empirical approach to writing software requires automation to be feasible. If testing and deployment have to be done manually each time, we are bound to start making excuses for not doing it (‘I’ll do it tomorrow’, ‘I want to add just this one thing…’). And by not sending out small probes, we neither learn nor limit the risk of wasting time and effort on something that turns out to be too difficult or not useful to users. So I’m always looking for ways to make it easier, simpler and safer to deploy to production.

A couple of years ago, I was working with a team on connecting an application to a remote API for exchanging information that resulted in financial payouts. This remote API was not under our control, and the people responsible for it were generally hard to reach. It could take hours, on occasion even days, to receive feedback on whether or not our message had been processed correctly or what caused an error to be returned. What worried me most was that this slow build-test-feedback-refactor cycle would take too much time, meaning we would probably skip certain tests or improvements for convenience (or laziness). So as an experiment, I spent one weekend writing a simple facade over the remote API that implemented the same protocols for the handful of messages we were already exchanging. Instead of talking directly to the remote API, we configured our applications to talk to the facade. A simple web interface showed traffic and allowed us to relay messages to the remote API, repeat them when an initial attempt failed, receive responses and send ‘mocked’ responses to our applications for testing purposes. Over time, this facade proved so helpful that it grew into a gateway of its own with support for many kinds of messages, advanced routing and a management interface that was very helpful during Sprint Reviews (hint: make something like this when your team does a lot of technical API-based work).
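The core of such a facade is small. The sketch below is a Python illustration of the idea, not the code we wrote back then. The names `ApiFacade` and `Exchange` and the string-based messages are assumptions, and the web interface is left out. It records all traffic, relays messages to the remote API, can repeat failed messages and can answer with a mocked response instead of calling the remote API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Exchange:
    """One recorded message with its response or error."""
    message: str
    response: Optional[str] = None
    error: Optional[str] = None
    mocked: bool = False


class ApiFacade:
    def __init__(self, send_to_remote: Callable[[str], str]) -> None:
        self._send = send_to_remote        # the call to the real remote API
        self._mocks: Dict[str, str] = {}   # message -> canned response
        self.traffic: List[Exchange] = []  # everything that passed through

    def mock(self, message: str, response: str) -> None:
        """Answer this message locally from now on, without calling the remote API."""
        self._mocks[message] = response

    def handle(self, message: str) -> Exchange:
        """Entry point for our applications: mock if configured, relay otherwise."""
        if message in self._mocks:
            exchange = Exchange(message, response=self._mocks[message], mocked=True)
        else:
            exchange = self._relay(message)
        self.traffic.append(exchange)
        return exchange

    def _relay(self, message: str) -> Exchange:
        try:
            return Exchange(message, response=self._send(message))
        except Exception as exc:  # record the failure instead of losing it
            return Exchange(message, error=str(exc))

    def replay_failed(self) -> List[Exchange]:
        """Send every recorded failed message again and record the new results."""
        retried = [self._relay(e.message) for e in self.traffic if e.error is not None]
        self.traffic.extend(retried)
        return retried


if __name__ == "__main__":
    facade = ApiFacade(lambda message: "ACK:" + message)
    facade.mock("ping", "pong")
    print(facade.handle("ping").response, facade.handle("payout-1").response)
```

With the mocks in place, our applications get an answer in milliseconds instead of hours, which is exactly the shortening of the feedback loop this question is about.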

Concluding thoughts

In this post, I shared five questions that I find helpful before venturing into the dark forest of code-yet-to-write. In a developer culture, asking these questions is encouraged and supported. Let me know in the comments if you find them helpful, or if you have other questions that you feel add to these.