A deep look at Google’s biggest-ever search quality crisis

The past few months have been bad for Google’s search reputation. Long considered the “gold standard” in search, Google has seen its search results questioned as never before. It’s a body blow to a core service that should be safe as Google tries to grow in new directions.

Recovering from that blow isn’t easy. What’s happened to Google search is on par with the Apple Maps fiasco or Samsung’s exploding Galaxy Note7 phones.

To this day, people still joke about Apple Maps being bad, even though it’s greatly improved. As for Samsung, the phones might no longer explode, but the jokes continue. Google now faces the same problem. Some of its search results are seen as laughable, embarrassing, or even dangerous.

How Google lost its search groove

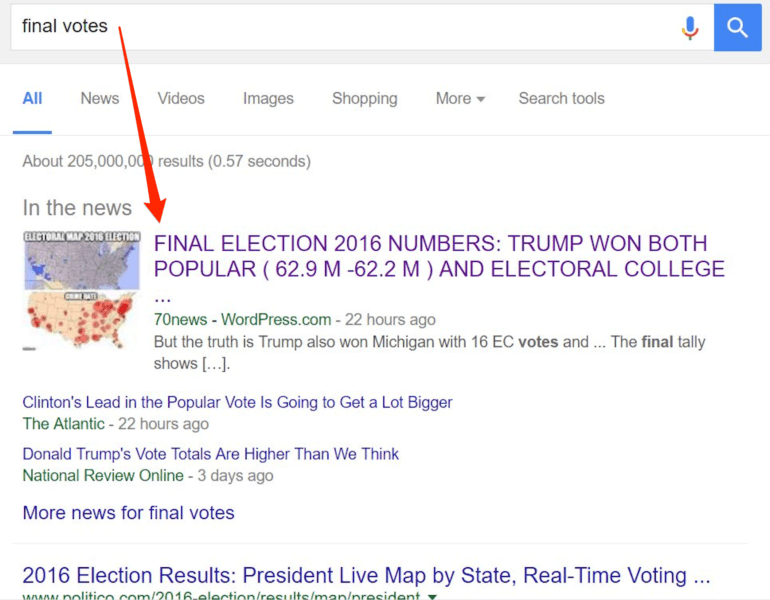

Last November, Google found itself dragged into what had been mostly Facebook’s fake news problem when it listed a page at the top of its “In the news” section that promised the final election counts for the 2016 US presidential election. The page didn’t have the final, official, or even accurate counts.

The next month, in December, Google took a huge hit after a Guardian article highlighted how, for some searches, Google was giving extremely disturbing answers. For example, here’s Google Home speaking at the time about how every woman has some degree of prostitute and evil in her:

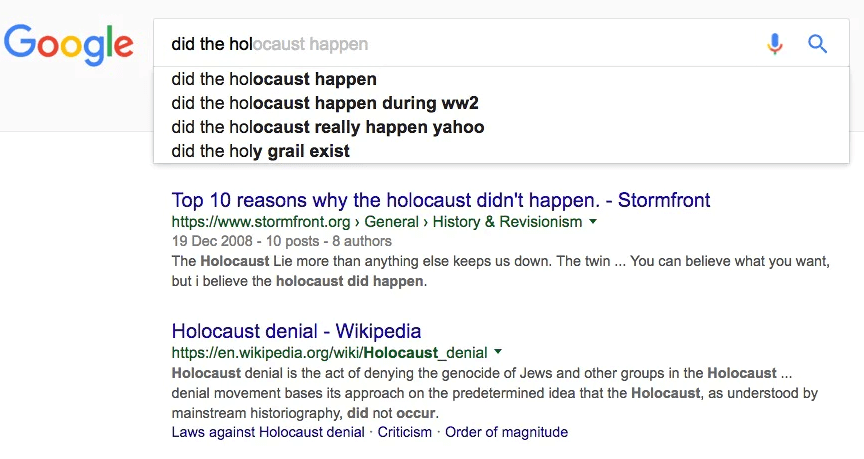

A week after that, the Guardian was back, highlighting how Google was putting a Holocaust denial site at the top of its search results for “did the holocaust happen.”

That hadn’t yet been forgotten when last month, Google could be found confirming that President Barack Obama was plotting a coup:

This was just one of several odd “featured snippets” or “one true answers” appearing at the top of Google’s results.

Less than two weeks ago, Google took further flak after featuring Breitbart for a science news search:

That’s not actually what happened, as I’ll come back to. Nevertheless, it doesn’t stop the “WTF, Google?” reaction. Indeed, it’s a reaction that’s happening because I’d argue Google has lost core faith with people and publications that have bought into the idea that you can Google anything and get the right answer.

Google’s results might be as good as ever. They might even be better than ever. But if the public perception is that Google has a search quality problem, that wins, because we don’t have any hard figures about relevancy.

We don’t have relevancy ratings for search engines

We don’t know which search engine has the best search results. There’s no independent third party diligently and consistently evaluating actual results. We occasionally get consumer satisfaction surveys, but those don’t try to verify that the consumers rating search engines know how to evaluate the quality of results.

Without qualified data, Google earned its reputation as the best search engine because, early on, it was demonstrably better than other search engines. By the time the others caught up, it was over. People stopped talking about using “search engines” and shifted over to “Googling” things, where Google was seen as the best and only serious way to get information. A 2003 New York Times column by Thomas Friedman even asked, “Is Google God?”

Google’s only real challenger in recent years, Bing, was largely laughed off as a competitor when it started. Bing certainly didn’t help itself by spying on Google searchers in order to copy Google’s results. By and large, Google’s results were unquestioned. Google was the best.

Anyone in the search marketing space, or those who watch search closely, knew this wasn’t necessarily the case. Google had weaknesses. But we’re a niche audience that’s largely dismissed. It takes something among the “normals” out there to finally have an impact on Google’s reputation as being great. There have been only a handful of such occasions.

Google’s previous search quality challenges

In 2003, Google came under criticism after its results were “Googlebombed” so that in a search for “miserable failure,” the official biography for George W. Bush generally ranked first. This wasn’t a major crisis for the company, however. Indeed, Google saw it as such a low priority that it took three years before a fix was put into place.

A far bigger crisis was in April 2004, when the anti-Jewish “Jew Watch” site was noticed to be ranking at the top of Google for a search on “jew.” There were calls for the site to be dropped from Google’s results. But Google chose to go with a message of wanting to be inclusionary rather than censor. It quickly posted a disclaimer that appeared alongside those results, which themselves changed over time. The issue largely went away.

In August 2005, Google took a brief blow when Yahoo managed to claim it had indexed more pages than Google. In a world without a universal quality score to measure search engines, size was often used as a proxy. As relatively meaningless as that number was, Google went into red-alert status to claim within about a month that it had overtaken Yahoo with a bigger index — and oh, that it now considered size so passé that it wasn’t going to cite pages indexed as a metric any longer.

Google’s most serious challenge until now, in my opinion, really came on January 1, 2011. In the weeks before, there had been grumblings that “content farms” were somehow walking all over Google’s results, serving up lightweight content to answer common questions. On New Year’s Day 2011, Vivek Wadhwa published a column about why a better Google was “desperately” needed.

The column was an over-the-top condemnation of Google’s search quality backed by no actual metrics. Google was clearly serving hundreds of millions of searches successfully per day, or its actual users would have been abandoning it in droves. They were not. But Wadhwa’s column resonated with tech bloggers who for various reasons just felt in their gut that Google had a problem.

Google again went to red-alert status. Within two months, it launched what was called the Panda Update, a change especially meant to go after content farms and low-quality content. The normals relaxed, assuming all was fixed. Meanwhile, search marketers watched as Google rolled out nearly 30 subsequent updates over a four-year period to get a handle on the issue.

Google’s current search reputation challenge

None of those past crises compares in seriousness to what Google currently faces. Now, the search engine is regularly finding its search quality questioned, often with little perspective and sometimes with flat-out inaccuracies reported as fact.

For example, the Guardian has done a fantastic job highlighting serious issues with Google. But that same publication also declared in December that Google was promoting right-wing bias in a systematic fashion.

That’s not true. If you care to understand in detail why it was demonstrably false, see my previous explanation at the end of this story. The reality is that Google has problems that can seem to favor extreme sites of any leaning. The Guardian writers just didn’t bother to do any easy, basic checks beyond right-wing sites.

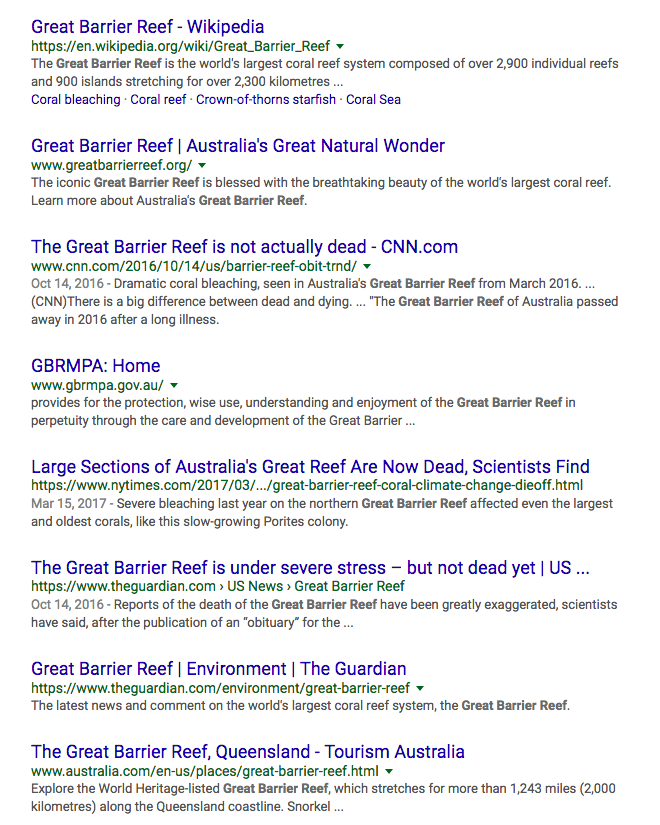

Also consider that issue I mentioned earlier, where Google was spotted listing a Breitbart story first among three news items for a search on “great barrier reef.” After that was tweeted, legitimate questions were raised about whether Breitbart should be a science news source. What seemed missing was any solid examination of the problem.

Instead, Gizmodo first condemned Google for serving up Breitbart for a “climate science” search, then in a follow-up declared in a headline: “Google Says Its Job Is to Promote Climate Change Conspiracy Theories.”

Neither of those things was true. It’s also deeply ironic. Google’s being attacked over whether or not it’s doing a good job presenting factual information, in articles that themselves have factual problems.

A fact check on Google’s alleged failure on facts

A search for “great barrier reef” is not a “science” nor a “climate science” search. It’s a search for a place. Those conducting it might be interested in science information. They might be interested in tourism information. They might be interested in business information about the region. They might just want a map.

Google has no way to know the exact intent of that search. That’s why it presents a variety of results, some related to tourism, some related to climate science. Those results currently include pages from three major and generally respectable news sites — CNN, The New York Times and the Guardian — saying large parts of the Great Barrier Reef are dead, or that it’s under stress but not dead, or that it’s not actually dead at all:

(Those conflicting headlines all come from the same research report, which indeed says that the Great Barrier Reef has serious problems. But because some publications reacted to that initial report to say that the reef was dead, the researchers and local tour operators pushed back — which caused a spate of “it’s not dead or completely dead” stories).

When people search about things, they might also want to know related news. That’s why Google has the Top Stories box. And with this search, Google faces the same issue. People searching for “great barrier reef” aren’t necessarily wanting just science news, so Google provides a variety of current news headlines.

On the day this all exploded, there was fresh news about the stress the Great Barrier Reef is suffering. Breitbart had a commentary on that topic. It happened to get the first spot.

It wasn’t “pinned” to that spot permanently, as the Gizmodo article suggested. It was rotated out as the news itself started to change. Nor was that news for a “science” search, as previously explained. And no, Google didn’t say the story was there because Google’s job was to promote climate change conspiracy theories. Google said it was there as a natural consequence of showing a range of news and views — which is generally what you want from a search engine.

If you want to go further with me examining the issues in this particular search, see some of my commentary in this Twitter moment. But the facts don’t matter, in terms of Google’s search quality reputation. The Google outrage machine is stoked.

After so many failures, I’d say many don’t care about the facts and important questions in search, including how censorship might have unwanted blowback. They just see Google screwing up again, adding to the growing public relations issue. Fix it!

Google DOES have problems that need fixing

Don’t get me wrong. Google deserves plenty of criticism for some of the results that it has been showing. Even when there are reasons that provide perspective, that doesn’t excuse the need for Google itself to take corrective actions. Here are some specific things it is doing and could do better.

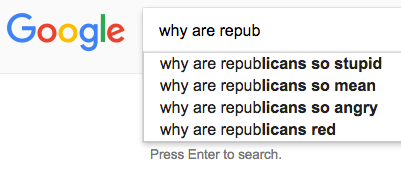

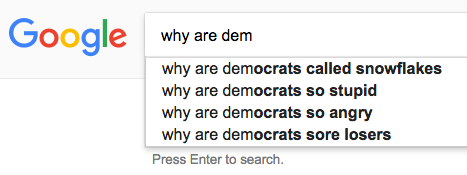

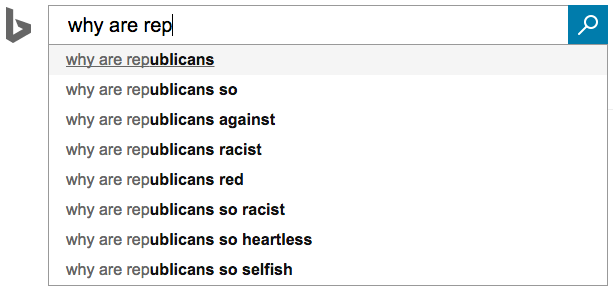

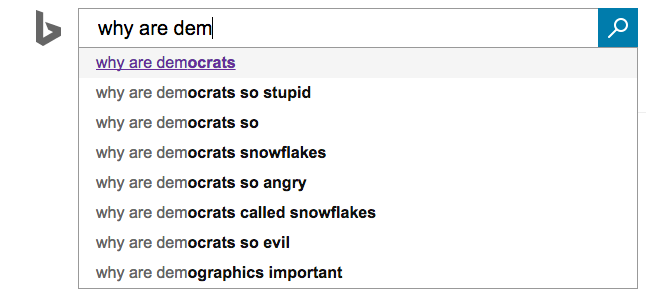

Search Suggestions / Autocomplete: Google began testing a way for people to report offensive search suggestions in February and has promised to improve those suggestions overall. But nearly two months after that limited test began, the reporting tool has yet to be rolled out broadly. Meanwhile, it’s fairly easy to find suggestions that some might find objectionable:

Those are for the political parties of the Democrats and Republicans, but trust me, you can sadly find similar things about racial groups, ethnicities and sexual orientation.

These will continue for as long as Google offers suggestions, which are based on actual searches that real people do in volume. Over time, perhaps they’ll be reduced. But with an infinite number of things to search for, you can’t fix it all. Nor can Bing, by the way:

Google needs to get that reporting tool out broadly as soon as possible. It needs to consider eliminating suggestions on desktop, where they aren’t as necessary as on mobile. It needs to ramp up ways to filter out offensive suggestions. It’s a problem that’s festered for six years or more. Google needs to do more.

Featured snippets: Google could and perhaps should eliminate featured snippets for desktop searches, where they aren’t necessary. Doing so would encourage people to assess a variety of results rather than fixating on a “one true answer” that might not be true at all.

It’s much more difficult to drop featured snippets for Google Assistant and Google Home, because when they work — and they do often work — they are a distinguishing feature that puts Google ahead of Apple’s Siri and Amazon Echo’s Alexa.

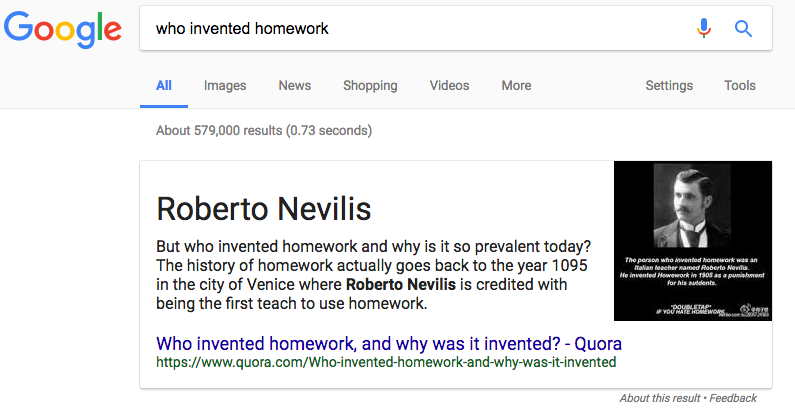

Google hopes a new effort announced last month involving its quality raters will make objectionable and questionable content less likely to appear. But that wouldn’t stop a site like Quora, which isn’t generally objectionable, from showing up with an entirely fake answer about the person who “invented” homework:

That person isn’t real and didn’t invent homework, but because the page looks like it’s providing an answer to the question, Google elevated it. Bing did this as well, when I first noticed this last month.

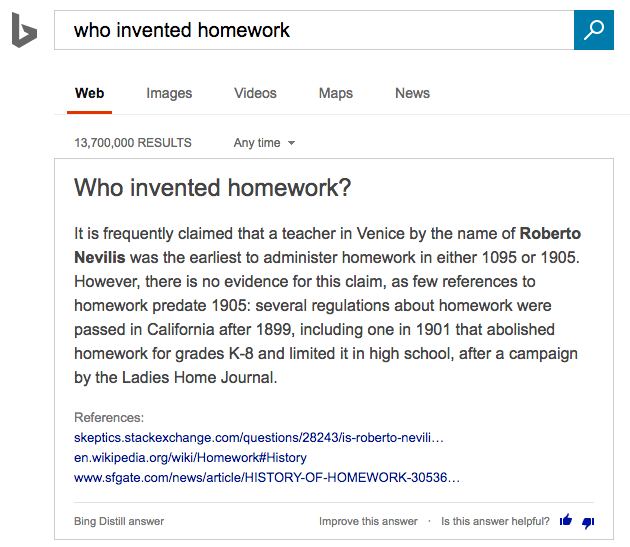

Bing’s since changed to using an answer from its Bing Distill community, where volunteers try to answer questions:

That’s better, but it’s not a scalable solution to the overall problem. People want one fast answer for all types of topics. The more comprehensive any search engine tries to be in doing this, the more likely it is to make mistakes on more obscure or infrequent questions.

The solution overall might be that our voice assistants have to do a better job stressing that they’re making a “best guess” and perhaps encouraging us to review other answers. It might also involve us, as searchers, doing a better job understanding that there’s not always one true answer to everything and that we need to be more critical about what we receive and do further research where it might seem needed.

Search quality: Aside from presenting one answer in a special featured snippets box, Google has had problems where the top web result can be offensive or where most of the results have issues, as was the case with searches about whether the Holocaust was real.

Google launched an algorithm change within a week of that problem coming to light in December. It quickly changed the results for the Holocaust search in question. That change also appears to have helped with some other problematic searches, as I’ve covered earlier. Data from quality raters may help further improve things.

Still, Google almost certainly won’t be able to eliminate all objectionable results. Inevitably, someone will stumble across something that feels grossly wrong. The question then is whether that will be seen as Google failing to do enough or as Google being unable to fix everything perfectly.

Top Stories: The problem of fake news or dubious content appearing in Google’s “Top Stories” section is largely down to Google itself. It deliberately chose to allow publications beyond vetted news sites into this area back in October 2014. That’s why those fake election results appeared there. Changing the name of the section to “Top Stories” last December didn’t change the underlying problem.

Shifting back to only allowing vetted sites won’t solve the issue of Breitbart content showing up. Breitbart is a vetted site that was admitted into Google News. The only way to keep that content out would be to ban the site from Google News entirely. Some might agree with that; others might find there’s a strong argument that a publication that’s one of the few to get a one-on-one interview with President Donald Trump deserves to be retained as a news source.

Search will never be perfect

In the end, it’s good that Google is going through this search quality crisis. This new pressure is forcing it to attend to issues that can no longer be allowed to fester.

It’s not clear, however, if Google will be able to solve its biggest issue overall: the drip-drip-drip of criticism for problems that no search engine can ever fully eliminate, given how broad search is.

Google handles 5.5 billion searches per day. Per day. Billions of searches, with around 15 percent being entirely new, never asked before. Google tries to answer these questions by producing results from billions of pages from across the web. It’s an impossible task to get perfectly right every time.

Pick any search, and you can come up with something that will return objectionable or questionable results. This isn’t a new issue, as some of Google’s past search quality crises demonstrate. But possibly it’s growing, either as more questionable content flows onto the web or as more people are hyperaware of checking to see if such content surfaces in search results.

An impossible task, but one where striving for perfection remains so crucial. Dylann Roof, convicted of murdering nine people in the Charleston church shooting, is an example of how important this is.

Roof has said he did a Google search to learn more about “black on white crime” and that the first site he came to was a white supremacist site, which in turn may have shaped his motivation to commit the murders, as this NPR story recounts. Google no longer lists that site in the first page of its results. Bing does, at the time of this writing.

I suspect that even if Google’s top results had lacked that site, Roof might have gone on searching until he found information confirming the bias he already had. Another part of his “manifesto” espousing hatred toward blacks comes from what he called his own “real life” experience, not from Google searches.

But still. Getting the results as right as possible matters, however impossible that might seem. Search is hard. A big challenge for search engineers in the past was dealing with overt spam trying to attain high rankings. Now they have to grapple with “post-truth” content where pages that sound factual or informative to an algorithm can be anything but.

We should continue to hold Google and search engines to a high standard and highlight where things clearly go wrong. But we should also understand that perfection isn’t going to be possible, and that with imperfect search engines, we need to employ more human critical thinking skills alongside the searches we do and teach those skills to generations to come.

Life itself rarely has “one true answer” to anything. Expecting Google or any search engine to provide one is a mistake.

[Article on Search Engine Land.]
