These days, you can learn web design or Python programming from books or online tutorials. But the next major wave of computing, artificial intelligence, belongs to a select few highly trained experts who earn six-figure salaries. “If you look at how many people are graduating with a data science degree, and what the percentage is of those who have expertise in AI and machine learning, you pretty much end up with thousands only,” says Mazin Gilbert, VP of advanced technology at AT&T Labs.

Even with universities now offering master’s degree programs in data science (as opposed to only PhDs), that still won’t produce enough pros. “You need teams of data scientists who can actually understand neural networks and tweak them,” says Matthew Zeiler, who founded the visual recognition startup Clarifai in 2013 after earning his PhD in computer science. Machine learning, which digests huge amounts of data to identify patterns, is the hottest branch of AI today, with applications as diverse as organizing cell-phone photos, teaching computers to drive autonomous cars, and studying cancer. As Gilbert explains, “No matter how many [people] we train—and other companies are doing the same thing—it’s just not enough to make machine-learning AI mainstream.”

Which is why the industry is moving toward a point-and-click AI revolution. Artificial intelligence is tiptoeing up the accessibility curve that web programming and other technologies have taken as they progress from diplomaed masters to the rest of us. AI is much more complicated than coding a website, but because the software and hardware groundwork has been built, simpler tools for non-experts are emerging. AT&T, Clarifai, and IBM, as well as UC Berkeley, are creating intuitive ways for people with big ideas to start building AI tools without big expertise. This not only helps the technology keep growing, but by preserving and even creating new jobs, it could also be the key to assuaging human fears about the prospect of killer robots—or at the very least, career-killing robots. Opening AI to the masses, in other words, presents an opportunity for humans and machines to thrive.

DIY AI

The cloud is a key ingredient in the mainstreaming of AI. Companies build complex systems that do the heavy calculation work on servers, allowing users to plug into those systems over the Internet. “You don’t have to know about neural networks or machine learning or anything like that. We do all of the tuning for you,” says Zeiler. Clarifai has created an image-recognition system that outlets like the hotel-comparison site Trivago use to determine if a photo depicts a room, a pool, or a beach view, for instance. BuzzFeed relies on Clarifai to tag photos so editors can find the right ones to accompany their stories.

Clarifai’s service is trained to recognize about 11,000 different broad image classes, such as room, window, or furniture. But customers might want to get more specific. So Clarifai brought out a service called Custom Training that can be set up by non-expert users with a few lines of code or even a point-and-click interface.

One customer, Architizer, is a marketplace that matches architects with companies selling building materials like specific stone or wood components. Using Custom Training, Architizer taught a system to recognize such concepts as “facade system,” “Brutalist,” and “living wall” so its users could search for a concept and see corresponding photos. You type in the name of a category (say, “cantilever”), then drag and drop image files showing that architectural feature, which train the system to recognize it.
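To give a rough sense of what "name a category, then supply example images" can mean underneath, here is a minimal Python sketch. It is not Clarifai's method or API: it assumes each image has already been reduced to a short list of numbers (the three-value vectors below are made up), and it uses a simple nearest-centroid rule, where a new image gets the label whose averaged examples it sits closest to. The function names `train_concept_centroids` and `predict_concept` are hypothetical.

```python
from math import dist


def train_concept_centroids(labeled_examples):
    """Average the feature vectors supplied for each concept label."""
    sums, counts = {}, {}
    for label, features in labeled_examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {
        label: [s / counts[label] for s in total]
        for label, total in sums.items()
    }


def predict_concept(centroids, features):
    """Return the label whose centroid is closest to the new vector."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Made-up vectors standing in for whatever features a real system extracts.
examples = [
    ("cantilever", [0.9, 0.1, 0.2]),
    ("cantilever", [0.8, 0.2, 0.1]),
    ("living wall", [0.1, 0.9, 0.7]),
    ("living wall", [0.2, 0.8, 0.9]),
]

centroids = train_concept_centroids(examples)
print(predict_concept(centroids, [0.85, 0.15, 0.15]))  # prints cantilever
```

A production image service would rely on a trained neural network rather than an average, but the user-facing workflow is similar: labeled examples go in, and a label comes out for each new image.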

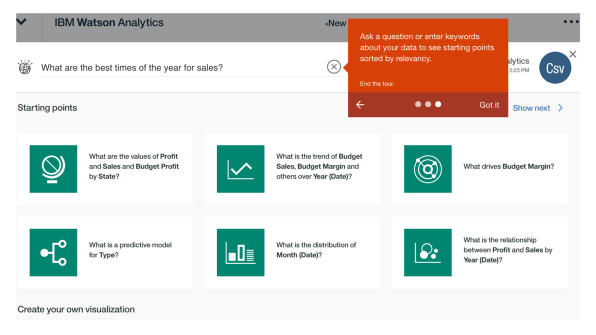

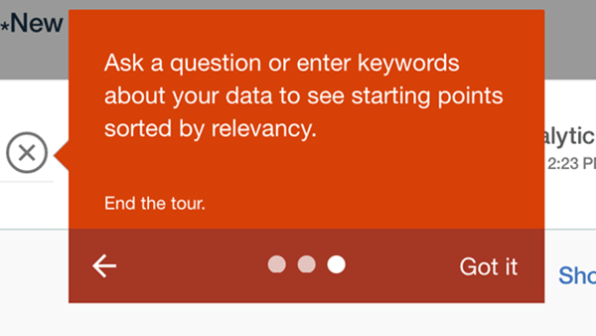

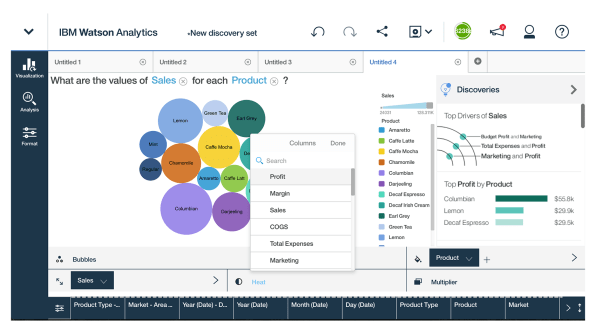

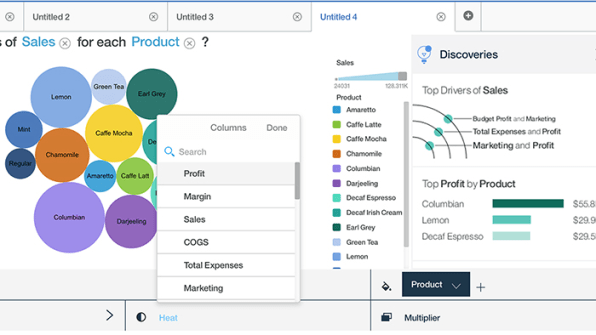

IBM’s cloud-based Watson AI is also moving to non-experts. Watson Analytics allows business users to upload data, such as spreadsheets of sales figures, and kick off an AI analytic process. Right after the upload, Watson chews on the data—the column heads, the range of number values, for instance—and starts proposing ways you might want to slice and dice it. (IBM also has a module that pulls social media information to evaluate how people are discussing a particular topic or product or what they might be planning to buy.)

As an example, IBM sent me a spreadsheet of data from a fictional retail coffee chain, with the column heads “profit,” “margin,” “date,” “market,” “area code,” and “product type.” I could type in the question, “What are the best months for sales?” and get a report with the answer. But Watson Analytics also proposes ways to analyze the data, such as “What is the relationship between profit and sales by year (date)?” and “What drives budget margin?” I could click on those to see where the analysis takes me.
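For readers curious what sits behind a question like "What are the best months?", the underlying arithmetic can be as simple as grouping rows by month and ranking the totals. The Python sketch below is an illustration only, not Watson Analytics code. The rows are invented, and because the column heads listed above include "profit" but no explicit sales column, it assumes profit is the figure being ranked.

```python
from collections import defaultdict
from datetime import date

# Invented rows that loosely mirror the column heads described above.
rows = [
    {"date": date(2016, 1, 15), "market": "East", "profit": 120.0},
    {"date": date(2016, 1, 28), "market": "West", "profit": 80.0},
    {"date": date(2016, 7, 4), "market": "East", "profit": 300.0},
    {"date": date(2016, 12, 10), "market": "West", "profit": 250.0},
    {"date": date(2016, 12, 20), "market": "East", "profit": 100.0},
]


def profit_by_month(rows):
    """Sum the profit column for each calendar month (1 through 12)."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["date"].month] += row["profit"]
    return dict(totals)


def best_months(rows, top_n=2):
    """Return the month numbers with the highest total profit, best first."""
    totals = profit_by_month(rows)
    return sorted(totals, key=totals.get, reverse=True)[:top_n]


print(best_months(rows))  # prints [12, 7]
```

The point of a tool like the one described is that a business user never has to write even this much; the question is typed in plain English and the grouping happens behind the scenes.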

“It enables that natural curiosity where, before [business users] know it, they are actually using analytic algorithms that are helping them identify trends and patterns without ever having to write a line of code,” says Elcenora Martinez of IBM’s Business Analytics division.

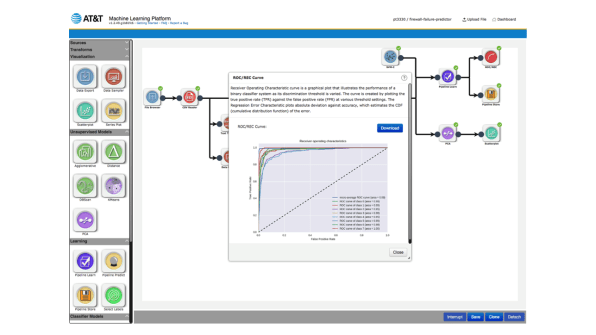

AT&T is going even further by allowing employees to build their own point-and-click AI applications. “We want a quarter of our workforce to use machine learning and AI, and that’s clearly not the case today,” says AT&T Labs’ Gilbert. “Our target is: Lower the barrier of entry to users who have a degree, not necessarily a computer science degree, and cannot even program.” To that end, AT&T is providing its staff with a system, called simply AT&T’s Machine Learning Platform, that lets workers string together AI components (or “widgets” in company parlance), such as a machine learner and natural language processor. (NLP extracts meaning from the free-flowing way people actually speak and write.)

“Today, people write code. They have to sit down and write an application that connects all of these [AI components],” says Gilbert. AT&T is prewriting these connectors, creating “wrappers” around AI widgets that allow them to exchange data. The wrappers also ensure that the widgets only connect in a way that produces a working application. Green checkmarks appear on the widgets if users have connected them properly. “They become like Legos. Now I can take this data; I can process it with that data,” says Gilbert. “I can apply this filter, this natural language [processor], I can try three machine learners . . . and I can do it without any programming.”

For instance, a non-programmer in AT&T customer service could drag and drop an AI tool that would detect customer sentiment from chat logs, says Gilbert. The employee would string together widgets to get the data, process the data, clean the data, run one or more algorithms on it, and output a useful conclusion, such as how satisfied or frustrated AT&T customers are with various support issues.
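The Lego idea Gilbert describes, in which wrapped components only snap together when the connection makes sense, can be sketched in a few lines of Python. This is a hypothetical illustration, not AT&T's platform: the `Widget` class, the type-matching rule, and the tiny word lists used for sentiment are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Widget:
    """A processing step wrapped with the data types it accepts and emits."""
    name: str
    input_type: type
    output_type: type
    run: Callable


def validate_pipeline(widgets):
    """Raise TypeError if a widget's output cannot feed the next widget."""
    for upstream, downstream in zip(widgets, widgets[1:]):
        if upstream.output_type is not downstream.input_type:
            raise TypeError(f"{upstream.name} cannot feed {downstream.name}")


def run_pipeline(widgets, data):
    """Check the connections, then pass the data through each widget in order."""
    validate_pipeline(widgets)
    for widget in widgets:
        data = widget.run(data)
    return data


# Toy word lists; a real sentiment model would be far richer.
POSITIVE = {"thanks", "great", "fixed"}
NEGATIVE = {"slow", "broken", "frustrated"}

clean = Widget("clean", str, str, lambda text: text.strip().lower())
tokenize = Widget(
    "tokenize", str, list,
    lambda text: [word.strip(".,!?") for word in text.split()],
)
score = Widget(
    "score", list, int,
    lambda words: sum((w in POSITIVE) - (w in NEGATIVE) for w in words),
)

chat_line = "  Thanks, the outage is FIXED but support was slow.  "
print(run_pipeline([clean, tokenize, score], chat_line))  # prints 1
```

Connecting the widgets in the wrong order, for example `[tokenize, clean]`, fails the check before any data is processed, which is the code equivalent of a widget that never gets its green checkmark.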

AT&T’s Machine Learning Platform is in a beta phase. About a dozen employees are creating applications that the company is already using in network reliability, network security, and customer experience. AT&T aims to extend the tool throughout the company and possibly offer it as a product for other companies or organizations. “We are absolutely interested in taking it outside,” says Gilbert.

A Helping Hand For Robots

AI has come a long way in understanding words, numbers, and images in the virtual world. A bigger challenge is dealing with physics in the real world: teaching robots how to move around without crashing or to handle objects without smashing. Robotics professor Ken Goldberg leads a project at the University of California, Berkeley, called Autolab, which is training robots to perform tricky tasks, from picking up clutter to suturing wounds. Goldberg recently showed me an experiment with a two-armed robot that (very slowly) packs boxes for shipping.

One of the robot’s arms is supposed to pick up objects, a task it figures out by drawing from an online database of 3D shapes. The other arm’s job is to push the pile of objects, spreading them out so they’re easier to spot (via a camera) and grab. The pushing arm is trained by someone who moves it around. “You would grab its hand and . . . say, ‘I want you to push here, here, and here,’” says Michael Laskey, a graduate student who trained the arm. “And then you just do that 50 times and [the robot] infers the pattern of what you are doing.”
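The kind of demonstration-based training Laskey describes can be illustrated with a deliberately simple Python sketch. It is not the Autolab's algorithm: it assumes each demonstration is recorded as a pair of 2D points (where the pile was, and where the person guided the arm to push), and it uses a nearest-neighbor rule, copying the push from the most similar pile seen so far. The coordinates and the function name `learn_push_policy` are invented for the example.

```python
from math import dist


def learn_push_policy(demonstrations):
    """Build a policy that copies the push from the most similar demonstration.

    Each demonstration is a (pile_center, push_point) pair of (x, y) tuples.
    """
    def policy(pile_center):
        nearest = min(demonstrations, key=lambda demo: dist(demo[0], pile_center))
        return nearest[1]
    return policy


# Three invented demonstrations; the article describes about 50 in practice.
demonstrations = [
    ((0.2, 0.2), (0.1, 0.2)),
    ((0.8, 0.2), (0.9, 0.2)),
    ((0.5, 0.8), (0.5, 0.9)),
]

policy = learn_push_policy(demonstrations)
print(policy((0.75, 0.25)))  # prints (0.9, 0.2)
```

With more demonstrations, a rule like this covers more situations, which is one way to picture how repeating a motion 50 times lets a system generalize from what a person showed it.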

This process, called learning from demonstration, isn’t dumbed-down AI. It’s training a robot using the most sophisticated motion controller out there—a human brain. It also recruits a wider swath of people than typical AI jobs. “Why I’m drawn to this approach is it has this prospect of non-experts, not roboticists, being able to teach a robot a new manipulation task,” says Laskey. “For every time you want to make a robot learn to golf, someone else might want to make it learn to pour coffee.”

Or cook an omelet. Or plant a tree. While the opening up of AI won’t save every profession facing extinction in the information age—it won’t stop autonomous vehicles from replacing flesh-and-blood truck and taxi drivers, for instance—it does afford possibility. Business managers, journalists, lawyers, and everyone else can keep doing what they do best, just with new tools.

Fast Company
