A New York City lawmaker is taking on companies that mine your face

Ritchie Torres was appalled. In March, the New York City Council Member learned from The New York Times that Madison Square Garden, the iconic sports and entertainment arena that is home to the Knicks, the Rangers, and an endless parade of arena performers, has used facial recognition software to scan spectators' faces as they enter. Details remain scant, but the technology is meant to identify “problem” attendees by matching their faces against those stored in a database. Using the largely unregulated technology, without telling the public what was being done with their faces, “radically challenges privacy as we know it,” says Torres, who represents the Bronx.

In October, he introduced a bill that aims to bring a modicum of transparency to businesses’ use of facial recognition and other biometric technologies, such as iris and fingerprint scanning, by requiring businesses to disclose that use conspicuously at their entrances.

“We’re increasingly living in a marketplace where companies are collecting vast quantities of personal data without the public’s consent or knowledge,” he says. “In a free and open society, I have the right to know whether a company is collecting my personal data, why a company is collecting my data, and whether a company will retain my data and for what purpose.”

Under the bill, companies would have to disclose, with signs at every entrance, whether and how they collect, retain, convert, and store their customers’ biometric data. The bill would also require a company to disclose four pieces of information online: how much information it retains and stores; what kind of information it collects; its privacy policy; and, most critically for Torres, any sharing of that information with third parties.

To Torres, facial recognition is the most intrusive yet least regulated form of a fast-growing swath of biometric technology he characterizes as being “shrouded in secrecy.”

“It’s even more intrusive than fingerprinting, [which] is a tangible intrusion into my privacy,” Torres explains. “This facial recognition is an invisible and intangible intrusion–that’s what makes it more pernicious. Even more than that, you’re building a database of private information that can then be commercialized.”

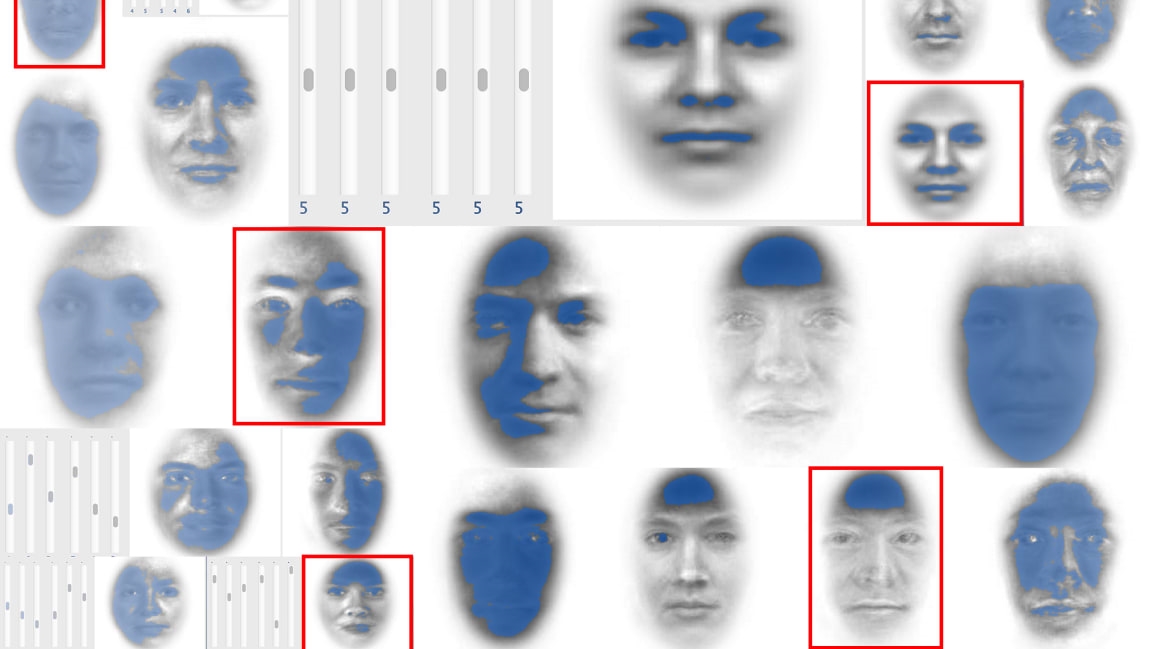

He also cites the potential for false positives in face recognition systems. (In a recent set of tests by London’s Metropolitan Police, for instance, covert face recognition matches made in public places had a 100% failure rate.) Poorly trained algorithms tend to perform worse on non-white faces, an especially pressing concern for residents of the Bronx, where, according to census data, there is a more diverse array of faces than anywhere else in the country.

But wherever you live, basic disclosure is “utterly uncontroversial,” Torres says. “If you believe that businesses should be able to collect your personal data without your knowledge or consent, then we disagree on first principles.”

In the absence of federal regulations, the technology is spreading across public spaces: Airports, casinos, and retailers are buying software that can run on real-time feeds from ever-improving CCTV cameras. The software typically comes with access to databases of faces of suspicious individuals, though it’s unclear how those databases are assembled and how to remove faces from them. (Federal law enforcement agencies operate a database said to contain the faces of half of the U.S. population.)

The Department of Homeland Security has been rolling out face recognition systems at airport gates across the country as part of a post-9/11 biometric system. Amazon has lately courted controversy with Rekognition, its face-scanning software, which is used by law enforcement agencies like ICE as well as by many of its other cloud customers. Facebook is well known for its facial recognition algorithm, which allows the company to identify users and target ads at them accordingly. Last month, Microsoft’s president called for rules around face recognition, while Google said it would not sell facial recognition services for the time being, given the ongoing privacy and ethical concerns.

In March, the same month that the Times described MSG’s technology, the ACLU asked 20 of America’s top retailers whether they used facial recognition on their customers. All but two of the companies refused to confirm or deny. Ahold Delhaize, the company that owns the supermarket chains Food Lion, Stop & Shop, Giant, and Hannaford, said it did not use face recognition, while the home-improvement retailer Lowe’s said it does use the technology to identify shoplifters.

Torres said he doesn’t know which other New York City businesses are currently using facial recognition technology on customers, or how. And therein lies the problem. “Since there is no regulation, since there is not even the most basic standards of transparency, we don’t know how widespread the use of facial recognition technology is in New York City or elsewhere in the country–we just don’t know,” he says. “Businesses are under no obligation to report on the use of facial recognition technology. I think that is part of the purpose of the bill: to shed light on a world of biometric technology that has historically been hidden from public view.”

While face recognition at places like MSG has been touted as a security measure, the data could also easily be used for other purposes. For several years, advertisers have used facial recognition in electronic billboard campaigns, and the company Bidooh is trying to dominate this market by creating an out-of-home ad network that will target ads at people through facial recognition-equipped electronic billboards. Founded by Abdul Alim, who says he was inspired by the sci-fi film Minority Report, Bidooh aims to be the Google AdWords and Facebook Ads of the out-of-home advertising market.

“Madison Square Garden assures me that the use of facial recognition technology is limited to security,” Torres says. “But here is the concern I have with companies generally: What could begin as a security measure could easily evolve into something else. Once data is retained, it can be readily repurposed for profit. I’m concerned about the commercialization of private data.”

In Illinois, face-scanning companies must abide by the U.S.’s most extensive biometric privacy law. Companies must obtain express, opt-in consent from consumers before collecting scans of their faces or disclosing that data to third parties, and must destroy the data once its original purpose has been satisfied or within three years of the person’s last interaction with the company, whichever comes first. (Texas and Washington also restrict face recognition.) The Illinois law also empowers people to enforce their data rights in court, and since 2015, Google, Snapchat, Facebook, and others have faced lawsuits for allegedly violating the law. But late last month a judge in Chicago dismissed the suit against Google, saying the plaintiff, whose face was unwittingly captured in 11 photos taken by a Google Photos user, didn’t suffer “concrete injuries.”

Meanwhile, over the protests of privacy experts, a recently proposed amendment to the Chicago municipal code would permit businesses to use face surveillance systems in their stores and venues. Like Torres’s bill, the amendment would simply require businesses to post signs giving patrons notice about some of their surveillance practices, but it would circumvent the stronger protections provided by the state law.

Torres says he’s “optimistic” his proposal will pass when it comes before a committee hearing, sometime within the next few months. But there are still headwinds, he says. Some stakeholders worry that the law could open the floodgates to lawsuits. And a few advocates felt his legislation could go further in regulating facial recognition and other biometric technologies.

For example, the bill doesn’t address government uses of the technology, including by the NYPD, which came under fire last year when The Intercept described trials of an ethnicity-detecting face recognition system. But another New York City law, authored by former Council Member James Vacca, recently created the Automated Decision Systems Task Force, which will examine the algorithms that city agencies use in making policy. The task force, announced in May by Mayor Bill de Blasio, will issue a report in December 2019 recommending procedures for “reviewing and assessing City algorithmic tools to ensure equity and opportunity.”

“I see the bill as a floor not a ceiling,” Torres says of his proposal. “It seems to me disclosure is a natural starting point, and if we come to discover abuses in the use of facial recognition or facial screening technology, then we can adopt regulations aimed at preventing those abuses.”
