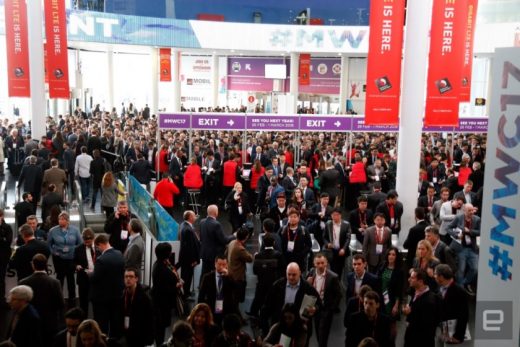

AI continued its world domination at Mobile World Congress

Silicon Valley investor and web pioneer Marc Andreessen said in 2011 that “software is eating the world.” The explosion of app ecosystems seems to prove his point, but things have changed dramatically even since then. These days, it might be more accurate to say that “AI is fueling the software that’s eating the world,” but I’ve never been very quotable. In any case, it’s impossible to ignore the normalization of artificial intelligence at this year’s Mobile World Congress — even if a resurrected 17-year-old phone did end up stealing the show.

When it comes to the intersection of smartphones and AI, Motorola had the most surprising news at the show. In case you missed it, Motorola is working with Amazon (and Harman Kardon, most likely) to build a Moto Mod that will make use of Alexa. Even to me, someone who cooled on the Mods concept after an initial wave of interesting accessories slowed to a trickle, this seems like a slam dunk. Better still, Motorola product chief Dan Dery described what the company ultimately wanted to achieve: a way to get assistants like Alexa to integrate more closely with the personal data we keep on our smartphones.

In his mind, for instance, it would be ideal to ask an AI to make a reservation at a restaurant mentioned in an email a day earlier. With Alexa set to be a core component of many Moto phones going forward, here’s hoping Dery and the team find a way to break down the walls between AI assistants and the information that could make them truly useful. Huawei made headlines earlier this year when it committed to putting Alexa on the Mate 9, but we’ll soon see whether the company’s integration goes as deep.

Speaking of Alexa, it’s about to get some new competition in Asia. Line Inc., maker of the insanely popular messaging app of the same name, is building an assistant named Clova for smartphones and connected speakers. It will apparently be able to handle complex questions in many forms. Development will initially focus on a first-party app, but Clova should find its way into many others, giving users opportunities to talk to different services that share some underlying tech.

LG got in on the AI assistant craze too, thanks to a close working relationship with Google. The LG V20 was the very first Nougat smartphone to be announced … until Google stole the spotlight with its own Nougat-powered Pixel line. And the G6 was the first non-Pixel phone to come with Google’s Assistant, a distinction that lasted for maybe a half-hour before Google said the assistant would roll out to smartphones running Android 6.0 and up. The utility is undeniable, and so far, Google Assistant on the G6 has been almost as seamless as the experience on a Pixel.

As a result, flagships like Sony’s newly announced XZ Premium will likely ship with Assistant up and running as well, giving us Android fans an easier way to get things done via speech. It’s worth pointing out that other flagship smartphones that weren’t announced at Mobile World Congress either do or will rely on some kind of AI assistant to keep users pleased and productive. HTC’s U Ultra has a second screen where suggestions and notifications generated by the HTC Companion will pop up, though the Companion isn’t available on versions of the Ultra already floating around. And then there’s Samsung’s Galaxy S8, which is expected to come with an assistant named Bixby when it’s officially unveiled in New York later this month.

While it’s easy to think of “artificial intelligence” merely as software entities that can interact with us intelligently, machine-learning algorithms also fall under that umbrella. Their work might be less immediately noticeable, but companies are banking on algorithms’ ability to make sense of data in ways humans can’t, and to improve functionality as a result.

Take Huawei’s P10, for instance. Like the flagship Mate 9 before it, the P10 benefits from a set of algorithms meant to improve performance over time by figuring out the order in which you like to do things and allocating resources accordingly. With its updated EMUI 5.1 software, the P10 is supposed to be better at managing resources like memory, both at boot and during use, all based on user habits. The end goal is to make phones that actually get faster over time, though it will take a while to see any real changes. (You also might never notice a performance improvement, since “performance” is subjective anyway.)

Even Netflix showed up at Mobile World Congress to talk about machine learning. The company is well aware that its sustained growth and relevance depend on improving the mobile-video experience. In the coming months, expect to see better-quality video that uses less network bandwidth, all thanks to algorithms that try to quantify what it means for a video to “look good.” Combine those algorithms with a new encoding scheme that compresses individual scenes in a movie or TV episode differently based on what’s happening in them, and you have a highly complex fix your eyes and wallet will thank you for.

And, since MWC is just the right kind of absurd, we got an up-close look at a stunning autonomous race car called (what else?) RoboCar. Nestled within its sci-fi-inspired body are components that would’ve seemed like science fiction a few decades ago: There’s a complex cluster of radar, LIDAR, ultrasonic and speed sensors, all feeding data to an NVIDIA brain that uses algorithms to interpret it on the fly.

That these developments spanned the realms of smartphones, media and cars in a single, formerly focused trade show speaks to how big a deal machine learning and artificial intelligence have become. There’s no going back now. All we can do is watch as companies make better use of the data offered to them, and hold those companies accountable when they inevitably screw up.

