AI could read your handwriting to figure out your nationality

Using a machine learning algorithm, researchers can break down a person's handwritten English text to determine whether the writer comes from one of five countries: Malaysia, Iran, China, India, or Bangladesh.

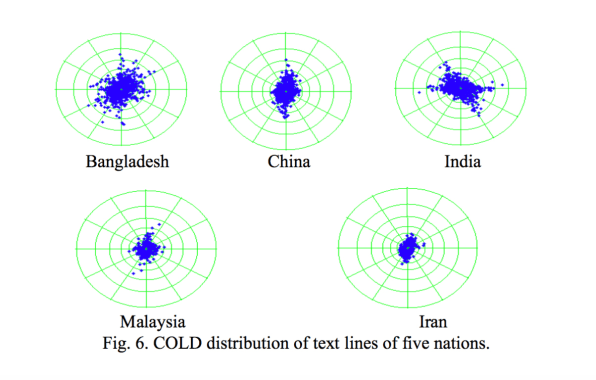

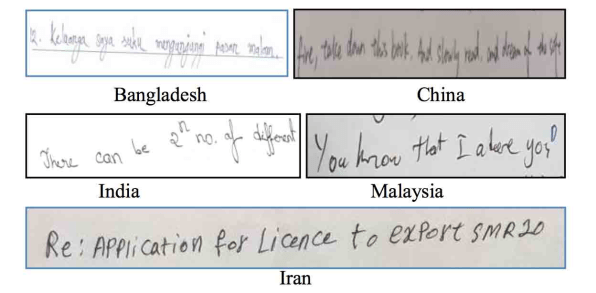

The researchers built a dataset from 100 writers in those countries, who produced 500 lines of handwritten English in total. A tool called Cloud of Line Distribution, or COLD, then breaks those lines down into individual letters and measures how straight or curved the strokes are. The algorithm identified the writers' nationality more accurately than the existing method for the same task, more than doubling the number of correct predictions for some countries.
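
As a rough illustration of the kind of feature a "distribution of lines" might capture, here is a simplified sketch. It assumes each stroke has already been reduced to an ordered list of (x, y) points; the function name `line_distribution_histogram` and its parameters (`k`, the bin counts, `max_length`) are hypothetical choices for this example, not the researchers' implementation. It joins points a fixed number of steps apart into line segments and counts those segments by length and orientation in a coarse polar grid, so that strokes made of long straight runs and strokes made of short, constantly turning pieces produce different histograms.

```python
import math


def line_distribution_histogram(points, k=1, n_angle_bins=8, n_length_bins=4, max_length=64.0):
    """Simplified, hypothetical COLD-style feature (illustration only).

    Joins each point to the point k steps further along the stroke,
    records the resulting segment's length and orientation, and counts
    segments in a coarse (log-length x angle) grid. Returns a flat,
    normalized histogram of n_length_bins * n_angle_bins values.
    """
    hist = [0.0] * (n_angle_bins * n_length_bins)
    log_max = math.log1p(max_length)
    for i in range(len(points) - k):
        (x0, y0), (x1, y1) = points[i], points[i + k]
        dx, dy = x1 - x0, y1 - y0
        length = math.hypot(dx, dy)
        if length == 0:
            continue  # skip repeated points
        # Undirected orientation in [0, pi): the pen direction does not matter.
        angle = math.atan2(dy, dx) % math.pi
        a_bin = min(int(angle / math.pi * n_angle_bins), n_angle_bins - 1)
        # Log-scaled length bin; lengths beyond max_length land in the last bin.
        scaled = math.log1p(min(length, max_length)) / log_max
        l_bin = min(int(scaled * n_length_bins), n_length_bins - 1)
        hist[l_bin * n_angle_bins + a_bin] += 1
    total = sum(hist)
    return [h / total for h in hist] if total else hist
```

A straight horizontal stroke such as `[(0, 0), (1, 0), (2, 0), (3, 0)]` puts all of its weight into a single bin, while a curved stroke spreads across several orientation bins. In a full system, a classifier would be trained on histograms like these; that step is omitted here.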

The algorithm is doing what machine learning does best: picking up on patterns. For example, when native Chinese writers use the Roman alphabet, they tend to write letters with straighter lines, since Chinese characters are usually built from combinations of straight strokes. On the other hand (pun intended), writers from India and Bangladesh tend to have curvier handwriting, because most of their native scripts are cursive, with rounder shapes.
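
To make "straighter" versus "curvier" concrete, one simple measure (an assumption for illustration, not a metric named by the researchers) is the ratio of a stroke's end-to-end distance to the length of the path the pen actually traced. The hypothetical `straightness` function below returns 1.0 for a perfectly straight stroke and smaller values for curved ones.

```python
import math


def straightness(points):
    """Hypothetical straightness score for one stroke (illustration only).

    Divides the straight-line distance between the first and last points
    by the total length of the traced path. A straight stroke scores 1.0;
    the more the stroke bends, the lower the score.
    """
    path = sum(math.dist(p, q) for p, q in zip(points, points[1:]))
    if path == 0:
        return 1.0  # a single point or a stroke that never moves
    return math.dist(points[0], points[-1]) / path


# A half circle of radius 1, traced in 100 small steps.
half_circle = [(math.cos(math.pi * i / 100), math.sin(math.pi * i / 100)) for i in range(101)]
```

A straight stroke like `[(0, 0), (5, 0), (10, 0)]` scores exactly 1.0, while `half_circle` scores close to 2/π, or about 0.64. Averaged over many letters, a score like this would tend to separate the straighter and curvier writing styles described above.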

Reading handwriting was one of the first tasks tackled by computer vision researchers. Previous research has tried to detect a writer's emotions, gender, and age, but otherwise little work has tried to tease additional information out of handwriting, possibly because no one has found a way to monetize it.

The researchers, who hail from India, China, and Malaysia, suggest the technique could be useful in criminal investigations. Police are increasingly turning to biometrics to solve crimes, and extracting identifying information from handwriting could supplement other technologies such as face recognition software.

But they do not address the privacy or civil rights concerns that applications of this and similar technologies could raise. Mistakes that amplify existing biases in the training data could, for instance, implicate the wrong person in a criminal investigation. Or companies could use handwriting analysis software to discriminate against potential customers based on traits like their nationality or even their intelligence.

Before law enforcement could even think about using it, however, the researchers would need to expand their small dataset to show that COLD is more than just an interesting project.
