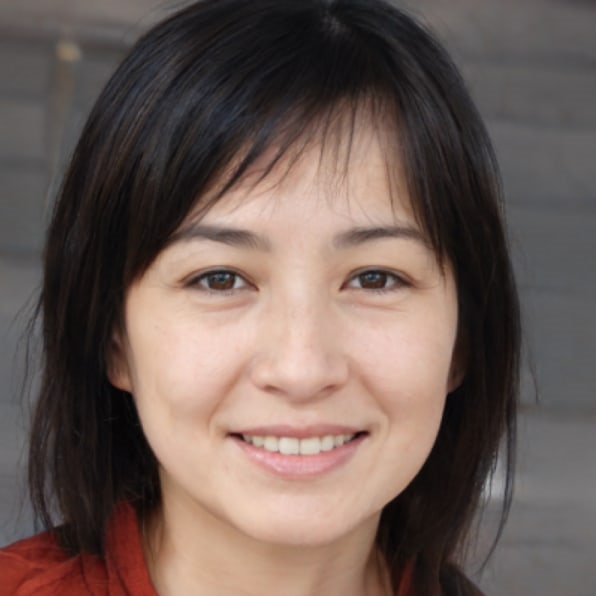

AI-generated faces have crossed the uncanny valley and are now more trustworthy than real ones

You might be confident in your ability to tell a real face from one created using artificial intelligence. But a new study has found that your chance of choosing accurately would be slightly better if you just flipped a coin—and you are more likely to trust the fake face over the real one.

Published in the Proceedings of the National Academy of Sciences, the study was conducted by Hany Farid, a professor at the University of California, Berkeley, and Sophie J. Nightingale, a lecturer at Lancaster University in England.

Farid has been studying synthetic images, and how well people can tell them apart from real ones, for years. He initially focused on the rise of computer-generated imagery (CGI). But progress has accelerated in recent years as generative adversarial networks (GANs), a class of deep-learning neural networks, have become far more capable of producing truly realistic synthetic images. “If you look at the rate of improvement of deep fakes and [GANs], it’s an order of magnitude faster than CGI,” he says. “We would argue that we are through the uncanny valley for still faces.”

The problems with such realistic fakes are manifold. “Fraudulent online profiles are a good example. Fraudulent passport photos. Still photos have some nefarious usage,” Farid says. “But where things are going to get really gnarly is with videos and audio.”

Given the speed of these improvements, Farid and Nightingale wanted to find out whether faces created with artificial intelligence could convince viewers of their authenticity. Their study included three experiments aimed at understanding whether people can distinguish a real face from a synthetic one created by Nvidia’s StyleGAN2. After assembling 800 images of real and synthetic faces, Farid and Nightingale asked participants to view a selection of them and classify each one as real or fake. Participants were correct less than half the time, with an average accuracy of 48.2%.

A second experiment showed that even giving participants tips on spotting AI-generated faces, and providing feedback as they made their decisions, did not dramatically improve their ability to tell the two apart. Participants identified which face was real and which was fake with 59% accuracy, but their performance did not improve over the course of the experiment. “Even with feedback, even with training trying to make them better, they did slightly better than chance, but they’re still struggling,” Farid says. “It’s not like they got better and better—basically it helps a little bit, and then it plateaus.”

The difficulty people had spotting faces created by artificial intelligence didn’t particularly surprise Farid and Nightingale. They didn’t anticipate, however, that when participants were asked to rate a set of real and fake faces based on their perceived trustworthiness, people would find synthetically generated faces 7.7% more trustworthy than real ones—a small but statistically significant difference.

“We were really surprised by this result because our motivation was to find an indirect route to improve performance, and we thought trust would be that—with real faces eliciting that more trustworthy feeling,” Nightingale says.

Farid noted that in order to create more controlled experiments, he and Nightingale had worked to make provenance the only substantial difference between the real and fake faces. For every synthetic image, they used a mathematical model to find a similar one, in terms of expression and ethnicity, from databases of real faces. For every synthetic photo of a young Black woman, for example, there was a real counterpart.

Although the images GANs can convincingly create are currently limited to passport-style photos, Nightingale says such deceptions already pose a threat in settings ranging from dating scams to social media.

“In terms of online romance scams, these images would be perfect,” she says. “[For] things like Twitter disinformation attacks, rather than having a default egg image, you just take one of these images. People trust it, and if you trust something, you’re probably more likely to share it. So you see how these types of images can already cause chaos.”

So how do we protect against people using synthetic images for nefarious purposes? Farid is a champion of an approach called ‘controlled capture,’ which is being built out by companies such as Truepic and by industry groups such as the Coalition for Content Provenance and Authenticity. The technology records metadata, such as time and location, for any photo taken within an app that has a built-in camera function.

“I think the only solution is to authenticate at the point of recording, using a controlled capture-type of technology,” he says. “And then, anything that has that, good; anything that doesn’t, buyer beware. I think this [solution] is really going to start to get some traction, and my hope is in the coming years, we start taking trust and security more seriously online.”

Beyond synthetic still images, the study comes as the wider world of synthetic media is growing. Synthesia, a London-based company, closed a $50 million Series B round in December for AI avatars used in corporate communications, and Metaphysic, also based in London and the company behind viral deepfakes of Tom Cruise, raised $7.5 million earlier this year. As these technologies continue to improve and expand what AI can do, Nightingale says researchers and companies will have to think seriously about the ethics involved.

“If the risks are greater than the benefits of some new technology, should we really be doing it at all?” she asks. “First of all, should we be developing it? And second, should we be uploading it to something like GitHub, where anyone can just get their hands on it? . . . As we see, once it’s out there, we can’t just take it back again because people have downloaded it, and it’s too late.”

Answers

1. Fake

2. Real

3. Real

4. Fake
