Given LinkedIn is overflowing with marketers, investors, and tech bros shrieking excitedly about ChatGPT like fans at a K-pop concert, you’d be excused for thinking that OpenAI’s latest generative artificial intelligence is on its way to curing cancer, figuring out the meaning of life, or shedding light on why it’s impossible to lick your own elbow (go try it, I’ll wait). Microsoft is so excited about the tech that it shelled out $10 billion to release a ChatGPT-powered sociopath masquerading as a search engine.

It feels like if your business, your app, or your air fryer doesn’t use generative AI, you are a nobody. Which is why it is so surprising to see how the número uno tech company on the planet is quietly sitting in a corner, thumbing its iPhone, and asking Siri to tell another knock-knock joke. Until now.

According to a Wall Street Journal exclusive, the mountain hath spoken, and it’s saying it’s time to slow down the AI arms race, at least a little bit. “Apple Blocks Update of ChatGPT-Powered App, as Concerns Grow Over AI’s Potential Harm,” reads the headline.

Here’s what happened: When a company called Blix Inc sent the latest version of its iOS mail app for review, Apple replied with “nah, you can’t.” Apparently, the update can generate text using ChatGPT, and for Apple this is a cause for “concern,” given the app did not include proper content filtering that could prevent problematic text output. Blix’s co-founder Ben Volach told the WSJ that the Cupertino company believes this new feature can show inappropriate content, and therefore it should be released with a 17+ age rating in the App Store. Seeing how well Microsoft’s Sydney experiment is going, it makes sense to me, but Volach thinks otherwise. The move will unfairly limit the app’s reach, he says, which will ultimately damage Blix’s business.

The fact that Apple is the first company to pump the brakes on generative AI feels quite refreshing. It’s refreshing because, yes, this technology is undoubtedly awesome and full of more creative potential than a genetic chimera of Warhol, Kubrick, and Bowie high on LSD, but it also contains a huge potential for destruction. Refreshing because we need to stop and take a breather to collectively think about how to regulate it. And refreshing because history has taught us that if you leave world-changing technology to the Valley bros, there’s a damn good chance it will go wrong (see our current social media experiment). Someone with power needed to do something to slow down our blind march towards an AI future, even if it’s just slapping a 17+ limit onto an app.

But are those Apple’s motivations or am I just projecting my wishes? I want to believe Apple can clearly see where all this could lead. After all, the company has a long history of blocking things that it considers bad. Steve Jobs famously told a reporter that he wanted to offer a world “free of porn” in his products. And Tim Cook, defending the company’s App Store monopoly, recently argued that the store would become “a toxic mess” without Apple’s control.

I asked Apple why it blocked the ChatGPT-powered email app, and its prompt non-answer was that a) the company is investigating the developer’s complaint; and b) all developers have the option to challenge a rejection via the App Review Board appeals process. It did not reply to my other questions about ethics and other potential conflicts. Volach’s email exchange, reviewed by the WSJ, just stated that the app needed to be rated 17+ to get published.

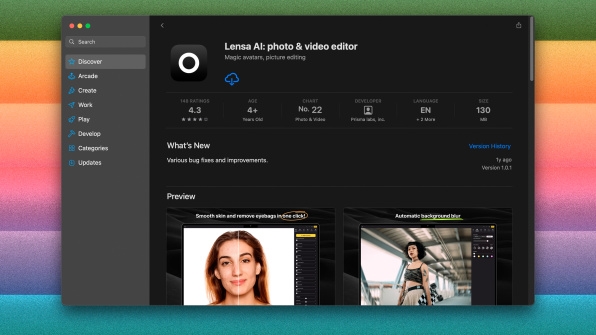

The motivations get muddier if you dig a bit deeper and notice that apps like Lensa, which also uses generative AI, have a 4+ age rating in the App Store. You know Lensa. The app that took the internet by storm by creating AI-generated portraits and raking in tens of millions of dollars in record time for its developer and for Apple. The app that is powered by Stable Diffusion, whose maker, Stability AI, has been sued by Getty Images for allegedly using copyrighted images without permission? Yes, that one, the very same app that can accidentally produce nude pictures of its female users. Intimate imagery and a 4+ rating. Weird. (When asked about the Lensa rating incongruence for a second time, Apple didn’t reply. I will update this post if the company responds.)

So maybe, just maybe, Apple is not so concerned about offering a fully toxic-free environment. Maybe Apple is just like Microsoft and Google and the rest of the tech companies. As long as the millions pour in, all is fine.

I honestly can’t yet tell what the answer is. It will be interesting to see where Apple goes with AI beyond crash detection and guessing when you are going to have a heart attack. Where do its ethics truly stand? I’m hoping the company does something meaningful about it beyond stamping a 17+ rating on a mail app most people hadn’t heard of before now.
