Autodesk’s Lego model-building robot is the future of manufacturing

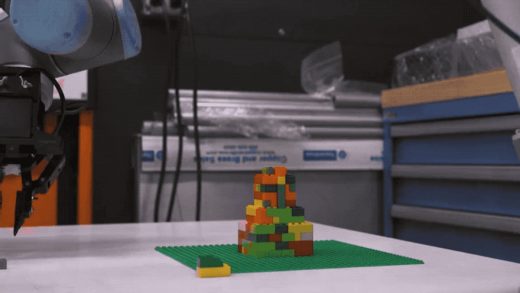

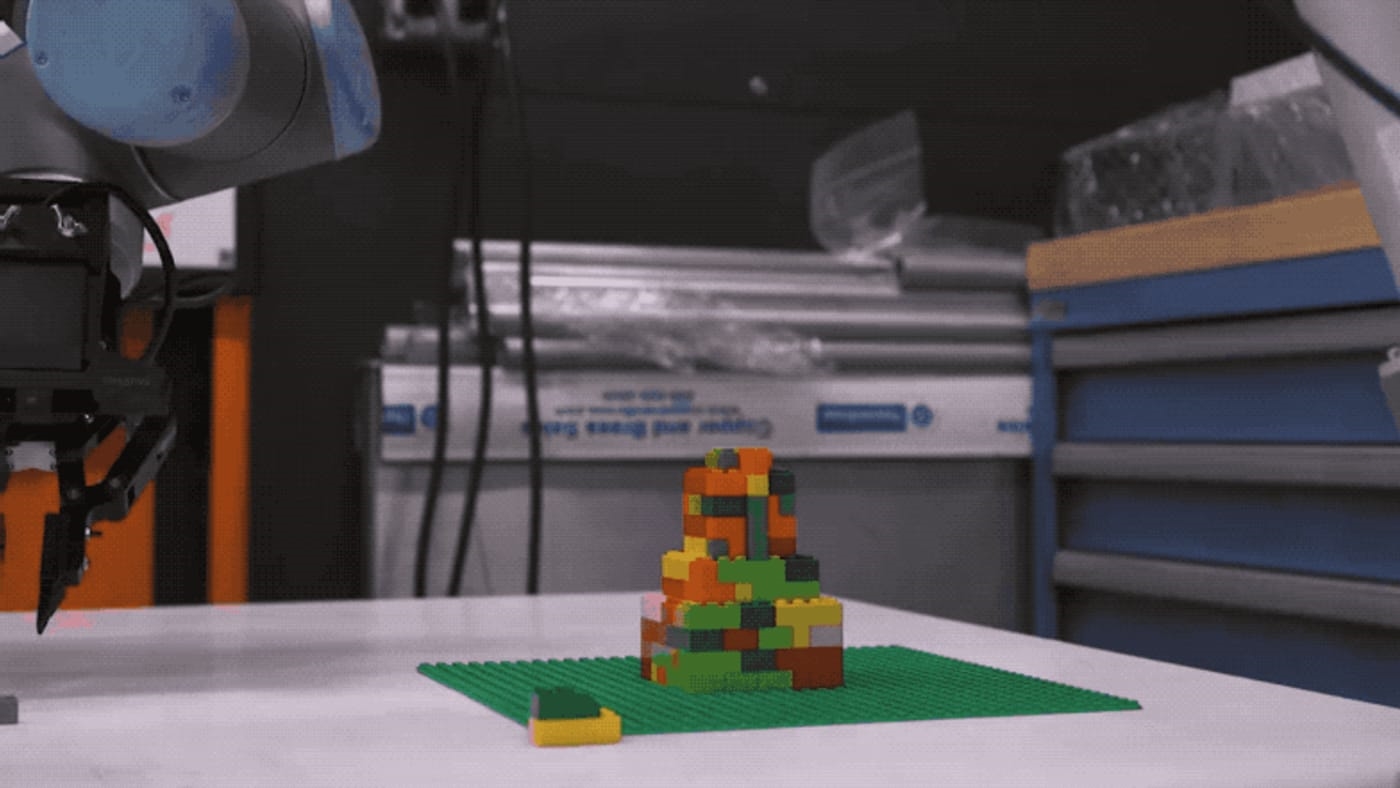

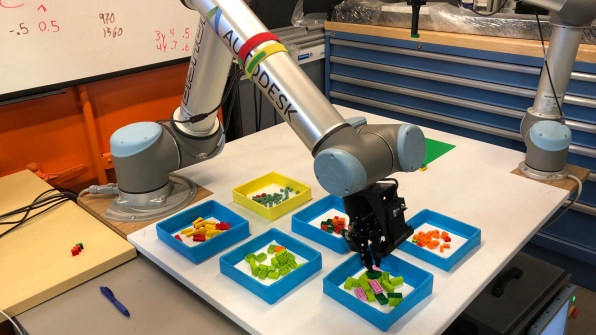

Sitting at opposite corners of a small white table in a bright, high-ceilinged Autodesk lab inside a maritime building next to San Francisco Bay, two Universal Robots UR10 robotic arms are whirring away, working together to pick Lego bricks one at a time out of six little square bins and carefully snap them into place on a green pad.

To a casual observer, this might not look like the precursor to a major shift in industrial manufacturing and construction, but to Autodesk, a $30 billion company and one of the largest makers of design software in the world, it could very much be just that.

Specifically, the UR10s (the main actors in a project once called LegoBot but now known, for legal reasons, as BrickBot) are autonomously working to build a rudimentary Lego model, a foot or so tall, resembling San Francisco’s Transamerica Pyramid. Programmed with a 3D model of the skyscraper, the two robots are plucking Legos out of the bins one at a time, figuring out how to place them on the green pad, and then slowly but surely clicking them accurately into position.

Having written at length in the past about Autodesk’s futuristic Applied Research Lab, its Robotics Lab, and the work the company’s Office of the CTO (OCTO) does to think about products and services it might want to offer years from now, I’d been invited in January 2017 to come take a look at LegoBot, which at that point had been underway for about four months. The goal, I was told then, was to have the robots successfully building Lego models at Autodesk University, the company’s developer conference, by last November. Within three years or so after that, it might be ready for industry.

Suffice it to say, the project has moved slower than planned, in part because it had only a single full-time scientist working on it, and in part because some of the problems Autodesk was trying to solve were just damned hard.

But now, says Autodesk’s head of machine intelligence Mike Haley, the rechristened BrickBot is finally far enough along that the company is ready to talk about it publicly, and talk about how the work Haley’s team has done could soon make industrial manufacturing and construction more flexible and efficient than ever before.

“You speak to most of our customers, they’re all dealing with the same thing,” says Haley. “How can we make a broader range of things more quickly, how can we make decisions later in the cycle, change designs, and then . . . can we design a factory so that we don’t have to build [a] perfectly pristine, predictable environment where nothing ever goes wrong because the robots can’t handle it?”

In other words, a Lego model-building robot is the first step toward that future, Autodesk believes. And they’re getting really close.

Dumb robots

The starting point for BrickBot was the idea that the kind of robots used in manufacturing facilities around the world, like the ones used to make cars, are, as Haley puts it, “incredibly dumb.”

Although great at what they do, such robots are programmed to do one job, like welding a specific spot. They’re largely inflexible and generally incapable of adapting to real-world conditions: If the object a robot is supposed to weld is two inches off, the weld will be two inches off as well.

Autodesk wants to give its industrial clients smarter robots–machines that can adapt on the fly to what’s in front of them. “Robots are in no way aware of their higher-order goal,” Haley says. “They have no real sense of what they’re trying to achieve. [BrickBot is] about introducing that sense into the system.”

With BrickBot, Haley and his team, particularly software architect Yotto Koga, set out to demonstrate that it’s possible to design a robotic system that uses artificial intelligence to address this problem. By showing that robots can go from a pile of Legos to a finished model, they believed, they could prove that robots can learn to reason about and handle a great deal of complexity: deciding which brick to pick up from which of several bins to advance the model, how to grasp it correctly, and how to rotate it into the right orientation to place it properly.

To Ken Goldberg, a professor of robotics, automation, and new media at the University of California at Berkeley, Autodesk’s work is both interesting and exciting. As someone who is developing a system, known as Dex-Net, that’s meant to teach robots how to grasp objects of myriad shapes and sizes, Goldberg has a keen understanding of the three major sub-tasks with which Haley’s group is grappling.

The first is grasping components of different shapes and sizes and picking them out of bins, a “non-trivial” problem that exists across most manufacturing sectors.

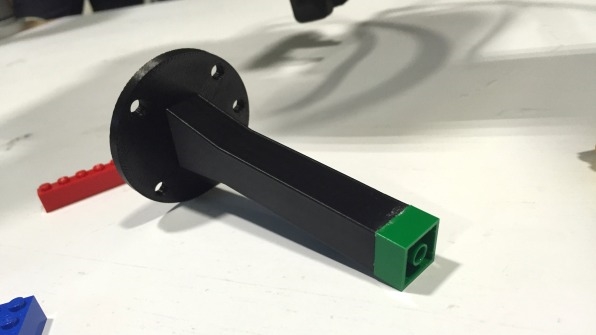

The second is orienting those components properly, “because for any kind of assembly, it’s not enough to pick it up and drop it into a box,” Goldberg explains. “You have to hold it in a specific way [and] that often requires re-grasping, putting it down, and changing the gripper.”

That’s an easy task for people, since our hands offer 22 degrees of freedom in the way we hold things, allowing us to spin a Lego around in our hands. But a robot with a gripper typically has only a single degree of freedom, he says, so it must pick up an object, look at it with a camera, put it down, and then re-grasp it. “That requires an interesting understanding of physics,” Goldberg says. “How do I put it down, and how do I move the gripper to get the new desired orientation between the gripper and the object?”

Finally, while nearly any kid can build a Lego model, asking a robot to do the assembly presents a subtle problem: the bricks must be aligned very precisely to connect, and if they’re off by even a fraction of a millimeter, they may not snap together.

Taken together, those three areas are highly interesting from a research perspective, Goldberg believes, especially when you add in the Lego factor, which can capture the popular imagination.

Indeed, Haley says one of the reasons he chose Legos as the medium for the project is that “I wanted something that speaks to [everyone]. If I do a research project, and I want it to affect people, I want it to be in a language that everybody understands. Ship building excites ship builders, but that’s all. Lego–everyone knows.”

Bin picking

In early June, I walked back into Autodesk’s bay-side robotics lab for the first time in eight months. Haley and Koga were eager to show off the version of BrickBot that’s ready for the world to see.

For the first time, Koga explains, the robots were able to grab the Legos they needed to complete a model from bins, rather than just picking them from a pile on a table, an important analog for how industrial manufacturing works. Getting there had taken some time and was probably one of the main reasons the project had been delayed.

In earlier iterations, he says, the robots applied machine learning to sensor data, starting out with plain camera imagery to pick the right Legos. That didn’t work all that well, so Koga tried binocular, or stereo, vision to add depth information. It worked better, but still wasn’t good enough. Finally, after about six months of experimenting, he added depth sensors that can also read color.

The depth sensors produced a lot of noise, meaning lost data, so the trick turned out to be taking multiple pictures of the bin as the robot reached in to pick a brick, then merging them to reduce the noise. Over time, the system learned to distinguish between colors and, thus, to pick the right Legos out of the bins.
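The article doesn’t say how BrickBot merges its captures. One common way to combine several noisy depth frames of the same scene is a per-pixel median over the readings that weren’t dropped. The sketch below assumes each frame is a grid of depths in millimeters, with 0 marking a lost pixel; the function name `fuse_depth_frames` and that data layout are hypothetical illustrations, not Autodesk’s code.

```python
from statistics import median
from typing import List, Optional

# Hypothetical layout: depth in millimeters, 0.0 marks a dropped (lost) pixel.
Frame = List[List[float]]


def fuse_depth_frames(frames: List[Frame]) -> List[List[Optional[float]]]:
    """Merge several captures of the same scene into one depth map.

    Each output pixel is the median of that pixel's valid (non-zero)
    readings across all frames, or None if every frame dropped it.
    """
    if not frames:
        raise ValueError("need at least one frame")
    rows, cols = len(frames[0]), len(frames[0][0])
    fused: List[List[Optional[float]]] = []
    for r in range(rows):
        row: List[Optional[float]] = []
        for c in range(cols):
            # Ignore dropped pixels so they don't drag the estimate toward 0.
            valid = [f[r][c] for f in frames if f[r][c] > 0.0]
            row.append(median(valid) if valid else None)
        fused.append(row)
    return fused


if __name__ == "__main__":
    # Three hypothetical 2x2 captures taken as the arm reaches into the bin.
    captures = [
        [[500.0, 0.0], [510.0, 498.0]],
        [[502.0, 505.0], [0.0, 499.0]],
        [[501.0, 0.0], [512.0, 640.0]],  # 640.0 is a spurious reading
    ]
    print(fuse_depth_frames(captures))  # [[501.0, 505.0], [511.0, 499.0]]
```

Using the median rather than the mean keeps a single spurious reading, like the 640 mm value above, from skewing the merged depth, while pixels lost in one frame are filled in from the others.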

BrickBot was also learning how to grasp each individual piece, put it down and reposition it, and finally pick it up again and snap it into place. At the end of one of the robot arms is a removable tool for grabbing the Legos and placing them atop other bricks, and that tool stands in for the swappable tooling used in industrial settings, Haley says. “The idea is that if we can make this whole thing adaptive,” he adds, “adding more robots doesn’t add more complexity. The system’s just got to learn how to do it.”

From squares and rectangles to goat-shaped bricks

One part of that is teaching the system to handle Lego pieces of all varieties (squares, rectangles, wheels, miniature people, goat-shaped bricks, and so on), because in the industrial world, components come in all shapes and sizes. In the earliest stages of the project, BrickBot couldn’t do that, but now it seems increasingly unfazed when it encounters something out of the ordinary. “In one of the experiments, we put the goat in [a bin],” Haley says. “The system wasn’t trained on goats, but it was still able to reasonably deal with it 80% of the time.”

To be sure, Haley acknowledges, BrickBot is still a research project. It’s not yet a product, but it’s well on the way. “The way we like to work here,” he says, “we like to come up with future-facing ideas like this concept. And then, we do a certain amount of work independently, inside Autodesk, to get to the point where you’ve vetted out the feasibility of what you’re doing. That’s exactly where we are now. This thing is feasible.”

One next step is to let the system work somewhat outside the bounds of the step-by-step model-building instructions it’s given. The idea is that an intelligent robot would probably choose a different sequence of steps than a human would, because its efficiencies, in things like gripping and grasping, are fundamentally different from ours. In industrial settings, robots have been programmed to assemble things the way humans would, but there may be a better way. Haley’s team considers exploring that a major next step in the project.

But really, BrickBot’s next stage is finding Autodesk customers willing to act as research partners and let an industrial-scale version of the system into their factories.

UC Berkeley’s Goldberg thinks Autodesk’s progress so far is impressive, but he cautions that the path from a Lego model-building robot to industrial use is by no means a straight line. “That’s the key,” Goldberg says generally of this type of research. “It’s very important to be doing these experiments, but we have quite a way to go before we’re able to put these into practice in a cost-effective way. Having a robot learn to do one step in an assembly line, that’s very exciting. Having it doing multiple steps like this, that’s even more exciting. But that’s going to take time.”

Haley doesn’t disagree. But he does think it’s time to start working with customers to move the technology forward. The trick is finding the right customers among the broad spectrum of auto manufacturers, plane makers, construction companies, and everyone in between, and getting the green light to bring it into their factories for a couple of years of experimentation. After all, almost every major company in the world, not to mention countless smaller outfits, uses Autodesk tools.

Some of those companies are the types that seek to be on the bleeding edge of technology, while others want more standard, current-gen robotics technology. Haley’s team is looking to the former group, who see cutting-edge technology as a competitive advantage, for partners.

“They say, ‘We’d love to work with you guys,’” Haley says. “‘We get that it’s not a product, but on the other side, we get to discover something with you guys. If it does turn into something, great, we’re the ones leading it.’”

Fast Company

(56)