Can Using Artificial Intelligence Make Hiring Less Biased?

The resume is on its way out.

“[It’s a] data token that boils you down to a data object,” says Pete Kazanjy, founder of TalentBin, a service that uses social media to find job recruits (now part of Monster). That’s especially true for hard numbers, said Google’s SVP of “people operations” Laszlo Bock in a 2013 New York Times interview. “One of the things we’ve seen from all our data crunching is that GPAs are worthless as a criteria for hiring, and test scores are worthless,” said Bock.

A growing wave of hiring tech firms is ingesting far more information about candidates: surveys, work samples, social media posts, word choice, even facial expressions. Adding artificial intelligence (AI), they promise to assess work skills as well as personality traits like empathy, grit, and prejudice to provide a richer understanding of who the applicant is and whether they will fit. “This is going to explode in the next few years,” says Kristen Hamilton, CEO of Koru, an AI-based assessment company founded in 2013. “We’ve applied this data-oriented approach to every other aspect of our companies and businesses.” I decided to try a bunch of these AI-driven assessments to see what they might reveal about the science, and about me.

Mechanizing An Old Practice

The deep dive into a candidate’s mind isn’t a new idea, says Mark Newman, founder and CEO of HireVue. Founded in 2004, it was one of the pioneers in using AI for hiring. Its specialty is analyzing video interviews for personal attributes including engagement, motivation, and empathy, although it also uses written evaluations. The company analyzes data such as word choice, rate of speech, and even microexpressions (fleeting facial expressions). Like most of the firms I spoke to, HireVue says that it is not yet profitable.

The post-World War II approach to hiring included personality tests such as Myers-Briggs as well as the structured behavioral interview: asking every candidate the same questions to objectively compare them. Another classic deep-dive tool is the work-sample test, in which a candidate performs a mock task from the job, like writing code for a software developer or talking to a (fake) irate caller for a customer-service rep. But these interview tactics are performed by busy and potentially biased humans.

“Structured interviews are much better and subject to less bias than unstructured interviews,” says Newman. “But many hiring managers still inject personal bias into structured interviews due to human nature.” Scoring work samples takes staff like software engineers away from their real jobs, says Kazanjy. But what if tireless machines could replace overtaxed humans? “It’s all the science from the last 50 years being empowered by the technology of today,” says Newman.

How far can automated hiring managers go, assuming they can do the job at all? Kazanjy says they can at least knock out people who lack the skills to fit the position.

Launched in July 2015, Interviewed offers several levels of testing, beginning with basics such as multiple-choice exams on how well someone knows software like Microsoft Excel or Salesforce. Kazanjy believes that software could go even further, such as assessing programmers. “Errors in code samples can be programmatically detected, the same way that spelling and grammatical errors in a written English sample can be programmatically detected,” says Kazanjy. “You couldn’t automate judging between the A+, B+, and B work,” he says, “but maybe you could kick out the C work.”
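Kazanjy’s point about detecting errors programmatically can be illustrated with a minimal sketch. It assumes candidate submissions arrive as Python source strings and checks only whether they parse. The function name `has_syntax_error` and the sample submissions are hypothetical, made up for illustration, and are not something Interviewed is described as using.

```python
def has_syntax_error(source: str) -> bool:
    """Return True if the submission does not parse as Python."""
    try:
        # compile() only parses and compiles the source; it never runs it.
        compile(source, "<candidate>", "exec")
    except SyntaxError:
        return True
    return False


# Hypothetical submissions, invented for this example.
submissions = {
    "candidate_a": "def add(a, b):\n    return a + b\n",
    "candidate_b": "def add(a, b)\n    return a + b\n",  # missing colon
}

# Collect the names of candidates whose code fails to parse.
flagged = [name for name, code in submissions.items() if has_syntax_error(code)]
print(flagged)
```

A check like this can only screen out work that fails outright, which is in line with Kazanjy’s caveat that automation might “kick out the C work” without ranking the rest.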

Finding Red Flags

But companies don’t want candidates with stellar skills if they are racist, sexist, or violent. A company called Fama offers to find these problems through automated searches of the web, including news coverage, blogs, and social networks like Facebook, Google+, Instagram, and Twitter. “Forty-three percent of businesses are using social media to screen job candidates,” says Fama’s CEO and founder Ben Mones, quoting a January 2016 study by the Society for Human Resource Management.

Companies that vet candidates on their own with social media may be breaking laws, such as the Fair Credit Reporting Act (FCRA), a 1970 U.S. statute (amended several times) that gives consumers the right to challenge the accuracy of public data collected about them and used for decisions like granting employment and credit. Fama follows FCRA’s requirements, says Mones, such as informing candidates that it is collecting information, obtaining their consent, and sharing the results so they have a chance to respond.

Fama, founded in January 2015, digs into language and photos that indicate things employers are allowed to consider in hiring (which varies by state): signs of bigotry, violence, profanity, sexual references, alcohol use, and illegal drug use or dealing. It hired dozens of people to read social media posts and figure out how to classify and rate how offensive they were, using those results to train its natural language processing (NLP) artificial intelligence on what to search for.

I asked Fama to run a report on me. It pulled up several of my articles and flagged salty quotes from protesters I had tweeted while covering Occupy Wall Street, plus my own use of the word damn. “We don’t score a candidate,” says Mones. “We provide simply a way to automate that filter, where you can find those needles in the social media haystack.”

“We’re creating these very long histories of behavior over time,” says Pete Kazanjy. “If someone tweeted something racist 3,000 tweets ago, you wouldn’t find it, but a machine could.” This summer, Fama expects to roll out the ability to also flag positive things about candidates, like posts about volunteer work.

Getting A Good Fit

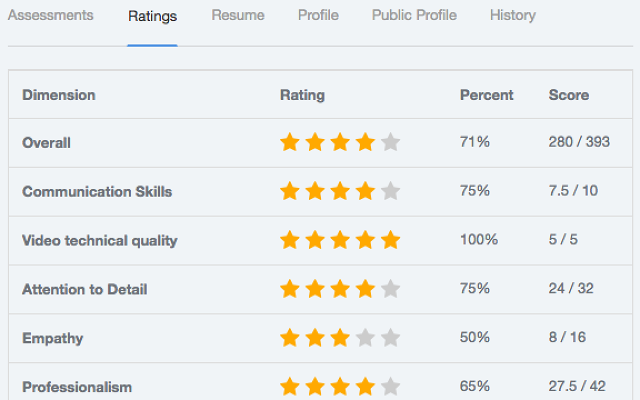

Positive traits are an important part of Interviewed’s tests for customer service jobs, in which candidates field text chats or calls from bots that represent customers. Interviewed’s clients include IBM, Instacart, Lyft, and Upwork, and the company says it’s close to being profitable. Interviewed is beginning to automate the assessment of what cofounder and COO Chris Bakke calls softer skills. It asked hiring managers to look over test transcripts and rate candidates’ qualities like empathy on a 1-5 scale. Interviewed then applied natural language processing and machine learning that looks for patterns in masses of data. “We found that hiring managers and interviewers are four times more likely to perceive and rate a customer support response as empathetic when it contains three or more instances of please, thank you, or some form of an apology, i.e., ‘I’m sorry,’” says Bakke. That data guides what Bakke calls a structured manual review process: A human still makes the call.
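The pattern Bakke describes can be sketched as a simple count of politeness markers. This is only an illustration of the idea, not Interviewed’s model: the pattern list, the function names, and the sample responses are all assumptions, since the company names “please,” “thank you,” and “some form of an apology” but gives no exact word list.

```python
import re

# Hypothetical marker patterns, assumed for this sketch.
POLITENESS_PATTERNS = [
    r"\bplease\b",
    r"\bthank you\b",
    r"\bsorry\b",
    r"\bapolog\w*",  # apologize, apologies, apology
]


def count_politeness_markers(response: str) -> int:
    """Count every occurrence of any marker pattern in the response."""
    text = response.lower()
    return sum(len(re.findall(pattern, text)) for pattern in POLITENESS_PATTERNS)


def meets_marker_threshold(response: str, threshold: int = 3) -> bool:
    """True when the response contains at least `threshold` markers."""
    return count_politeness_markers(response) >= threshold


# Hypothetical support responses, invented for this example.
warm = "I'm sorry about the delay. Please send your order number, and thank you for your patience."
terse = "Your order shipped yesterday."

print(meets_marker_threshold(warm), meets_marker_threshold(terse))
```

Reaching the threshold only means human raters were more likely to call a response empathetic. It is a signal to surface for the reviewer, which fits the manual review process Bakke describes.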

As Interviewed collects data, its assessments are growing more sophisticated, says Bakke, gradually progressing from testing skills toward judging whether candidates are a “culture fit” for an organization. This moves into territory staked by companies like RoundPegg. Its online CultureDNA Profile starts with what looks like a bunch of word magnets on a fridge. From 36 options, users drag words or phrases like “Fairness,” “Being team oriented,” and “High pay for good performance” into columns of their nine most important and nine least important values. “You are going to excel in an environment that is most similar to you,” says Mark Lucier, RoundPegg’s director of accounts. Incorporated in 2009, RoundPegg has attracted big-name clients with its method, such as Experian, ExxonMobil, Razorfish, Xerox, and even HireVue.

RoundPegg works a bit like survey-based dating site OkCupid: Clients determine what their company culture is by administering the test to current employees. Then applicant assessments show how well personalities match up. Spending about five minutes on the test, I learned that I prefer, like more than 95% of the country, a “Cultivation” company culture, which is described as having “focus on potential and providing opportunities for growth, with less importance placed on rules and controls.” Of the three other cultures, I scored 63 for Collaboration, 62 for Competence (“People are expected to be experts in their field—generalists are not typically appreciated”), and 20 for Command (“Roles are clearly defined and systems and policies are in place to ensure that things are done the same way every time”). That seems right for a freelance writer who recently left an office job.
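One way to picture the matching step is to encode each person’s most and least important values as numbers, average the employees’ answers into a company profile, and compare an applicant against it. RoundPegg’s actual scoring is not described here, so everything in this sketch is an assumption: the +1/-1 encoding, the averaging, the function names, and the values “Autonomy” and “Stability.” It also uses two picks per column rather than nine to stay short.

```python
def encode(most_important, least_important):
    """Map chosen values to +1 (most important) or -1 (least important)."""
    scores = {value: 1 for value in most_important}
    scores.update({value: -1 for value in least_important})
    return scores


def company_profile(employee_responses):
    """Average the encoded responses of current employees, value by value."""
    totals = {}
    for response in employee_responses:
        for value, score in response.items():
            totals[value] = totals.get(value, 0) + score
    return {value: total / len(employee_responses) for value, total in totals.items()}


def fit_score(applicant, profile):
    """Mean agreement between applicant and profile, from -1 to 1."""
    raw = sum(score * profile.get(value, 0.0) for value, score in applicant.items())
    return raw / len(applicant)


# Hypothetical employees and applicant, invented for this example.
employees = [
    encode(["Fairness", "Being team oriented"],
           ["High pay for good performance", "Stability"]),
    encode(["Fairness", "Autonomy"],
           ["Stability", "Being team oriented"]),
]
applicant = encode(["Fairness", "Autonomy"],
                   ["Stability", "High pay for good performance"])

profile = company_profile(employees)
print(fit_score(applicant, profile))
```

In this toy version, a value the employees disagree on (here, “Being team oriented”) averages out to zero and stops counting for or against an applicant.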

RoundPegg then helps companies dig deeper with customized interviews to assess candidates’ “risk” of not fitting the company’s values. My assessment showed that I may not jibe with RoundPegg’s priority on rewarding team success over individual success. To suss that out, it recommended asking me for an example of how I had handled working in a team success-oriented environment.

Getting The Right Stuff

Koru goes a step further by assessing not only if someone will fit in at the job, but also if they will be good at it. The company started as a training program for college grads, teaching them to develop seven competencies with buzzy names like Grit, Polish, and Impact. Koru expanded to offer a job applicant test that measures these competencies and gathers a bunch of other data. Koru’s focus remains on young workers. In lieu of extensive job experience, companies can evaluate candidates based on Koru’s personal attribute scores.

Artificial intelligence backs up the test, says Kristen Hamilton, because it’s based on reverse engineering the attributes of the most successful people in different types of jobs and companies that use Koru, including REI, Zillow, Yelp, Airbnb, Facebook, LinkedIn, Reebok, and McKinsey & Company.

“They tell us who performs well at different levels, and we say, okay, let’s look for patterns in this data set,” says Hamilton. She cofounded Koru with Josh Jarrett, who headed up the Next Generation Learning Challenges program for the Bill and Melinda Gates Foundation.

Reverse engineering is a mainstay of HireVue’s philosophy as well. “Across our database, we have tens of millions of interview question responses . . . and every response is rich in information we can learn from,” says Newman. “So as you kind of analyze those pieces, and track the outcomes, that’s where you start building these really highly predictive validated models.” Interviewed is moving in the same direction, says Bakke.

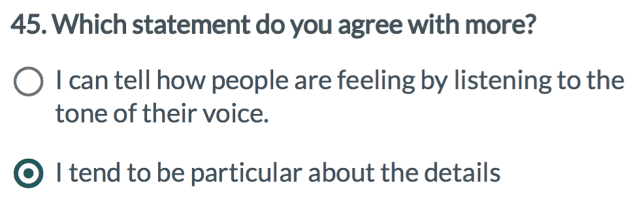

In contrast to the five-minute RoundPegg test, I spent about 30 minutes answering 82 questions and recording a two-minute video for Koru. Some questions were head-scratcher comparisons. Do I agree more with the statement, “I like to see tangible results of my efforts” or “I’m not afraid to ask questions if I don’t understand something”? I also had to handle scenarios, like picking which of four options describes how I would handle my team and boss if we weren’t given enough time or resources to complete a big project.

Of my top three impact skills, I scored very high (8.3 out of 10) on Polish (effective communication) and Curiosity. I ranked high (6.7) on Grit, described thus: “The ability to stick with it when things get hard. When directions are not explicit, hires can make sense of ambiguous situations.”

“The issue that I have as an organizational psychologist is that you can claim to measure all sorts of fancy buzzwords,” says Jay Dorio, director of employee voice and assessment at IBM’s Kenexa Smarter Workforce division. Grit is a great attribute to have, says Dorio, but he calls it “superfluous and funky stuff” that could have an adverse impact—legalese for hiring criteria that appear neutral but could be discriminatory.

Kenexa is also cautious about using artificial intelligence—curious for a division of IBM, which keeps promoting new ways to deploy its AI platform, Watson Analytics. Kenexa uses Watson for some of its offerings, such as analyzing employee survey results, says Dorio. But it doesn’t use AI in evaluating employee assessments.

Koru’s Hamilton was sure to mention adverse impact. “We have conducted multiple research panel studies that affirm our assessment does not have adverse impact on applicants,” explains Hamilton. A data-driven approach, in fact, is likely to be more objective, she says. “What people do typically in an unsophisticated way in some of these hiring processes is say, we like soccer players because they never give up,” says Hamilton. “So let’s get soccer players.”

That’s no joke. Athletic experience has long been a criterion for evaluating candidates for sales jobs, says Pete Kazanjy. It’s believed to indicate high competitiveness, willingness to be coached, and ability to think on their feet. “They may be right, but they’re totally guessing as to whether or not athletes or soccer players or people with a physics class are better employees or better performers,” says Hamilton. “We take the scientific approach and look at any input we want to put in . . . and identify their predictive power.”

Fast Company