Can we machine-learn Google’s machine-learning algorithm?

As Google becomes increasingly sophisticated in its methods for scoring and ranking web pages, it’s more difficult for marketers to keep up with SEO best practices. Columnist Jayson DeMers explores what can be done to keep up in a world where machine learning rules the day.

Google’s rollout of artificial intelligence has many in the search engine optimization (SEO) industry dumbfounded. Optimization tactics that have worked for years are quickly becoming obsolete or changing.

Why is that? And is it possible to find a predictable optimization equation like in the old days? Here’s the inside scoop.

The old days of Google

Google’s pre-machine-learning search engine operated monolithically. That is to say, when changes came, they came wholesale. Large and abrupt movements, sometimes tectonic, were commonplace in the past.

What applied to one industry/search engine result applied to all results. That is not to say that every web page was affected by every algorithmic change; each algorithm affected a specific type of web page. Moz’s algorithm change history page details the long history of Google’s algorithm updates and what types of sites and pages were impacted.

The SEO industry began with people deciphering these algorithm updates and determining which web pages they affected (and how). Businesses rose and fell on the backs of decisions made due to such insights, and those that were able to course-correct fast enough were the winners. Those that couldn’t learned a hard lesson.

These lessons turned into the “rules of the road” for everyone else, since there was always one constant truth: algorithmic penalties were the same for each vertical. If your competitor got killed doing something Google didn’t like, you’d be sure that as long as you didn’t commit the same mistake, you’d be OK. But recent evidence is beginning to show that this SEO idiom no longer holds. Machine learning has made these penalties specific to each keyword environment. SEO professionals no longer have a static set of rules they can play by.

Dr. Pete Meyers, Moz’s Marketing Scientist, recently noted, “Google has come a long way in their journey from a heuristic-based approach to a machine learning approach, but where we’re at in 2016 is still a long way from human language comprehension. To really be effective as SEOs, we still need to understand how this machine thinks, and where it falls short of human behavior. If you want to do truly next-level keyword research, your approach can be more human, but your process should replicate the machine’s understanding as much as possible.”

Moz has put together guides and posts on understanding the latest artificial intelligence in Google’s search engine, and it has launched its newest tool, Keyword Explorer, which addresses these changes.

Google decouples ranking updates

Before I get into explaining how things went off the rails for SEOs, I first have to touch on how technology enabled Google’s search engine to get to its current state.

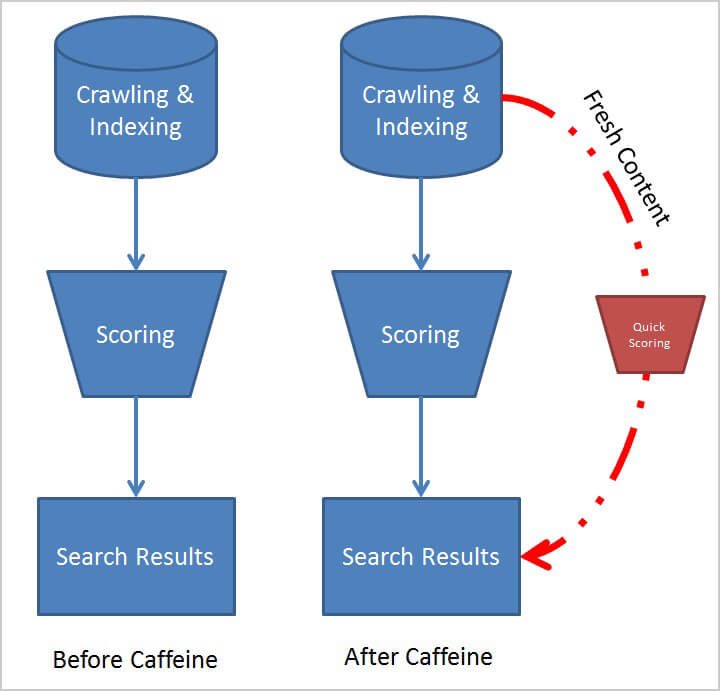

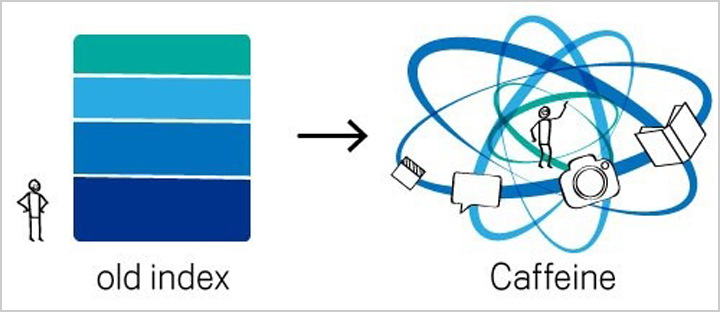

Only recently has Google possessed the computational power to make “real-time” updates a reality. On June 8, 2010, Google announced a revamped indexing system, dubbed “Caffeine,” which allowed it to push updates to its search index more quickly than ever before. Now, a website could publish new or updated content and see the updates almost immediately on Google. But how did this work?

Before the Caffeine update, Google operated like any other search engine. It crawled and indexed its data, then sent that indexed data through a massive web of spam filters and algorithms that determined its eventual ordering on Google’s search engine results pages.

After the Caffeine update, however, select fresh content could go through an abbreviated scoring process (temporarily) and go straight to the search results. Minor things, like an update to a page’s title tag or meta description tag, or a published article for an already “vetted” website, would be candidates for this new process.

Sounds great, right? As it turned out, this created a huge barrier to establishing correlation between what you changed on your website and how that change affected your ranking. Because fresh updates reached the search results before the thorough algorithmic scoring process eventually caught up with them, many SEOs were essentially tricked into believing that certain optimizations had worked, when in fact they hadn’t.

Source: Google Official Blog

This was a precursor to the future Google, which would no longer operate in a serialized fashion. Google’s blog effectively spelled out the new Caffeine paradigm: “[E]very second Caffeine processes hundreds of thousands of pages in parallel.”

From an obfuscation point of view, Caffeine provided broad cover for Google’s core ranking signals. Only a meticulous SEO team, which carefully isolated each and every update, could now decipher which optimizations were responsible for specific ranking changes in this new parallel algorithm environment.

When I reached out to him for comment, Marcus Tober, founder and CTO of Searchmetrics, said, “Google now looks at hundreds of ranking factors. RankBrain uses machine learning to combine many factors into one, which means factors are weighted differently for each query. That means it’s very likely that even Google’s engineers don’t know the exact composition of their highly complex algorithm.”

“With deep learning, it’s developing independently of human intervention. As search evolves, our approach is evolving with Google’s algorithmic changes. We analyze topics, search intention and sales funnel stages because we’re also using deep learning techniques in our platform. We highlight content relevance because Google now prioritizes meeting user intent.”

These isolated testing cycles were now very important in order to determine correlation, because day-to-day changes on Google’s index were not necessarily tied to ranking shifts anymore.
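One way to picture such an isolated testing cycle is the minimal sketch below. It only credits a change with a ranking effect when no other change falls inside a settling window around it, and it compares the rank before the change with the rank after the window rather than with the rank on the following day. The function name `isolated_effect`, the 14-day window and the sample data are all assumptions made for illustration; nothing here reflects a documented Google timescale.

```python
from datetime import date, timedelta
from typing import Dict, List, Optional


def isolated_effect(
    change_day: date,
    all_change_days: List[date],
    ranks: Dict[date, int],
    settle_days: int = 14,  # assumed settling window, not a Google figure
) -> Optional[int]:
    """Return positions gained (positive) or lost (negative) by one change.

    Returns None when another change falls inside the settling window,
    or when a rank reading is missing, because the effect cannot be isolated.
    Assumes at most one logged change per day.
    """
    window_start = change_day - timedelta(days=settle_days)
    window_end = change_day + timedelta(days=settle_days)

    # Any other change inside the window contaminates the test.
    for other in all_change_days:
        if other != change_day and window_start <= other <= window_end:
            return None

    before = ranks.get(change_day - timedelta(days=1))
    after = ranks.get(window_end)  # read the rank only after scoring has settled
    if before is None or after is None:
        return None
    return before - after


# Hypothetical change log and rank history for a single keyword.
CHANGES = [date(2016, 1, 10), date(2016, 3, 1), date(2016, 3, 5)]
RANKS = {
    date(2016, 1, 9): 12,   # the day before the change
    date(2016, 1, 11): 5,   # the day after: a temporary jump
    date(2016, 1, 24): 11,  # after the settling window
}

print(isolated_effect(date(2016, 1, 10), CHANGES, RANKS))  # 1
print(isolated_effect(date(2016, 3, 1), CHANGES, RANKS))   # None
```

In this made-up history, the day-after reading suggests a seven-position gain, while the settled reading shows a gain of one. The second change cannot be evaluated at all, because another change landed four days later.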

The splitting of the atomic algorithm

As if that weren’t enough, in late 2015, Google deployed machine learning within its search engine, which further decoupled ranking changes from the way it had applied them in the past.

As industry veteran John Rampton reported in TechCrunch, the core algorithms within Google now operate independently based on what is being searched for. This means that what works for one keyword might not work for another. This splitting of Google’s search rankings has since caused a tremendous amount of grief within the industry, because conventional tools, which prescribe optimizations indiscriminately across millions of keywords, can no longer operate on this macro level. Now, searcher intent determines which algorithms and ranking factors matter most in each specific environment.
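To make the idea concrete, here is a toy sketch in which the same two pages are scored with a different set of factor weights for each keyword. The factor names, scores, weights and keywords are all invented for illustration, and Google’s actual signals and weightings are not public.

```python
from typing import Dict, List

# Hypothetical factor scores (0 to 1) for two pages. Not real Google signals.
PAGES: Dict[str, Dict[str, float]] = {
    "page_a": {"links": 0.9, "content": 0.4, "freshness": 0.2},
    "page_b": {"links": 0.3, "content": 0.8, "freshness": 0.9},
}

# Hypothetical weightings: each keyword environment stresses different factors.
WEIGHTS: Dict[str, Dict[str, float]] = {
    "buy running shoes": {"links": 0.7, "content": 0.2, "freshness": 0.1},
    "marathon results today": {"links": 0.1, "content": 0.3, "freshness": 0.6},
}


def score(factors: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted sum of a page's factor scores for one keyword."""
    return sum(weight * factors.get(name, 0.0) for name, weight in weights.items())


def rank(keyword: str) -> List[str]:
    """Order the pages best-first using that keyword's own weights."""
    weights = WEIGHTS[keyword]
    return sorted(PAGES, key=lambda page: score(PAGES[page], weights), reverse=True)


print(rank("buy running shoes"))       # ['page_a', 'page_b']
print(rank("marathon results today"))  # ['page_b', 'page_a']
```

Neither page changes between the two calls. Only the weights do, which is why a tactic that wins in one keyword environment can lose in another.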

This keyword-level splitting is not to be confused with the recently announced separate index for mobile vs. desktop, where the indexes will be clearly distinct. There are various tools to help SEOs understand their place within separate indexes. But how do SEOs deal with different ranking algorithms within the same index?

The challenge is to categorize and analyze these algorithmic shifts on a keyword basis. One technology that addresses this, and is getting lots of attention, was invented by Carnegie Mellon alumnus Scott Stouffer. After Google repeatedly attempted to hire him, Stouffer decided instead to co-found an AI-powered enterprise SEO platform called Market Brew, based on a number of patents that were awarded in recent years.

Stouffer explains, “Back in 2006, we realized that eventually machine learning would be deployed within Google’s scoring process. Once that happened, we knew that the algorithmic filters would no longer be a static set of SEO rules. The search engine would be smart enough to adjust itself based on machine learning what worked best for users in the past. So we created Market Brew, which essentially serves to ‘machine learn the machine learner.’”

“Our generic search engine model can train itself to output very similar results to the real thing. We then use these predictive models as a sort of ‘Google Sandbox’ to quickly A/B test various changes to a website, instantly projecting new rankings for the brand’s target search engine.”
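The general idea of fitting a model to observed rankings and then testing changes against it can be sketched in a few lines. The example below is not Market Brew’s method, which the article does not describe. It swaps in a simple pairwise perceptron as a stand-in, and the page names, factor scores and function names are all hypothetical.

```python
from itertools import combinations
from typing import Dict, List

Features = List[float]  # assumed factor order: [links, content, freshness]


def dot(weights: Features, values: Features) -> float:
    return sum(w * v for w, v in zip(weights, values))


def fit_weights(observed: List[str], features: Dict[str, Features],
                epochs: int = 100) -> Features:
    """Pairwise perceptron: nudge the weights until every page that ranks
    higher in the observed results also scores higher in the model."""
    weights = [0.0] * len(features[observed[0]])
    for _ in range(epochs):
        updated = False
        for higher, lower in combinations(observed, 2):
            diff = [a - b for a, b in zip(features[higher], features[lower])]
            if dot(weights, diff) <= 0:  # model disagrees with the observed order
                weights = [w + d for w, d in zip(weights, diff)]
                updated = True
        if not updated:  # every pair is now ordered correctly
            break
    return weights


def project_ranking(weights: Features, features: Dict[str, Features]) -> List[str]:
    """Order pages best-first under the fitted model."""
    return sorted(features, key=lambda page: dot(weights, features[page]), reverse=True)


# Hypothetical observed results (best first) and factor scores for one keyword.
OBSERVED = ["p1", "p2", "p3", "p4"]
FEATURES = {
    "p1": [0.2, 0.9, 0.8],
    "p2": [0.4, 0.7, 0.5],
    "p3": [0.9, 0.3, 0.2],
    "p4": [0.6, 0.1, 0.1],
}

weights = fit_weights(OBSERVED, FEATURES)

# "Sandbox" test: what if p4 improved its content score from 0.1 to 0.6?
changed = dict(FEATURES, p4=[0.6, 0.6, 0.1])
print(project_ranking(weights, changed))  # ['p1', 'p2', 'p4', 'p3']
```

The fitted weights only mean something relative to this toy data. The point is the workflow: learn a model that reproduces the observed order for one keyword, then change a page’s inputs and read off the projected position without waiting for the live index.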

Because Google’s algorithms work differently between keywords, Stouffer says there are no clear delineations anymore. Combinations of keywords and factors such as user intent and prior success and failure determine how Google weights its various core algorithms.

Predicting and classifying algorithmic shifts

Is there a way we, as SEOs, can start to quantitatively understand the algorithmic differences/weightings between keywords? As I mentioned earlier, there are ways to aggregate this information using existing tools. There are also some new tools appearing on the market that enable SEO teams to model specific search engine environments and predict how those environments are shifting algorithmically.

A lot of the answers depend on how competitive and broad your keywords are. For instance, a brand that only focuses on one primary keyword, with many variations of subsequent long-tail keyword phrases, will likely not be affected by this new way of processing search results. Once an SEO team figures things out, they’ve got it figured out.

On the flip side, if a brand has to worry about many different keywords that span various competitors in each environment, then investment in these newer technologies may be warranted. SEO teams need to keep in mind that they can’t simply apply what they’ve learned in one keyword environment to another. Some sort of adaptive analysis must be used.

Summary

Technology is quickly adapting to Google’s new search ranking methodology. There are now tools that can track each algorithmic update, determining which industries and types of websites are affected the most. To combat Google’s new emphasis on artificial intelligence, we’re now seeing the addition of new search engine modeling tools that are attempting to predict exactly which algorithms are changing, so SEOs can adjust strategies and tactics on the fly.

We’re entering a golden age of SEO for engineers and data scientists. As Google’s algorithms continue to get more complex and interwoven, the SEO industry has responded with new high-powered tools to help understand this new SEO world we live in.

[Article on Search Engine Land.]

Some opinions expressed in this article may be those of a guest author and not necessarily Marketing Land.

