Computerized Composers Are Coming To Adobe To Score Your Life’s Soundtrack

The song I just made is admittedly pretty cheesy, but I don’t feel too bad. After all, I didn’t pick up any instruments or plug in any microphones to create it. I just clicked a few buttons in my web browser. Beyond that, I didn’t use my brain much at all, let alone the creative part of it.

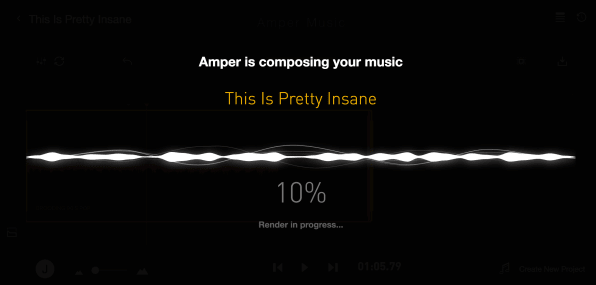

I’m using Amper, a web-based service that uses artificial intelligence to generate music based on a few user-defined parameters like mood, era, and genre. For example, you can churn out “exciting” classic rock or “brooding” ’90s pop. From there, you can swap out different virtual instruments and change parameters like tempo and song length. Today, the startup is announcing an integration with Adobe’s Creative Cloud and the launch of its public API.

“We think that the future of music is going to be created through the collaboration between humans and AI,” says Drew Silverstein, a music composer and Amper’s CEO. “It’s the pinnacle of the next creative evolution.”

The music that Amper creates isn’t going to win any Grammys or show up on a Spotify playlist, but that’s not quite the point of it. The simple, inorganic-sounding songs it creates are more akin to what you’d find in a stock music library, hence its integration with Adobe’s software. Specifically, people using Adobe Premiere Pro can use Amper to generate songs natively inside the popular video-editing software.

Amper is designed to be used by non-musicians without any fuss. The interface may resemble digital recording software, but apart from the algorithms under the hood, the only input comes from the buttons the user clicks to set various parameters for each track. In addition to choosing different styles, moods, and tempos, you can hand-select different instrument sounds, toggling guitars, drums, synthesizers, and other instruments on and off.

“This challenge of creating computer-generated music is not just a data science problem,” says Silverstein. “It’s a creativity problem. It just happens to use data science along the way. In essence, we’re building a creative brain much more than we’re building an algorithmic process.”

Amper’s expansion marks a small milestone on a path toward a future that will both excite technologists and horrify artists. Like it or not, computers are learning to write songs. Amper joins the likes of Google Brain’s Magenta project and IBM’s Watson AI platform in pushing artificial intelligence toward more creative modes of thinking and in teaching computers to create music. The results are not exactly the type of thing you’d zone out to during your morning commute. Still, this music could easily find its way into the background of advertisements, games, and retail environments. By integrating with Adobe’s Creative Cloud and opening up its API, Amper makes this computer-generated music more readily available for precisely these types of projects. And assuming other developers pick up its API, Amper’s functionality will find its way into other use cases and interfaces down the line.

“We said, how do we think about music as composers?” says Silverstein, whose team is made up primarily of professional musicians. “When a director asks us for something, why do we do the things that we do? We want Amper to not just make random decisions, but to understand why it’s being asked to do what it’s doing and to make a decision within that context.”

For songwriters, the notion of computers learning how to compose music is nothing short of cringeworthy. Music consumption is shifting toward streaming platforms, and the economics are still shaking out for artists and songwriters. In that climate, this is just another tech-inspired anxiety to add to the list. These songs don’t yet have the emotional or artistic appeal of human-created music, but technology, especially artificial intelligence, evolves very quickly these days. It’s not hard to imagine machines disrupting the market for low-end stock music compositions, or even mimicking formulaic electronic dance music, in the near future. These machines may not one-up Lennon and McCartney or out-produce Beyoncé’s next record. But it’s only 2017, and the fusion of AI with music-making creativity is apparently just getting started.

Like the teams behind Google Magenta and IBM Watson, Silverstein is quick to position Amper as a creative tool to be used by humans rather than something that’s going to put artists out of business. “Everyone on our team is a professional-level musician,” Silverstein says. “That sense of camaraderie with the creative community is an important thing to us.”

Indeed, listening back to the tracks generated by software like Amper, Magenta, or Watson, it’s hard to imagine any of them upending the craft of songwriting anytime soon. Music has sets of rules that can be taught to computers, and machines are getting better at “hearing” and analyzing sound. Even so, there’s still something innately and uniquely human about the motivation and process behind creating music and writing songs.

“At some point, Amper will be making music incredibly well, perhaps indistinguishable from anyone,” Silverstein says. “Whether it’s in two years or a hundred years, it will happen. But as a human race, we intrinsically value making art together and making music with people. That will never go away.”

Fast Company