Conversant Labs’ Quest To Make Smartphone Apps Usable For The Visually Impaired

Imagine what it would be like if one day your doctor told you that your eyesight will soon be functionally gone. That’s what Chris Maury experienced back in 2011, but when he started looking into digital accessibility tools that would enable him to stay productive and keyed into his digital life, he found very few. Maury founded Conversant Labs in 2014. It released its first app for the visually impaired, voice-driven shopping app SayShopping, last July.

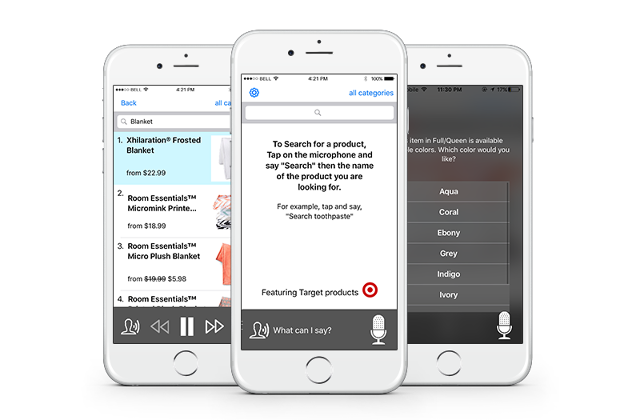

Next up for Conversant Labs: a software kit that will help app developers make their products accessible to the visually impaired. Conversant Labs will soon release its SayKit software development kit (SDK) for iOS applications, which was built with the knowledge the team acquired while they were making SayShopping. SayShopping was something of a test run to try solving a complex problem for the visually impaired. When Maury and his team spoke to people in the visually impaired community, the most popular request was for a tool to help navigate around the physical world—which was a far more difficult problem than Maury felt his startup-sized team and resources could solve. The second-highest request, a shopping app, was feasible—and important.

“Shopping is one of the everyday tasks we take for granted that’s difficult for the blind and visually impaired to do independently,” says Maury. “Shopping, purchasing products, is something that you weren’t able to do with your voice” before SayShopping came around.

Currently, the most common visual assistance tool for digital devices is the screen reader, a relatively expensive piece of software (licenses cost $1,000-$2,000 depending on the brand) that audibly reads out words as it scans a website or app’s layout. Not only is the process inefficient, as it waits for the visual page to load before it reads it out, but compliance is spotty, as many developers don’t know about, or don’t bother coding in, the tags that help the screen reader recognize objects in the layout.

And most visual assistance technologies, like screen readers, are focused on people losing their vision and trying to get back into the workforce—in other words, people who are already computer literate, says Maury. A large segment of the blind community is older, with little computer experience. To use a screen reader, they must first be taught how to use and navigate within a computer interface. Sometimes it takes two days of training for an older visually impaired person to learn how to do a simple Google search, says Maury.

“I was talking to someone else who, the way he shops online, he uses a screen reader to find the customer service number and calls it, and says ‘I’m blind, I need you to shop for me.’ The tools like a screen reader are just way too complicated for this large percentage of the blind community to use,” says Maury. “So that was one of the core problems we were trying to solve. Instead of trying to teach them a computer and then this [assistive] technology on top of it, we wanted them to be relying on behaviors that are much more similar to existing skills, like conversation. So rather than having to navigate a physical environment through swipe gestures in an application, you tap on the microphone and ask what you want to do, you know, send an email to my daughter, post to Facebook, read me the headlines.”

SayShopping launched in partnership with retail giant Target to help create an audio command-driven search tool for products in Target’s database. The SayShopping app needs access to a company’s API to parse through those databases of products, so it only works with select partner companies like Target and e-commerce. After releasing SayShopping, the Conversant Labs team had to decide whether to continue developing SayShopping or to build something completely new. It wasn’t that much of a debate: The Conversant Labs team overwhelmingly voted to create an SDK that other apps could use to make their products more accessible.

It’s a better financial move. Instead of depending on one app for revenue, Conversant Labs will sell its SDK as technology-as-a-service, alongside consulting to help developers and companies integrate the SDK into their products and troubleshoot accessibility issues.

It’s a great time to pivot, too, as the tech landscape is becoming more aware of the need for accessibility, says Maury. He recently returned from speaking at last week’s annual CSUN International Technology and Persons With Disabilities Conference and noted that more startups are focusing on accessibility earlier in development. While startups will always be strapped for resources, the old excuse that they don’t have the time or money to program accessibility features is starting to fade.

“For basic applications, whether it’s a website or a mobile app, the amount of effort involved is minimal. The amount of technical proficiency is minimal. For websites, it’s just standard HTML tags. It’s much more a situation of them saying ‘I don’t know what that entails so we’re not going to do it.’ That’s why increasing awareness is improving the situation,” says Maury. “In reality, if you know what to do, it’s not difficult to learn and it’s not difficult to implement—at least at the scale of a startup. The larger challenges with accessibility come in at the larger organizations.”

Conversant Labs is releasing its SDK soon for iOS, which is overwhelmingly the platform of choice for the visually impaired community, says Maury. That’s because Apple has baked in accessibility features as defaults in the iOS app creation process—which isn’t surprising, as Apple has made accessibility a priority since its early days. Maury also lauds Facebook for innovating machine learning that recognizes images in the code and auto-generates alt-text tags, similar to what Twitter just announced.

After Conversant Labs releases the SDK, it can only wait to see how app developers will use it. The release will include a few demo applications Conversant Labs is building to explore the SDK’s potential, like a hands-free cooking app that they’re tentatively calling YesChef.

The team is also thinking about more technical tools for the workplace. When Maury started losing his vision, he became acutely aware of what visual aspects of his job needed to change, like getting coworkers to help him design PowerPoints and getting materials sent to him digitally ahead of time instead of being passed out in a company meeting. Graphic design became very difficult for him, too, so the team is exploring how to build wireframe-assistance tools and new ways to mock up workflows.

Digitization and technological advances haven’t been a total impediment to the visually impaired. The community has benefited from the transition to smartphones, whose smaller screens leave less on-screen content for a visually impaired user to parse through.

But far from being a tool just for the visually impaired, Maury sees developing audio assistance tech as contributing to a tech future we’re already headed toward, one in which Siri-style assistants help with every request.

“Conversational interaction has the potential to be the primary method that the mass consumer market works [within]. [Amazon] Echo is a really good example of this. Virtual reality and augmented reality are going to be a major way if not the primary way that we interact with these devices,” predicts Maury. “Voice is how we use computers in the future, and we think that by focusing on the disabled community today, we can work on the market as voice matures.”

Fast Company
