Alphabet’s DeepMind AI is better than you at Atari games

AI has historically had a hard time with ‘Pitfall,’ ‘Solaris,’ and a few other titles.

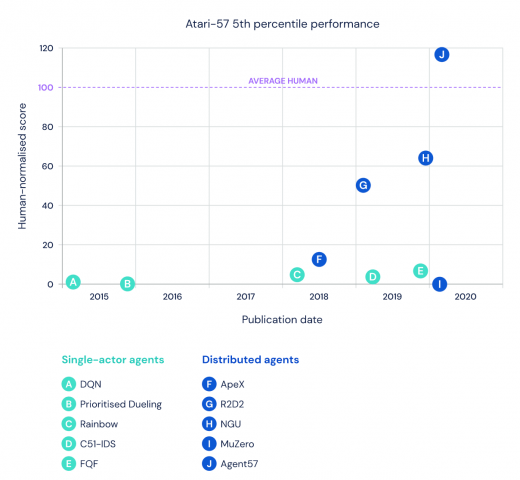

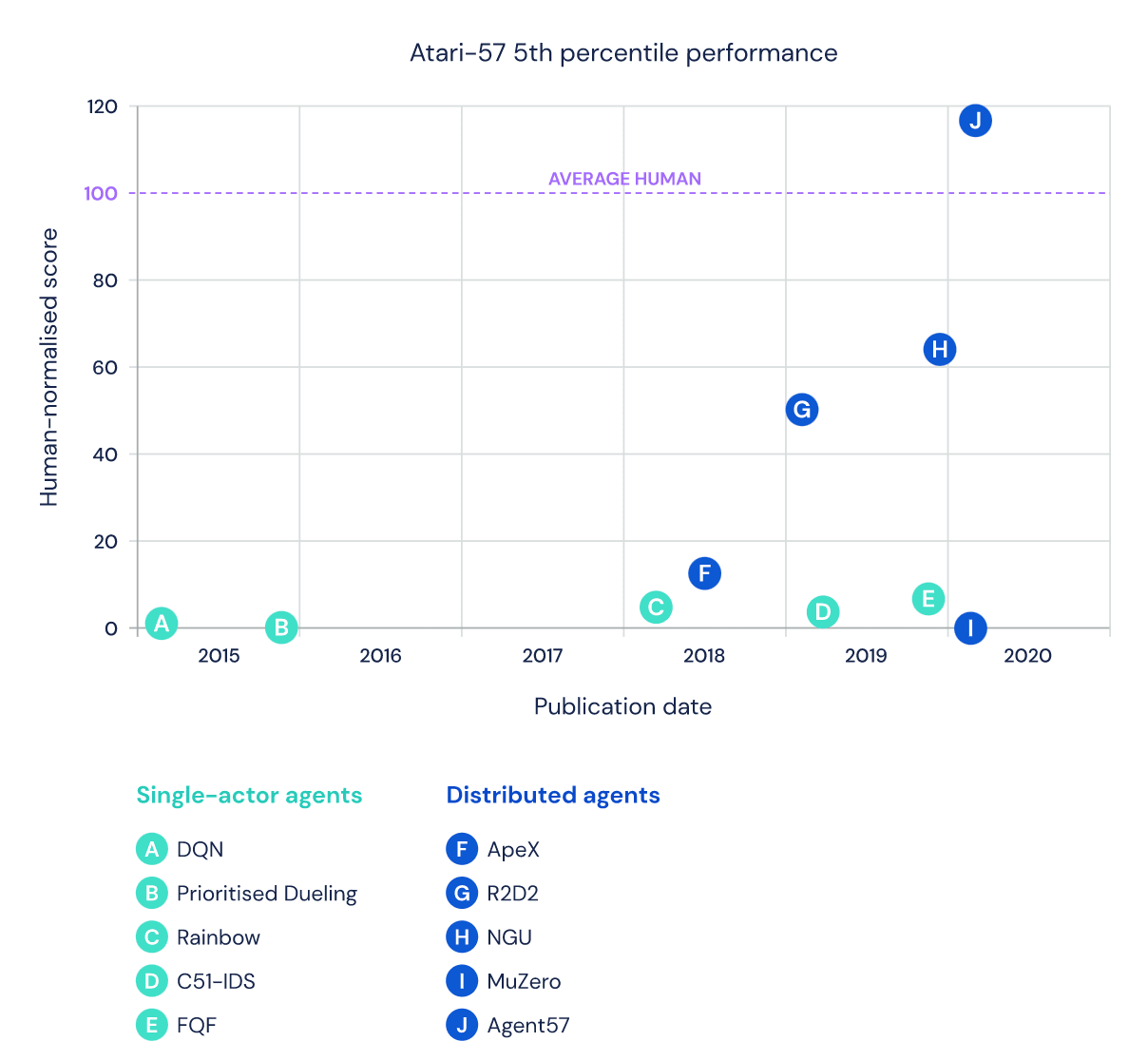

Having AI agents learn to play simple video games is an ideal way to test their effectiveness, because success can be measured with a score. Alphabet’s DeepMind designated 57 particular Atari games to serve as a litmus test for its AI, and established a benchmark for the skills of an average human player. The company’s latest system, Agent57, made a huge leap over previous systems, and is the first iteration of the AI to outperform the human baseline on all 57 games. In particular, Agent57 has proven its superhuman skills in Pitfall, Montezuma’s Revenge, Solaris and Skiing, games that have been major challenges for other AIs.

According to MIT Technology Review, Pitfall and Montezuma’s Revenge require the AI to experiment more than usual in order to figure out how to get a better score. Meanwhile, Solaris and Skiing are difficult for the AI because there aren’t as many indications of success: the AI doesn’t know if it’s making the right moves for long stretches of time. DeepMind built upon its older AI agents so that Agent57 could make better decisions about when to explore and when to exploit what it already knows to score, and could better balance short-term and long-term performance in games like Skiing.

Technology Review notes that while these results are impressive, AI still has a long way to go. These systems can only figure out one game at a time, which it says is at odds with the skills of humans: “True versatility, which comes so easily to a human infant, is still far beyond AIs’ reach.” That said, AI is already in use across industries. The lessons learned from Agent57 could help improve performance, even if human-level skills aren’t achievable for now.