Today marks the first anniversary of the school shooting in Parkland, Florida, which left 17 people dead. While the tragedy changed the parameters of the U.S. gun control debate, the massacre also highlighted a thriving and rotten side of the internet. Mere days after the mass murder, conspiracy videos replete with false claims about the event began popping up online. One video that went viral and was featured in YouTube’s trending section claimed that Parkland survivor David Hogg was an actor.

YouTube has long been criticized for promoting videos that intentionally disseminate misinformation. The problem has been especially acute in the wake of nationally televised traumatic events. Moments after a mass shooting, users upload videos to YouTube pushing a counter-narrative. All too often, those videos get a boost from the company’s algorithm, which helps them garner hundreds of thousands of views.

Every time it happens, YouTube acknowledges the problem and says it will work to keep conspiracy videos from being promoted. Last week, the company made its strongest statement yet. It said it would no longer recommend videos that even “come close to” violating its terms of service. Ostensibly, this means content that aims to “debunk” well-known moments in history, such as 9/11, or bizarre unfounded theories, like the claim that the earth is flat. YouTube’s latest move was spurred by a recent BuzzFeed News investigation, which showed how easy it was to be fed conspiracy theory content simply by following YouTube’s “up next” autoplay videos.

But despite YouTube’s best efforts and recent promise to crack down on the problem, conspiracy theories and misinformation are still peddled frequently via its autoplay videos and top recommendations.

Over the last few days, I’ve searched YouTube using only terms related to news events or trending topics. While searches for some tragic events that had previously returned a bevy of conspiracy-laden videos seemed clean, others had questionable results.

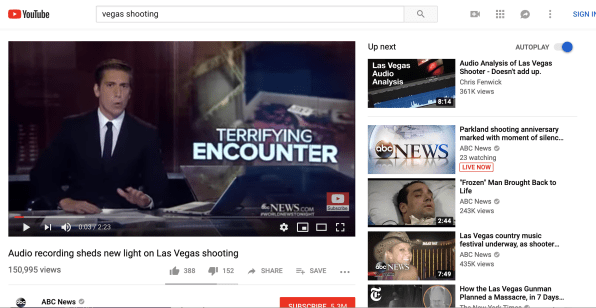

For example, the Las Vegas shooting still seems to be an open playing field for so-called truthers. Although the initial search results are pretty clean (generally from major news channels), things get hairy once you begin clicking the recommended videos.

One video entitled “I HAVE PROBLEMS WITH THE NEW LAS VEGAS/MANDALAY BAY SHOOTING PHOTOS” is the fifth recommendation after the fourth most popular search result. After following the autoplay trail from a top result posted by The New York Times, I found myself watching an hour-long video from a YouTube channel called “Crowdsource the Truth 2” entitled “Exploring the Hidden Truth of the Las Vegas Shooting.”

Soon, I was shown content that went far beyond Las Vegas. For example, when I clicked on a top search result for the query “vegas shooting” (also from The New York Times), the sixth auto-played video was “Trey Gowdy Best Moments!!!” The first suggestion below autoplay for this video was “State Fusion Center accidentally releases records on Mind control EMF.” (I should add that this “State Fusion” video, while not auto-played, was peppered throughout my recommendation results.) When clicked, it led to even more bizarre videos, including “Trump: It’s Not A Conspiracy Theory–Millions Illegally Voted For Hillary.”

Another search result for “vegas shooting,” from midway down the page, is a video from ABC News. The first autoplay video after it was entitled “Audio Analysis of Las Vegas Shooter–Doesn’t add up.” The recommendations that follow lead down a deep, conspiracy-laden rabbit hole.

It wasn’t just my surfing history bringing about these recommendations either. My editor, Christopher Zara, found a video entitled “Las Vegas Shooting Conspiracy: What They’re Not Showing You,” after one click-through.

Beyond Vegas

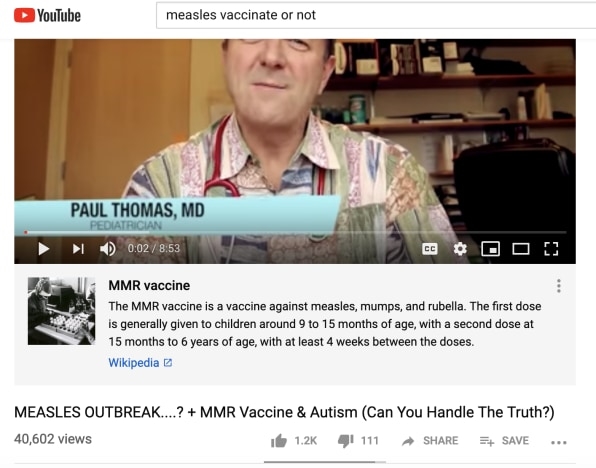

The Vegas shooting is not exactly an outlier here. I began typing “measles vaccination” and chose the autocomplete suggestion “measles vaccine or not.” The first video is, thankfully, from the Mayo Clinic. But during my tests, the first autoplay video after that was called “MEASLES OUTBREAK….? + MMR Vaccine & Autism (Can You Handle The Truth?),” supposedly uploaded by a doctor. (It should be noted that a day later this was no longer the autoplay selection, but it was still prominently featured among the other recommendations.) Ironically, below these videos sat a short Wikipedia blurb providing some context about vaccines, its content directly contradicting what some of these videos claim.

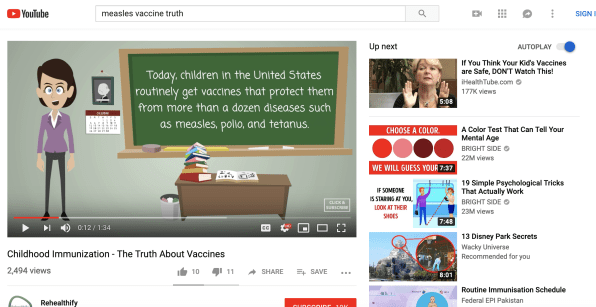

Other questionable search queries and results popped up as well. For example, YouTube recommended I search “measles vaccine truth.” So I did. The third search result was from a bizarre account called “Rehealthify,” featuring zombie-like cartoons speaking with monotone computerized voices and spouting what seems like Googled facts about vaccines. And the first recommended autoplay video was “If You Think Your Kid’s Vaccines are Safe, DON’T Watch This.” I did not.

Other examples

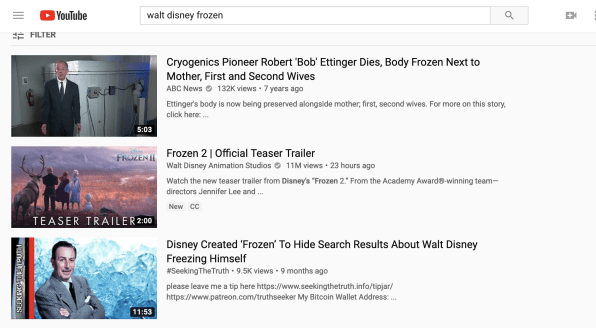

I also decided to try some trending searches, based on Google Trends, and got some interesting results. For example, Disney’s Frozen was trending thanks to the recent release of the Frozen 2 trailer, so I searched “walt disney frozen.” Sure enough, the third result was entitled “Disney Created ‘Frozen’ To Hide Search Results About Walt Disney Freezing Himself.” I actually do recommend you watch this video, if only because the narrator’s cadence is truly something to behold.

All these examples took only minutes to find. They relied solely on searching for popular and trending events and clicking on either the autoplay videos or the top recommended suggestions.

I reached out to YouTube for comment, sharing all of the examples above. The company said none of the videos violated its policies. A spokesperson provided me with the following statement:

YouTube is a platform for free speech where anyone can choose to post videos, as long as they follow our Community Guidelines. Over the last year we’ve worked to better surface credible news sources across our site for people searching for news-related topics, begun reducing recommendations of borderline content and videos that could misinform users in harmful ways and introduced information panels to help give users more sources where they can fact check information for themselves. While we’ve made good progress, we also recognize there’s more to do and continue to invest in the user experience on YouTube.

The truth of the matter is that people are going to continue using YouTube to propagate their conspiracy theories. For years, it’s been the go-to place for this sort of content, precisely because the company rarely cracked down. As YouTube and others, like Facebook, began experimenting with trending sections and algorithmic recommendations, they never put in place adequate safeguards against malicious and dangerous content.

The result was a free-for-all that helped propel now-prominent voices like Alex Jones, people who make wild and dangerous claims, such as that 9/11 never happened or that the Sandy Hook shooting was fake. For years, these videos benefited from YouTube’s algorithm, which favored the most outlandish content, and they amassed millions of views as a result.

It’s true that the platforms are beginning to admit that they need better quality control. And, admittedly, it may always be a game of whack-a-mole. But if YouTube can use artificial intelligence to instantly detect when someone has uploaded nudity (and it’s been very effective at eliminating porn), it seems likely that the same engineers could find a better way to control this misinformation problem if they really put their minds to it. For its part, YouTube tells me that it has a strong bias toward freedom of speech, even when the viewpoints are controversial.

Still, the company insists it no longer has an anything-goes mentality when it comes to content. Over the last year it’s tried to hone its community guidelines and promote more content from credible sources to fix the problem. YouTube admits it’s not perfect yet but says that many of its watch-next panels promote more authoritative content. In my own experience surfing the platform, that was occasionally true. Then, in an instant, the recommendations would take a dark turn.

On a day like today, the anniversary of a very dark day in American history, we should stop and think about the information we consume and how to better inform others. Platforms like YouTube have made it possible for anyone to say anything and become famous doing so. Now they are beginning to backtrack, but the solutions don’t seem to be working, and the damage is already done.
