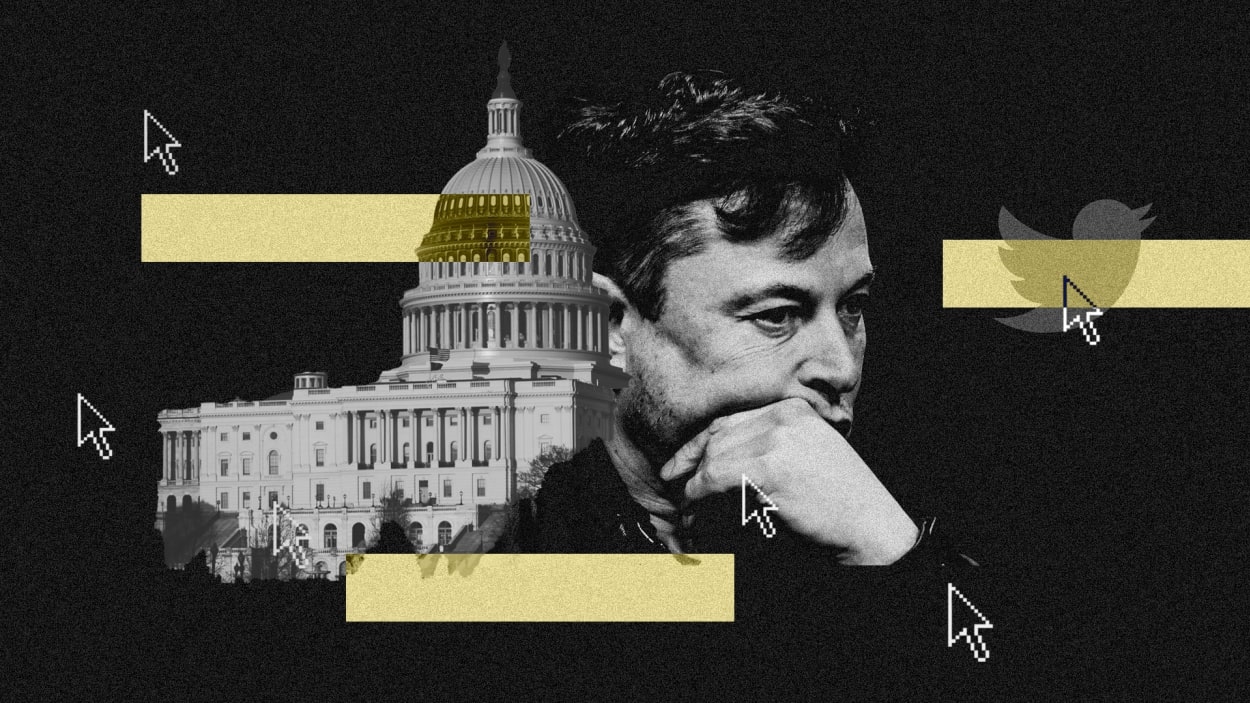

Elon Musk may inadvertently spur Congress to action on social media regulations

Twitter’s reinstatement of previously banned accounts will almost certainly change the day-to-day experience on the platform. In the long term, it also might refocus the debate over how to regulate speech on social networks.

Under Elon Musk’s ownership, Twitter is now simultaneously offering “amnesty” to accounts once banned for spewing hate, abuse, or BS, and showing the door to many of the people once responsible for controlling that kind of content.

Casey Newton reported on Platformer Monday that Twitter has begun the process of reinstating roughly 62,000 accounts, each of which has more than 10,000 followers. These, he writes, include one account with more than 5 million followers, and 75 accounts with over 1 million followers.

Meanwhile, Twitter has now shed, through firings or walkouts, two-thirds of its pre-Musk workforce. The remaining trust and safety staff is spread so thin now that, in some cases, content management for whole countries rests on the shoulders of one person, a source tells Fast Company.

Short-term effects

With the return of previously banned accounts, Twitter could become a noisier place, and perhaps a less useful and trustworthy one. It’s worthwhile to recall why Twitter banned tens of thousands of QAnon and anti-vax accounts, along with those of big names like Donald Trump and Kanye West.

Such accounts demonstrated “a pattern of abuse or hate or misinformation or other policy-violating content,” says Katherine Lo, the content moderation program lead at Meedan, a tech not-for-profit that has worked with Twitter on safety procedures. “Because it was easier to ban people based on an established pattern, it mitigated a lot of these harms.” Twitter will likely now be far more hesitant to impose outright bans on problem accounts.

“If these accounts are coming back, perhaps now with the knowledge of how to evade policy violations, [and] under a new policy that is less likely to ban them, they’re able to perpetuate those harms that they did before,” Lo says.

Lo adds that it’s likely Musk’s Twitter will focus on allowing previously banned accounts with large followings back on the platform. This might be a good business decision because it might increase the time people spend on Twitter. But a high follower count and a history of harmful tweets are a bad combination from a safety point of view. Mob abuse becomes a risk.

“These high-profile accounts . . . can be huge vectors for types of harassment that are very difficult to moderate,” Lo says. Even if only one or two accounts post an actual slur against a target, other accounts can do harm by simply piling on, without themselves posting any policy-violating content.

That mob mentality, along with an increasingly toxic feel to Twitter in general, might end up driving more people off the platform for good. When large chunks of users leave, network effects shrink, so you can no longer assume that people you rely on for information and viewpoints will be there. Some of this has already happened.

“I can’t believe it’s been only a month and how much has changed in a month,” said Katie Harbath, founder and CEO of Anchor Change and previously Facebook’s elections integrity lead, at a Knight Foundation Informed conference roundtable on misinformation Monday. “[I] can just anecdotally say from my own Twitter feed it’s become less useful; people are leaving.”

Long-term effects

If anything good comes out of a Musk-controlled Twitter, it may be a referendum on the free speech absolutism the billionaire espouses. Musk has said that the platform will allow any speech that doesn’t violate the law of the land, but that the platform may decide not to “boost” or run ads around hateful content.

Of course, there’s no such thing as absolute free speech in organized societies. There’s always a hard-won compromise between absolute free speech and the restriction of harmful speech.

Finding that compromise is hard enough, but it must be done on a whole new playing field with very different attributes and capabilities (e.g., “virality” or “reach”). That matters, because, as Meedan’s Lo points out, it’s possible to harm someone on social media without breaking any libel or harassment law on the books.

“We are increasingly at a point where we are recognizing the tremendous political and societal dangers that come from our social media environment,” says George Washington University law school professor Paul Schiff Berman. Our best model for dealing with speech issues is the First Amendment, but that’s designed to restrain governments from stifling the free flow of information—not private companies like Twitter. Social networks on the internet are 21st century “town squares,” and they’re controlled by big for-profit tech companies that are used to a hands-off approach from regulators.

“As a result of that, I think there is increased interest in either having those social media platforms do more self-regulating,” Berman says, “[or] that there will need to be more governmental regulation of these entities in the name of speech or in the name of democracy.”

As for self-regulating at Twitter, so far that seems to mean Musk and his inner circle making ad hoc calls. And they’re not necessarily politically neutral. “It seems to be leaning more toward the right rather than the left or in the center,” says Dennis Baron, an English and linguistics professor at University of Illinois, Urbana-Champaign, who’s written two books on speech in the digital age.

Musk said weeks ago in a tweet that Twitter would refrain from making any account reinstatements before forming an independent council to make content moderation decisions (similar to what Facebook did long ago), an approach Berman favors. But major content moderation decisions are being made, and Musk has said nothing about forming a council since his original tweet.

Government regulation of social networks is possible, if unlikely. “Regulating big tech is one of two issues in which Republicans and Democrats share an interest as we head into the new congressional session (the other is countering China),” former Cybersecurity and Infrastructure Security Agency director Christopher Krebs said during the Knight Foundation panel Monday. And Musk’s decision to reinstate previously banned Twitter accounts (like those of Trump and QAnon adherents) could focus Congress’s interest in imposing some basic trust and safety standards on large social networks.

“The problem is whether you could get consensus on what the regulation should be and how it should be defined,” Berman says. Democrats and Republicans have very different motivations: The former would like to put a check on the control a single tech executive can have over speech; the latter seem interested in any piece of legislation that might make right-wing voices louder on social platforms.

Federal law (Section 230 of the Communications Decency Act) already grants social network operators some immunity from lawsuits over harmful content posted by users, or over content moderation decisions they make. But 230’s protections have already been narrowed by Congress several times, and now the Supreme Court has granted cert in Gonzalez v. Google, a case that could further narrow its protections.

In the long view, you can see Musk’s Twitter takeover as just one chapter of society’s extended process of deciding on the rules of the road for interaction on a very new kind of communications platform called the social network.

“Think about the printing press,” says Baron. “When that was invented, governments set about deciding who can print what, and religious organizations decide who gets a license to read this stuff or write it. Every time there’s a change in our communications platform, there are new worries about the right approach to controlling it, to make sure the right people are reading and writing and talking.

“A new system always has to find its way,” he adds.
