Empathic AI: The Next Generation of Vehicles Will Understand Your Emotions

Transportation will never be the same once our machines know how we feel.

We are entering a wholesale, global disruption of the way people move from one place to another. Of all the changes in this sector, the one likely to have the most significant impact on human society is the rise of autonomous (i.e., self-driving) vehicles.

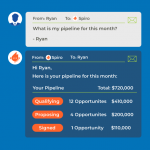

The transportation industry, historically dominated by a handful of large vehicle-manufacturing brands, is evolving into an ecosystem of ‘mobility services,’ underpinned by artificial intelligence (AI). A major step in the development of AI is to give it ‘empathy,’ allowing it to observe and understand our physiological and emotional states. This will mostly be achieved by connecting to wearable or remote sensors, the same way that fitness bands allow our physical state to be monitored.

By feeding this sensor data into AI systems, we can train them to know how we feel and how to respond appropriately. This kind of empathy can also be enhanced by giving AI its own artificial emotions, imbuing it with simulations of feelings.

Empathic technology will have no small effect on the mobility sector. How might an empathic vehicle look?

Safe Travels

There is already a growing body of research from top-tier auto companies into what kind of empathic interactions will protect drivers, passengers and everyone around them from harm. To investigate this, biometric sensors, cameras, and microphones are being used to detect:

- Fatigue & drowsiness: e.g., monitoring head or eye movements, posture or heart/breathing rate.

- Distraction: e.g., gaze detection to ensure the driver is watching the road.

- Intoxication: e.g., using infrared optics or analyzing voice or breath.

- Medical incidents: e.g., detecting a potential cardiac event from a wearable heart-rate sensor.
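As a rough illustration of how such readings might be turned into safety flags, here is a minimal rule-based sketch. It is not taken from any manufacturer's system: the `DriverReading` fields, the `safety_flags` function, and every threshold are hypothetical, and a real system would more likely rely on trained models and validated limits than on fixed cut-offs.

```python
from dataclasses import dataclass


@dataclass
class DriverReading:
    """One snapshot of driver-monitoring data (hypothetical fields)."""
    eyes_closed_fraction: float  # share of the recent window with eyes closed, 0.0 to 1.0
    gaze_on_road: bool           # True if gaze detection says the driver is watching the road
    heart_rate_bpm: float        # from a wearable heart-rate sensor


def safety_flags(reading, eyes_closed_limit=0.3, hr_low=40.0, hr_high=150.0):
    """Return a list of safety concerns for one reading.

    All thresholds are illustrative assumptions, not clinical or
    industry values.
    """
    flags = []
    if reading.eyes_closed_fraction > eyes_closed_limit:
        flags.append("fatigue")
    if not reading.gaze_on_road:
        flags.append("distraction")
    if not (hr_low <= reading.heart_rate_bpm <= hr_high):
        flags.append("possible_medical_incident")
    return flags


if __name__ == "__main__":
    # A drowsy, distracted driver with a normal heart rate.
    print(safety_flags(DriverReading(0.5, False, 70.0)))
```

Intoxication is left out of the sketch because the article only names the sensing methods (infrared optics, voice or breath analysis), not a measurable signal that could be thresholded.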

A Comfortable Journey

After ensuring the safety of the humans in the system, empathic tech can be employed to optimize the ride experience. There is a universe of auto-suppliers you’ve probably never heard of, who build all the components and systems that end up in the well-known vehicle brands. They are leading the way to a more empathic ride, with innovations such as:

- Environmental controls: e.g., lighting, heating, AC, sound and olfactory output, customized to suit your current mood.

- Physical controls: seat position, engine configuration, etc.

- Humanizing AI feedback: virtual assistants like Alexa and Siri that are invading our homes and phones are also reaching into our vehicles. With empathic AI we can tailor their feedback to suit our preferred style of interaction.
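To make the idea of mood-based customization concrete, the sketch below maps a detected mood to a set of cabin settings. Everything in it is an assumption made for illustration: the mood labels, the preset values, and the `cabin_settings` function are invented here and do not describe any supplier's product.

```python
# Hypothetical mood labels and cabin presets, invented for illustration.
CABIN_PRESETS = {
    "stressed": {"lighting": "dim warm", "temperature_c": 21.0, "audio": "calm playlist"},
    "tired": {"lighting": "bright cool", "temperature_c": 19.0, "audio": "upbeat playlist"},
}

# Used when the mood is unknown or has no preset.
DEFAULT_PRESET = {"lighting": "neutral", "temperature_c": 21.0, "audio": "unchanged"}


def cabin_settings(mood, overrides=None):
    """Return cabin settings for a mood, with optional personal overrides."""
    # Copy the preset so callers never mutate the shared defaults.
    settings = dict(CABIN_PRESETS.get(mood, DEFAULT_PRESET))
    if overrides:
        settings.update(overrides)
    return settings


if __name__ == "__main__":
    # A stressed occupant who prefers a slightly warmer cabin.
    print(cabin_settings("stressed", {"temperature_c": 23.0}))
```

Keeping personal overrides separate from the presets reflects the point about tailoring the system to each person's preferred style rather than applying one response to everyone.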

An Entertaining Ride

Now that our customer is safe and comfortable, they can benefit from AI that knows how to push the right emotional buttons at the right moment. This is particularly likely to apply to the onboard music and infotainment systems. A vehicle could also be designed to heighten the thrill of the ride in subtler ways, such as offering to increase the engine’s power output when the driver is feeling confident and happy.

The New Norms of Autonomous Society

An autonomous vehicle doesn’t exist in a bubble. Much of its intelligence is based on sensing its environment and making rapid judgments about how to act. Each vehicle will also be integrated with a global network of systems, able to share information ranging from weather forecasts to road obstructions. By connecting each vehicle to its neighbors and the wider world, we will see the emergence of a new type of ‘social’ structure with its own norms of behavior.

This AI-driven ‘society’ will involve interactions not just between the vehicles and their drivers or passengers, but also with onboard devices, nearby pedestrians, other vehicles, and their occupants, as well as surrounding infrastructure. The etiquette and rules of what the market calls ‘vehicle-to-everything’ (V2X) communications will establish themselves as we gradually let go of the wheel and hand our mobility needs over to ‘the machines.’

This mobility ecosystem is also likely to share data and processes with the rest of the AI in our lives, such as in our smartphones and home-automation systems. If coordinated correctly, this unified data architecture would allow empathic vehicles to know us much better, behaving ever more like a trusted friend.

This is not just a technological problem; it’s a monumental user-experience challenge too. Gradually increasing the empathic capability of the system will support the evolution of the transport experience towards one that is not only safe and comfortable but also delightful.

The future of mobility is emotional.

Editor Note & Disclaimer: The author is a member of the Sensum team, which is an alumnus of our ReadWrite Labs accelerator program.

The post Empathic AI: The Next Generation of Vehicles Will Understand Your Emotions appeared first on ReadWrite.
