Enterprise AI needs high data quality to succeed

There’s no doubt that AI has usurped big data as the enterprise technology industry’s favorite new buzzword. After all, it appears on Gartner’s 2017 Hype Cycle for emerging technologies for a reason.

While progress was slow during the first few decades, AI advancement has accelerated rapidly over the last decade. Some people say AI will augment humans and maybe even make us immortal; others, more pessimistic, say AI will lead to conflict and may even automate our society out of jobs. Despite these differences in opinion, the fact is that only a few people can identify what AI really is. Today, we are surrounded by small forms of AI, like the voice assistants on our smartphones, often without noticing how much work they do for us. From Siri to self-driving cars, AI has already shown a lot of promise, along with the benefits it can bring to our economy, our personal lives and society at large.

The question now turns to how enterprises will benefit from AI. But, before companies or people can obtain the numerous improvements AI promises to deliver, they must first start with good quality, clean data. The success of AI relies on accurate, cleansed and verified data.

Data Quality and Intelligence Must Go Hand-in-Hand

Organizations currently use data to extract numerous informational assets that assist with strategic planning. Those strategic plans dictate the future of the organization and how it fares against rising competition. Considering the importance of data, the potential impact of low-quality information is intimidating to think about. In fact, bad data costs the US about $3 trillion per year.

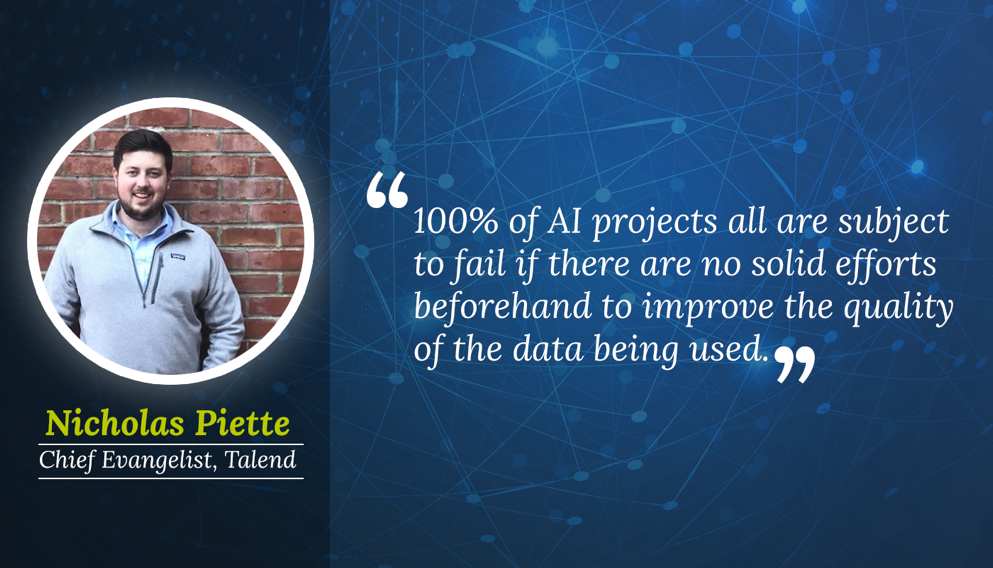

Recently, I had the opportunity to interview Nicholas Piette and Jean-Michel Franco from Talend, which is one of the leading big data and cloud integration companies. Nicholas Piette, who is the Chief Evangelist at Talend, has been working with integration companies for nine years now and has been part of Talend for over a year.

When asked about the link between data quality and artificial intelligence, Nick Piette responded with authority that you cannot do one without the other. Data quality and AI go hand-in-hand, and data quality must be present for AI to be not only accurate, but impactful.

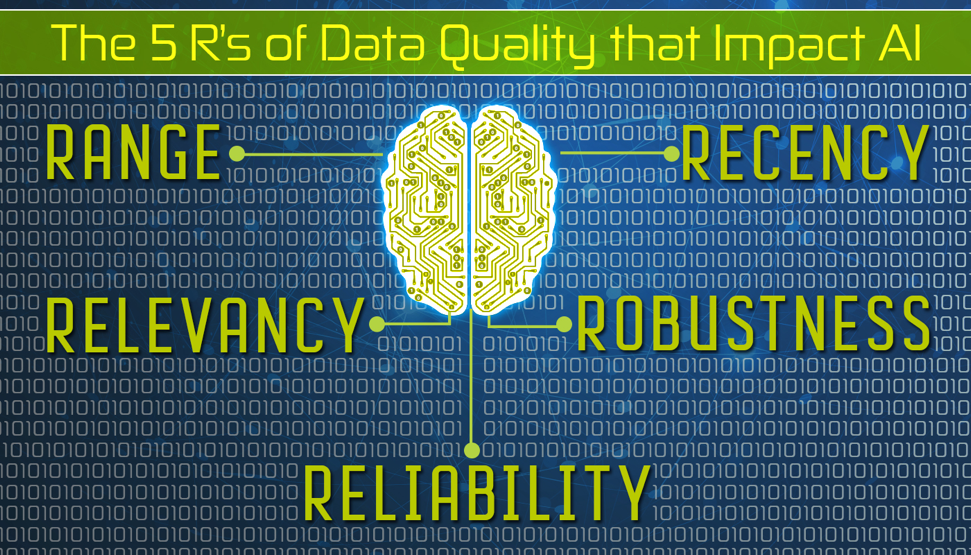

The Five R’s

To better understand the concept of data quality and how it impacts AI, Nick used the five R’s method. He mentioned that he learned this method from David Shrier, his professor at MIT. The five R’s mentioned by Nicholas include:

- Relevancy

- Recency

- Range

- Robustness

- Reliability

If the data you are using to fuel your AI-driven initiatives ticks off each one of these R’s, then you are off to the right start. All five are important, but relevancy rises above the rest. Whatever data you have should be relevant to what you do, and should serve as a guide and not as a deterrent.

We might reach a point where the large influx of data we have at our fingertips is too overwhelming for us to tell which elements of it are really useful and which are disposable. This is where the concept of data readiness enters the fold. Having mountains of historical data can be helpful for extracting patterns, forecasting cyclical behavior or re-engineering processes that lead to undesirable outcomes. However, as businesses continue to advance toward the increased use of real-time engines and applications, data readiness (information that is the most readily or recently made available) becomes more important. The data that you apply should be recent and should have figures that replicate reality.
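One way to make recency and range concrete is a small validation pass that runs before data reaches a model. The following is a minimal Python sketch, not Talend’s method or anything Nick described in the interview. The field names (`recorded_at`, `value`), the 30-day age limit, the plausible value range and the sample records are all hypothetical, and the missing-value check is an extra assumption on top of the five R’s.

```python
from datetime import datetime, timedelta, timezone


def check_record(record, now, max_age=timedelta(days=30), valid_range=(0.0, 250.0)):
    """Return a list of data quality issues found in one record.

    All thresholds are illustrative assumptions, not recommended values.
    """
    issues = []

    # Recency: flag readings older than the allowed age.
    if now - record["recorded_at"] > max_age:
        issues.append("stale")

    value = record.get("value")
    if value is None:
        # Extra assumption: a record without a value cannot be trusted.
        issues.append("missing value")
    elif not (valid_range[0] <= value <= valid_range[1]):
        # Range: flag values outside the plausible bounds.
        issues.append("out of range")

    return issues


# Hypothetical sample data; a fixed "now" keeps the example reproducible.
now = datetime(2017, 10, 1, tzinfo=timezone.utc)
records = [
    {"id": 1, "recorded_at": datetime(2017, 9, 25, tzinfo=timezone.utc), "value": 72.0},
    {"id": 2, "recorded_at": datetime(2016, 1, 1, tzinfo=timezone.utc), "value": 80.0},
    {"id": 3, "recorded_at": datetime(2017, 9, 30, tzinfo=timezone.utc), "value": 900.0},
    {"id": 4, "recorded_at": datetime(2017, 9, 30, tzinfo=timezone.utc), "value": None},
]

# Map each record id to its issues, then keep only records with none.
report = {r["id"]: check_record(r, now) for r in records}
clean = [r for r in records if not report[r["id"]]]
```

Here only the first record passes; the others are flagged as stale, out of range and missing a value. Relevancy, the R that Nick ranks highest, is deliberately left out because it depends on the business question and is hard to reduce to a rule like these.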

AI Use Cases: A look at Healthcare

When asked for the best examples of AI at work today, Nick said he considered the use of AI in healthcare a shining example of both what has been achieved using AI to date and what more companies can do with this technology. More specifically, Nick said:

“Today, healthcare professionals are using AI technology to determine the chances of a heart attack in an individual, or predict cardiac diseases. AI is now ready to assist doctors and help them diagnose patients in ways they were unable to do before.”

Our understanding or interpretation of what the AI algorithms produce dictates the use of AI in healthcare. This is true regardless of its current accolades. Thus, if an AI system comes up with new insights that seem ‘foreign’ to our current understanding, it’s often difficult for the end-user to ‘trust’ that analysis. According to Nick, the only way society can truly trust and comprehend the results delivered by AI algorithms is if we know that at the very core of those analyses is quality data.

Quality-Driven Data

Nicholas Piette added that ensuring data quality is an absolutely necessary prerequisite for all companies looking to implement AI. He put it this way:

“100% of AI projects are subject to fail if there are no solid efforts beforehand to improve the quality of the data being used to fuel the applications. Making no effort to ensure the data you are using, is absolutely accurate and trusted—in my opinion—is indicative of unclear objectives regarding what AI is expected to answer or do. I understand it can be difficult to acknowledge, but if data quality mandates aren’t addressed up front, by the time the mistake is realized, a lot of damage has already been done. So make sure it’s forefront.”

Nick also pointed out that hearing they have a data problem is not easy for organizations to digest. Adding a light touch of humor, he said “Telling a company it has a data problem is like telling someone they have an ugly child.” But the only way to solve a problem is to first realize you have one and be willing to put in the time needed to fix it.

First Step is Recognition

Referring to the inability of companies to realize that they have a problem, Nicholas pointed out that more than half of the companies he has worked with did not believe they had a data problem until it was pointed out to them. Once it was, they had their “aha” moment.

Nick Piette further voiced his opinion that it would be great if AI could, in the future, explain exactly how it reached an answer and which computations went into that conclusion. Until that happens, data quality and AI run in parallel. Success in AI will only come from the accuracy of the data that goes in.

“If you want to be successful, you have to spend more time working on the data and less time working on the AI.”

Nicholas Piette (Talend)

About the Author

Ronald van Loon is an Advisory Board Member and Big Data & Analytics course advisor for Simplilearn. He contributes his expertise towards the rapid growth of Simplilearn’s popular Big Data & Analytics category.

The post Enterprise AI needs high data quality to succeed appeared first on ReadWrite.
