Exclusive: Apple software chief Craig Federighi on iOS 17’s new privacy features, why he’s afraid of AI, and why he’s not

It used to be just technology journalists and privacy advocates who got excited about the new privacy features that Apple would announce at its annual Worldwide Developers Conference. Now, data privacy and safety are at the top of many iPhone consumers’ minds.

These people will not be disappointed by Apple’s announcements today at this year’s WWDC. The company showed off a number of privacy and safety enhancements that will be part of its iOS 17, iPadOS 17, watchOS 10, and macOS Sonoma operating systems when they’re released this fall.

I previewed the new privacy and safety features last week—there were dozens. Then, in an exclusive interview, I heard more about them directly from Apple’s senior vice president of software engineering, Craig Federighi, who oversees the iOS operating system that powers the iPhone—arguably Apple’s most important product. He explained how some of them came to be, what they mean for the company, and what they mean for all of us. He also shared some more general thoughts about how AI is likely to affect our privacy in nefarious and unexpected ways.

Getting home safely

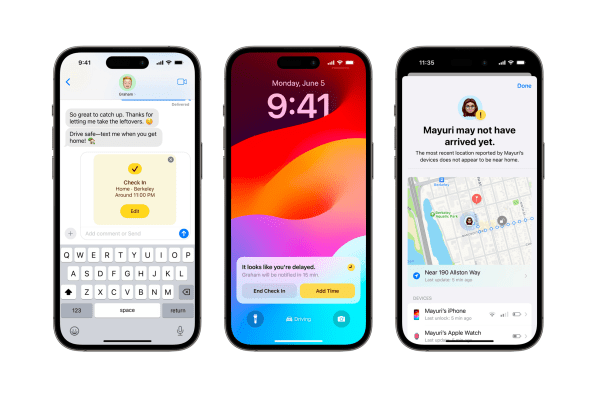

A groundbreaking new feature revealed today called Check In demonstrates Apple’s growing commitment to protecting not just users’ data, but the users themselves.

It allows an iPhone user to select contacts who will be automatically notified when the user arrives home after a night out, providing peace of mind. But if Check In notices that the user is not progressing as expected—say, the user said they would be home by midnight, but it’s 11:50 p.m. and they are still across town—Check In will touch base with the user to make sure they are okay. If the user doesn’t respond, Check In will send the user’s selected contacts a message with the user’s precise location, cell service status, the battery level of their iPhone, and the last time they actively used their iPhone. If something bad has happened, this data could be critical in finding them.

“There are so many people who have said [they] feel a little insecure when they’re walking home from dinner, walking from the library to their dorm,” Federighi tells me. Check In is a way “that we can provide some level of comfort and security for a large number of people.”

Check In is typical Apple in that all the information sent to your contacts is end-to-end encrypted, so it can’t be read by the company. The feature takes the hassle out of having to remember to manually share your location with a friend before you head out, and it even provides more privacy than the old, manual method because the friend won’t get access to your location unless you’ve failed to check in—when that location data is actually needed.
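Apple hasn’t published how Check In decides when to escalate, but the behavior described above maps onto a simple decision procedure. The Python sketch below is a hypothetical model, not Apple’s code: the `CheckInSession` and `next_action` names, the `on_track` flag, and the 15-minute response window are all assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from enum import Enum
from typing import Optional


class Action(Enum):
    WAIT = "keep monitoring"
    NOTIFY_ARRIVED = "tell contacts the user got home"
    PROMPT_USER = "ask the user whether they are okay"
    ALERT_CONTACTS = "share location, cell status, battery, last phone use"


@dataclass
class CheckInSession:
    # Hypothetical model of a Check In session (not Apple's implementation).
    expected_arrival: datetime
    # Assumed time the user has to answer the prompt before contacts are alerted.
    response_window: timedelta = timedelta(minutes=15)
    arrived: bool = False
    prompted_at: Optional[datetime] = None
    user_responded: bool = False


def next_action(session: CheckInSession, now: datetime, on_track: bool) -> Action:
    """Decide what a Check In-style feature should do at time `now`."""
    if session.arrived:
        # Happy path: contacts are simply told the user made it home.
        return Action.NOTIFY_ARRIVED
    if session.prompted_at is None:
        # Not prompted yet: check in with the user if they have fallen behind
        # or the expected arrival time has passed.
        if not on_track or now >= session.expected_arrival:
            return Action.PROMPT_USER
        return Action.WAIT
    if session.user_responded:
        # The user confirmed they are okay, so no location data is shared.
        return Action.WAIT
    if now - session.prompted_at >= session.response_window:
        # No answer within the window: escalate to the chosen contacts.
        return Action.ALERT_CONTACTS
    return Action.WAIT
```

In the article’s scenario, a user due home at midnight who is still across town at 11:50 p.m. gets a prompt first; in this sketch, contacts receive the location and device details only if that prompt goes unanswered for the whole response window.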

Check In is the newest safety feature added to the iPhone. It follows Crash Detection, a personal safety feature Apple introduced with iOS 16 last September, which lets the iPhone or Apple Watch detect when a user has been in a car crash and automatically call emergency services. Federighi says Crash Detection made him realize just how helpful an iPhone could be in allowing its owner to get assistance in emergency situations.

“When we shipped Crash Detection, I was amazed [by] how many letters we got, within days, from people who had been in car crashes. I was like, ‘Oh, my God, how many cars crash in a day?’ The answer turns out to be quite a few,” Federighi says. The people involved in the crashes “were confused and discombobulated. Maybe Crash Detection helped them get help a little earlier. In some cases, it saved their lives. It’s been a real eye-opener for us and helps us realize how much we can help.”

Protecting journalists and dissidents

The original Lockdown Mode, which Apple launched last year for iPhone, iPad, and Mac, allows a user to disable many of their devices’ features and services in order to thwart hackers. Apple acknowledges that most users will never have to worry about enabling Lockdown Mode: The types of attacks it protects against require the attacker to be extremely well funded and possess resources and skills your average hacker lacks. The main perpetrators of these kinds of infiltrations are nation-states and other deep-pocketed groups with access to extremely advanced spyware tools like NSO Group’s Pegasus.

Still, Federighi acknowledges that a limited group of iPhone users, including journalists, activists, and government officials, are already being targeted by such attacks and may find themselves increasingly vulnerable, especially if governments don’t place legal limits on spyware tools like Pegasus. That’s why, with iOS 17, Apple is not only beefing up Lockdown Mode (by blocking the iPhone from connecting to 2G cellular networks and from auto-joining insecure wireless networks) but also bringing Lockdown Mode to the Apple Watch for the first time.

“You have a class of users who may have real reason to believe that they could be targeted. For them, we can take advantage of a real asymmetry. Normally, attackers are looking across every surface area of code in the operating system looking for chinks in the armor, a narrow path through,” Federighi explains. “With Lockdown Mode, we can close the bulk of the access to those surfaces,” making attacks “much more expensive” to carry out “and much less likely to be successful.”

Working with AI and combating deepfakes

While we were on the topic of threats, I asked Federighi what he thinks about AI, especially when it comes to privacy and security issues. It’s a topic Federighi seems to have considered quite a bit.

“There’s no doubt that AI-based tools are going to become better and better at spotting potential security defects and paths for exploitation,” Federighi admits. “The good news is that those tools can be used by those trying to harden code and protect users, in addition to those who would be attempting to exploit them.”

In other words, it’s not just the bad guys who will benefit from AI. Federighi notes that Apple already uses a number of static and dynamic analysis tools to help the company spot potential code defects that may be hard for a human to detect. “As those tools get more and more advanced” with AI, he says, “we will be on the forefront of using those tools to find problems and to close them before attackers who might have access to similar tools would be able to use them.”

What does concern Federighi from a privacy and security standpoint, however, is the human element. Specifically, he worries about a rise in the use of deepfakes, AI-generated audio and video that can make it look like anyone is saying or doing anything. As AI tools become more accessible in the years ahead, deepfakes could increasingly be used in so-called social engineering attacks, in which the attacker persuades a victim to hand over valuable data by tricking them into thinking they are communicating with someone they’re not.

“When someone can imitate the voice of your loved one,” he says, spotting social engineering attacks will only become more difficult. If “someone asks you, ‘Oh, can you just give me the password to this and that? I got locked out,’ and it literally sounds like your spouse, that, I think, is going to be a real threat.” Apple is already thinking through how to defend users from such trickery. “We want to do everything we can to make sure that we’re flagging [deepfake threats] in the future: Do we think we have a connection to the device of the person you think you’re talking to? These kinds of things. But it is going to be an interesting time,” he says, and everyone will need to “keep their wits about them.”

Privacy enhancements galore

The privacy and safety enhancements Apple is introducing this year across iOS 17, iPadOS 17, watchOS 10, and macOS Sonoma are myriad.

One of my favorites is Link Tracking Protection, which works in Messages, Mail, and Safari Private Browsing. The feature cuts off the extra bits that marketers and websites add to a link’s URL in order to track a user around the web. This is the part of the URL that contains user-level tracking information, for example: www.examplewebsite.com/top10vacationdestinations?clickId=d77_62jkls. That “?clickId=d77_62jkls” bit is the tracking part. By automatically stripping this unneeded user-level tracking information in iOS 17, iPadOS 17, and macOS Sonoma, Apple is making it harder for marketers and others to stalk your movements.
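Stripping tracking parameters is essentially query-string filtering. The following Python sketch shows the idea using only the standard library. The blocklist is an assumption, since Apple hasn’t published exactly which parameters it removes: `clickId` comes from the example above, and `gclid` and `fbclid` are added as commonly seen ad-click identifiers. The `strip_tracking` name is hypothetical.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical blocklist (lowercase); Apple's actual list is not public.
TRACKING_PARAMS = {"clickid", "gclid", "fbclid"}


def strip_tracking(url: str) -> str:
    """Return `url` with known tracking parameters removed from its query string."""
    parts = urlsplit(url)
    # Keep every query parameter whose name is not on the blocklist
    # (matching is case-insensitive, so "clickId" and "clickid" both go).
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRACKING_PARAMS
    ]
    # Rebuild the URL; the remaining parameters are re-encoded by urlencode,
    # and urlunsplit drops the "?" entirely when nothing is left.
    return urlunsplit(parts._replace(query=urlencode(kept)))


print(strip_tracking(
    "https://www.examplewebsite.com/top10vacationdestinations?clickId=d77_62jkls"
))
```

The path, fragment, and any non-tracking parameters (say, a `page=2` for pagination) survive untouched, which is the point: the link still goes to the same page, just without the identifier that ties the click to you.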

Apple is also extending what it calls Sensitive Content Warnings to adults. If you’ve ever been in a public space and received an anonymously AirDropped photo containing indecent content, you’ll appreciate the new feature, which automatically hides the media when your device detects that it may contain nudity. The image will be obscured by default, though you can choose to view it if you wish.

Apple is also enhancing its existing Sensitive Content Warnings for children by automatically alerting them when a video texted to them contains nudity. (Previously this applied only to photos.) The child is then walked through their options, including reporting the content to their parents. Apple-watchers will note that the company is sticking with its decision not to scan a user’s photo library in the cloud. The iPhone analyzes photos and videos for sensitive content on the device itself. Neither Apple nor any third party ever has access to, or is aware of, any of the flagged content.

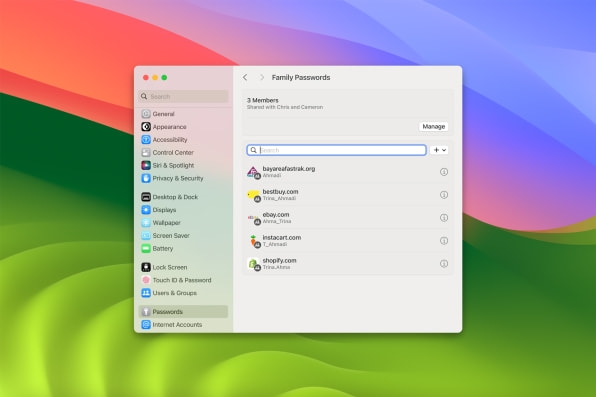

There are two other privacy features I find particularly noteworthy. One is password and passkey sharing. There are valid reasons why we may want to share an account password with friends or family members. Typically, we send that password in plain text via a message or email, making it vulnerable to prying eyes. But now, iPhone, iPad, and Mac users will be able to securely share passwords and passkeys with whomever they choose without exposing the password in a message. Best of all, the person with whom you share the password will also receive the synced two-factor authentication code in real time if one is associated with the password-protected account.

The other is Live Voicemail: When someone calls and leaves you a voicemail (ugh), a transcription of the message automatically appears on your lock screen in real time as the caller is leaving it. If you want to talk to the caller, you can pick up the live call. And since you can see the voicemail as it is being left, you can also tell immediately if it’s from a marketer, which you can then ignore or block.

Of all the features we discussed, Federighi says he’s probably most personally interested in Check In; he looks forward to seeing the benefits the new safety feature brings to users.

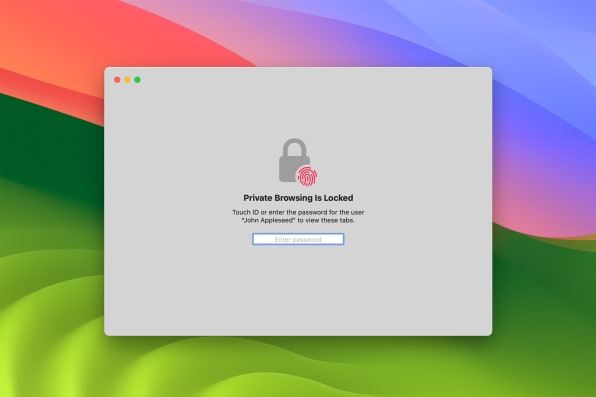

But when it comes to the new privacy features, Apple’s software chief is visibly enthusiastic about the improvements Apple is making specifically for Safari, which include an awesome new feature I haven’t mentioned yet: the auto-locking of private browsing tabs when they are not in use. This will be super helpful for people who work in environments where they may step away from their desks for a while. When they do, their Safari private browsing tabs will auto-lock and they’ll stay that way until the user authenticates with Touch ID or their Mac’s password to unlock them again. The feature is also available in Safari on iPhone and iPad.

“I am excited about the things we’re doing in Safari,” Federighi says, “because Safari has been a somewhat unheralded pioneer of private browsing, and so many privacy and security features, and this year it’s just a tour de force. The link protection, fingerprinting protections, auto-locking private browsing windows when you don’t use them for a while. . . . Browsing the internet is one of the major privacy threat vectors. And this is a huge, huge year” for Safari.

Following today’s WWDC preview, Apple has made beta versions of iOS 17, iPadOS 17, watchOS 10, and macOS Sonoma available to developers. Public betas for interested non-developers who want to get their hands on the new software as soon as possible will be released later this summer, and all of Apple’s new privacy and safety features will be available to iPhone, iPad, Apple Watch, and Mac owners this fall.
