Exclusive: Microsoft aims for the holy grail—video conferencing that actually works

Two things are said on nearly every video conference meeting: “Can you see my screen?” and “Can you move closer to the microphone?”

Tele-meetings, especially ones with more than five people, are clunky at best and headache-inducing at worst. In a typical scenario, 10 people are gathered in a room at the home office, and seven or eight souls who are traveling or working from home have to call in or Skype in from their laptops. Some of them keep the mic on mute and the camera off, making them purely passive participants. Since the remote people often can’t see the faces of the other participants, they have no idea when it might be their turn to speak. When they break into the discussion, it sounds like this: “Hi hey hi hey hello? Hello? It’s Ed in Phoenix, can you hear me?” And they can’t see the body language of the people on the other end, so they have no idea if their comments are eliciting nods, shrugs, or blank stares.

It’s a miserable experience, one that many companies have tried to solve over the years to no avail. Now, Microsoft thinks it may have the answer: a new technology that enhances collaboration rather than putting up barriers to it.

[Photo: Mark Sullivan]

Microsoft demonstrated its new meeting technology in a mocked-up conference room set during one of the keynotes at its Ignite conference Monday in Orlando. (The demo was originally scheduled for CEO Satya Nadella’s keynote but was moved in a last-minute change.) I was allowed into one of Microsoft’s labs to watch one of the rehearsals for the presentation.

Some of the technology I saw is now generally available, some of it will be released later this year, and some of it is still very much in the experimental stage. The meeting technology pulls in the work of many of Microsoft’s groups, including Office 365, Teams, Bing, Cognitive Services (AI), and even HoloLens.

Before the meeting

Good meetings get the right people talking about the right things at the right times. Microsoft believes that process begins long before the meeting itself.

Microsoft’s idea is that every meeting should be represented by a place in the cloud where all relevant information about both the subject of the meeting and the people involved can be assembled. It’s a more advanced and resource-rich version of the old calendar event. This “meeting object” is used for communication and collaboration before, during, and after the meeting, Microsoft CVP Brian MacDonald told me. Before the meeting, the Microsoft AI might add or suggest logistical information, information about the participants, and relevant files and background information. New files might be added during the meeting, like collaboration notes and a real-time transcript of the discussion. Post-meeting notes like action items can be added, along with follow-up notes and communications.

[Image: courtesy of Microsoft]

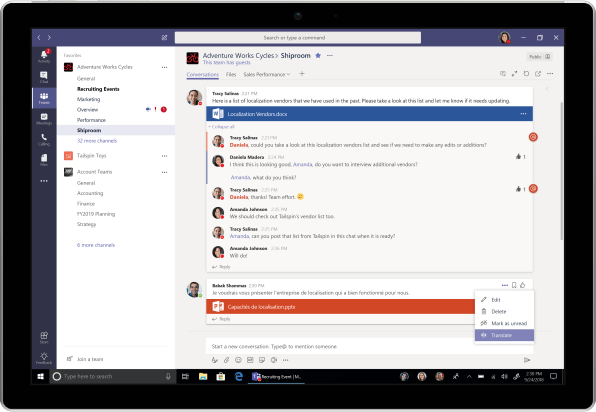

The meeting object often originates from within Microsoft Teams, the company’s Slack-like communications and collaboration environment. Teams has been available for about two years and, Microsoft says, is used by 329,000 organizations globally, including 87 of the Fortune 100.

Microsoft’s MacDonald, who originally proposed the Teams idea to CEO Satya Nadella and now leads the Teams group, told me to think of Teams as the central hub of all the apps and services within Microsoft 365 (which includes all desktop and cloud-based versions of Word, Excel, Edge, and many others).

“Anytime we talk about meetings, most of that will show up on Teams,” MacDonald said.

That message wasn’t lost on people who watched the presentation at the Ignite conference. “Microsoft’s latest announcements at Ignite highlight the ongoing efforts to make Teams the hub for communications, collaborative working and getting work done . . .” said CCS Insight analyst Angela Ashenden in a statement. “Microsoft Teams is increasingly becoming the focal point for employees within Office 365.”

Teams

Meetings can originate from Teams in a couple of different ways. If a few people are discussing an issue in a Teams channel, there’s always a button nearby to escalate the conversation to a live video chat. There’s another button in the chat window that initiates a formal meeting and starts a meeting object that begins attracting data relevant to the meeting.

In the center of the demo’s mocked-up conference room is a mysterious cone-shaped object with a fish-eye lens at its tip. It’s a prototype device that isn’t on the market yet, and its job is to watch and listen to the people sitting around the table. At the start of the demo, you see a screen on the large monitor at the front of the room with a strip at the top showing the 360-degree view from the fish-eye lens, with name labels next to all four people at the table.

Below that, in a left pane, you see a transcript of the meeting being generated in real time by the camera and some natural language AI. The cone-shaped device uses both sight and sound to identify the faces and voices of the people sitting around the table, so it knows who’s talking at any time (even when people are talking at the same time). The system could also translate the words it heard into another language. The right pane on the screen was reserved for “insights and notes” that anybody in the meeting could create.

The screen was used to display lots of things. Anybody in the meeting could project the screen of their laptop (Surfaces all around) onto the display. At one point a Cortana assistant bot was called up to help arrange a future meeting.

That stuff was mostly to express Microsoft’s vision for meetings. It’s not stuff you can buy for your company today. But the demo also showed some things that are ready for prime time.

Search everywhere

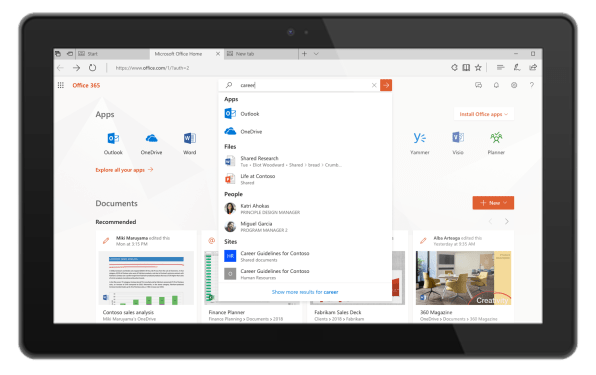

To get the right people talking about the right things during meetings, search is pretty important. You need to be able to grab details about invitees, logistical information, relevant documents, and contextual information like previous discussions that have taken place on the subject of the meeting. This information usually comes both from the open web and from digital assets behind the company’s firewall.

So Microsoft has been working to extend the reach of its search engine to the web for more general and public information (via Bing), into LinkedIn for professional information about people inside and outside the company, and into private company information.

A meeting attendee, if logged in with an Office 365 account, might look up someone who will also be present, to understand who they’ll be talking to. A search within Microsoft 365 can find the person’s office location, an org chart, files the person has shared publicly, or a LinkedIn post they wrote. A Conversations view shows the discussions the person has been having in Microsoft’s Yammer chat app and in Teams.

[Image: courtesy of Microsoft]

The search engine can now also return results from within third-party apps the company may use, like SAP or Adobe.

At one point in the demo, one of the attendees suddenly wondered if he could take the wife and kids with him on the annual sales getaway. He typed, “Can I take my wife and kids along with me on this business vacation?” into a search box at the top of the PowerPoint screen he happened to be working in (Microsoft has now put the search box at the top of all its productivity apps). The man got back a mix of links to work-related and non-work-related content. Across the top of the screen he saw four cards: bookmarks (to company docs), groups (workgroups), files, and relevant company intranet sites. Those links took care of the company policy part of the query. Below them, Bing returned links to relevant supplemental information from the open web, such as information on locations, flights, and hotels.

“Using AI from the Bing consumer search engine, we can deliver search results that are more personalized, relevant, and consumerized,” said Naomi Moneypenny, director of product management for Teamwork and Search.

Building the company brain

The AI underneath Microsoft 365 is always learning from the “signals” it receives from the apps that a client uses. All those signals, which could reflect the way a company plans meetings, shares documents, or secures strategy documents, go into a graph, which can be thought of as the company’s brain, Microsoft says. The AI (borrowed from Bing) constantly draws on information from the graph to learn, analyze, and make recommendations based on the work habits of the company. When a user is organizing a meeting, she might see search results that are informed, in part, by meeting organization habits detected in the past. If, for example, a certain set of people has usually met in a certain conference room, the AI might suggest that location for future meetings of the same people.

“Microsoft Graph is really the way we learn about the way organizations are using content, and how they meet, and how they collaborate,” Moneypenny said. “We can learn their everyday work patterns.”
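Microsoft hasn’t said how the graph turns past habits into a suggestion. As a rough illustration only, the simplest version of the conference-room example is a frequency count over past meetings with the same attendees. The `suggest_room` function and the meeting-history format below are hypothetical and are not part of Microsoft Graph or any Microsoft API.

```python
from collections import Counter

# Hypothetical meeting history as (attendee set, room) pairs.
# This is an illustrative format, not how Microsoft Graph stores data.
PAST_MEETINGS = [
    (frozenset({"ana", "ben", "chen"}), "Room 4B"),
    (frozenset({"ana", "ben", "chen"}), "Room 4B"),
    (frozenset({"ana", "ben", "chen"}), "Room 2A"),
    (frozenset({"ana", "dev"}), "Room 1C"),
]


def suggest_room(attendees, history):
    """Return the room most often used by exactly this group, or None."""
    group = frozenset(attendees)
    # Count only meetings whose attendee set matches this group exactly.
    counts = Counter(room for people, room in history if people == group)
    if not counts:
        return None  # no history for this group, so no suggestion
    room, _ = counts.most_common(1)[0]
    return room


print(suggest_room(["chen", "ana", "ben"], PAST_MEETINGS))  # Room 4B
```

A real system would weigh far more signals than an exact attendee match; the point of the sketch is only that “habits detected in the past” can be as simple as counting what a group has done before.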

Microsoft says it’ll be rolling out the expanded search to Microsoft 365 apps, Bing.com, Office.com, and the SharePoint mobile app. And it will start showing up in Microsoft Edge, Windows, and Office in the future.

Video meetings get smart

Microsoft has added a new feature to video meetings that allows participants to blur the background of their location. This can keep other participants from seeing that the user is linking in from a Starbucks or that a cat or baby is in the room. An unbusinesslike or unpredictable background can discourage people from keeping their camera on during a meeting, which can partly shut them out of the discussion.

Blurring the background of a still image is pretty easy. Doing it in live video involves some fairly serious artificial intelligence, points out Lori Wright, general manager of Microsoft 365 teamwork and collaboration tools. “AI is letting us know what’s your face and what isn’t,” Wright explains. “And the AI has to do it every time you move.”

[Photo: Mark Sullivan]

One person in the demo meeting showed how Microsoft has added a meeting recording feature that lets users play back recorded meeting content anytime, including on a mobile device. The recordings include the transcript (or translation) of the meeting, which carries time codes so that you can click on a certain sentence and go right to that part of the video. And you can search for words or topics within the meeting, like your own name or the name of a client.
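Because each line of the transcript carries a time code, jumping to a sentence or searching a recording comes down to a lookup over timestamped segments. The sketch below is hypothetical: the `Segment` type and the `find_mentions` and `format_timestamp` functions are illustrative names, not Teams or Microsoft Stream APIs, and it uses a plain case-insensitive substring match rather than whatever search Microsoft actually runs.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    start_seconds: float  # offset into the recording where this line begins
    speaker: str
    text: str


def find_mentions(transcript, query):
    """Return the start offsets of every segment whose text mentions query."""
    q = query.lower()
    return [seg.start_seconds for seg in transcript if q in seg.text.lower()]


def format_timestamp(seconds):
    """Render an offset as H:MM:SS, the kind of value a player could seek to."""
    total = int(seconds)
    hours, rem = divmod(total, 3600)
    minutes, secs = divmod(rem, 60)
    return f"{hours}:{minutes:02d}:{secs:02d}"


transcript = [
    Segment(12.0, "Ed", "Hi, it's Ed in Phoenix."),
    Segment(95.5, "Ana", "Let's review the Contoso proposal."),
    Segment(1830.0, "Ed", "Contoso wants the numbers by Friday."),
]

for offset in find_mentions(transcript, "contoso"):
    print(format_timestamp(offset))
# 0:01:35
# 0:30:30
```

Clicking a sentence is the same idea in reverse: the player seeks to that segment’s `start_seconds`.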

“This is my Netflix binging of my work life,” says Raanah Amjadi, product marketing manager for Teams and Skype.

Later this year, Microsoft 365 users will be able to prepare and launch live streaming events from within Teams, Microsoft Stream, or the Yammer chat app. This is a great way for management and others to reach out to far-flung workforces. People in different time zones can use the Stream mobile app (iOS and Android) to replay the event, even when offline.

A new Surface Hub

At one point in the demo, two of the team members switched over to the new Surface Hub 2 digital whiteboard to work on a project together. The two were able to log into the device by touching a fingerprint reader on the bottom edge of the screen. Since the Hub then had both users’ account credentials, they were both able to bring up one of their own documents on the same screen. They were also able to move content back and forth from one user’s document to another. So, for example, User B might pull a statistic into their PowerPoint presentation from User A’s Word or Excel doc. Microsoft says it’ll release the Surface Hub 2 in the second quarter of 2019.

The beginnings of HoloMeetings

The coolest part of the demo was when two of the participants–one of them in another city–examined and discussed a 3D virtual object using HoloLens. In the demo scenario, the remote person was the manager of a smart building who could see from the sensor data that seven of his building’s conference rooms weren’t being used very much. The fictional company in the demo wanted to help the man (their client) find out why. So the building manager and one of the fictional company’s engineers strapped on their HoloLens headsets to look at a 3D virtual model of the building together. They were both able to pinch and drag the model to view it in different ways.

The other people in the meeting couldn’t control the 3D building image, but they could see it on a monitor in the room. To do this they used third-party camera software that positioned the people and digital objects from HoloLens within the meeting setting (seen by a camera up in the corner of the room). In the view on the monitor, the building manager’s image sat at the head of the table in front of the building model. His image was far from sharp; he was heavily pixelated, but it was good enough to act as an avatar.

By looking at the 3D model, the fictional engineer and the fictional building manager discovered that all seven of the unpopular rooms were exposed to bright sunlight every day. A theory was floated that those rooms were too hot, a problem easily fixed with window coverings and AC. Had the people merely been looking at the problem represented as numbers in a spreadsheet, they might have seen the what but not the why. Some problems are like that.

The HoloLens experience shown in the demo isn’t available today. It’s still a little clunky, and Microsoft is working on it. For now, HoloLens is meant for frontline workers who need to access information without moving their hands, or gaze, from the thing they’re working on. Remote workers may one day attend meetings using virtual reality or augmented reality.

The life cycle of a meeting

Some of us dread meetings because they eat up time during the day. But they’re still important; they’re where decisions get made. They’re where action items get created and assigned, and where people are motivated to carry them out.

Most good meetings come down to the organizer preparing well for the meeting, says Moneypenny. “If I’m a great meeting organizer, and I take the time to schedule the right attendees, and if I’ve gotten all prior meeting notes, and I’ve made sure everyone has the right information, those are all good things.”

During the meeting, Moneypenny says, the organizer has to make sure everyone can hear, that there are no translation or communication barriers, and that the right action items are being captured. After the meeting they have to make sure the action items get distributed.

“Those are all things we require humans to do today, so there’s a lot of variation in how things are done,” Moneypenny said, “because I’m depending on how and when a human does it.” Microsoft wants to eventually have intelligent bots take over much of the work of meeting planning. “Artificial intelligence can come in and remove that variation and do those tasks for us,” she says.

Some of the meeting-related pain points Microsoft is addressing seem pretty basic. But the company is interested in moving past addressing pain points and toward creating tools that make meetings more productive than most people have seen before. This may mean a situation where you put on a headset, and suddenly you’re dropped into a meeting that looks so real that you forget you’re in a virtual space.

That may be a far-away goal, but it shows how much better meetings can get. And because of its stronghold in the workplace, Microsoft is well-positioned to pursue it.
