Facebook announces the winner of its Deepfake Detection Challenge

In September of 2019, Facebook launched its Deepfake Detection Challenge (DFDC) — a public contest to develop autonomous algorithmic detection systems to combat the emerging threat of deepfake videos. After nearly a year, the social media platform announced the winners of the challenge, out of a pool of more than 2,000 global competitors.

Deepfakes present a unique challenge to social media platforms. They can be produced with little more than a consumer-grade GPU and software downloaded from the internet. With these tools, individuals can quickly and easily create fraudulent video clips whose subjects appear to say or do things that they never actually did. Facebook’s challenge seeks to combat this misinformation at scale by automatically detecting and flagging potentially offending videos for further review.

“Honestly I was pretty personally frustrated with how much time and energy smart researchers were putting into making better deepfakes without the commensurate sort of investment in detection methodologies and combating the bad use of them,” Facebook CTO Mike Schroepfer told reporters on Thursday. “We tried to think about a way to catalyze, not just our own investment, but a more broad industry focus on tools and technologies to help us detect these things, so that if they’re being used in malicious ways, we have scaled approaches to combat them.”

Hence the Deepfake Detection Challenge. Facebook spent around $10 million on the contest and hired more than 3,500 actors to generate thousands of videos, 38.5 days’ worth of data in total. The footage was the amateur, phone-shot sort you’d usually see on social media rather than the perfectly lit, studio-based videos created by influencers.

“Our personal interest in this is the sorts of videos that are shared on platforms like Facebook,” Schroepfer explained. “So those videos don’t tend to have professional lighting, aren’t in a studio — they’re outside, they’re in people’s homes — so we tried to mimic that as much as possible in the dataset.”

The company then gave these datasets to researchers. The first was a publicly available set; the second was a “black box” set of more than 10,000 videos with additional technical tricks baked in, such as adjusted frame rates and video quality, image overlays, and unrelated images interspersed throughout the video’s frames. It even included some benign, non-deepfake videos for good measure.

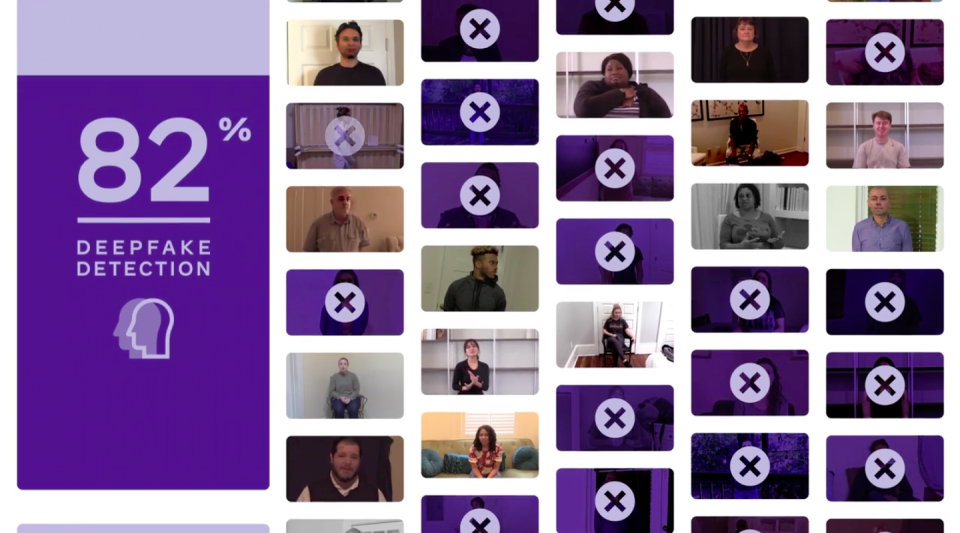

On the public dataset, competitors averaged just over 82 percent accuracy. On the black box set, however, the model of the winning entrant, Selim Seferbekov, averaged a skosh over 65 percent accuracy, despite the bevy of digital tricks and traps it had to contend with.

“The contest has been more of a success than I could have ever hoped for,” Schroepfer said. “We had 2000 participants who submitted 35,000 models. The first entries were basically 50 percent accurate, which is worse than useless. The first real ones were like 59 percent accurate and the winning models were 82 percent accurate.” More impressively, these advances came over the course of months rather than years, Schroepfer continued.

But don’t expect Facebook to roll out these various models on its site’s backend anytime soon. While the company does intend to release these models under an open source license, giving any enterprising software engineer free access to the code, Facebook already employs a deepfake detector of its own. This contest, Schroepfer explained, is designed to establish a sort of nominal detection capability within the industry.

“I think this was a really important point to get us from sort of zero to one to actually get some basic baselines out there,” he said. “And I think the general technique of spurring the industry together… We vectored our attention to that problem so we’ll see as we go, this general technique of using competitions to get people to focus on problems.”

“A lesson I learned the hard way over the last couple years, is I want to be prepared in advance and not be caught flat footed, so my whole aim with this is to be better prepared in case [deepfakes do] become a big issue,” Schroepfer continued. “It is currently not a big issue but not having tools to automatically detect and enforce a particular form of content, really limits our ability to do this well at scale.”
