Facebook pulls back the curtain on its content moderators

When someone reports an offensive post on Facebook (or asks for a review of a message caught by its automatic filters), where does it go? Part of the process is, as it always has been, powered by humans, with thousands of content reviewers around the world. Last year Facebook said it would expand the team to 7,500 people, and in an update posted today explaining more about their jobs, it appears that mark has been hit.

The team is that large so that reviewers are available to assess a post in its native language, although some items, like nudity, might be handled without regard to location. Of course, there’s extensive training and ongoing reviews to try to keep everyone consistent, although some would argue that the bar for consistency is misplaced.

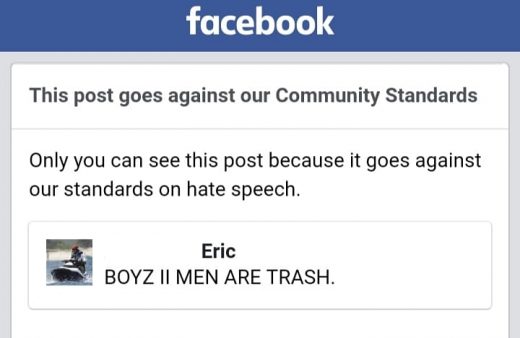

Facebook didn’t reveal too much about the individuals behind the moderation curtain, specifically citing the shooting at YouTube’s HQ, even though it has had firsthand experience with leaking identities to the wrong people before. It did, however, bring up how the moderators are treated, insisting they aren’t required to hit quotas while noting that they have full health benefits and access to mental health care. While it might not make understanding Facebook’s screening criteria any easier (or let us know if Michael Bivins is part of the rulemaking process), the post is a reminder that, at least for now, there is still a human side to the system.
