Facebook Reports Double-Digit Drop In Hate Speech And Harmful Content, Cites Advances In AI

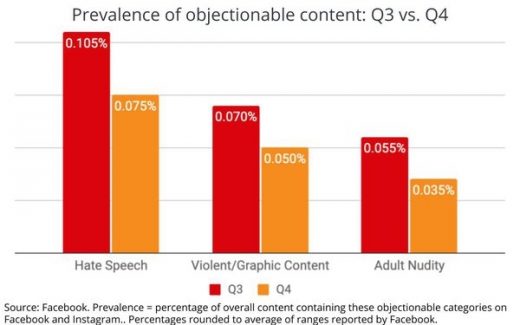

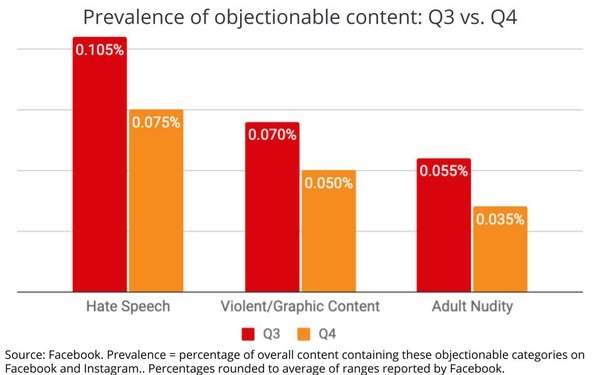

Facebook today released findings of its fourth-quarter 2020 Community Standards Enforcement Report showing marked improvements in the prevalence of objectionable content appearing on both Facebook and Instagram vs. what it disclosed in its third-quarter 2020 report.

The top lines are that the prevalence of all forms of objectionable content remains very low, and that it declined 29% for hate speech and violent or graphic content, and 36% for adult nudity. Much of today’s briefing, however, focused on additional steps the company is taking to improve its policies, processes and technology to thwart the distribution of harmful content across the social networks it operates.

While Facebook’s methods still are evolving, its executives said they believe the methods they are developing “lead the technology industry” and could well become standards adopted by the rest of the industry.

That said, they described their approach as continuing to evolve as the world around social media evolves, especially as it relates to the context of speech and social sharing, and to the new technologies, especially AI, used to detect and moderate that speech.

Facebook CTO Mike Schroepfer largely attributed the reduced prevalence of objectionable content to advances Facebook has made in AI, but said that understanding context remains a challenge, especially in multimedia cases like video and memes that combine text and images.

To illustrate the role of context, he cited an example of someone commenting “This is great news” in response to two different life moments: the birth of a child or the death of a loved one.

Facebook’s execs said that is the reason the company initiated a global “hateful memes challenge” as an “open source” competition to help inform its AI to better understand the context of objectionable content in a multimedia environment.

The team reiterated the volume of objectionable content, hate groups and pages already removed from its platforms and said the prevalence data is an indication of how well its approach is working.

They explicitly cited the removal of “militarized” social groups, as well as QAnon groups, content and pages.

They also reminded journalists of steps Facebook took proactively to help law enforcement before, during and after the January 6 Capitol insurgency, as well as recent updates Facebook was making to combat misinformation associated with the COVID-19 pandemic.
