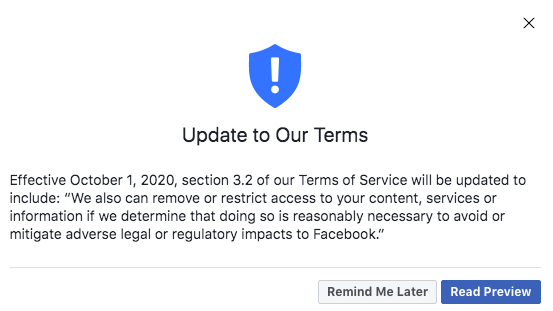

Many Facebook users this week had their user experience briefly interrupted by a pop-up message warning them of a forthcoming change to the platform’s terms of service.

“Effective October 1, 2020, section 3.2 of our Terms of Service will be updated to include: ‘We also can remove or restrict access to your content, services or information if we determine that doing so is reasonably necessary to avoid or mitigate adverse legal or regulatory impacts to Facebook.’”

The message was unusual, to say the least, as Facebook doesn’t typically slide into your timeline to let you know about one specific change it’s making to the volumes and volumes of rules that users agree to but never read. Unless you speak legalese, the message was also a bit cryptic. What kind of “regulatory impacts” is the world’s largest social networking service expecting?

The message has sparked speculation that Facebook is ramping up efforts to remove or censor objectionable content, or otherwise curtail the free exchange of ideas, in advance of the U.S. election. Such chatter is understandable, given that Facebook is not especially well trusted on issues such as transparency and data privacy. We thought we’d try to parse what’s happening. Here’s what we know:

Why did Facebook send this message?

Facebook is currently fighting a high-stakes legal battle in Australia, where lawmakers have proposed a rule that would allow news publishers to demand payment from social media companies for the content shared on their platforms in some situations. Facebook definitely does not want that—in a blog post Monday, it even threatened to prevent Australian users from sharing news on Facebook if the proposal becomes law.

But this TOS change is about more than Australia. If lawmakers are successful there, other countries could conceivably replicate the model, prompting a fundamental shift in the economic dynamics of user-generated sites where content is shared freely in exchange for eyeballs, clicks, and taps.

Reached for comment about the TOS change, a Facebook spokesperson said, “This global update provides more flexibility for us to change our services, including in Australia, to continue to operate and support our users in response to potential regulation or legal action.”

So this affects users outside of Australia too?

Yup. It’s global.

How big a change is this?

The language of the update is broad, but it doesn’t appear to mark a major shift in what Facebook has the power to do, according to Mason Kortz, a clinical instructor at the Harvard Law School Cyberlaw Clinic.

“Essentially what Facebook is saying is, ‘If governments try to make us responsible for certain types of content—including compensating content creators, as the proposed Australian regulations would do—we’re just going to delete the content instead,’” Kortz told Fast Company in an email. “This is a sweeping statement, but I don’t know if it’s a material change in Facebook’s power.”

He goes on to note that Facebook’s terms could already be reasonably interpreted to mean that it can arbitrarily remove content. And Facebook is, of course, a for-profit company that can set its own content guidelines.

What does this really mean for users?

It’s hard to predict how the fight in Australia, or regulatory fights elsewhere, will play out in the long run, but Facebook clearly wants us to think that this type of regulation will negatively impact the user experience.

“I think the plan here is rally their users against potential regulation, making potential regulators out to be the ‘bad guys,’” Kortz says. “Of course, this message is directed at governments as well: If you regulate us, we’re going to take our ball and go home.”
