Facebook’s Portal learned its video skills from some of Hollywood’s best cameramen

Facebook’s Portal home camera has its drawbacks–privacy concerns not least among them–but it does have one undeniably cool feature: its ability to intelligently frame shots and follow the action around a room during video calls. It’s a dramatic improvement over smartphone-based video calling, where it’s entirely up to the humans on both ends to position the cameras (which often results in plenty of jitter, wall views, and up-the-nose shots). With Portal, it’s more like a separate human camera operator is thoughtfully framing scenes, moving smoothly from one subject to another.

Portal relies on some fairly new thinking in computer vision AI to create that experience, much of it developed in just the last couple of years.

And, it turns out, a lot of human experience and expertise went into training the artificial intelligence that runs Portal’s camera. Portal’s AI has a good deal of film-industry knowledge embedded into the layers of its neural network. It knows, for example, what a “cowboy shot” is (a shot from mid-thigh up that shows not only the subject’s face, but what he’s packing in his holster). It knows when and how to focus in on people, and ignore the environment around them.

A lot of interesting ingredients–artificial and human–went into building Portal’s camera, and the company is just now talking about it. I spoke to three Facebook engineers who were intimately involved with the development of Portal, the social networking company’s first foray into hardware.

Faces and bodies

Portal’s key innovation is a lightweight computer vision model that recognizes not only the faces, but the bodies of the people in front of its camera.

Head and face detection in consumer technology is nothing new. Consumer cameras use computer vision to detect human heads or faces for auto-focus, for example (you may have seen the bounding boxes some systems apply when they recognize someone). But those simple systems don’t gather much information about a person’s body posture.

“If we just knew where you were and not anything about your body orientation–let’s say if you were lying down on the couch–it would be next to impossible for us to get a good shot or a close-up of your position,” said Portal engineer Eric Hwang.

The Portal engineers knew they’d need a computer vision model that could reliably recognize people’s heads and trunks and limbs, a model that would know to frame and track a shot of a person cooking in a kitchen differently than a group of people sitting around a table.

Facebook’s AI research team had already developed a computer vision model called Mask R-CNN (the “R-CNN” is short for “region-based convolutional neural network”) in April 2017 that could recognize people and their body poses in 2D images. But that model was designed to run on desktop GPUs, and Facebook wanted to run Portal’s computer vision model solely on a smaller mobile chip within the device. Having to constantly make calls to a cloud server running the model would surely create lag time in the video calls, the thinking went.

Dramatically scaling down the R-CNN model ended up being the biggest challenge the engineers had to overcome during Portal’s breakneck two-year development cycle. AI teams from across Facebook chipped in to find a solution, and one was eventually found. It was a process of slimming down, optimizing, and making trade-offs.

“We developed a set of processes that start with this big heavy neural network, and then refine and refine until it reduces the number of layers, reduces the number of channels within those layers–which all represent computation–until it fits within the [computing capability] envelope of the target architecture,” said Matt Uyttendaele, the director of Facebook’s Seattle-based Core AI team.
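To get a feel for why trimming layers and channels buys so much, here is a minimal back-of-the-envelope sketch. It is not Facebook’s process: the functions `conv_macs`, `network_macs`, and `slim_to_budget` are hypothetical, and the “network” is just a stack of identical 3x3 convolutions. It only illustrates the arithmetic Uyttendaele describes, in which compute scales with the number of layers and with the square of the channel count.

```python
def conv_macs(height, width, in_ch, out_ch, kernel=3):
    """Multiply-accumulates for one stride-1 convolution layer."""
    return height * width * in_ch * out_ch * kernel * kernel


def network_macs(height, width, channels, num_layers, kernel=3):
    """Cost of a toy network: num_layers identical conv layers."""
    return num_layers * conv_macs(height, width, channels, channels, kernel)


def slim_to_budget(height, width, channels, num_layers, budget_macs,
                   min_channels=8):
    """Alternately halve channels and drop a layer until under budget.

    Purely illustrative: a real pipeline would also retrain and
    re-measure accuracy after every change.
    """
    shrink_channels = True
    while network_macs(height, width, channels, num_layers) > budget_macs:
        if shrink_channels and channels > min_channels:
            channels //= 2
        elif num_layers > 1:
            num_layers -= 1
        elif channels > min_channels:
            channels //= 2
        else:
            break  # nothing left to trim
        shrink_channels = not shrink_channels
    return channels, num_layers


if __name__ == "__main__":
    full = network_macs(64, 64, 256, 16)
    print("full model MACs:", full)
    print("slimmed (channels, layers):",
          slim_to_budget(64, 64, 256, 16, budget_macs=full // 10))
```

Because each layer’s cost depends on input channels times output channels, halving the channel count cuts that layer’s compute to roughly a quarter, which is why channel reduction is such a strong lever when squeezing a model onto a mobile chip.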

The end result was a new model called Mask R-CNN2Go. It’s only a few megabytes in size, small enough to run on Qualcomm’s Snapdragon Neural Processing Engine. Facebook’s engineers say they worked closely with the chip maker to optimize the processor for Mask R-CNN2Go.

In practice, Portal’s scaled-down computer vision model analyzes every one of the 30 frames the camera shoots each second, looking for anything that might be a subject for a video shot. It outputs point data on heads, trunks, and limbs that then informs the composition of the shot. One of the model’s biggest jobs is knowing when to discount things that don’t matter, like a human face hanging on the wall in a picture frame. It has to know how to forget about a person who suddenly leaves the room, and how to ignore a person who is just walking by in the background so it can stay focused on the person talking in the foreground.
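A rough sketch of how point data might turn into a framing decision is below. Everything in it is an assumption made for illustration: the `Person` type, the `choose_crop` function, and the rule that treats anyone shorter than a quarter of the frame height as background are invented here, not taken from Portal’s actual algorithm.

```python
from dataclasses import dataclass


@dataclass
class Person:
    keypoints: list  # (x, y) pixel positions for head, trunk, and limbs


def bounding_box(points):
    """Smallest axis-aligned box containing all keypoints."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs), max(ys)


def choose_crop(people, frame_w, frame_h, min_height_frac=0.25, margin=0.1):
    """Return (left, top, right, bottom) for the shot.

    Hypothetical rule: people whose keypoints span less than
    min_height_frac of the frame height are treated as background
    and ignored; the crop wraps everyone else plus a margin.
    """
    min_height = min_height_frac * frame_h
    boxes = [bounding_box(p.keypoints) for p in people if p.keypoints]
    subjects = [b for b in boxes if (b[3] - b[1]) >= min_height]
    if not subjects:
        # Nobody to follow: fall back to the full wide shot.
        return (0.0, 0.0, float(frame_w), float(frame_h))

    left = min(b[0] for b in subjects)
    top = min(b[1] for b in subjects)
    right = max(b[2] for b in subjects)
    bottom = max(b[3] for b in subjects)
    pad_x = margin * (right - left)
    pad_y = margin * (bottom - top)
    # Clamp the padded box so it never leaves the sensor frame.
    return (max(0.0, left - pad_x), max(0.0, top - pad_y),
            min(float(frame_w), right + pad_x),
            min(float(frame_h), bottom + pad_y))


if __name__ == "__main__":
    talker = Person(keypoints=[(400, 200), (500, 600)])
    passerby = Person(keypoints=[(50, 100), (60, 150)])
    print(choose_crop([talker, passerby], 1280, 720))
```

This sketch ignores aspect ratio and does nothing to smooth the crop from frame to frame, both of which a real system would have to handle before the result looked like a deliberate camera move.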

All these little choices add up, and result in camera shots that can feel more natural and intimate.

“So as you’re moving around on a video call, the other person feels like they’re right there next to you,” Hwang said. “You don’t get that sense of distance you often get if you were to set up an iPad running FaceTime on a stand.”

The computer vision model behind Portal’s camera was trained on millions of open-source training images to teach it to recognize all kinds of people and postures. Facebook also provided some of its own training data because the open source material didn’t contain enough imagery from home settings where video calling will often take place.

Calling Hollywood

But even after the Facebook engineers had taught Portal to zoom, pan, and track to relevant subjects based on the 2D pose data, it still wasn’t quite right. The camera could frame shots around people in a logical way, but its movements still felt “stiff and mechanical,” say the engineers about the early prototypes. They knew they had to add some art to the science. And that’s when they called Hollywood.

Facebook’s engineers brought in camera operators, cinematographers, and documentary filmmakers to learn the tried-and-true techniques used by the pros to frame shots and follow action. Beyond the consultations, the Facebook engineers did a series of experiments to find out how the camera operators would respond to some of the unique challenges the Portal would face shooting in real time in a home environment. They asked the operators to shoot random scenes from odd or awkward camera positions to find out how they handled it–what they focused on, how they moved the camera. The Facebook people then boiled down the camera approaches they saw to a series of techniques that could be introduced into Portal’s algorithms.

“[There’s a] tendency to build a lot of framing based on the traditional one-on-one anchors of head shots and torso shots and all that,” said Facebook VP of hardware Rafa Camargo. “I think the team was able to get the camera to behave in sophisticated ways that require quite a bit of technique and understanding of the way human beings behave when we do tracking shots, and panning and zooming at the same time . . .” Camargo was the engineering lead of Google’s ATAP group until August 2016 when he was recruited to take over the Portal group at Facebook.

Those techniques may be technically sound and perhaps more complex, but part of the reason they’re pleasing to the eye may be because they create an effect that seems familiar.

“It feels so natural because the framing you’re used to seeing in TV or in movies actually they do it that way because over time we’ve learned that that’s what computes well on the human brain,” Camargo told me.

Facebook offers a basic Portal ($199) that shoots only in landscape mode, and a more expensive Portal Plus ($349) that can shoot in both landscape and portrait mode. The Portal Plus’s portrait mode is meant to be used for tighter one-to-one video conversations. That’s a very different experience from landscape, the engineers realized, requiring a different set of camera techniques. Camera operators normally shoot in–and cinematographers usually think in–landscape mode, but Facebook asked them to shoot a variety of portrait mode scenarios to see what kind of choices they made. They found, for instance, that in portrait mode, the operators based their compositions on the people in front of the camera, not the setting. Those lessons were also baked into the Portal Plus’s algorithms for portrait mode operation.

The engineers still had to make some value judgements on how to shoot certain situations, they told me. Scenario: A family is doing a video call with grandma, and a child jumps up from a mother’s lap and moves quickly over to a dark corner in the room. Should Portal’s eye follow the child or widen the shot to keep the child in the frame?

“There’s actually quite a bit of subjectivity that goes into deciding what the Smart Camera should be doing,” Hwang told me. “Some people would say if the child is moving too fast, maybe you don’t want them in the frame, but what turns out from our studies is that people (like Grandma) just generally want to see the people on the other side–especially the kid.”

Camargo points out that the user can exert some control over how the camera handles such things. There is a completely automated mode where the camera just naturally follows people around to include them in the frame. But they can also tell the camera to focus on one main person and ignore other people who may be coming in and out of the frame, he explained.

AR and the future

None of this may matter to you if you don’t own a Portal or plan to. In fact, as of now, you only get Portal’s full computer vision goodness if both you and the person on the other end of the video call have one of the devices (Facebook has two-Portal deals starting at $298). But the AI underpinning Portal may matter to you in the future.

Big tech companies like Google, Facebook, and Amazon know that the camera will play a huge role in the future of computing. Each is investing heavily in developing the brains behind the cameras and the platforms where the resulting content might be distributed.

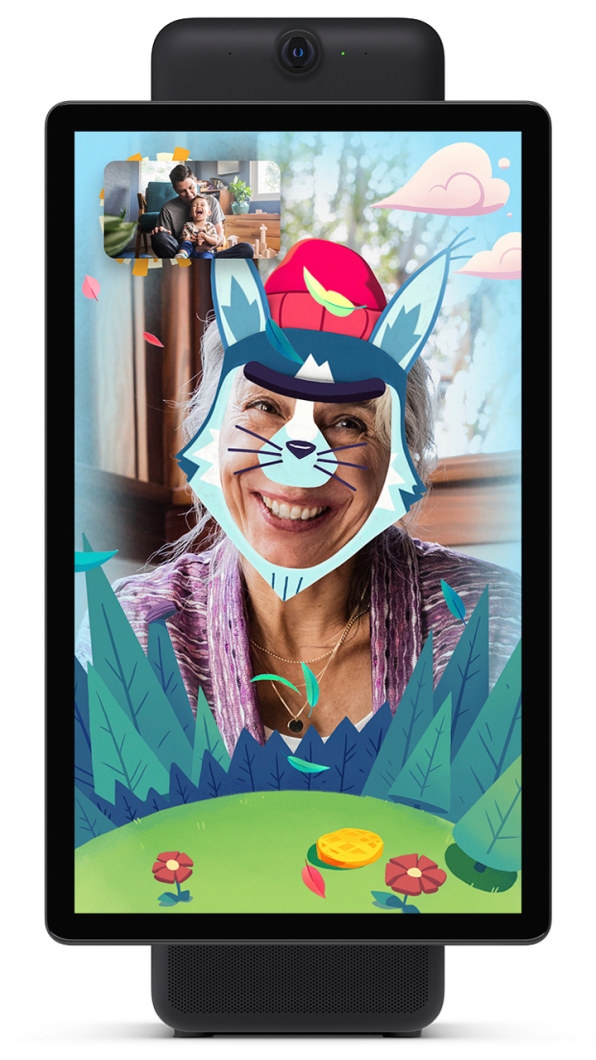

The camera will be at the center of what’s very likely the next great computer interface after the PC and the smartphone–augmented reality (AR), or the mixing of digital content with real-world content within a camera view. Right now that camera lens lives primarily on phones, but AR will get much more interesting when it begins to migrate to other devices–like glasses. Or home cameras like Portal. Facebook already used its own Spark AR platform to add AR effects to Portal’s Story Time interactive reading feature.

AR’s coolest applications probably haven’t even been thought of yet. But efficient and accurate face and body tracking will likely be a big part of it. Facebook is now testing the Mask R-CNN2Go model on mobile phones, which could pave the way for some interesting experiences. A user may be able to cast a moving image of their own body into an augmented reality space and even dress it up in digital accessories. Or cover the face with a digital mask. The AI might let users control mobile games with their body movements.

In the nearer term, Facebook’s smart camera technology will start to show up in other contexts. Right now it runs within Facebook Messenger exclusively, but the engineers are already working on a version that runs within WhatsApp.

The Portal hardware may not end up being a huge hit, but the AI inside it probably has a big future.
