Five Things Amazon’s Alexa Needs to Make Sense On Smartphones

Ever since the failure of its Fire phone, Amazon has needed a way to embed its services more deeply into smartphones. Now, the company has an opportunity in Alexa, its virtual assistant that’s become a hit through the Amazon Echo speaker.

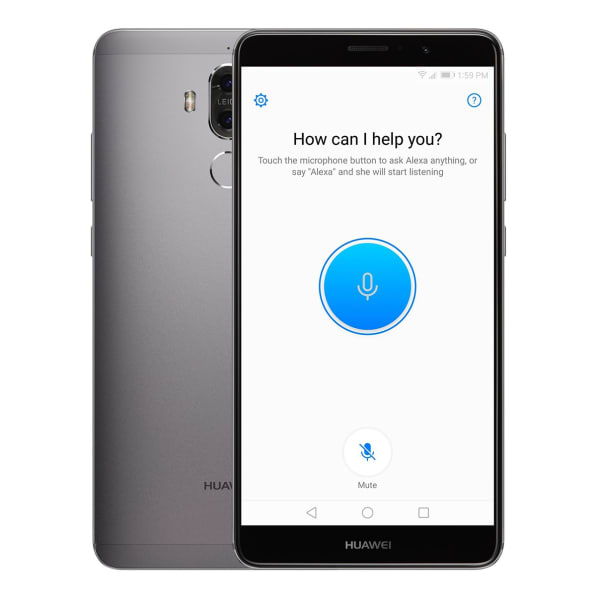

Last month, Huawei’s Mate 9 became the first smartphone to include an app for Alexa voice commands, and Lenovo says it will add Alexa integration to Motorola phones later this year. (Amazon has also added Alexa to its main shopping app for iOS.)

But while Alexa is an indispensable presence in smart homes, it’s practically a proof-of-concept on phones. Based on my experience with the Mate 9, Alexa falls short of other virtual assistants, such as Google Assistant and Apple’s Siri, in too many ways.

Here’s what Alexa still needs to make sense on smartphones:

1. Quicker Activation

Most Android phones can summon Google when the user holds down the home button, but bringing up Alexa on the Mate 9 isn’t so simple. Users must either tap the Alexa app icon or draw a customizable “knuckle gesture,” which involves knocking on the screen and scribbling a letter. Neither approach feels frictionless, and in both cases, users must then tap a button or say “Alexa” within the app to begin a voice command. A dedicated hardware button or hands-free voice activation would make Alexa much easier to access.

Phone makers may be somewhat limited in where they can put Alexa, due to Google’s conditions for including the company’s services and app store on Android devices. One of those conditions, according to The Information, is that Google must be the phone’s default virtual assistant and voice search provider. If phone makers want to make Alexa more accessible, they’ll need to come up with workarounds. (Lenovo has announced that users won’t have to unlock their phones to summon Alexa, so it must have something in mind. And Samsung’s new Galaxy S8 and S8+ have dedicated buttons for the company’s upcoming Bixby Voice, showing that it’s possible for Android phones to have quick access to an assistant that isn’t Google Assistant.)

2. Visual Responses

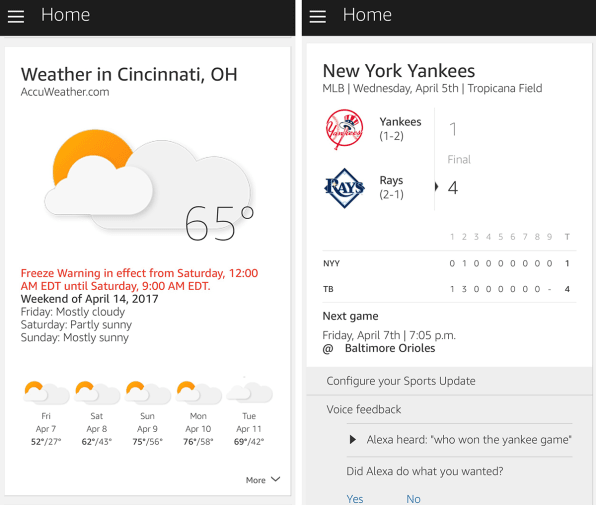

Although the Mate 9 has a massive 5.9-inch display, Alexa doesn’t put the screen to use. Amazon’s assistant only responds to questions by voice, so you can’t just glance at the long-range forecast or a box score. And for Amazon.com listings and Yelp suggestions, there’s no convenient way to get more information about what you just searched for.

This situation will likely improve in the future. Amazon’s Fire TV devices and Fire tablets already provide an onscreen presence for Alexa, and in January, Amazon announced that it would extend this capability to devices made by other companies. Alexa will feel more useful on phones once it’s possible to see the assistant’s responses on the screen.

3. Phone-Friendly Skills

Even if Alexa were easier to access on the Mate 9, it still wouldn’t support all the things you’d want to accomplish on a smartphone. Some examples: You can’t get directions to or from a specific place, dictate or listen to text messages, initiate a phone call, adjust phone settings, or set time- and location-based reminders. The Mate 9 also doesn’t support some major functions that do work on the Echo, such as music playback and alarms.

Granted, some of those functions are already available with existing smartphone assistants, such as Google Assistant and Siri. But how many users will really want to think about which assistant to summon based on what they’re trying to accomplish? Alexa needs to be equal to or better than what’s already available if users are going to bother with it at all.

4. Deep Links To Other Apps

Once Alexa starts gaining more phone-friendly functions, it’ll also have to do better at connecting with existing smartphone apps. If you ask to play a song in Pandora, for instance, Alexa on a smartphone should open the Pandora app, rather than playing music in its own self-contained Pandora pop-up. It should also link out to the phone’s default mapping app (on Android phones, that’s Google Maps) for directions, and present users with a link to the phone’s calendar app for further options after creating an appointment.

To some extent, Alexa is already capable of this. On the Mate 9, if you ask for restaurant recommendations on Yelp, for instance, you can then open the Alexa companion app to view a log of all past requests. From there, you can tap on Yelp’s suggestions to view them within the Yelp app. But in other instances, such as calendars and maps, Alexa leaves users at a dead end.

5. A Proactive Element

In recent years, other virtual assistants have moved beyond simply responding to requests. Both Google Assistant and Microsoft’s Cortana, for instance, provide a feed of useful information in their apps, including flight delays, sports scores, package tracking, and traffic alerts. The idea is that a helpful assistant should sometimes provide information without being asked first.

Proactive alerts wouldn’t make much sense on a speaker like the Amazon Echo, since you wouldn’t want it blurting out information at random or to the wrong person, but they’re right at home on a smartphone.

The common thread here is one I’ve touched on before: Alexa has been successful in part because it was designed entirely around voice. Without the crutch of visual feedback, Amazon and third-party developers were forced to make sure the voice interactions were fast and easy to understand.

But this restriction is starting to become a liability as Alexa expands beyond speakers. Amazon has a lot more work to do if it wants to compete with Google Assistant and Siri on their native turf.
