If they aren’t careful—or potentially even if they are—TikTok’s youngest users could find themselves bombarded with dangerous video content within minutes of joining the platform, a new study claims.

The report, released Wednesday evening by the nonprofit Center for Countering Digital Hate (CCDH), aims to decode TikTok’s algorithm using accounts that posed as 13-year-old girls, among the platform’s most at-risk users. TikTok served harmful content every 39 seconds to the “standard” fake accounts, which were created as controls. But the group also created “vulnerable” fake accounts, ones that indicated a desire to lose weight. CCDH says these accounts were exposed to harmful content every 27 seconds.

Researchers set up a pair of accounts, one standard and one vulnerable, in each of four English-speaking countries: the United States, the U.K., Canada, and Australia. They scrolled each account’s For You feed, which serves an endless stream of videos whenever a user opens the app. According to TikTok, For You is “central to the TikTok experience” because it’s “where most of our users spend their time.” Every time a video relating to body image, mental health, eating disorders, or self-harm played, CCDH’s team liked it, then paused on it for 10 seconds. Everything else was skipped.

TikTok’s algorithm has made the app immensely popular and its owner, ByteDance, extremely valuable (currently $300 billion). But speaking to the press, CCDH chief executive Imran Ahmed called TikTok’s recommendations “the social media equivalent of razor blades in candy—a beautiful package, but absolutely lethal content presented within minutes to users.”

Researchers let a random female-name generator choose the standard accounts’ usernames. Vulnerable accounts got the same treatment, except that the unsubtle string “loseweight” was added to each name. All eight accounts were set up on new IP addresses using clean browsers with no cookies, so TikTok had no way to identify personal details or infer interests. Researchers watched 240 minutes’ worth of these accounts’ recommendations, which yielded a total of 595 “harmful” videos, according to CCDH.

Today, two-thirds of American teenagers are on TikTok, spending an average of 80 minutes a day on it. CCDH says standard accounts were exposed to pro-eating-disorder or self-harm content every 3.5 minutes, which works out to 23 exposures a day for the average teenage TikTok user. Vulnerable accounts, however, were shown 12 times more harmful content. While the standard accounts saw a total of six pro-suicide videos (1.5 per account over 30 minutes), the vulnerable accounts were bombarded with one every 97 seconds.
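The 23-a-day projection is simple division. The minimal Python sketch below reproduces it, along with the per-account figures, using only the numbers CCDH reports. It assumes, as the extrapolation appears to, that the rate observed in the test sessions holds across a full 80-minute day; the 30-minute session length is inferred from the 240-minute total spread across eight accounts.

```python
# Back-of-the-envelope check of the CCDH figures quoted above.
# Assumption: the rate seen in the test sessions holds for an
# entire 80-minute day of scrolling.

AVG_MINUTES_PER_DAY = 80      # average daily TikTok use by U.S. teens
MINUTES_PER_EXPOSURE = 3.5    # standard accounts: one harmful video every 3.5 minutes

daily_exposures = AVG_MINUTES_PER_DAY / MINUTES_PER_EXPOSURE
print(f"Projected daily exposures: {daily_exposures:.1f} (about {round(daily_exposures)})")

STANDARD_ACCOUNTS = 4         # one standard account in each of the four countries
PRO_SUICIDE_VIDEOS = 6        # total seen across all standard accounts
per_account = PRO_SUICIDE_VIDEOS / STANDARD_ACCOUNTS
print(f"Pro-suicide videos per standard account: {per_account}")

TOTAL_ACCOUNTS = 8            # four standard plus four vulnerable
MINUTES_PER_ACCOUNT = 30      # inferred session length: 240 minutes / 8 accounts
total_minutes = TOTAL_ACCOUNTS * MINUTES_PER_ACCOUNT
print(f"Total minutes of recommendations watched: {total_minutes}")
```

Since 80 / 3.5 is about 22.9, the rounded figure of 23 matches the one CCDH cites.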

CCDH’s definition of “harmful content,” which is broader than pro-eating-disorder (pro-ED) or pro-self-harm content, could admittedly draw criticism. It lumped educational and recovery material in with negative content. Its researchers say that in many cases they couldn’t determine a video’s intent, but the group’s larger argument is that even positive content can cause distress, and there’s no way to predict its effect on any given individual: that is why trigger warnings were invented.

An explosion of young users

Like all the major social platforms, TikTok, which last year hit one billion monthly active users (the largest share of whom are under drinking age), has for years had policies meant to eliminate harmful content. User guidelines ban pro-ED and pro-self-harm content, a policy enforced by a combination of AI and human moderators. Nevertheless, activists still accuse TikTok of doing too little to halt the spread of such content. Recently, the company has stepped up its efforts in response. Searches for glaring hashtags (#eatingdisorder, #anorexia, #proana, #thinspo) now redirect to a National Eating Disorders Association helpline. Teenage users reportedly get “higher default standards for user privacy and safety,” and policies have focused on them; for instance, they are no longer supposed to see ads for fasting or weight-loss cures.

Safeguards also got at least three refreshes over the past year. “Too much of anything, whether it’s animals, fitness tips, or personal well-being journeys, doesn’t fit with the diverse discovery experience we aim to create,” the company explained last December, announcing a push to “diversify” the content users see. Months later, it announced a ban on content promoting disordered eating: no more videos glorifying overexercise, short-term fasting, and the like. And in July, TikTok identified content types that “may be fine as a single video, but potentially problematic if viewed repeatedly,” a list that included “dieting, extreme fitness, sadness, and other well-being topics.”

Such policies may have made harmful content harder to find. But TikTok’s critics dispute whether they’ve shrunk it. “Our findings reveal a toxic environment for TikTok’s youngest users, intensified for its most vulnerable,” the CCDH report states.

TikTok now bans the obvious dangerous hashtags, along with blatant misspellings of them. But research indicates that savvy members of the pro-ED and pro-self-harm communities keep devising new coded tags to evade these bans in a game of whack-a-mole: #edsheeran may replace #eatingdisorder, and #sh may stand in for #selfharm. Posts often also include a hashtag like #fyf or #fyp, shortcuts creators use to boost a video’s visibility on the For You feed.

Separately, experts have argued that TikTok, like Facebook, can cocoon users inside “filter bubbles”: hyper-personalized feedback loops that distort reality and can even reaffirm negative traits that aren’t socially acceptable outside the bubble. TikTok has admitted its algorithm is capable of producing these bubbles, possibly reinforcing self-destructive tendencies in the process.

Elsewhere, these bubbles feed echo chambers and conspiracy theories. But the early teenage years are thought to be among the most impressionable of a person’s life. Per CCDH, this means that suggestible 13-year-olds prone to body-image problems are being set up for “every parent’s nightmare”: “Young people’s feeds are bombarded with harmful, harrowing content that can have a significant cumulative impact on their understanding of the world around them, and their physical and mental health.”

The minimum age to join TikTok is 13, as it is for many social media sites. Calls to raise the minimum age run across the political spectrum, ranging from progressives like Senator Ed Markey of Massachusetts to the evangelical group Focus on the Family.

How body-image struggles manifest online

Previous research shows that young people unhappy with their body image often work this struggle into their social-media usernames, and those names can also reveal overlapping mental-health issues. Examples one researcher found on social platforms included “all-i-eat-is-water,” “anasaved-me,” and “imsorrythatiwasborn.” One of the highest-profile recent cases, that of 14-year-old Molly Russell, involved the username “Idfc_nomore,” internet slang for “I don’t fucking care no more.” Russell took her life after viewing some 2,100 social-media posts about self-harm and depression. (Her father, Ian, is partnering with CCDH on this project.)

That pattern spurred CCDH to, in its words, “test if naming accounts on TikTok influenced the content shown on the For You feed.” The group believes its own findings suggest there’s at least a correlation.

Asked to clarify if usernames influence the algorithm, TikTok denied that they play any role in determining what appears on users’ For You feeds. A spokesperson also told Fast Company that the company believes CCDH’s methodology “does not reflect genuine behavior or viewing experiences of real people,” a reference to the fact that people generally watch a variety of videos when scrolling, not one category exclusively. “We’re mindful that triggering content is unique to each individual,” the spokesperson added, “and remain focused on fostering a safe and comfortable space for everyone.”

Yet CCDH disputes that the platform is safe even in broad terms, arguing that its researchers in effect stumbled onto a covert eating-disorder community with well over 10 billion views. Fast Company was allowed to view the videos the researchers scrolled through on the accounts’ feeds. (The researchers turned on Android’s built-in screen-recording feature for 30 minutes once the feeds were live.)

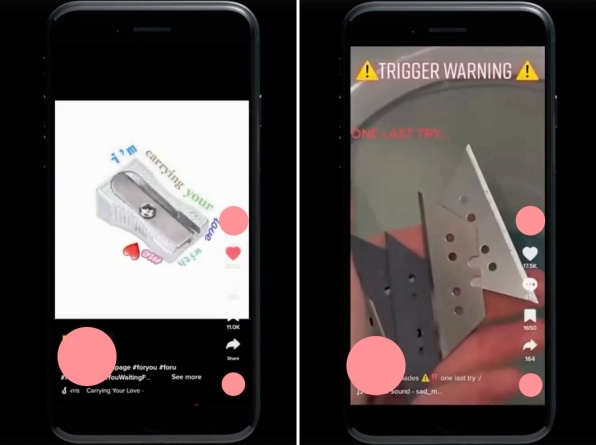

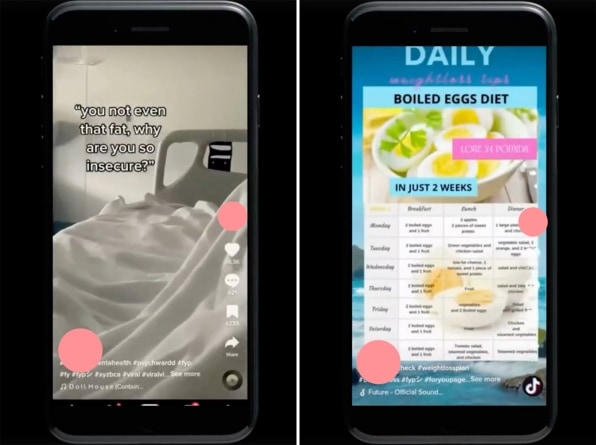

The videos (screenshots above) ranged from boiled-egg diets, weight-loss drinks for sale, and rebates for tummy tucks to montages of skinny bodies alongside the words “Man I’m so ugly,” clips of wrists, and a hospital-bed scene in which “You not even that fat, why are you so insecure?” flashes above #ed and #sh.

CCDH examined these posts’ hashtags, and researchers found 35 that they say linked to pages of pro-ED videos. Many used coded names like #wlaccount, #wlacc, #kpopwlacc, #edtøk, and #kcaltok, along with text stating “fake situation,” to fly under TikTok’s radar. Yet these communities were known to enough users to have racked up 60 million views.

Researchers identified another 21 hashtags that appeared on both positive and negative videos, a set that has drawn almost 13.2 billion views. On the press call, Ahmed asked why TikTok hasn’t attached warnings or links to resources to these 56 hashtags, instead of leaving them as “a place where recovery content mingles freely with content that promotes eating disorders.” He said CCDH is a small, 20-person team, and “we managed to do this research over a period of a couple months.”

One answer may be that TikTok is distracted: ByteDance, which is partly owned by China, has faced new geopolitical problems of late. Just this week, a bipartisan group of senators introduced a bill that would ban TikTok in the U.S. outright. Former President Donald Trump tried to do the same by executive order, and Biden administration officials have more recently claimed that TikTok poses a range of security concerns. The China ties have put TikTok in enough hot water to land Chief Operating Officer Vanessa Pappas in a Senate hearing in September. She stressed TikTok’s commitment to safety during her testimony, touting a newly introduced rating system meant to keep users from seeing videos deemed inappropriate, with a focus on “safeguarding the teen experience.”

The CCDH report calls her assurances “buzzword-laden empty promises that legislators, governments, and the public have all heard before.” TikTok is now the fastest-growing social platform, but its algorithm lacks transparency, CCDH argues, and many parents and even journalists don’t understand the potential dangers. “It has overtaken Instagram, Facebook, and YouTube in the bid for young people’s hearts, minds and screen time,” Ahmed said. “And the truth is most parents and adults understand very little about how it works.”
