Google and MIT prove social media can slow the spread of fake news

During the COVID-19 pandemic, the public has been battling a whole other threat: what U.N. Secretary-General António Guterres has called a “pandemic of misinformation.” Misleading propaganda and other fake news are easily shared on social networks, and that is threatening public health. As many as one in four adults has claimed they will not get the vaccine. And so while we finally have enough doses to reach herd immunity in the United States, too many people are worried about the vaccines (or skeptical that COVID-19 is even a dangerous disease) for the country to reach that threshold.

However, a new study out of the Massachusetts Institute of Technology and Google’s social technology incubator Jigsaw offers some hope for fixing misinformation on social networks. In a massive study involving 9,070 American participants (controlling for gender, race, and partisanship), researchers found that a few simple UI interventions can make people less likely to share fake news about COVID-19.

How? Not through “literacy” that teaches them the difference between reliable sources and lousy ones. And not through content that’s been “flagged” as false by fact checkers, as Facebook has attempted.

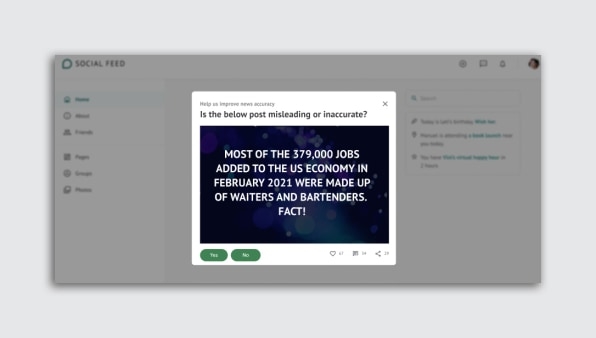

Instead, researchers introduced several different prompts through a simple popup window, all with a single goal: to get people to think about the accuracy of what they’re about to share. When primed to consider a story’s accuracy, people were up to 20% less likely to share a piece of fake news. “It’s not that we’ve come up with an intervention you give people once, and they’re set,” says MIT professor David Rand, who was lead author of the study. “Instead, the point is that the platforms are, by design, constantly distracting people from accuracy.”

At the beginning of the experiment, people were given a popup prompt, such as a request to rate the accuracy of a neutral headline. One example was, “‘Seinfeld’ is officially coming to Netflix.” This was simply to get them thinking about accuracy. Then they were presented with higher-stakes content related to COVID-19 and asked if they would share it. Examples of the COVID-19 headlines people had to parse were, “Vitamin C protects against coronavirus” (false) and “CDC: Coronavirus spread may last into 2021, but impact may be blunted” (true). People who were primed to think about the accuracy of headlines were less likely to share false COVID-19 content.

“A lot of the time, people can actually tell what’s true and false reasonably well. And people say, by and large, they don’t want to share inaccurate information,” Rand says. “But they may do it anyway because they’re distracted, because the social media context focuses their attention on other things [than accuracy].”

What other things? Baby photos. A frenemy’s new job announcement. The omnipresent social pressure of likes, shares, and follower counts. Rand explains that all of these things add up, and the very design of social media distracts us from our natural discernment.

“Even if you are someone who cares about accuracy and is generally a critical thinker, the social media context just turns that part of your brain off,” says Rand, who recounted discovering in the past year that he’d shared an inaccurate story online, even though he researches this very topic.

MIT pioneered the underlying theory. Then Jigsaw stepped in to collaborate on and fund the work, using its designers to build the prompts. Rocky Cole, research program manager at Jigsaw, says the idea is “in incubation” at the company, and he doesn’t imagine it being used in Google products until the company ensures the work has no unintended consequences. (Meanwhile, Google subsidiary YouTube is still a dangerous haven for extremist misinformation, promoted by its own recommendation algorithms.)

Through the research, MIT and Jigsaw developed and tested several small interventions that could help snap a person back into a sensible, discerning state of mind. One approach was called an “evaluation.” All that amounted to was asking someone to evaluate whether a sample headline seemed accurate, to the best of their knowledge. This primed their discerning mode. And when subjects saw a COVID-19 headline after being primed, they were far less likely to share misinformation.

Another approach was called “tips.” It was just a little box that urged the user to “Be skeptical of headlines. Investigate the source. Watch for unusual formatting. Check the evidence.” Yet another approach was called “importance,” and it simply asked users how important it is for them to share only accurate stories on social media. Each of these approaches curbed the sharing of misinformation by about 10%.

One approach that didn’t work on its own centered on partisan norms: a prompt explaining that both Republicans and Democrats feel it is important to share only accurate information on social media. Interestingly, when this “norms” approach was mixed with the “tips” approach or the “importance” approach, guess what? Tips and importance both became more effective. “The overall conclusion is you can do lots of different things that prime the concept of accuracy in different ways, and they all pretty much work,” Rand says. “You don’t need a special magical perfect way of doing it.”

The only problem is that we still don’t understand a key piece of the puzzle: How long do these prompts work? When do their effects wear off? Do users begin to tune them out?

“I’d hypothesize [these effects are] quite ephemeral,” Cole says. “The theory suggests people care about accuracy . . . but they see a cute cat video online and suddenly they’re not thinking about accuracy, they’re thinking about something else.” And the more you see accuracy prompts, the easier it is to ignore them.

These unknowns point to avenues for future research. In the meantime, we do know that we have tools at our disposal, which can be easily incorporated into social media platforms, to help curb the spread of misinformation.

To keep people sharing accurate information, sites may need a constant stream of novel ways to get users to think about accuracy. Rand points to a prompt Twitter released during the last presidential election. He considers this prompt to be a very good bit of design, as it asks readers if they want to read an article before retweeting it, which reminds them to think about accuracy. But Twitter has not updated the prompt in the many months since, and it’s probably less effective as a result, he says. “The first time [I saw that] it was like ‘Whoa! Shit!’” Rand says. “Now it’s like, ‘yeah, yeah.’”
