Google DeepMind Testing Personal Life AI Tools

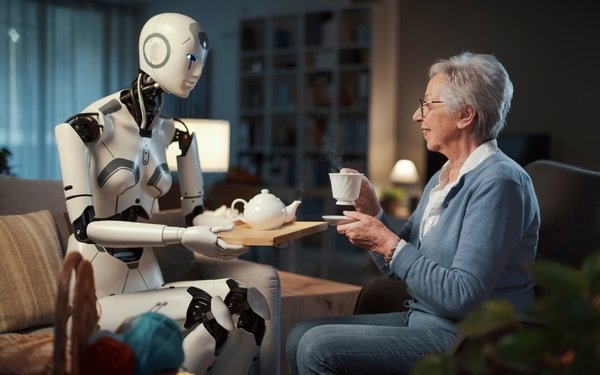

DeepMind, Google’s robotics and artificial intelligence (AI) company, is testing a new tool that could soon become a “personal life coach” for those seeking answers.

The project uses generative AI to perform at least 21 different types of personal and professional tasks, including life advice, ideas, planning instructions and tutoring tips. It is also being tested to determine how well the assistant can answer personal questions about people’s lives, according to documents seen by The New York Times.

For example, a user might one day ask the chatbot how to approach a friend with a sensitive topic. The tool has an idea-creation feature that will make suggestions or recommendations based on different situations.

Earlier this year, Google merged its Brain team from Google Research with DeepMind, combining its two primary AI research units into one.

The move brought together two powerful divisions at Google, despite experts’ warnings about the ethical concerns around AI and human relationships and mounting calls for regulation around the technology.

This AI life-coach option is apparently something DeepMind researchers have been working on for some time. In April, the company published a paper on how it builds human values into AI. But whose values are they, and how are they selected? Principles help to shape the way people live their lives and provide a sense of right and wrong. For AI, per DeepMind, they shape its approach to trade-offs in decision-making, such as the choice between prioritizing productivity and helping those most in need.

In the paper, published in the Proceedings of the National Academy of Sciences, the researchers turned to philosophy to find ways to better identify principles that guide AI behavior. In an experiment, the company used a concept known as the “veil of ignorance,” a thought experiment intended to help identify fair principles for group decisions that can be applied to AI.

The approach encouraged people to make decisions based on what they thought was fair, whether or not it benefited them directly. Participants were also more likely to select an AI that helped those who were most disadvantaged when they reasoned behind the veil of ignorance.
