Google Details How It Uses AI, Machine Learning To Improve Search

Google made several announcements on Thursday intended to transform the way people search and the way brands interact with consumers.

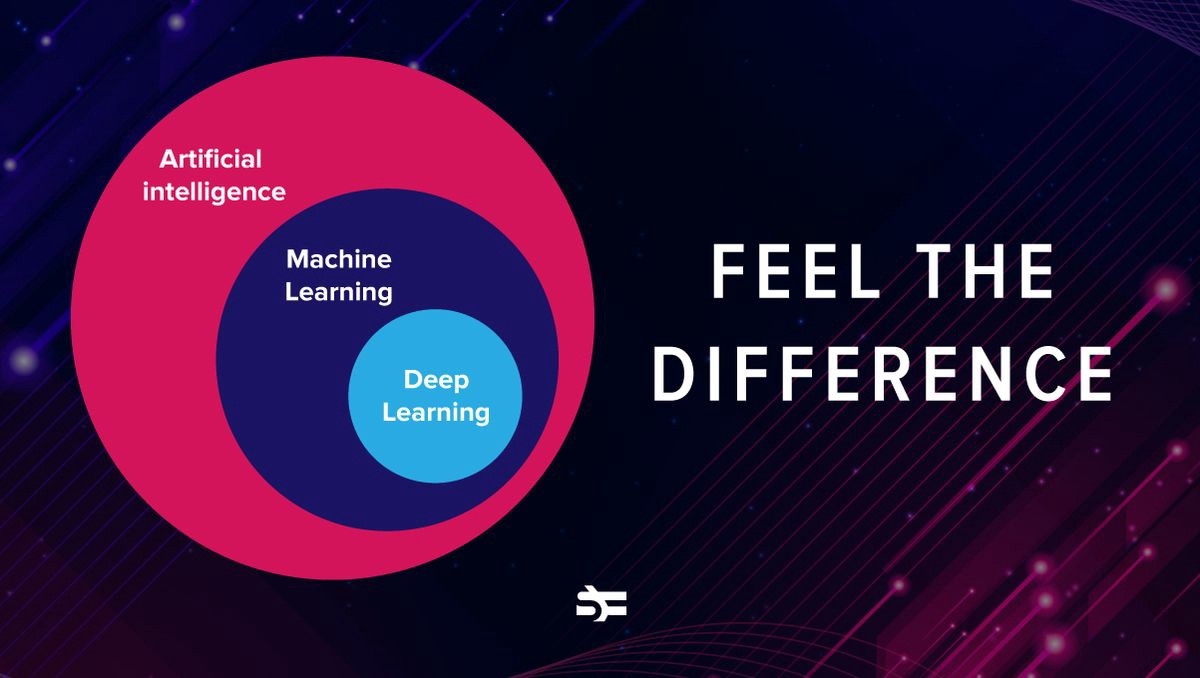

This was made possible through advancements in artificial intelligence (AI), including a new spelling algorithm, the ability to index individual passages from web pages, and new techniques to help people find a wider range of results. It also builds on investments in language-understanding research and last year’s introduction of BERT (Bidirectional Encoder Representations from Transformers), a Google technique for natural-language processing.

Technology like this has enabled Google to keep improving its ability to understand misspelled words. One in 10 queries each day is misspelled. A new spelling algorithm introduced today uses a deep neural net to significantly improve the ability to decipher misspellings. This one change will improve spelling more than all of Google’s improvements over the last five years, Cathy Edwards, vice president of engineering at Google, said during a virtual presentation.

“Perfect search is the Holy Grail and we will never stop in our quest,” she said. “Breakthroughs in AI have enabled us to make some of the biggest improvements ever.”

Google has spent the past 18 years building its spelling technology by studying the mistakes people make, and it uses that technology to predict what searchers actually intend. By the end of this month, Google will introduce a new spelling algorithm that uses a deep neural net with 680 million parameters to better model context and mistakes. It runs in under 3 milliseconds, which Edwards says is faster than one flap of a hummingbird’s wings.

Beyond spelling, AI is improving specific searches. It will help search marketers point to specific content in a page full of information.

It will also help Google to index individual passages in web pages — finding the needle in a haystack. Starting next month, this technology will improve 7% of search queries across all languages. It also will help with content in videos.

Some video creators mark key moments in their videos themselves, but this AI-driven approach lets people searching for information jump to the exact spot in a video. It can tag these moments so viewers can navigate them like chapters in a book.

Visual search also has been improved. While Lens already allows people to search for a product by taking a photo or screenshot, Google now lets consumers tap and hold an image in the Google app or Chrome on Android to find the exact item or similar ones. It also suggests ways to style it. This feature is coming soon to the Google app on iOS.

Soon Google will bring the car showroom to consumers’ homes using artificial intelligence and three-dimensional modeling. Porsche already offers an app that does this, but now the manufacturer is working with Google, along with Volvo.

While Lens can help people find and buy products, it also assists students in learning, which can be helpful during these times of home-schooling.

From the search bar in the Google app on Android and iOS, students can tap Lens to get help on a homework problem. A step-by-step guide and video can help students learn and understand the foundational concepts to solve math, chemistry, biology and physics problems.

Google has also introduced Hum to Search, where people can tap the microphone in the search bar and hum or whistle to find a specific song.

Here’s how it works. When someone hums a melody into Search, Google’s machine-learning models transform the audio into a number-based sequence representing the song’s melody. The models were trained to identify songs based on a variety of sources, including humans singing, whistling or humming, as well as studio recordings.
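To make the idea of a "number-based sequence" concrete, here is a minimal sketch in Python. It is not Google's method: Google describes machine-learning models, while this toy version only converts a list of note pitches into the steps between consecutive notes and picks the closest entry in a tiny catalog. The function names, the MIDI-style pitch numbers, the catalog contents and the distance measure are all assumptions made for illustration.

```python
from typing import Dict, List, Sequence


def melody_to_intervals(pitches: Sequence[int]) -> List[int]:
    """Turn absolute pitches (MIDI note numbers) into semitone steps.

    Using the steps between notes, rather than the notes themselves, means a
    tune hummed in a higher or lower key yields the same sequence.
    """
    return [later - earlier for earlier, later in zip(pitches, pitches[1:])]


def sequence_distance(a: Sequence[int], b: Sequence[int]) -> float:
    """Mean absolute difference over the overlapping part of two sequences."""
    overlap = min(len(a), len(b))
    if overlap == 0:
        return float("inf")
    return sum(abs(x - y) for x, y in zip(a, b)) / overlap


def identify(hummed: Sequence[int], catalog: Dict[str, Sequence[int]]) -> str:
    """Return the catalog title whose interval sequence is closest to the hum."""
    hummed_steps = melody_to_intervals(hummed)
    return min(
        catalog,
        key=lambda title: sequence_distance(
            hummed_steps, melody_to_intervals(catalog[title])
        ),
    )


# Hypothetical three-song catalog: opening notes as MIDI note numbers.
CATALOG: Dict[str, List[int]] = {
    "Twinkle Twinkle Little Star": [60, 60, 67, 67, 69, 69, 67],
    "Ode to Joy": [64, 64, 65, 67, 67, 65, 64, 62],
    "Happy Birthday": [67, 67, 69, 67, 72, 71],
}

# A hum of the first tune, three semitones higher, with one note slightly off.
hummed_notes = [63, 63, 70, 70, 73, 72, 70]
print(identify(hummed_notes, CATALOG))
```

A real system would first have to extract the melody from raw audio and cope with tempo changes and much larger errors, which is where the trained models described above come in.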
