Google has taught an AI to doodle

Hot on the heels of the company’s art and music generation program, Project Magenta, a pair of Google researchers have taught a neural network to sketch simple drawings all on its own.

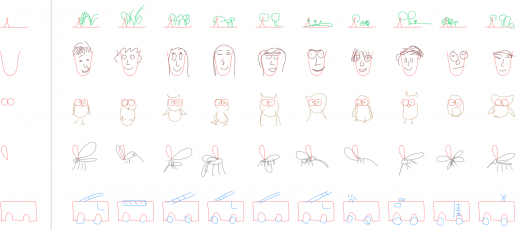

The researchers relied on data from Quick, Draw!, an app that asks users to draw a simple item and then guesses at what it could be. They used 75 classes of item (say owls, mosquitos, gardens or axes), each of which contained 70,000 individual examples, to teach their recurrent neural network (RNN) to draw them itself in vector format.

“We train our model on a dataset of hand-drawn sketches, each represented as a sequence of motor actions controlling a pen: which direction to move, when to lift the pen up, and when to stop drawing,” David Ha, one of the researchers, wrote in a recent blog post. The team also added noise to the data so that the model can’t directly copy the image, “but instead must learn to capture the essence of the sketch as a noisy latent vector.”
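To make the "sequence of motor actions" idea concrete, here is a minimal Python sketch of how a drawing made of pen strokes might be turned into such a sequence and back again. The article does not spell out the exact encoding, so the four-field layout `(dx, dy, pen_lifted, end_of_sketch)` and the function names `strokes_to_actions` and `actions_to_strokes` are illustrative assumptions, not the researchers' code.

```python
from typing import List, Tuple

Point = Tuple[float, float]
# Assumed layout: (dx, dy, pen_lifted, end_of_sketch).
# pen_lifted=1 means the pen leaves the paper after this move.
Action = Tuple[float, float, int, int]


def strokes_to_actions(strokes: List[List[Point]]) -> List[Action]:
    """Convert absolute stroke points into relative pen actions."""
    actions: List[Action] = []
    prev = (0.0, 0.0)  # the pen is assumed to start at the origin
    for stroke in strokes:
        for i, (x, y) in enumerate(stroke):
            lift = int(i == len(stroke) - 1)  # lift after a stroke's last point
            actions.append((x - prev[0], y - prev[1], lift, 0))
            prev = (x, y)
    if actions:
        dx, dy, lift, _ = actions[-1]
        actions[-1] = (dx, dy, lift, 1)  # mark where drawing stops
    return actions


def actions_to_strokes(actions: List[Action]) -> List[List[Point]]:
    """Replay pen actions to recover the absolute stroke points."""
    strokes: List[List[Point]] = []
    current: List[Point] = []
    x = y = 0.0
    for dx, dy, lift, end in actions:
        x, y = x + dx, y + dy
        current.append((x, y))
        if lift or end:
            strokes.append(current)
            current = []
        if end:
            break
    if current:
        strokes.append(current)
    return strokes


# A two-stroke "X": one diagonal, then the other.
cross = [[(0, 0), (3, 4)], [(3, 0), (0, 4)]]
actions = strokes_to_actions(cross)
```

Storing relative moves rather than absolute coordinates means the same drawing produces the same sequence wherever it sits on the canvas, which is one plausible reason to describe sketches this way.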

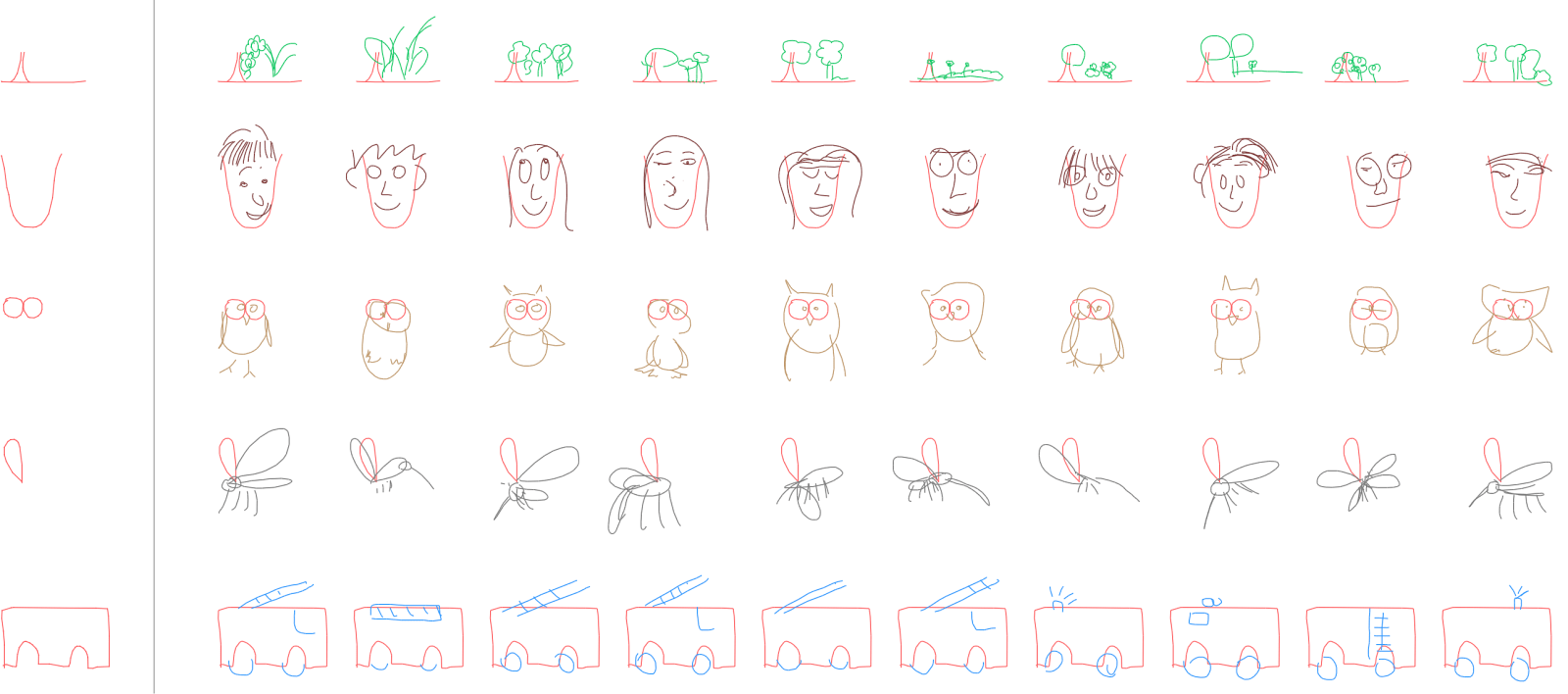

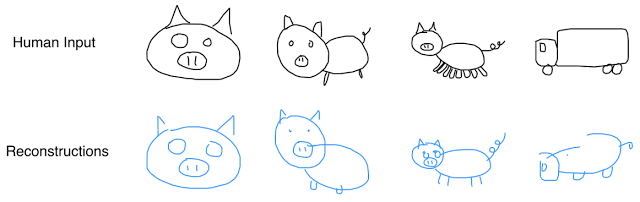

That is, the AI isn’t simply throwing together bits and pieces of images from its memorized dataset; it actually learned how to draw these objects. To prove this, the researchers fed a number of purposefully incorrect inputs to a model that had been taught to draw pigs. “When presented with an eight-legged pig, the model generates a similar pig with only four legs,” Ha wrote. “If we feed a truck into the pig-drawing model, we get a pig that looks a bit like the truck.”

What’s more, the AI can merge a pair of unrelated images to create a series of hybrids that blend features of both. It’s the same basic idea as the pig-truck above, but it can produce a large number of similar yet distinct designs. Once fully developed, this feature could be of great use to advertisers, graphic designers and textile manufacturers. Ha also figures that it could be used as a learning aid for people teaching themselves to draw.
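The article does not say how the merging is done. One common way to get a series of hybrids from a model that summarizes each sketch as a latent vector is to blend two such vectors in small steps and decode each blend. The Python sketch below assumes that approach and uses plain linear interpolation; the function name `interpolate` and the toy two-number vectors are hypothetical, and the decoding step that would turn each vector back into a drawing is left out.

```python
from typing import List


def interpolate(z_a: List[float], z_b: List[float], steps: int) -> List[List[float]]:
    """Return `steps` vectors moving evenly from z_a to z_b (inclusive)."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    if len(z_a) != len(z_b):
        raise ValueError("latent vectors must have the same length")
    blends = []
    for i in range(steps):
        t = i / (steps - 1)  # 0.0 at z_a, 1.0 at z_b
        blends.append([(1 - t) * a + t * b for a, b in zip(z_a, z_b)])
    return blends


# Toy stand-ins for the latent vectors of two different sketches.
z_pig = [0.0, 2.0]
z_truck = [1.0, 0.0]
hybrids = interpolate(z_pig, z_truck, steps=3)
```

Each vector in `hybrids` would then be handed to the drawing model to produce one sketch in the series, with the middle entries mixing traits of both originals.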
