Introducing Cloud Natural Language API, Speech API open beta and our West Coast region expansion

Following our announcements from GCP NEXT in March, we’re excited to share updates about Cloud Platform expansion and machine learning. Today we’re launching two new Machine Learning APIs into open beta and expanding our footprint in the United States.

Cloud Machine Learning APIs enter open beta

Google Cloud Platform unlocks the capability for enterprises to process unstructured data through machine learning. Today, we’re announcing two new Cloud Machine Learning products that are entering beta: Cloud Natural Language and Cloud Speech APIs.

We spend a lot of time thinking about how computer systems can read and process human language in intelligent ways. For example, we recently open-sourced SyntaxNet (which includes Parsey McParseface), a natural language model that analyzes the grammatical structure of text with the best accuracy, speed and scale.

The new Google Cloud Natural Language API in open beta is based on our natural language understanding research. Cloud Natural Language lets you easily reveal the structure and meaning of your text in a variety of languages, with initial support for English, Spanish and Japanese. It includes:

- Sentiment Analysis: Understand the overall sentiment of a block of text

- Entity Recognition: Identify the most relevant entities for a block of text and label them with types such as person, organization, location, events, products and media

- Syntax Analysis: Identify parts of speech and create dependency parse trees for each sentence to reveal the structure and meaning of text
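To make the three features above more concrete, here is a minimal Python sketch that only builds the HTTP request for each kind of analysis and sends nothing. The base URL, the `v1` version and the method names are assumptions taken from the publicly documented REST interface rather than from this post, and the beta endpoint may differ. `build_request` and `METHODS` are hypothetical helper names used for illustration.

```python
import json

# Assumption: base URL and API version of the public REST interface.
# This post does not state them, and the beta endpoint may differ.
BASE_URL = "https://language.googleapis.com/v1/documents"

# Feature named in the post -> REST method suffix (assumed mapping).
METHODS = {
    "sentiment": "analyzeSentiment",
    "entities": "analyzeEntities",
    "syntax": "analyzeSyntax",
}


def build_request(feature, text, language=None):
    """Return (url, json_body) for one analysis call without sending it."""
    if feature not in METHODS:
        raise ValueError("unknown feature: %s" % feature)
    document = {"type": "PLAIN_TEXT", "content": text}
    if language:
        # Optional language code such as "en", "es" or "ja".
        document["language"] = language
    body = {"document": document, "encodingType": "UTF8"}
    url = "%s:%s" % (BASE_URL, METHODS[feature])
    return url, json.dumps(body)


if __name__ == "__main__":
    sample = "Google Cloud Platform expands on the North American West Coast"
    for feature in METHODS:
        url, body = build_request(feature, sample, language="en")
        print(url)
        print(body)
```

Sending the request would additionally require credentials (for example an API key or OAuth token), which are left out of this sketch.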

The new API is optimized to meet the scale and performance needs of developers and enterprises in a broad range of industries. For example, digital marketers can analyze online product reviews or service centers can determine sentiment from transcribed customer calls. We’ve also seen great results from our Alpha customers, including British online marketplace Ocado Technology. To see Cloud Natural Language API in action, check out our demo that uses Cloud Natural Language to analyze top stories from The New York Times.

Google’s Cloud Natural Language API has shown it can accelerate our offering in the natural language understanding area and is a viable alternative to a custom model we had built for our initial use case. – Dan Nelson, Head of Data, Ocado Technology

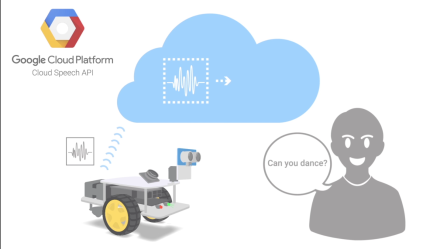

Cloud Speech API will also enter open beta today. Enterprises and developers now have access to speech-to-text conversion in over 80 languages, for both apps and IoT devices. Cloud Speech API uses the voice recognition technology that has been powering your favorite products such as Google Search and Google Now. More than 5,000 companies signed up for Speech API alpha, including:

- HyperConnect, a video chat app with over 50 million downloads in over 200 countries, uses a combination of our Cloud Speech and Translate API to automatically transcribe and translate conversations between people who speak different languages.

- VoiceBase, a leader in speech analytics as a service, uses Speech API to let developers surface insights and predict outcomes from call recordings.

This beta version adds new features based on customer feedback from alpha, such as:

- Word hints: you can add context-specific words or phrases to API calls to improve recognition. This is useful for both command scenarios (e.g., a smart TV listening for “rewind” and “fast-forward” when watching a movie) and adding new words to the dictionary (e.g., recognizing names that may not be common in a specific language)

- Asynchronous calling: the API has been substantially simplified with new asynchronous calls that make developing voice-enabled apps easier and faster
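As a rough illustration of the two features above, the following Python sketch assembles a recognition request that carries word hints and picks either a synchronous or an asynchronous endpoint. Nothing is sent over the network. The URLs and field names (`speechContexts`, `phrases`, `sampleRateHertz`, `languageCode`) are assumptions based on the publicly documented `v1` REST interface, and the beta release may use different names. `build_speech_request` is a hypothetical helper.

```python
import base64
import json

# Assumption: v1 REST endpoints; the beta API may use different paths.
RECOGNIZE_URL = "https://speech.googleapis.com/v1/speech:recognize"
LONG_RUNNING_URL = "https://speech.googleapis.com/v1/speech:longrunningrecognize"


def build_speech_request(audio_bytes, hints, language_code="en-US",
                         sample_rate_hz=16000, asynchronous=False):
    """Return (url, json_body) for a speech-to-text call without sending it."""
    config = {
        "encoding": "LINEAR16",  # assumes raw 16-bit PCM audio
        "sampleRateHertz": sample_rate_hz,
        "languageCode": language_code,
    }
    if hints:
        # Word hints: phrases the recognizer should favor in this context.
        config["speechContexts"] = [{"phrases": list(hints)}]
    body = {
        "config": config,
        # Inline audio is sent base64-encoded.
        "audio": {"content": base64.b64encode(audio_bytes).decode("ascii")},
    }
    url = LONG_RUNNING_URL if asynchronous else RECOGNIZE_URL
    return url, json.dumps(body)


if __name__ == "__main__":
    # Smart TV scenario from the post: bias recognition toward two commands.
    url, body = build_speech_request(b"\x00\x01", ["rewind", "fast-forward"])
    print(url)
    print(body)
```

With `asynchronous=True` the same body targets the long-running endpoint, where the caller would receive an operation handle and poll for the transcript instead of waiting on a single response.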

To use our newest machine learning APIs in open beta and see more details about pricing, check out Cloud Natural Language and Cloud Speech on our website.

Google Cloud Platform expands on the North American West Coast

For Cloud Platform customers on the west coast of the United States and Canada, we’re pleased to announce our Oregon Cloud Region (us-west1) is now open for business. This region initially includes three of our core offerings: Google Compute Engine, Google Cloud Storage and Google Container Engine, and features two Compute Engine zones to support high availability applications. Our initial testing shows that users in cities such as Vancouver, Seattle, Portland, San Francisco and Los Angeles can expect to see a 30-80% reduction in latency for applications served from us-west1, compared to us-central1.

One industry where latency is critical is gaming. Players of today’s premium games expect twitch-fast networks to enable immersive, real-time gaming experiences. Multiplay is a unique video game hosting specialist behind many of today’s top AAA games. Hosting Multiplay’s games out of the new us-west1 data center ensures that players in the western region of North America have a consistent, fast user experience on top of Google Cloud Platform.

Regional latency is a major factor in the gaming experience. Google Cloud Platform’s network is one of the best we’ve worked with, from a tech perspective but also in terms of the one-on-one support we’ve received from the team. – Paul Manuel, Director of Multiplay Game Services

And as we announced in March, Tokyo will be coming online later this year and we will announce more than 10 additional regions in 2017. For a current list of GCP regions, please have a look at the Cloud Locations page.
