Google sorry about audio review scandal, details privacy changes for Assistant

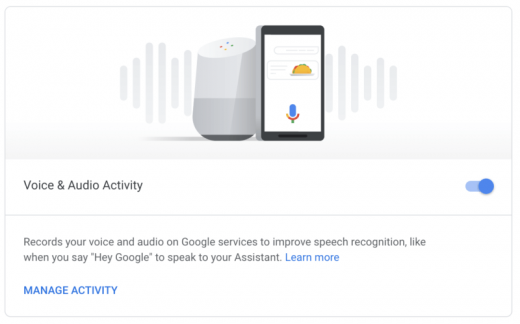

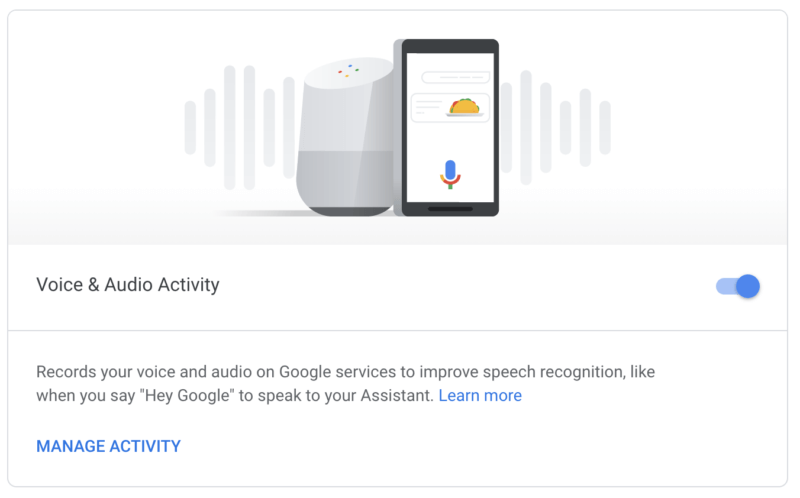

Voice & Audio Activity (human review) will now become opt-in, but it’s still turned on by default with the company discouraging opt-outs.

Amazon, Google, Microsoft, Facebook and Apple have all used humans (employees and contractors) to listen to and transcribe voice recordings from their virtual assistants or messaging apps. This is done, according to the companies, to improve the quality of speech recognition. But in a climate of privacy sensitivity and suspicion, all have been forced to suspend, revamp or discontinue the practice.

In July of this year, Google had to address a report from a Belgian broadcaster about third-party subcontractors being able to access recordings of Google Home device owners, which included enough information to reportedly determine their home addresses. The company explained that the subcontractor “violated our data security policies” and that it was going to “take action . . . . to prevent misconduct like this from happening again.”

Google is now offering further clarification of its audio review process and giving users additional privacy protections. The company also apologized for not letting users know what was going on with their audio data in the past.

Here are the changes and updates Google says it’s going to make:

- It says it doesn’t and won’t keep audio recordings.

- It will make Voice & Audio Activity (VAA) opt-in. Opting in allows the company to send recordings to human reviewers to improve speech recognition.

- It says that opting in to VAA “helps the Assistant better recognize your voice over time, and also helps improve the Assistant for everyone by allowing us to use small samples of audio to understand more languages and accents.”

- For those opting in to VAA, Google says there are multiple protections that prevent the voice snippets from being associated with particular users.

- Google says the Assistant deletes any recordings from unintentional activations. The company says it will continue to improve this safeguard, including a forthcoming way to adjust “how sensitive your Google Assistant devices are to [voice] prompts.”

Users can review and delete their Assistant activity and change their VAA settings here.

Despite the above, as of this morning, VAA remains opt-out, and copy on the site discourages people from opting out, saying: “Pausing Voice & Audio Activity may limit or disable more personalized experiences across Google services. For example, Google may not understand you when you say ‘Hey Google’ to speak to your Assistant. This setting will be paused on all sites, apps, and devices signed in to this account.”

Why we should care. Striking the right balance between privacy and functionality is critical for Google, Amazon, Apple and others. It’s also what Google’s trying to do with its new “transparency, choice and control” initiative around ad personalization more globally.

Paranoia about surveillance is growing, though it hasn’t stopped people from using services. But there’s some evidence that privacy concerns (“smart speakers are always listening”) may be hurting adoption and growth in that market. And although the example of Facebook defies this prediction, I believe we will see brand trust become increasingly tied to revenue performance going forward.

Marketing Land – Internet Marketing News, Strategies & Tips
