Here’s what Apple’s big steps to foil child abusers do—and what they don’t

Most technology is morally neutral. That is, it can be judged “good” or “bad” based only upon how someone chooses to use it. A car can get you from point A to point B, or it can be used to ram into a crowd of people. Nuclear technology can power cities or be used to make the most destructive bombs imaginable. Social media can be used to expand free speech, or it can be used to harass and intimidate.

Digital photography is no different. Most of us love digital images because they allow us to quickly capture our experiences, loved ones, or creativity in a medium that can be saved and easily shared. But digital photography is also what allows child sexual abuse material (CSAM) to be shared around the world in mere seconds and in nearly unlimited quantity. And it’s the spread of such material that Apple aims to tackle with three significant new child protection features across its platforms in the coming months.

Those features include communication safety in the Messages app, automated CSAM detection for iCloud Photo Library, and expanded guidance in Siri and Search, all of which will go live after iOS 15, iPadOS 15, and MacOS Monterey ship later this fall. Apple gave me a preview of the features in advance of today’s announcement, and while each is innovative in its own right and the intended end result is a good one, news of Apple’s announcement has set off a firestorm of controversy. That debate centers specifically on one of the three new features: the ability for Apple to automatically detect images of child sexual abuse in photos stored in iCloud Photo Library.

Now, some of the ire about Apple’s announcement that it will begin detecting CSAM in images—which leaked early on Thursday—relates to a misunderstanding of how the technology will work. Judging from a great many comments on tech sites and Mac forums, many people think that Apple is optically scanning user libraries for child pornography, thus violating the privacy of law-abiding users in the process. Some seem to believe Apple’s software will judge whether something in the photo is an instance of child pornography and report the user if it thinks it is. This assumption about Apple’s CSAM-detection methods misunderstands what the company will be doing.

However, some privacy advocates who are clear on Apple’s methods rightly point out that good intentions, like preventing the spread of child sexual abuse imagery, often lead to slippery slopes. If Apple has tools to scan user data for this specific offense, could it start delving through other user data for less heinous offenses? Or what happens when governments, repressive or otherwise, compel Apple to use the technology to surveil users for other activities?

This sort of tool can be a boon for finding child pornography in people’s phones. But imagine what it could do in the hands of an authoritarian government? https://t.co/nB8S6hmLE3

— Matthew Green (@matthew_d_green) August 5, 2021

These are questions that people are right to ask. But first, it’s important to fully understand all the measures Apple is announcing. So let’s do just that, starting with the two that are less controversial and less misunderstood.

Communication safety in Messages

Apple’s Messages app is one of the most popular communication platforms in the world. Unfortunately, as with any communication platform, the app can be, and is, used by predators to communicate with and exploit children. This exploitation can take the form of grooming and sexploitation, which often precede even more egregious crimes such as sex trafficking.

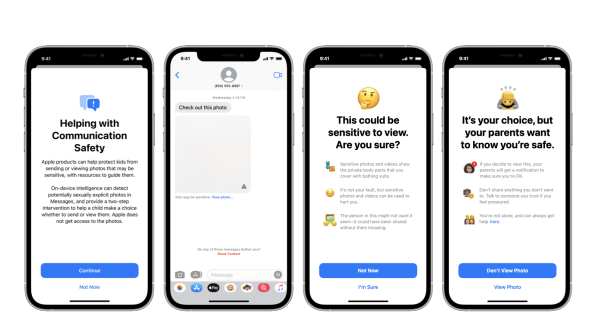

For example, a predator might begin talking to a 12-year-old boy via his Messages account. After befriending the boy, the predator may eventually send him sexual images or get the boy to send images of himself back. But Apple’s new safety feature will now scan photos sent via the Messages app to any children in a Family Sharing group for signs of sexual imagery and automatically blur the photo if it finds them.

The child will see the blurred photo along with a message that the photo may be sensitive. If the child taps “view photo,” a notice will appear explaining, in language a child can understand, that the photo likely shows “the private body parts that you cover with bathing suits.” The notice will let children know that this is not their fault and that the photo could hurt them or be hurtful to the person in it. If the child chooses to view it anyway, the notice will tell them that their parents will be notified of the image so they can know the child is safe. From there, the child can continue to view the photo, with the parents being notified, or choose not to view it. A similar notice will appear if the child attempts to send images containing nudity to the person they are messaging.
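
The decision flow described above can be sketched as a small function. This is a minimal illustration, assuming the on-device check has already decided whether a photo looks sensitive; the names `handle_incoming_photo` and `Outcome` are hypothetical and are not Apple’s code.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    """What happens to one incoming photo (hypothetical model of the flow)."""
    starts_blurred: bool
    shown: bool
    parents_notified: bool


def handle_incoming_photo(flagged_sensitive: bool,
                          taps_view_photo: bool,
                          views_anyway: bool,
                          continues_after_warning: bool) -> Outcome:
    # Photos the on-device check does not flag are delivered normally.
    if not flagged_sensitive:
        return Outcome(starts_blurred=False, shown=True, parents_notified=False)
    # A flagged photo is shown, and parents notified, only if the child taps
    # "view photo", chooses to view it after the first notice, and then
    # continues after being told their parents will be notified.
    if taps_view_photo and views_anyway and continues_after_warning:
        return Outcome(starts_blurred=True, shown=True, parents_notified=True)
    # Backing out at any step leaves the photo blurred and sends no notification.
    return Outcome(starts_blurred=True, shown=False, parents_notified=False)


print(handle_incoming_photo(True, True, True, False))
# Outcome(starts_blurred=True, shown=False, parents_notified=False)
```

Nothing in the sketch contacts a server: every decision is made locally, which mirrors the article’s point that the analysis happens on the device itself.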

This new feature could be a powerful tool for keeping children safe from seeing or sending harmful content. And because the user’s iPhone scans photos for such images on the device itself, Apple never knows about or has access to the photos or the messages surrounding them—only the children and their parents will. There are no real privacy concerns here.

Siri will warn of searches for illegal information

The next child-safety feature is also meant to be preventive, stopping an action before it becomes harmful or illegal. When Siri is updated later this fall, users will be able to ask Apple’s digital assistant how to report child exploitation and be directed to resources that can help them.

If users search for images of child sexual abuse or related material, Siri and Apple’s built-in search feature will notify them that the items they are seeking are illegal and will instead offer them resources to get help for any urges that may be harmful to themselves or others.

Again, it’s important to note that Apple won’t see users’ queries seeking illegal material or keep a record of them. Instead, the aim is to make the user aware privately that what they are seeking is harmful or illegal and direct them to resources that may be of help to them. Given that no one—not even Apple—is made aware of a user’s problematic searches, it seems clear that privacy isn’t an issue here.

iCloud will detect images of child sexual abuse

And now we get to the most significant new child-safety feature Apple introduced today—and also the one causing the most angst: Apple plans to actively monitor users’ photos to be stored in iCloud Photo Library for illegal images of children and report users who attempt to use iCloud to store child sexual abuse images to the National Center for Missing and Exploited Children (NCMEC), which works with law enforcement across the country to catch collectors and distributors of child pornography.

Now, upon hearing this, your first response may well be “good.” But then you may begin to wonder, as many clearly have, what this means for Apple and its commitment to user privacy. Does this mean Apple is now scanning every photo a user uploads to their iCloud Photo Library to determine which are illegal and which are not? If so, the privacy of hundreds of millions of innocent users would be violated to catch a vastly smaller group of criminals.

But even as Apple redoubles efforts to keep children safe across its platforms, it’s designing with privacy in mind. This new feature will detect only already known and documented illegal images of children without ever scanning any photos stored in the cloud and without ever seeing the photos themselves.

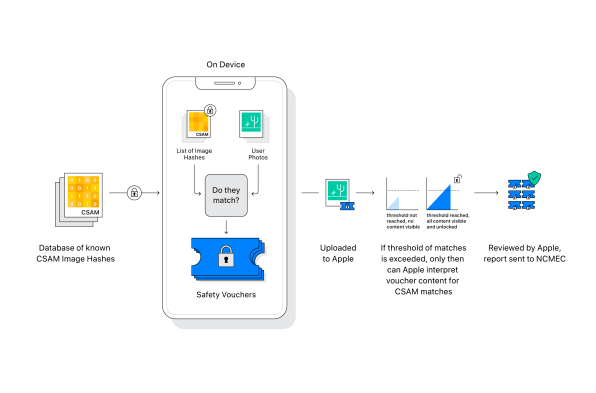

How is that possible? It’s achieved through a new image-matching and cryptographic system that Apple has developed. Its hashing component, called NeuralHash, involves insanely complicated algorithms and math. To put it in simple terms, a user’s iPhone itself will “read” the fingerprint (a digital hash, essentially a long number computed from the image’s visual content) of every photo headed for iCloud Photo Library. Because the hash is perceptual rather than exact, it is designed to give a resized or recompressed copy of a known image the same fingerprint as the original. Those fingerprints will then be compared with a list of fingerprints (hashes) of known child sexual abuse images maintained by NCMEC. This list will also be stored on every iPhone but will never be accessible to users themselves.
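
To make the fingerprint idea concrete, here is a toy perceptual hash. It is a classic “average hash,” used purely as a stand-in: Apple’s NeuralHash is built on a neural network and works very differently internally, and the on-device list is cryptographically protected in ways this sketch ignores. The tiny image grids and function names are hypothetical. What it does show is the property the article relies on: fingerprints are compared, not pictures, and a lightly altered copy of a known image still matches while an unrelated image does not.

```python
from typing import List, Set


def average_hash(pixels: List[List[int]]) -> int:
    """Toy perceptual hash: one bit per pixel, set if brighter than the mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def matches_known(photo: List[List[int]], known_hashes: Set[int]) -> bool:
    # Only the hash is checked against the list; the pixels are never inspected
    # for what they depict.
    return average_hash(photo) in known_hashes


# Hypothetical 4x4 grayscale "images".
known_image = [[10, 200, 30, 220], [15, 210, 25, 205],
               [12, 190, 35, 215], [18, 199, 28, 230]]
brightened = [[p + 10 for p in row] for row in known_image]  # near-copy
unrelated = [[200, 10, 200, 10], [10, 200, 10, 200],
             [200, 10, 200, 10], [10, 200, 10, 200]]

known = {average_hash(known_image)}
print(matches_known(brightened, known))  # True
print(matches_known(unrelated, known))   # False
```

Brightening every pixel shifts the mean by the same amount, so each pixel’s comparison, and therefore the hash, is unchanged.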

If the fingerprint of a user’s photo matches the fingerprint of a photo from the list provided by NCMEC, a token is created on the user’s iPhone. The tokens are uploaded automatically to Apple, but only once the number of tokens reaches a certain threshold can Apple read them. At that point, a human reviewer will manually verify whether the images are actually of child sexual abuse. If they are, Apple will disable the user’s iCloud account and contact NCMEC, which will then work with law enforcement on the issue.
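
The threshold idea can be illustrated with textbook Shamir secret sharing. Apple’s technical summary describes a threshold secret-sharing step, but this is a generic sketch, not Apple’s implementation: assume each matching token carries one share of a per-account key, so the key (and whatever it unlocks) cannot be reconstructed until enough tokens exist. The threshold of 3 and all names here are hypothetical.

```python
import secrets
from typing import List, Tuple

PRIME = 2**127 - 1  # Mersenne prime; all arithmetic is done modulo this value


def make_shares(secret: int, threshold: int, count: int) -> List[Tuple[int, int]]:
    """Split `secret` into `count` shares; any `threshold` of them recover it."""
    # Random polynomial of degree threshold - 1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, count + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's method
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover(shares: List[Tuple[int, int]]) -> int:
    """Lagrange interpolation at x = 0 returns the polynomial's constant term."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


THRESHOLD = 3  # hypothetical value for illustration only
account_key = secrets.randbelow(PRIME)
# One share per matching photo; here five matches have accumulated.
tokens = make_shares(account_key, THRESHOLD, count=5)
print(recover(tokens[:3]) == account_key)   # True: threshold reached
print(recover(tokens[1:4]) == account_key)  # True: any three shares work
```

With fewer shares than the threshold, interpolation yields an unrelated number, and Shamir’s scheme guarantees those shares reveal nothing about the key. In a design like this, a few matches below the threshold would expose nothing.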

Apple says its new method preserves privacy for all of its users and means the company can now scan photo libraries for harmful, illegal images while still keeping a user’s pictures hidden from the company.

What about false positives?

Now we get to the first concern people have about Apple’s new detection technology—and it’s an understandable issue.

While Apple’s new ability to scan for child sexual abuse images is pretty remarkable from a cryptographic and privacy perspective, many have voiced the same fear: What about false positives? What if Apple’s system thinks an image is child sexual abuse when it’s really not, and reports that user to law enforcement? Or what about photos that do contain nudity of children but aren’t sexual—say, when parents send a photo of their 2-year-old in a bubble bath to the grandparents?

To the first point, Apple says its system is designed to keep the chance of incorrectly flagging a given account below one in one trillion per year, and that’s before an employee at Apple manually inspects a suspected child sexual abuse image and before it is ever sent on to NCMEC. The chances of a false positive, in other words, are infinitesimally low.
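
Why does requiring a threshold of matches matter so much? A back-of-the-envelope calculation helps, assuming (purely for illustration) that each photo independently has a small chance p of falsely matching the list. The values of p, the library size, and the thresholds below are hypothetical, and this is not how Apple derived its one-in-a-trillion figure; it only shows how requiring several matches, rather than one, shrinks the odds of an account being flagged by accident.

```python
def prob_at_least(t: int, n: int, p: float) -> float:
    """P(X >= t) for X ~ Binomial(n, p), summing the upper tail term by term."""
    ratio = p / (1 - p)
    term = (1 - p) ** n              # P(X = 0)
    total = term if t == 0 else 0.0
    for k in range(1, n + 1):
        term *= (n - k + 1) / k * ratio  # P(X = k) from P(X = k - 1)
        if k >= t:
            total += term
    return total


p = 1e-6     # hypothetical per-photo false-match rate
n = 10_000   # hypothetical number of photos uploaded
one_match = prob_at_least(1, n, p)
three_matches = prob_at_least(3, n, p)
print(f"P(>=1 false match)   = {one_match:.3g}")
print(f"P(>=3 false matches) = {three_matches:.3g}")
```

With these made-up numbers, the chance of at least one false match is roughly 1 in 100, while the chance of three or more is under 1 in a million. Raising the threshold further, or lowering p, pushes the account-level figure down much faster than either change alone would suggest.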

As for the photo of the child in the bubble bath? There’s no need to worry about that being flagged as child sexual abuse imagery, either. And the reason for this can’t be emphasized enough: Because the on-device scanning of such images checks only for fingerprints/hashes of already known and verified illegal images, the system is not capable of detecting new, genuine child pornography or misidentifying an image such as a baby’s bath as pornographic.

Unlike the new Messages and Siri and Search child-safety measures, Apple’s system for scanning iCloud Photos is playing defense. It’s able to spot only already known images of child sexual abuse. It cannot make its own call on unknown photos because the system cannot see the actual content of the images.

What about that slippery slope?

While a better understanding of the CSAM-detection technology Apple plans to deploy should alleviate most concerns over false positives, another worry people have voiced is much harder to assuage. After all, Apple is the privacy company. It’s now known almost as much for privacy as it is for the iPhone. And while the intentions behind its new child-safety features are noble, people are rightly worried about where those features could lead.

Even I have to admit that I was surprised when Apple filled me in on its plans. Monitoring of user data, even to keep kids safe, feels unnatural in a way. But it only feels unnatural because it’s Apple doing it. If Facebook or Google had announced this initiative, I don’t think it would have struck me as odd. Then again, I also don’t think they would have built the same privacy-preserving features into CSAM detection that Apple has.


When I was working on my first novel, Epiphany Jones, I spent years researching sex trafficking. I came to understand the horrors of the modern-day slave trade—a slave trade that is the origin of many child sexual abuse images on the internet. But as a journalist who also frequently writes about the critical importance of the human right to privacy, well, I can see both sides here. I believe that Apple has struck a sensible balance between user privacy and helping stem the spread of harmful images of child abuse. But I also do understand that nagging feeling in the back of users’ minds: Where does this lead next?

More specifically, the concern involves where this type of technology could lead if Apple is compelled by authorities to expand detection to other data that a government may find objectionable. And I’m not talking about data that is morally wrong and reprehensible. What if Apple were ordered by a government to start scanning for the hashes of protest memes stored on a user’s phone? Here in the U.S., that’s unlikely to happen. But what if Apple had no choice but to comply with some dystopian law in China or Russia? Even in Western democracies, many governments are increasingly exploring legal means to weaken privacy and privacy-preserving features such as end-to-end encryption, including the possibility of passing legislation to create backdoor access into messaging and other apps that officials can use to bypass end-to-end encryption.

So these worries people are expressing today on Twitter and in tech forums around the web are understandable. They are valid. The goal may be noble and the ends just—for now—but that slope can also get slippery really fast. It’s important that these worries about where things could lead are discussed openly and widely.

Finally, it should be noted that these new child-safety measures will work only on devices running iOS 15, iPadOS 15, and MacOS Monterey, and will not go live until after a period of testing once those operating systems launch this fall. Additionally, for now the iCloud Photo Library scanning will work only on photographs, not videos. Scanning for child abuse images will occur only for photos slated to be uploaded to iCloud Photo Library from the Photos app on an iPhone or iPad. These detection features don’t come into play for photos stored elsewhere on an Apple device, such as in third-party apps; they apply only to photos headed for the company’s iCloud Photos service.

Those interested in the cryptographic and privacy specifics of the new child-safety features can delve into Apple’s wealth of literature on the subjects here, here, and here.

Fast Company
