How a 1981 conference kickstarted today’s quantum computing era

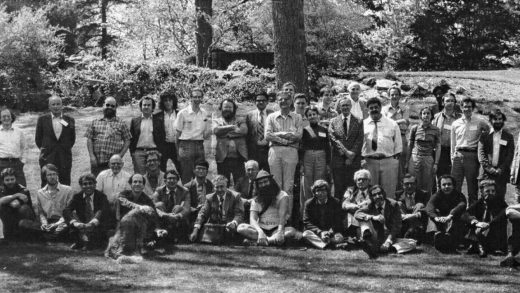

In May 1981, at a conference center housed in a chateau-style mansion outside Boston, a few dozen physicists and computer scientists gathered for a three-day meeting. The assembled brainpower was formidable: One attendee, Caltech’s Richard Feynman, was already a Nobel laureate and would earn a widespread reputation for genius when his 1985 memoir “Surely You’re Joking, Mr. Feynman!”: Adventures of a Curious Character became a bestseller. Numerous others, such as Paul Benioff, Arthur Burks, Freeman Dyson, Edward Fredkin, Rolf Landauer, John Wheeler, and Konrad Zuse, were among the most accomplished figures in their respective research areas.

The conference they were attending, The Physics of Computation, was held from May 6 to 8 and cohosted by IBM and MIT’s Laboratory for Computer Science. It would come to be regarded as a seminal moment in the history of quantum computing—not that anyone present grasped that as it was happening.

“It’s hard to put yourself back in time,” says Charlie Bennett, a distinguished physicist and information theorist who was part of the IBM Research contingent at the event. “If you’d said ‘quantum computing,’ nobody would have understood what you were talking about.”

Why was the conference so significant? According to numerous latter-day accounts, Feynman electrified the gathering by calling for the creation of a quantum computer. But “I don’t think he quite put it that way,” contends Bennett, who took Feynman’s comments less as a call to action than a provocative observation. “He just said the world is quantum,” Bennett remembers. “So if you really wanted to build a computer to simulate physics, that should probably be a quantum computer.”

[Photo: courtesy of Charlie Bennett, who isn’t in it—because he took it]

Even if Feynman wasn’t trying to kick off a moonshot-style effort to build a quantum computer, his talk—and The Physics of Computation conference in general—proved influential in focusing research resources. Quantum computing “was nobody’s day job before this conference,” says Bennett. “And then some people began considering it important enough to work on.”

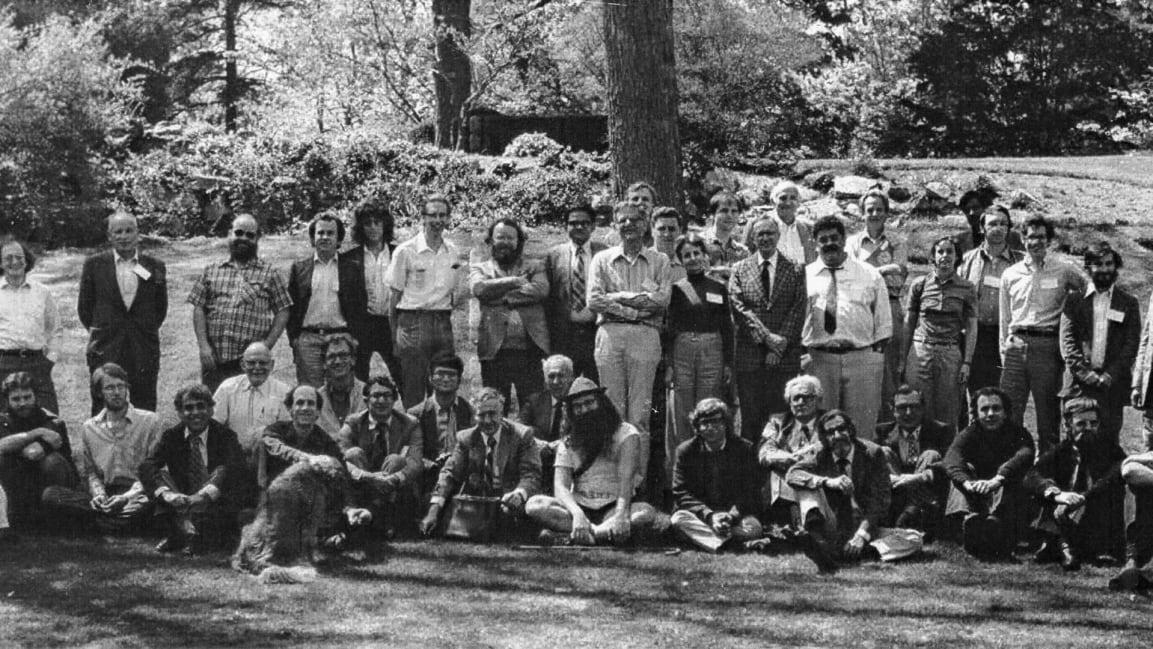

It turned out to be such a rewarding area for study that Bennett is still working on it in 2021—and he’s still at IBM Research, where he’s been, aside from the occasional academic sabbatical, since 1972. His contributions have been so significant that he’s not only won numerous awards but also had one named after him. (On Thursday, he was among the participants in an online conference on quantum computing’s past, present, and future that IBM held to mark the 40th anniversary of the original meeting.)

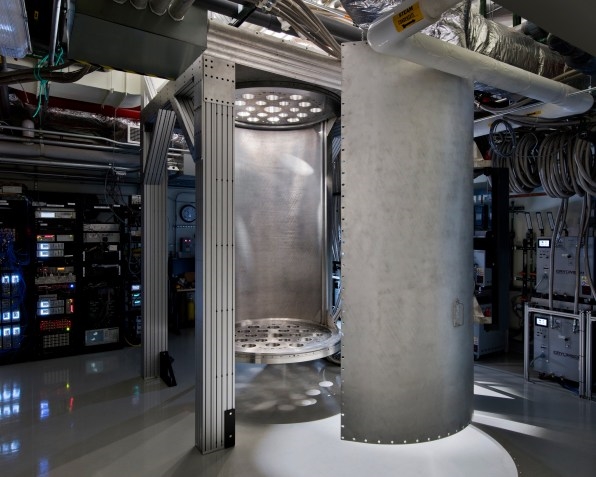

[Photo: courtesy of IBM]

These days, Bennett has plenty of company. In recent years, quantum computing has become one of IBM’s biggest bets, as it strives to get the technology to the point where it’s capable of performing useful work at scale, particularly for the large organizations that have long been IBM’s core customer base. Quantum computing is also a major area of research focus at other tech giants such as Google, Microsoft, Intel, and Honeywell, as well as a bevy of startups.

According to IBM senior VP and director of research Dario Gil, the 1981 Physics of Computation conference played an epoch-shifting role in getting the computing community excited about quantum physics’s possible benefits. Before then, “in the context of computing, it was seen as a source of noise—like a bothersome problem that when dealing with tiny devices, they became less reliable than larger devices,” he says. “People understood that this was driven by quantum effects, but it was a bug, not a feature.”

Making progress in quantum computing has continued to require setting aside much of what we know about computers in their classical form. From early room-sized mainframe monsters to the smartphone in your pocket, computing has always boiled down to performing math with bits set either to one or zero. But instead of depending on bits, quantum computers leverage quantum mechanics through a basic building block called a quantum bit, or qubit. It can represent a one, a zero, or—in a radical departure from classical computing—both at once.
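To make "both at once" a little more concrete, here is a minimal sketch in plain Python rather than on real quantum hardware. It assumes the standard textbook picture in which a qubit is described by two amplitudes and a measurement returns zero or one with probability equal to each amplitude's squared magnitude. The function name `measure` and the variable names are illustrative choices, not part of any IBM software.

```python
import math
import random

def measure(amp0, amp1, rng):
    """Simulate one measurement of a qubit (illustrative helper, not a real API).

    amp0 and amp1 are the amplitudes for reading 0 and 1. The chance of
    each outcome is the squared magnitude of its amplitude.
    """
    p0 = abs(amp0) ** 2
    p1 = abs(amp1) ** 2
    if not math.isclose(p0 + p1, 1.0):
        raise ValueError("amplitudes must be normalized")
    return 0 if rng.random() < p0 else 1

# An equal superposition: the qubit is "both at once" until it is measured.
amp = 1 / math.sqrt(2)
rng = random.Random(0)  # fixed seed so repeated runs give the same tallies
counts = [0, 0]
for _ in range(1000):
    counts[measure(amp, amp, rng)] += 1

print(counts)  # roughly half zeros and half ones
```

A classical bit would land on the same value every time. The qubit in this toy model only settles on a value when it is read, which is the property quantum algorithms try to exploit.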

[Photo: courtesy of IBM]

Qubits give quantum computers the potential to rapidly perform calculations that might be impossibly slow on even the fastest classical computers. That could have transformative benefits for applications ranging from drug discovery to cryptography to financial modeling. But it requires mastering an array of new challenges, including cooling superconducting qubits to a temperature only slightly above absolute zero, or -459.67 degrees Fahrenheit.

Four decades after the 1981 conference, quantum computing remains a research project in progress, albeit one that’s lately come tantalizingly close to fruition. Bennett says that timetable isn’t surprising or disappointing. For a truly transformative idea, 40 years just isn’t that much time: Charles Babbage began working on his Analytical Engine in the 1830s, more than a century before technological progress reached the point where early computers such as IBM’s own Automatic Sequence Controlled Calculator could implement his concepts in a workable fashion. And even those machines came nowhere near fulfilling the vision scientists had already developed for computing, “including some things that [computers] failed at miserably for decades, like language translation,” says Bennett.

That Bennett has been investigating physics and computing at IBM Research for nearly half a century is a remarkable run given the tendency of the tech industry to chase after the latest shiny objects at the expense of long-term thinking. (It’s also longer than IBM Research chief Gil has been alive.) And even before Bennett joined IBM, he participated in some of the earliest, most purely theoretical discussion of the concept that became known as quantum computing.

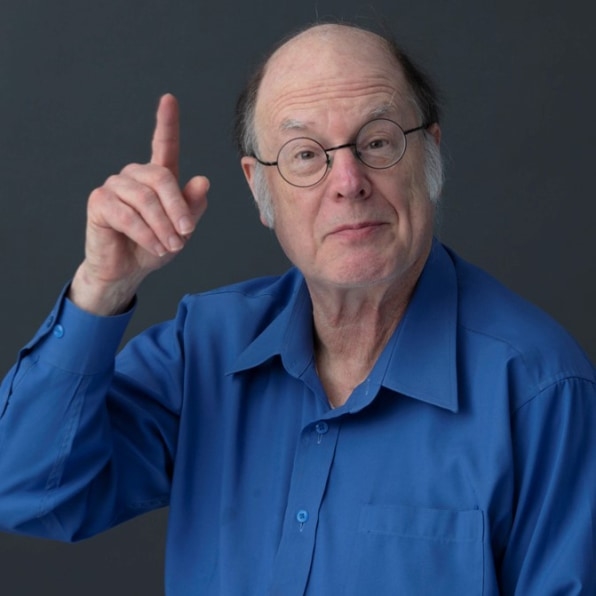

In 1970, as a Harvard PhD candidate, Bennett was brainstorming with fellow physics researcher Stephen Wiesner, a friend from his undergraduate days at Brandeis. Wiesner speculated that quantum physics would make it possible to “send, through a channel with a nominal capacity of one bit, two bits of information; subject however to the constraint that whichever bit the receiver choose to read, the other bit is destroyed,” as Bennett jotted in notes which—fortunately for computing history—he preserved.

[Photo: courtesy of Charlie Bennett]

“I think [that] was the first time ever somebody said the phrase ‘quantum information theory,’” says Bennett. “The idea that you could do things of not just a physics nature, but an information processing nature with quantum effects that you couldn’t do with ordinary data processing.”

Long and winding road

Like many technological advances of historic proportions—AI is another example—quantum computing didn’t progress from idea to reality in an altogether predictable and efficient way. It took 11 years from Wiesner’s observation until enough people took the topic seriously enough to inspire the Physics of Computation conference. Bennett and the University of Montreal’s Gilles Brassard published important research on quantum cryptography in 1984; in the 1990s, scientists realized that quantum computers had the potential to be exponentially faster than their classical forebears.

All along, IBM had small teams investigating the technology. According to Gil, however, it wasn’t until around 2010 that the company had made enough progress that it began to see quantum computing not just as an intriguing research area but as a powerful business opportunity. “What we’ve seen since then is this dramatic progress over the last decade, in terms of scale, effort, and investment,” he says.

[Photo: courtesy of IBM]

As IBM made that progress, it shared it publicly so that interested parties could begin to get their heads around quantum computing at the earliest opportunity. Starting in May 2016, for instance, the company made quantum computing available as a cloud service, allowing outsiders to tinker with the technology in a very early form.

More recently, IBM has been confident enough that it understands the remaining work its quantum effort will require that it’s published road maps that spell out when it expects to reach further milestones. Even quantum-computing experts tend to make wildly varying predictions about how quickly the technology will reach maturity; Gil says that IBM decided that disclosing its own plans in some detail would cut through the noise.

“One of the things that road maps provide is clarity,” he says, allowing that “road maps without execution are hallucinations, so it is really important that when you put something out, you have a path to deliver.”

Scaling up quantum computing into a form that can trounce classical computers at ambitious jobs requires increasing the number of reliable qubits that a quantum computer has to work with. When IBM published its quantum hardware road map last September, it had recently deployed the 65-qubit IBM Quantum Hummingbird processor, a considerable advance on its previous 5- and 27-qubit predecessors. This year, the company plans to complete the 127-qubit IBM Quantum Eagle. And by 2023, it expects to have a 1,000-qubit machine, the IBM Quantum Condor. It’s this machine, IBM believes, that may have the muscle to achieve “quantum advantage” by solving certain real-world problems faster than the world’s best supercomputers.

Essential though it is to crank up the supply of qubits, the software side of quantum computing’s future is also under construction, and IBM published a separate road map devoted to the topic in February. Gil says that the company is striving to create a “frictionless” environment in which coders don’t have to understand how quantum computing works any more than they currently think about a classical computer’s transistors. An IBM software layer will handle the intricacies (and meld quantum resources with classical ones, which will remain indispensable for many tasks).

“You don’t need to know quantum mechanics, you don’t need to know a special programming language, and you’re not going to need to know how to do these gate operations and all that stuff,” he explains. “You’re just going to program with your favorite language, say, Python. And behind the scenes, there will be the equivalent of libraries that call on these quantum circuits, and then they get delivered to you on demand.”

[Photo: courtesy of IBM]

“In this vision, we think that at the end of this decade, there may be as many as a trillion quantum circuits that are running behind the scene, making software run better,” Gil says.

Even if IBM clearly understands the road ahead, there’s plenty left to do. Charlie Bennett says that quantum researchers will overcome remaining challenges in much the same way that he and others confronted past ones. “It’s hard to look very far ahead, but the right approach is to maintain a high level of expertise and keep chipping away at the little problems that are causing a thing not to work as well as it could,” he says. “And then when you solve that one, there will be another one, which you won’t be able to understand until you solve the first one.”

As for Bennett’s own current work, he says he’s particularly interested in the intersection between information theory and cosmology, “not so much because I think I can learn enough about it to make an original research contribution, but just because it’s so much fun to do.” He’s also been making explainer videos about quantum computing, a topic whose reputation for being weird and mysterious he blames on inadequate explanation by others.

“Unfortunately, the majority of science journalists don’t understand it,” he laments. “And they say confusing things about it—painfully, for me, confusing things.”

For IBM Research, Bennett is both a living link to its past and an inspiration for its future. “He’s had such a massive impact on the people we have here, so many of our top talent,” says Gil. “In my view, we’ve accrued the most talented group of people in the world, in terms of doing quantum computing. So many of them trace it back to the influence of Charlie.” Impressive though Bennett’s 49-year tenure at the company is, the fact that he’s seen and made so much quantum computing history—including attending the 1981 conference—and is here to talk about it is a reminder of how young the field still is.

Fast Company