How Apple Will Use AR To Reinvent The Human-Computer Interface

As a technology industry analyst, I have had the privilege of covering Apple since 1981. During this period of time, I have watched Apple introduce new products and change leadership many times. I’ve delved deep into its product roadmaps and strategies. In the process I have learned a great deal about Apple’s culture and how its people think about advancing personal computing.

It’s in Apple’s DNA to continually deliver the “next” major advancement to the personal computing experience. Its innovation in man-machine interfaces started with the Mac and then extended to the iPod, the iPhone, the iPad, and most recently, the Apple Watch.

Now, get ready for the next chapter, as Apple tackles augmented reality, in a way that could fundamentally transform the human-computer interface.

With the Mac, Steve Jobs and his team created a new computing design that included a mouse and a new graphical user interface (GUI). In those days the PC was usually battleship gray and consisted of a monitor, a CPU unit, and a keyboard. It usually ran Microsoft’s text-based DOS. Jobs and Apple changed the paradigm by creating an all-in-one design in the original Mac and adding the mouse and a GUI to the OS. The company then extended the computing experience by adding a CD-ROM drive, launching the era of multimedia computing. With the iPhone and iPad, Apple introduced a new way to interact with mobile devices. Instead of a mouse, it used finger touch for navigation.

The hardware design of Apple products has constantly evolved. At the same time, the software and UI have been pushed forward to harmonize with the hardware. This will continue as Apple moves into new technology areas.

As I look at Apple’s future role in driving a “next” computing experience, I am drawn to augmented reality. Apple CEO Tim Cook has said on numerous occasions that he sees a big opportunity in augmented reality, including in commercial use cases.

Apple has made two key acquisitions for foundational technologies that might go into an AR product. In 2015 it bought Metaio, a German company that had developed software that integrated camera images with computer-generated imagery. Back in 2013 Apple acquired the Israeli company PrimeSense, which had developed a tiny 3D sensor and accompanying software that scanned and captured three-dimensional objects.

Glasses Are “Next”

Apple introduced its version of AR, namely the ARKit app development framework, at its developers conference last year. I expect it to give important updates and show more examples of what ARKit can do at this year’s developers conference in early June. I see ARKit apps being at the center of Apple’s “next” big thing that advances the personal computing experience.

At the moment, Apple is focused on using the iPhone as the delivery device for this AR experience. The phone-based AR experience of today will evolve into a glasses-based experience tomorrow. Glasses are the most efficient and practical way to deliver AR to Apple customers in the future.

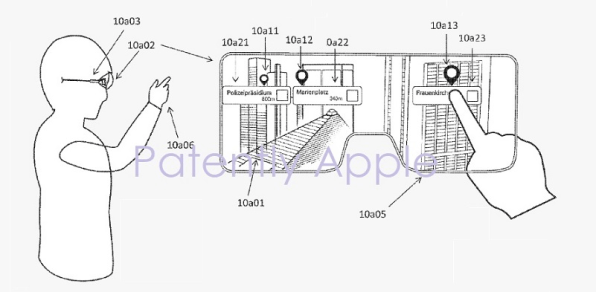

Apple has been filing patents on glasses or goggles in this area over the past year, as tracked by the Patently Apple blog. A Google search for Apple Glasses reveals other design illustrations from the patent filings.

While these patent drawings are interesting, I think of them as first generation designs. They are a long way from what I believe Apple has to deliver to extend its leadership in next-generation personal computing experiences.

While other companies are working on similar AR apps and even glasses, Apple’s track record suggests that it could be the company that defines and refines this “next” AR computing experience for the masses.

Apple’s iPod was not the first MP3 player but it became the best, and dominated this market for over 10 years. Apple didn’t introduce the first smartphone, but the iPhone redefined what a smartphone should be. Apple now brings in over 50% of all worldwide smartphone revenues. Similarly, Apple’s version of AR could become the version that the masses embrace since it will be tied to hardware, software, apps and services that Apple controls.

The iPhone Stays In The Picture

As Apple moves to deliver this “next” version of man-machine interface, it will likely deviate from the competition in one major way. Most vendors I talk to believe all of the intelligence for smart glasses needs to be integrated into the headset or glasses themselves. The problem is that this makes the glasses bulky and heavy, and makes the wearer look like a dork.

I believe Apple’s strategy will have the iPhone serve as the CPU and brains behind the glasses. It will feed the data and AR content wirelessly to the glasses. This approach would allow for AR glasses that look like a normal pair of reading glasses. The glasses will need special optics, a small battery, and a wireless radio. The phone will handle the serious processing.

This approach benefits Apple in three ways.

First, it allows it to create glasses that would be acceptable to a mass audience. Consumers might resist bulky glasses or goggles that make them look odd or different.

Second, it makes the iPhone even more important. If all the processing and AR functionality is handled on the iPhone, the iPhone becomes indispensable to the overall “next” computing experience Apple brings to the market. This could also launch one of the biggest iPhone upgrade cycles Apple has ever seen.

Third, this approach is consistent with Apple’s history of controlling a new ecosystem. While others will surely copy Apple’s approach to AR, they won’t enjoy the same level of control that Apple exerts over its hardware, software, applications, and services ecosystem. That control puts Apple in a position to define and deliver this next-generation computing model, and to dominate it if it does everything right.

Apple is now laying the groundwork that will allow it to deliver the “next” big thing in personal computing. For the next couple of years, ARKit will evolve and spur new innovations in AR apps that use the iPhone as the delivery medium. However, if its patents are any guide, Apple may be on pace to migrate its AR experience to (iPhone-powered) glasses as the delivery medium in the not-too-distant future.

Apple’s success in ushering in this new era of man-machine interface may be critical to the advancement of personal computing in general. The new approach would also make voice and gestures the new “mouse,” and free us to have a much richer and more immersive computing experience than we could ever have on a PC, laptop, tablet, or smartphone alone.

Eventually, Apple and others may be able to put all of the computing power needed for AR into a pair of glasses. But I believe Apple’s iPhone-powered approach is the “next” major advancement in man-machine interfaces and will define personal computing for the next decade. And it’ll make computing more personal than ever.
