For years, critics have all but begged social media platforms to take a tougher stance on misinformation and disinformation. For a long time, though, Facebook and Twitter resisted these calls.

Mark Zuckerberg has for years said Facebook should not be an “arbiter of truth.” Twitter CEO Jack Dorsey once said the same of Twitter. And while Facebook and Twitter’s policies have evolved since “fake news” became a mainstream issue in 2016, the past year has seen some of the most dramatic changes when it comes to their efforts to combat misinformation — and it was all because of the pandemic.

The unique danger posed by the coronavirus pandemic forced companies to adopt newly aggressive steps to beat back conspiracy theories, rumors and other outlandish claims. These measures also laid important groundwork that helped them later confront another misinformation superspreader: Donald Trump.

Why COVID-19 was different

Social media companies seemed to realize early on the dangers of their platforms becoming superspreaders of coronavirus misinformation. Facebook first addressed the issue in January. At a time when many in the US still viewed COVID-19 as a distant threat, Facebook was willing to take much more aggressive steps to combat misinformation than it had in the past: the company said it would actually take down posts it considered dangerous, like claims of fake cures.

We may take that for granted now but, prior to the pandemic, it was rare for Facebook to actually remove misinformation. The company, always reluctant to play “arbiter of truth,” has instead preferred to rely on outside fact checkers to label false claims, which it then buries in users’ News Feeds. With the pandemic, though, Facebook said some of these claims were sufficiently dangerous to come down. In all, Facebook has taken down more than 12 million posts for sharing misinformation that could “lead to imminent physical harm,” the company’s VP of Integrity Guy Rosen told reporters in October.

Twitter also signaled that it was willing to take new and aggressive steps to combat misinformation related to the pandemic. In March, it updated its policies to require users to remove tweets with hoaxes and fake cures. Eventually, Twitter began to aggressively label tweets with misleading or disputed information. This, too, was new territory for Twitter, which didn’t previously have policies that allowed it to do its own fact checking. But much like Facebook, the company decided the pandemic called for new rules.

Not all of these efforts were successful, though. Harmful disinformation about the pandemic has repeatedly gone viral. QAnon, the cult-like conspiracy the FBI has described as a domestic terror threat, has reached new heights during the pandemic.

In May, Facebook, Twitter and YouTube were slow to take action against a viral video that spread widely discredited conspiracy theories about the pandemic. The clip racked up millions of views on Facebook and YouTube before either company took action. Copies continued to spread well after the fact and the takedowns only fueled further conspiracies about the reasons for its removal.

Conspiracy theories about COVID-19 also opened up new opportunities for other purveyors of disinformation. Supporters of QAnon, once considered a fringe movement confined to message boards like 4chan, took an early interest in the pandemic, which seemed almost perfectly tailored to a cult obsessed with a supposed cabal of devil-worshiping global elites.

And while we don’t know exactly what drew coronavirus conspiracy theorists to QAnon, there’s ample evidence of overlap between the two groups. Interest in QAnon spiked around the same time as logic-defying conspiracies about 5G and COVID-19. QAnon supporters also gained a foothold in other conspiracy-oriented communities, like those for anti-vaxxers, and were often boosted by Facebook’s own recommendation algorithms. By the time the social network finally banned QAnon in October, its loose network of conspiracy theories had reached millions.

Laying groundwork for fact-checking Trump

But even with some pretty big stumbles, the pandemic seems to have provided a useful framework for platforms to fight other sources of viral misinformation.

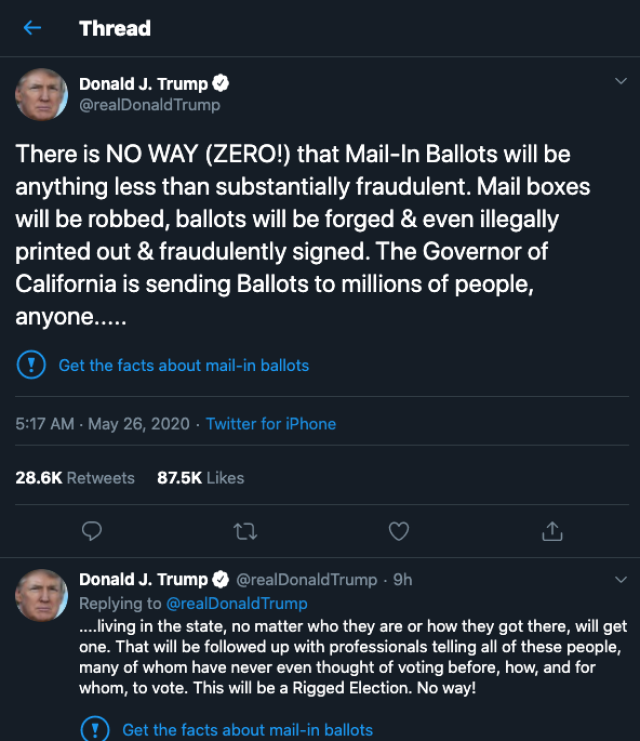

In May, just as Twitter was ramping up its work to combat COVID-19 conspiracies, the company took another, previously unheard-of step: fact-checking Donald Trump. In response to a tweet about “fraudulent” mail-in ballots, Twitter added a label urging users to “get the facts” that linked to a Twitter Moment, which described the claim as false and pointed to news stories from mainstream news outlets like CNN and The Washington Post.

Again, it’s easy to take this for granted now. The company has slapped labels on so many Trump tweets since that it’s no longer a remarkable occurrence. But it was a step Twitter had resisted for years, despite thousands of false claims from the president.

Crucially, the company cited its coronavirus misinformation policies when talking about its decision to fact check the president. “This decision is in line with the approach we shared earlier this month,” a Twitter spokesperson said at the time, referring to a blog post that was primarily about how it applied labels to false and misleading claims about the coronavirus.

But a close reading reveals how the company viewed its coronavirus labels as something that could be applied in other contexts, too. “Moving forward, we may use these labels and warning messages to provide additional explanations or clarifications in situations where the risks of harm associated with a Tweet are less severe but where people may still be confused or misled by the content,” the company wrote.

Another Twitter spokesperson was even more explicit, telling Politico that “COVID was a game changer” for the company because it created tools necessary to implement fact-checking and add “context” to misleading tweets. As Politico explained:

The coronavirus lesson for Twitter was that the company’s trust and safety team, which is charged with taking action on inappropriate posts, could selectively challenge tweets that don’t violate its stated rules — the black-and-white laws of Twitter that get tweets deleted for specific behaviors like encouraging violence — but that wade into grayer territory, whereby misleading or confusing people they can cause real-world harm. In those cases, the supposed worst-of-the-worst get tagged with additional context from around the web, summarized by a specialized Twitter content team.

Not everyone in the industry approved of Twitter’s approach. Zuckerberg, who attended a private dinner with Trump in 2019, disagreed with Twitter’s decision. “I believe strongly that Facebook shouldn’t be the arbiter of truth of everything that people say online,” he told Fox News. “I think in general private companies, or especially these platform companies, shouldn’t be in the position of doing that.”

As much as he disagreed, Twitter’s actions created new pressure for Zuckerberg, particularly after Twitter labeled a now-infamous Trump tweet for glorifying violence while Facebook declined to take any action on the same comments. Frustrated Facebook employees staged a virtual walkout, a rare public show of disapproval, over the company’s inaction. A massive advertiser boycott, organized by civil rights groups, followed a couple of weeks later. Zuckerberg relented: Facebook would use labels too (though not for Trump’s “looting and shooting” post that kicked off the protests).

Then, in October, Facebook did something it had for years tried to avoid: it removed a Trump post that broke its rules. The policy he had violated, though, wasn’t related to the election or glorification of violence but to COVID-19. Trump had shared a video of comments he had made in which he falsely claimed that children were “almost immune” from the virus. Trump finally found a line Facebook wasn’t willing to bend its rules to let him cross.

The pandemic was also a driving influence behind Facebook and Twitter’s extensive preparation for the 2020 election. With millions more voting by mail and a flood of disinformation about the validity of voting by mail (much of it emanating from the White House), the presidential election, like the pandemic, required an entirely different set of policies. And social media platforms spent weeks outlining the various measures they would take to prevent what election experts warned could be nightmare scenarios.

For Facebook, this included new limits on political ads, labels for any and all election-related posts and a “voting information center” that mirrored its coronavirus hub. Twitter, meanwhile, introduced new rules around unverified claims of “election rigging” and misinformation that could “prevent a peaceful transfer of power.”

As with the coronavirus pandemic, the companies calculated that the election required a different set of rules.

“Companies over the course of this year have defined two exceptional events: One is ongoing, the pandemic, and the other is sort of discrete in duration, the election,” says Paul Barrett, the deputy director of NYU’s Stern Center for Business and Human Rights. “And they decided internally, we’re going to take special measures in connection with both of those things.”

Another question is how much, if at all, those “special measures” taken in 2020 will influence policies going forward. The election is over, and with the first wave of coronavirus vaccines approaching, the threat posed by COVID-19 could eventually become less urgent too.

“The steps they’ve taken in connection with both events — the pandemic and election — have been promising,” Barrett says. “But the question is, is whether that diligence and that vigor that they’ve shown will carry over to normal times.”

The reckoning isn’t over

While the steps taken in the face of a global pandemic and an unprecedented presidential election might offer a glimmer of hope, neither event is the unequivocal success story the platforms may suggest. Misinformation and disinformation remain rampant across social media. And many experts say it will take much more than labels, fact checks and iterative policy tweaks to truly make a dent.

The election is over and Joe Biden’s victory is officially official, yet Facebook’s fact checkers are still debunking viral claims otherwise. Meanwhile, the coronavirus pandemic is set to reach devastating new records in the coming weeks and months. And with the arrival of coronavirus vaccines has also come a fresh wave of conspiracy theories, misinformation and anti-science grift. Companies like Facebook, which have long resisted taking a tough stance on anti-vax zealots, are again being forced to weigh policies they may have once tried to avoid.

So while the events of 2020 may have forced uncomfortable and unprecedented decisions, the coming year will likely bring its own moment(s) of reckoning.
