How generative AI got cast in its first Hollywood movie

Anyone who’s spent any time on TikTok is familiar with DeepTomCruise, i.e. the deepfake videos of the Mission: Impossible star doing everything from the Wednesday dance to discovering there’s bubble gum in the center of a lollipop. Created by AI tech wizard Chris Umé and performer Miles Fisher (who bears an uncanny resemblance to Cruise even without the help of technology), DeepTomCruise went viral when it debuted two years ago, racking up hundreds of millions of views.

Given the sophistication of the deepfake technology behind the parody videos, it was perhaps an obvious question: What if the technology from Metaphysic, the company that created the younger, shinier Cruise, were put to use in a feature film, making a whole bevy of actors look much younger (or older) than they actually are in a way that doesn’t feel like clunky special effects?

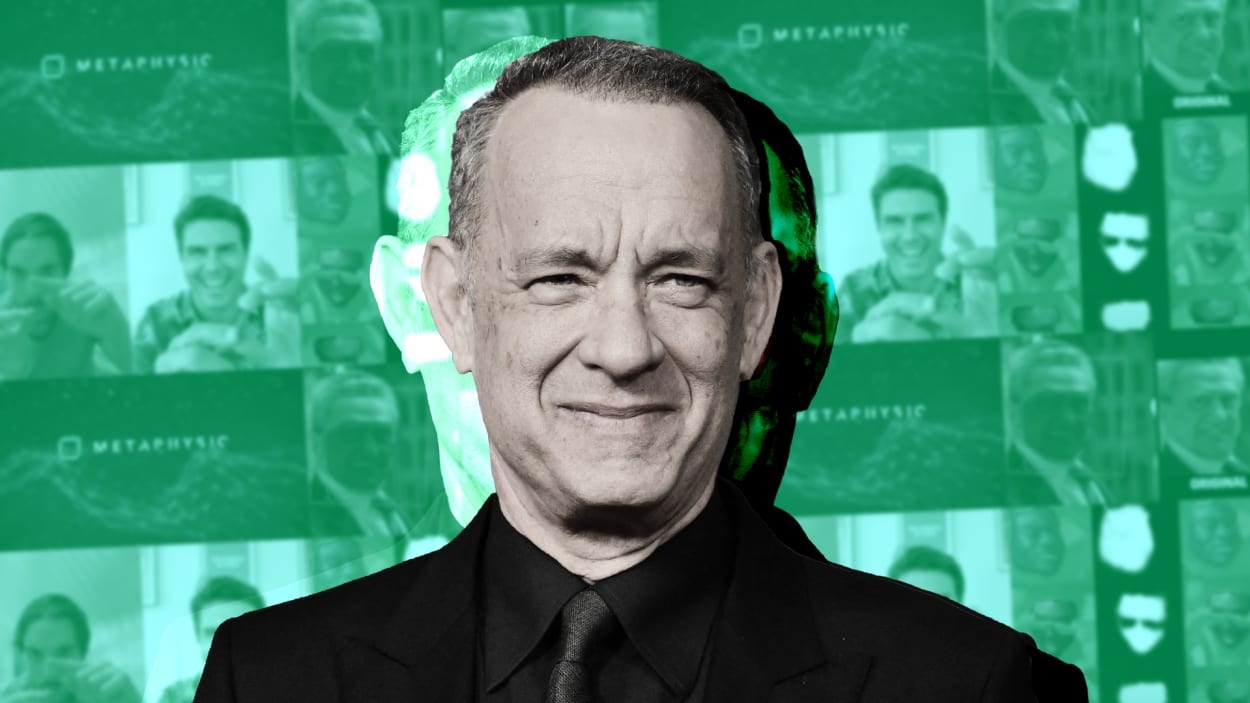

The answer to that hypothetical musing is coming out later this year in the form of Here, a film starring Tom Hanks and based on the graphic novel by Richard McGuire, which tells the story of a particular room and the people and events that have passed through it across time. In the film, which is directed by Robert Zemeckis and produced by Miramax, Hanks plays a man at various ages in his life (in his twenties, thirties, fifties, and eighties), as do the other actors in the film, including Robin Wright and Paul Bettany.

According to Metaphysic cofounder and CEO Tom Graham, Zemeckis’ team “rang us up one day” and asked about working together. They’d seen the Tom Cruise videos, as well as Metaphysic’s work on America’s Got Talent, where the company used AI to create a de-aged Simon Cowell who performed on the show and to bring back Elvis to compete, and they wanted to know what Metaphysic could do for Here.

Quite a bit, as it turns out. The film marks the first time that AI has played such a central role in a Hollywood production, in effect becoming the defining aesthetic of the film, albeit one that viewers will hardly notice. The key, Graham says, was “finding a way to use the technology to preserve Tom Hanks’ performance, while making a 20-year-old version on top of him. If you do that using 3D or VFX or CGI (think Benjamin Button or Gemini Man), you kind of lose the performance most of the time, or it looks uncanny or weird. We had to solve those problems.”

VFX at its most sophisticated is also extraordinarily expensive. Graham says that using AI in a film reduces the cost of special effects by one-fifth.

To break down how it all works in basic terms: Metaphysic built AI models not only for each actor but for each actor at specific ages. Each model, Graham says, was then “trained” on huge data sets of filmed footage of that actor. So in the case of the model for Hanks’ younger self, “the AI model understands how a younger version of Tom Hanks looks,” he says, “and how he might act under certain circumstances.”

Then, after Hanks films a scene as his current self, the model creates a younger version of his face and other body parts, which is layered onto the footage. “It knows how to stitch younger Tom Hanks on top of current day Tom Hanks based on the expression he has in that particular shot,” Graham says. “So it does that over and over and over again. The AI is so sophisticated that it builds this flawless, amazing image that’s like looking into a mirror through time.”

The process also works in real time. Metaphysic refined its technology so that the de-aging (or aging) could be applied even as Zemeckis was filming, letting the director and the actors watch the AI-ified performance on screens in between shots.

The beauty, Graham says, is that the performance is ultimately “pure Tom Hanks. It’s how he’s acting, how he’s moving. The model is also pure Tom Hanks, just from a different time.”

Graham says Hollywood is a “fascinating” piece of Metaphysic’s future business. The company also has a partnership with the talent agency CAA to develop tools that help CAA’s clients better “own and control the data sets around their faces, their digital performances.” But Graham and his team are also focused on bringing AI to non-celebrities and on shifting the narrative around deepfake technology, which is often associated with more nefarious projects like pornography and political disinformation.

“This technology has a tremendous potential to harm individual people and to render negative outcomes for society as a whole,” Graham admits. “So when we sat down many years ago and thought, What can we do? [We decided that] we can work with governments and regulators to help educate them to move legal paradigms toward protecting people and offering remedies. We can also educate the public—that was the most immediate thing we could do—through creating content. And we can work with our industry and other technologists and think about ways we can develop this tech in a responsible framework.”

One of the more accessible ways that Metaphysic is doing this is through Every Anyone, the company’s consumer-facing platform, which allows people to create their own AI avatars or identities and then control the intellectual property. DeepTomCruise, however, remains the company’s flagship project. “It was really a turning point, an inflection point for AI,” Graham says. “It was the first large-scale experiment where literally hundreds of millions of people looked at this computer-generated AI content and thought: Wow, that’s real.”
