How Google’s Music-Making AI Learns From Human Minds At Festivals

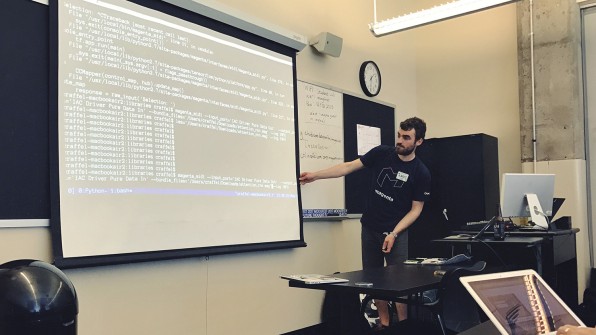

I’m sitting in a packed classroom when the weird noises start whirring around me. In the front of the room, lines of code pour down the screen while beeps and bloops start chiming from the laptop speakers on each desk.

Some of the sounds are reminiscent of a vintage synthesizer, but what’s happening here is much more modern. A powerful neural network is helping to create these tones in the hopes of offering musicians a cutting-edge new tool for their creative arsenal. Over time, the machines will learn to create music themselves.

The classroom is being led by Adam Roberts and Colin Raffel, two Google engineers working on the Magenta project within the rapidly expanding Google Brain artificial intelligence lab. First unveiled to the public last May at the Moogfest music and technology festival in Durham, NC, Magenta is focused on teaching machines to understand and generate music and building tools that supplement human creativity with the horsepower of Google’s machine learning technology. Today, almost exactly one year later, Roberts and Raffel are back at Moogfest showing software engineers and musicians how to get Magenta’s latest tools up and running on their computers so they can start playing around and, they hope, contributing code and ideas to the open source project.

“The goal of the project is to interface with the outside world, especially creators,” says Roberts. “We all have some artistic abilities in some sense, but we don’t consider ourselves artists. We’re trained as researchers and software engineers.”

A Different Knob To Play With

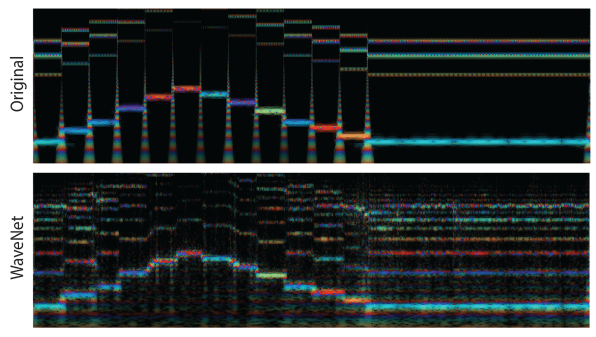

This workshop, focused on Magenta’s MIDI-based musical sequence generator API, was just one of several events Magenta engineers hosted at Moogfest this year. Throughout the four-day festival, they could be seen giving workshops and presenting demos of Magenta’s latest playable interfaces, like the web-based NSynth “neural synthesizer,” which uses neural networks to mathematically blend existing sounds into entirely new ones.

“With synthesizers, you’re giving people knobs and oscillators that can combine to create a sound,” says Roberts. “This is just a different knob to play with.”
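To make the idea of mathematically blending sounds concrete, here is a minimal sketch, not Magenta's or NSynth's actual code. It assumes each sound has already been turned into a list of numbers (an "embedding") by some encoder, and that blending means mixing those numbers in a chosen proportion. The function name `blend_embeddings` and the sample values are hypothetical, chosen only for illustration.

```python
from typing import List


def blend_embeddings(a: List[float], b: List[float], mix: float) -> List[float]:
    """Linearly interpolate between two equal-length embedding vectors.

    mix = 0.0 returns a, mix = 1.0 returns b, values in between blend them.
    """
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    if not 0.0 <= mix <= 1.0:
        raise ValueError("mix must be between 0 and 1")
    return [(1.0 - mix) * x + mix * y for x, y in zip(a, b)]


# Hypothetical embeddings standing in for an encoder's output (assumed values).
flute = [0.0, 1.0, 2.0]
bass = [4.0, 3.0, 2.0]

# The "knob": a single number that sets how much of each sound goes into the mix.
halfway = blend_embeddings(flute, bass, 0.5)
print(halfway)
```

In a real system, a decoder network would then turn the blended vector back into audio; that step is omitted here.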

The Magenta team’s extensive presence at Moogfest is not just a byproduct of their nerdy enthusiasm for all things tech and music. It’s a deliberate strategy. As an open source project (its code base can be found on GitHub), Magenta needs software developers to poke and prod at its code, critique its interface designs, and generally help push the project forward. Moogfest’s bleeding-edge focus on the intersection of music and technology attracts a high concentration of exactly the type of people Magenta needs. It’s a total nerdfest, in other words. By hosting public workshops at a gathering like Moogfest, Magenta aims to ensure that its toolkit is installed on more laptops belonging to people who are among the best equipped to tinker with it and stretch its limits.

It’s also helpful for Google engineers to be onsite for these types of events, because as Roberts admits, the user interfaces aren’t exactly polished. “We’re definitely not a place where you can sit down, open something up, and just start playing around with it,” he says. “There is a lot of overhead and you have to know how to use the command line and all that stuff.”

Workshops like this one let Roberts and Raffel walk coders through the process of installing the tools needed to use Magenta. Much of the work happens on the command line, though some of it runs through applications like Pure Data. In addition to user-friendly interfaces like the NSynth web app, Magenta coders do their best to build a bridge from Google’s AI infrastructure to more familiar music-creation tools like Ableton Live and Logic Pro. The team’s work strikes a balance between research mode (they just had four research papers accepted for the next International Conference on Machine Learning, which is no small feat) and build-and-design mode. As a result, the user-friendly polish is sometimes slow to arrive.

Watching Creatives Use AI

Once everyone in the room is up and running with the software, Roberts and Raffel start to get something very valuable in return: critical feedback. Workshop attendees, who vary in their technical prowess, can quickly identify the pain points and imperfections of Magenta’s tools. This is insight Google’s engineers wouldn’t necessarily get from GitHub and other online communities. They can also observe firsthand how people use the code and interfaces, sometimes in unexpected ways.

“Whenever you create something, you have some idea of ‘This is how I think I would use it,’” says Roberts. “But all great things that people have invented music-wise, like the electric guitar or the 808, were invented for one purpose and then totally taken by creative people and used in a different way.”

The project has come a long way since last May, but Google Magenta is still barely an infant in the grand scheme of things. Magenta can auto-generate new sounds and short melodies, for instance, but doesn’t know much about the larger structure of songs. This is one of a few different territories the team hopes to push into next, both through academic-style research and by building creative tools.

“I think it’s a neat technical challenge: Can you actually understand this long-term structure of music?” says Roberts. “And then once you do, can you give people the tools to then fiddle with the parameters of the long-term structure to come up with new concepts that maybe haven’t been explored before?”

A hypothetical real-world example might be a plugin or a button for GarageBand or Ableton that lets songwriters use artificial intelligence to auto-generate a few options for the melodic structure of the song they’re working on. Or perhaps a well-trained neural network could help create ambient “generative” music that never ends, an idea already explored through less technologically sophisticated means by musician Brian Eno. Such prospects may spark chilling, dystopian thoughts among some artists, but Roberts and his colleagues insist the goal is to craft tools that supplement human creativity, not replace it.

More Work To Do

“I don’t have any expectation that anybody is going to sit in their armchair and listen to symphonies written by computers,” says Doug Eck, who leads the Magenta team at Google. But in the future, AI could be used to mimic the social, collaborative nature of playing music.

“If you’re a musician and you play with other musicians, [this is like] having something at the table computationally that can function as a kind of musician with you,” says Eck. “But it doesn’t mean you’d want to listen to its output all by itself.”

We’re likely still years away from plug-and-play robo-companions for musicians, although with the rapid pace of development in AI, who knows? In the meantime, Magenta has a lot more work to do. For starters, Roberts admits, they could build more intuitive interfaces and write cleaner code that’s easier to work with. They’re also continuing on the festival circuit, bringing Magenta’s tools and code to the Sonar festival in Barcelona in June.

They also desperately need more data. One thing these workshops and demos don’t do is train the underlying machine learning models, at least not directly. For that, Magenta needs more sources of raw data about music. Roberts’s fantasy about teaching machines to understand and create longer song structures, for example, would require a mountain of intelligence from the annals of written music. Earlier Google A.I. art experiments, like the bizarre Deep Dream image generator, were relatively easy to pull off because of the wealth of image data that’s readily available online. Music is a much more complex form of media than two-dimensional JPEGs, and thus finding meaningful and machine-readable sources of data remains a challenge.

One additional advantage of having a presence at conferences and festivals is that they offer leads on potential data sources. Moogfest is teeming with the sort of people who build MIDI datasets or otherwise find useful ways to compile and play with music-related data. Many of them, Roberts says, are eager to find ways to collaborate with Google’s artificial musical brain.

“These types of conversations are going to lead to our ability to have more data,” says Roberts. “Not just for ourselves. This is meant to be a collaborative project internally and externally. So, these conversations are useful.”

Google’s year-long quest to teach computers to make music is humming along—but the robots have a lot to learn.

Fast Company
