How Machine-Learning AI Is Going To Make Your Phone Even Smarter

We all know that our smartphones are as powerful as desktop computers from a few years ago, and even supercomputers from a few decades ago—and now they’re on the verge of teaching themselves to become even smarter and more competent. Today, image-recognition AI company Clarifai debuted new software that allows mobile apps to do machine learning—the actual training of models—right on smartphones, going back to at least the iPhone 5, with no cloud server farm required.

Understanding the significance of this development requires us to unpack some jargon. Tech companies frequently talk about apps that use machine learning—showing enough images or other data to an artificial intelligence program until it starts to discern important patterns, such as what a hamburger patty and bun look like. That’s how apps like Dog Breed Identifier or Food Calorie Counter, for instance, can identify what you point the phone’s camera at.

But these apps aren’t learning on the phone what, say, a Yorkie looks like. That process usually happens at a powerful server farm, often on racks of graphics processing units (GPUs) such as Nvidia’s Tesla P100, which develop a model of things like the identifying characteristics of a Yorkie. The apps on the phone then apply that model to the particular dog in front of the camera, a process called inference. Inference is processor-intensive, but not nearly as intensive as training a model.
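To make the difference between training and inference concrete, here is a toy sketch in Python. It is not how Clarifai, Apple, or Google implement anything: it is a tiny logistic-regression classifier over two made-up features (the hypothetical "fluffiness" and "size" scores stand in for what a real model would extract from pixels). Training loops over every labeled example many times; inference is a single weighted sum for one new input.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def train(examples, epochs=200, lr=0.5):
    """Training: repeatedly nudge the weights using every labeled example."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    updates = 0
    for _ in range(epochs):                    # many passes over the data...
        for features, label in examples:       # ...touching every example each time
            z = sum(w * x for w, x in zip(weights, features)) + bias
            error = sigmoid(z) - label         # gradient of log-loss with respect to z
            weights = [w - lr * error * x for w, x in zip(weights, features)]
            bias -= lr * error
            updates += 1
    return (weights, bias), updates

def infer(model, features):
    """Inference: one pass of the already-trained weights over a new input."""
    weights, bias = model
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)

# Hypothetical features: [fluffiness, size]; label 1 = Yorkie, 0 = not a Yorkie.
data = [([0.9, 0.2], 1), ([0.8, 0.1], 1), ([0.85, 0.25], 1),
        ([0.2, 0.9], 0), ([0.1, 0.7], 0), ([0.3, 0.8], 0)]

model, updates = train(data)
print(updates)                      # 1200 weight updates (200 epochs x 6 examples)
print(infer(model, [0.85, 0.15]))   # above 0.5, so classified as a Yorkie
```

Even in this miniature version, training costs 1,200 update steps while classifying a new dog costs one; with real image models and millions of parameters, that gap is why training has traditionally been left to server farms.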


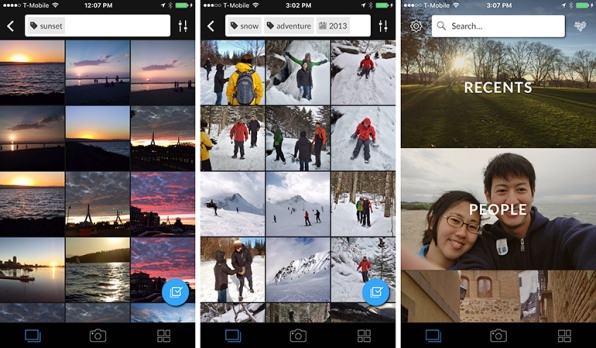

Very few apps do the actual training on phones. Apple’s Photos app is a prime example, with its limited ability to learn that, for instance, a particular face is yours or your friend’s. Clarifai introduced an app called Forevery in 2015 with similar capabilities. Apple’s upcoming iOS 11 includes a framework called Core ML that lets developers add inference capability, but not training, to their apps. Google has taken a similar step on Android, announcing TensorFlow Lite for Android developers.

But with today’s release of Mobile SDK (software development kit), Clarifai is letting developers build training on aspects of images and videos into any app. Clarifai’s CEO Matthew Zeiler explained the difference to me in an email: “You can train faces in . . . Apple Photos, but that’s it. With Clarifai’s Mobile SDK, we allow developers to train models on any set of concepts in their own apps (for example, a Lamborghini from a Ferrari, or a frappuccino from a latte, etc.) completely on device.”
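Clarifai hasn’t said how its Mobile SDK trains on device, so the following is only a hypothetical illustration of the idea in Zeiler’s quote: an app learning arbitrary, user-defined concepts. It assumes each photo has already been turned into a short list of numbers (an "embedding", for example from a pre-trained network shipped with the app) and learns each concept as the average of its examples, a simple nearest-centroid classifier. `ConceptTrainer`, `add_example`, and the three-number embeddings are all made up for this sketch.

```python
import math
from collections import defaultdict

class ConceptTrainer:
    """Hypothetical on-device trainer: one running-mean centroid per concept."""

    def __init__(self, dim: int):
        self.dim = dim
        self.sums = defaultdict(lambda: [0.0] * dim)  # per-concept sum of embeddings
        self.counts = defaultdict(int)                # per-concept example count

    def add_example(self, concept: str, embedding: list[float]) -> None:
        # Training step: fold one labeled example into its concept's running totals.
        if len(embedding) != self.dim:
            raise ValueError("embedding has the wrong dimension")
        total = self.sums[concept]
        for i, x in enumerate(embedding):
            total[i] += x
        self.counts[concept] += 1

    def centroid(self, concept: str) -> list[float]:
        n = self.counts[concept]
        return [s / n for s in self.sums[concept]]

    def predict(self, embedding: list[float]) -> str:
        # Inference: return the concept whose centroid is nearest (Euclidean distance).
        return min(self.counts, key=lambda c: math.dist(embedding, self.centroid(c)))

# Made-up embeddings for photos the user has labeled on the phone.
trainer = ConceptTrainer(dim=3)
trainer.add_example("lamborghini", [0.9, 0.1, 0.3])
trainer.add_example("lamborghini", [0.8, 0.2, 0.4])
trainer.add_example("ferrari", [0.2, 0.9, 0.5])
trainer.add_example("ferrari", [0.1, 0.8, 0.6])
print(trainer.predict([0.85, 0.15, 0.35]))  # lamborghini
```

Because each concept is just a running sum and a count, teaching the app a new concept such as "latte" later takes a few `add_example` calls and leaves the existing concepts untouched, which keeps this style of learning cheap enough to run without a server.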

Clarifai piloted the technology with i-Nside, which makes smartphone-attached medical devices. Along with its endoscopic attachment for examining patients’ ears, i-Nside created an app that uses Clarifai’s machine learning to train a model of ear diseases found in patients. (The finished model will then be used for diagnosis.) Doctors can use the app to train models in remote parts of Africa, South America, and South Asia that lack good internet access, so training has to happen on the phone.

Clarifai is releasing a preview version of its software for iOS today, with an Android version to come soon. Zeiler says he’s gotten the Mobile SDK to work on devices as far back as an iPhone 5. “We hope to test the limits to see if we can support earlier generations,” he tells me.

Fast Company