How tiny startup teams handle big privacy issues

Privacy headlines often focus on big tech behemoths, and for good reason. Last year alone, the Federal Trade Commission imposed a $5 billion civil penalty on Facebook and reached a $170 million settlement with Google and YouTube over alleged violations of the Children’s Online Privacy Protection Act (COPPA). These companies have contributed to waves of identity theft, social stigma, and barriers to housing and jobs for the victims of their data breaches.

This year, California’s state privacy law, the California Consumer Privacy Act (CCPA), went into effect, and companies will be spending big money to make sure they are on the right side of the law: CCPA cost estimates suggest companies will spend between $50,000 and $55 billion to come into compliance. For the European Union’s General Data Protection Regulation (GDPR), which went into effect in 2018, 88% of surveyed companies in the U.S. reported spending more than $1 million, according to a PwC survey.

Under both GDPR and CCPA, policymakers created thresholds that exempt firms from certain compliance activities if they fall below a given annual revenue or size. But the question remains: How might small companies without big budgets or robust legal teams begin to digest the complexities of policy compliance?

I recently spoke with four resourceful, early-stage social impact companies affiliated with MIT Solve that handle sensitive data, such as child developmental information or maps. These companies highlighted three common themes when discussing the individual considerations of their projects: user needs, legal compliance, and privacy culture.

Privacy, defined as the ability to control or limit access to personal information, is an expectation for end users. All the founders pointed to contextualized, often irreversible harms that could arise through data collection.

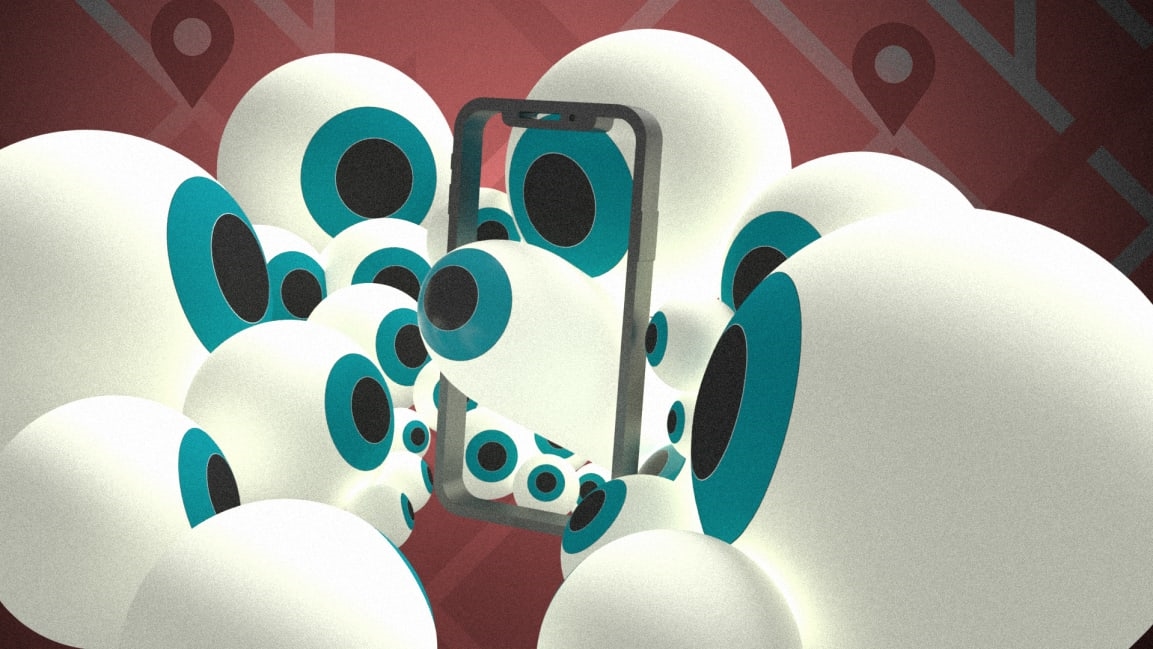

“We’ve had several meetings thinking about the risks and responsibilities that come with owning and managing the kind of data we are collecting,” said Kamil Shafiq, cofounder of Poket, a mobile app that maps previously unmapped, offline merchants through crowdsourcing in countries such as Nigeria. “It’s important to take a very careful look at both the planned and unplanned use cases for things like location data.” These risk assessments influence their company’s product design, reducing the potential for the compromise of information through data breaches and cyberattacks.

Sindhuja Jeyabal, cofounder of Dost Education, a mobile platform that promotes parent engagement in early childhood development, says its users, currently illiterate women in India with children under six, need to understand that their data is safe before they can reliably use the platform. “Revealing a child is behind with educational milestones [could lead to] further educational displacement or bias against the child,” Jeyabal says. This fear can prevent users from providing accurate information to the company. So, the team trains staff members doing fieldwork to practice good habits for global data consent and collection.

Meanwhile, understanding legal requirements is an ongoing challenge, as privacy laws differ by region and culture. Even the interpretation of a state-level policy such as CCPA differs depending on the company or lawyer asked. Covering your bases is best. For instance, Poket’s founders are natives of Toronto and are working with local legal experts in both Lagos and Toronto to review their privacy policy and terms of service and make sure they comply with both regional and national laws.

Similarly, health-tech company Neopenda, which makes vital-signs monitors for infants in developing countries, looks to broader privacy standards such as GDPR and HIPAA to ensure newborn patients are safe through data protection. Its founder, Sona Shah, says the company is getting a European certification mark to indicate conformity with international health, safety, and environmental protection standards.

Clearly, legal aspects cannot be overlooked—but privacy extends beyond product architecture or regulation. Companies must also focus on building privacy into company culture and habits.

One company I met with articulated a grassroots approach. “We have a strong sense of tribal farmer empowerment,” said Cherilyn Yazzie, founder of Coffee Pot Farms, an Arizona-based food producer that supports nutrition and access to healthy food in the Navajo Nation. “How do [Native leaders] teach individuals within the tribe in order to allow them to thrive, instead of hiring [consultants and researchers] from the outside?” For Native communities and sovereign nations that have experienced decades of displacement and systemic inequality, data privacy is central to the company’s efforts to create sustainable, healthy communities.

With these shared understandings, what can be done to help small companies succeed with their privacy and product visions?

Some articulate a vision for data residencies, where data is managed based on regional expectations and laws. Others have compiled checklists, created compliance policies, and simply hired lawyers.

Perhaps the headlines of last year are a wake-up call. Small teams should plan for risk, instill a strong culture of privacy, and seek out resources to create privacy-respecting products that reflect the cultures, laws, and user expectations in their markets. These innovative practices can serve as case studies for how to continue building the privacy-protecting products and services we hope to see everywhere.

Beyond a wake-up call, there is an opportunity. Privacy strategies can take a more collaborative, integrated approach in order to avoid endless compliance checklists.

Each founder I spoke with expressed a need to cultivate more community conversations on privacy practices. “Sometimes it feels like we’re making key decisions about data privacy/security for emerging markets in a silo, and I would love to share some of our challenges and learnings within a community,” said Shafiq.

At Coffee Pot Farms, Yazzie said they “looked to Standing Rock to see what policies have been put in place for training with data collection and sharing . . . but there is no substitute for human contact for help” when it comes to learning how to manage sensitive data about tribal culture.

There’s never a moment where a company can check the theoretical box of “now we’re privacy-compliant.” But if we take cues from what smaller companies are doing, we can find inspiration to make data privacy less about compliance and more about building products that meet user expectations and contexts.

Stephanie Thien Hang Nguyen is a research scientist at MIT Media Lab focusing on data privacy, design, and tech policies that impact marginalized populations. She previously led privacy and user experience design projects with the National Institutes of Health and Johns Hopkins’ Precision Medicine team. Find her on Twitter @stephtngu.
