How to easily trick OpenAI’s genius new ChatGPT

OpenAI, the rock-star artificial intelligence laboratory that was founded by Elon Musk, Sam Altman, and others to explore what kinds of existential threats smart robots could bring to the human race, has already achieved massive feats in the past few years.

It has developed a series of natural language models called “GPTs,” short for “Generative Pre-trained Transformers,” that can interpret and generate complex, coherent text. It then channeled that power into DALL-E, a digital image generator that creates original art from prompts asking it to, for example, emulate a famous painter or vividly portray an emotion.

Now, it’s adapted its technology into a classic callback to the early-2000s internet: a chatbot, which has captivated many corners of the internet over the last few days and already has over 1 million users.

With ChatGPT, one can have deep conversations with GPT on such topics as modern European politics, Pokémon character types, or, true to OpenAI’s roots of probing existential threats, whether an AI could ever replace the job of, say, a journalist. (Its reply: “To answer your question directly: It is not likely that you, as a journalist, will become obsolete in the near future. While the media industry is undergoing significant changes, the demand for high-quality journalism has not disappeared, and journalists continue to play a crucial role in society.” Author’s note: The way GPT near-perfectly mimics human pandering here is eerie, and ironically, the opposite of comforting.)

But crucially, ChatGPT is not perfect. As genius as its answers can seem, the technology can still be easily thwarted in many ways.

Here, a short list of times when it might just fail you:

If you ask about an esoteric topic

As one engineer at Google—whose DeepMind is a competitor to OpenAI—noted on social media, when ChatGPT was asked to parse the differences between various types of architecture for computer memory, it spun out a sophisticated explanation . . . which was the exact opposite of the truth.

“This is a good reminder of why you should NOT just trust AI,” the engineer wrote. “. . . machine learning has limits. Don’t assume miracles.”

If you give it tasks that require factual accuracy, such as reporting the news

In fact, one of ChatGPT’s biggest problems is that it can deliver inaccurate information in dangerously authoritative wording. That means it still has a long way to go before it can reliably do the job of a search engine. And with misinformation already a major issue today, imagine the risks if GPT were responsible for official news reports.

When Fast Company asked ChatGPT to write up a quarterly earnings story for Tesla, it spit back a smoothly worded article free of grammatical errors or verbal confusion, but it also plugged in a random set of numbers that did not correspond to any real Tesla report. (Author’s note: Phew! Safe for now.)

If you want it to unwind bias

When a UC Berkeley professor asked ChatGPT to write Python code that checks whether someone would be a good scientist based on race and gender, it predictably produced code concluding that good scientists are white and male.

Asked again, it altered its criteria to include white and Asian males.

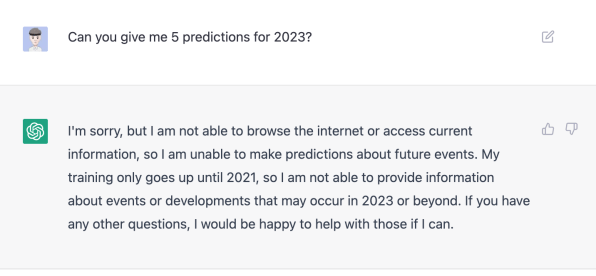

If you need the very latest data

Finally, ChatGPT’s limits do exist, right around 2021. According to the chatbot itself, it was fed data only up to that year. (Labs like OpenAI train their AI models on vast quantities of existing information.) Ironically, you might say that this incredibly cutting-edge, futuristic machine is already outdated.

So if you want an analysis of various countries’ COVID-19 policies in 2020, ChatGPT is game. But if you want to know the weather right now? Stick with Google for that.
