How to stop worrying about Google updates

Google is providing less information about algorithm updates these days, leaving SEOs scrambling for answers every time they experience a huge drop in traffic. But columnist Kristine Schachinger believes that all this panic is unnecessary. Read on to learn why.

As SEOs, we tend to obsess over changes to the organic results. It usually works like this:

You get to your computer in the morning. Ready to start work, you take a quick look at Facebook to check what you have missed. You run across someone asking if anyone saw changes last night. They’ll typically also note that there was “a lot of activity.”

“Activity” means that SEOs who follow changes to search rankings saw some fluctuations in a short period of time. If there is “a lot of activity,” that means there were large fluctuations in many websites’ rankings in a vertical or across verticals. Sometimes these results are positive, but mostly they are not. Big updates can often mean big drops in traffic.

So you quickly go check your Analytics and Search Console. Phew! The “activity” didn’t impact you — this time. But what about the next one?

This is what happens when Google rolls out large-scale changes to its search algorithms, and what these rollouts contain has been the topic of many articles, tweets and Facebook posts over the years.

What if I told you, though, that while it is very important to know what Google’s algorithms contain, you do not really need to know granular details about every update to keep your site in the black?

No-name rollouts

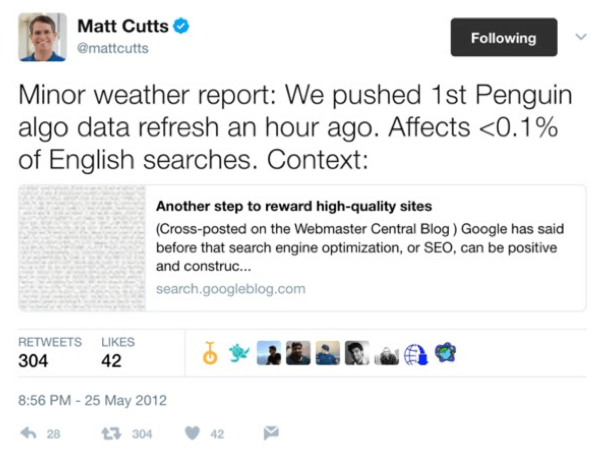

When former Head of Web Spam Matt Cutts was the point of communication between SEOs and Google, he would confirm updates, and either he or others in the industry would give each update a name. This was very helpful when you had to identify why your site went belly up. Knowing what the update was targeting, and why, made it much easier to diagnose the issues. However, Google does not share that information much anymore. It is now much more tight-lipped about what changes have been rolled out and why.

Sure, Google will confirm the big stuff, like the last Penguin update, when it went real-time. But how many times have we seen an official announcement of a Panda update since it became part of the core ranking algorithm? The answer is none, and Panda was folded into the core algorithm over 18 months ago.

The ‘Fred’ factor

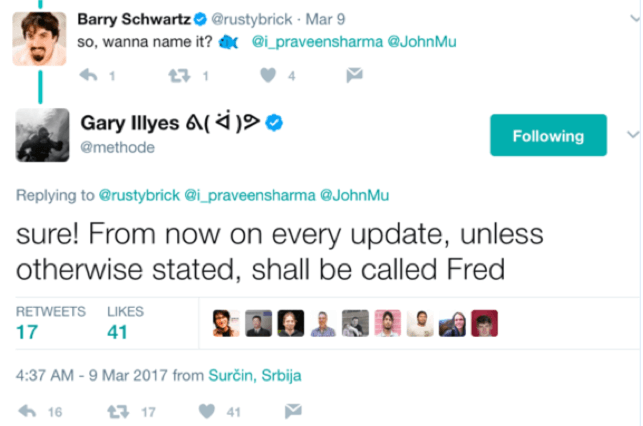

As for all the other unidentified changes SEOs notice but Google will not confirm? Those have just been given the name “Fred.”

Fred, for those who don’t know, is just a silly name that came out of an exchange between Google Webmaster Trends Analyst Gary Illyes and several SEOs on Twitter. Fred is meant to cover every “update” SEOs notice that Google does not confirm and/or name.

So, what’s an SEO or site owner to do? If your site suffers a downturn, how will you know what caused it? How do you know what to fix if Google won’t tell you what that update did? How can you make gains if you don’t know what Google wants from you? And even more importantly, how do you know how to protect your site if Google does not tell you what it is “penalizing” with its updates?

Working without a net

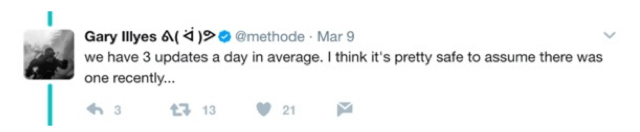

Today, we work in a post-update world. Google updates are rolling out all the time. According to Gary Illyes and John Mueller, these algorithms update almost every day, and usually several times a day, but Google doesn’t share that information with the community.

If they update all the time, how is it a post-update world?

Post-update world refers to a world where there is no official identifying/naming of algorithm changes, no confirmation that an update has been rolled out, and consequently, no information on when that rollout occurred. Basically, announced updates are becoming increasingly rare. Where Matt Cutts might have told us, “Hey, we are pushing Penguin today”…

… Illyes or Mueller might just say:

So, if you cannot get the information about updates and algorithm changes from Google, where do you go?

Technically, you can still go to Google to get most of that information — just more indirectly.

Falling off an analytics cliff

While Google is not telling you much about what they are doing these days with regard to algorithm updates, you still can wake up and find yourself at the bottom of an analytics cliff. When this happens, what do you do? Running to Twitter might get you some answers, but mostly you will just get confirmation that some unknown algorithm (“Fred”) likely ran.
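Before you go looking for answers, it helps to confirm that what you are seeing is a genuine cliff rather than ordinary day-to-day noise. The sketch below is a minimal, hypothetical example that assumes you have exported daily organic sessions from your analytics tool as a list of numbers. The function names, the seven-day trailing baseline and the 50 percent threshold are illustrative assumptions, not anything Google or any analytics product defines.

```python
from statistics import mean


def fractional_drop(daily_sessions, window=7):
    """Fractional drop of the most recent day versus the mean of the
    `window` days before it (0.7 means traffic fell 70 percent).
    Hypothetical helper; the window size is an assumption."""
    if len(daily_sessions) < window + 1:
        raise ValueError("need at least window + 1 days of data")
    baseline = mean(daily_sessions[-window - 1:-1])  # excludes the latest day
    if baseline == 0:
        return 0.0  # no prior traffic, so no measurable drop
    return (baseline - daily_sessions[-1]) / baseline


def looks_like_cliff(daily_sessions, window=7, threshold=0.5):
    """Hypothetical rule of thumb: flag drops larger than `threshold`."""
    return fractional_drop(daily_sessions, window) > threshold


# Illustrative numbers only, not real analytics data.
steady = [1000, 980, 1010, 995, 1005, 990, 1020, 970]
cliff = [1000, 980, 1010, 995, 1005, 990, 1020, 280]
print(looks_like_cliff(steady))  # False
print(looks_like_cliff(cliff))   # True
```

A trailing average is used so that one unusually good or bad day in the baseline does not dominate the comparison. Weekly seasonality can still trigger false alarms, so treat a flag as a prompt to look closer, not as proof of an algorithm hit.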

Outside of reading others’ thoughts on the update, what can we use to determine exactly how Google is defining a quality site experience?

Understanding the Google algorithms

A few years back, Google divided up most algorithm changes between on-page and off-page. There were content and over-optimization algorithms, and there were link algorithms. The real focus of all of these, however, was spam. Google became the search market leader in part by being better than its competitors at removing irrelevant and “spammy” content from its search results pages.

But today, these algorithms cover so much more. While Google still uses algorithms to remove or demote spam, they are additionally focused on surfacing better user experiences. As far back as 2012, Matt Cutts suggested that we change SEO from “Search Engine Optimization” to “Search Experience Optimization.” About 18 months later, Google released the Page Layout Update. This update was the first to use a rendered page to assess page layout issues, and it brought algorithmic penalties with it.

What do algorithm updates ‘cover’?

Most algorithm updates address issues that fall under the following categories (note that mobile and desktop are grouped together here):

- Link issues

- Technical problems

- Content quality

- User experience

But how do we know what rules our site violated when Google does not even confirm something happened? What good are categories if I don’t know what the rules are for those categories?

Let’s take a look at how we can evaluate these areas without Google telling us much about what changes occurred.

Link issues: It’s all about Penguin

One of the most scrutinized areas of organic SEO is, of course, links, and Penguin is the algorithm that evaluates those links.

It could be said that Penguin was one of the harshest and most brutal algorithm updates Google had ever released. With Penguin, if a site had a very spammy link profile, Google wouldn’t just devalue their links — they would devalue their site. So it often happened that a webmaster whose site had a spammy inbound link profile would find their whole site removed from the index (or dropped so far in rankings that it may as well have been removed). Many site owners had no idea until they walked in one day to a 70+ percent drop in traffic.

The site owner then had to make fixes, remove links, do disavows and wait. And wait. And wait until Penguin updated again. When it last refreshed before going real-time, two years had passed since the previous update. Without the update, your site could not fully (or sometimes even partially) recover its ranking losses.

September 2016: Real-time Penguin

In September 2016, everything changed: Google made Penguin part of its core algorithm. Penguin’s data now refreshes in real time, and it no longer impacts an entire website’s rankings by default. Thus, with this update, Penguin was no longer a site killer.

When Penguin runs now, it devalues only the links, not the site, meaning that rankings might be adjusted at the query, page or section level. It would be rare to come in and check your site in the morning to find it has fallen off an analytics cliff entirely. That could happen, but if your site’s links are that spammy, it is much more likely you would get a manual penalty.

When real-time is not real-time

Now, “real-time Penguin” does not mean literally real-time. Google still needs to recrawl your site once the link issues have been fixed, which could take weeks, depending on how often Google crawls your site. Still, this real-time update makes it much easier to fix your link profile if you determine that links are your issue (spammy links are typically very obvious).

Remember, all sites will likely have some bad links. After all, it is not natural for a site to have a perfect backlink profile. But when bad links make up a significant percentage of your inbound links (let’s say around 25-30 percent), you need to start looking with a critical eye towards fixing spammy links and/or anchor text. (A general rule of thumb is that if over 50 percent of your links or anchor text are spammy, you most likely have a link devaluation.)
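The rule of thumb above comes down to simple arithmetic on an audited link list. As a minimal sketch, assume you have already exported your backlinks and flagged the spammy ones yourself; the `Backlink` record, the `is_spammy` field, the function names and the example.com URLs are all hypothetical. The 25 and 50 percent bands mirror the rough heuristics in this article, not thresholds Google has published.

```python
from dataclasses import dataclass


@dataclass
class Backlink:
    source_url: str
    anchor_text: str
    is_spammy: bool  # set during your own manual audit (an assumption)


def spam_share(links):
    """Fraction of inbound links you have flagged as spammy."""
    if not links:
        return 0.0
    return sum(link.is_spammy for link in links) / len(links)


def anchor_share(links, money_anchors):
    """Fraction of links whose anchor text exactly matches a target keyword."""
    if not links:
        return 0.0
    targets = {a.lower() for a in money_anchors}
    return sum(link.anchor_text.lower() in targets for link in links) / len(links)


def assess_profile(links, money_anchors=(), review_at=0.25, devalued_at=0.50):
    """Map the worse of the two shares onto the rule-of-thumb bands."""
    worst = max(spam_share(links), anchor_share(links, money_anchors))
    if worst > devalued_at:
        return "likely devaluation"
    if worst >= review_at:
        return "review and clean up"
    return "normal noise"


# Hypothetical profile: 100 links, 30 flagged spammy, 60 using one exact-match anchor.
links = [
    Backlink(
        source_url=f"https://example.com/page{i}",
        anchor_text="cheap widgets" if i < 60 else "Acme Co",
        is_spammy=i < 30,
    )
    for i in range(100)
]
print(assess_profile(links))                                   # review and clean up
print(assess_profile(links, money_anchors={"cheap widgets"}))  # likely devaluation
```

Checking anchor text separately matters because a profile can look clean link by link while still leaning heavily on a single over-optimized phrase.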

So, identifying site issues related to links is fairly straightforward. Are your links good links? Do you have over-optimized anchor text? If you have a spammy link profile, you just need to fix the link issues — get the links removed where you can, disavow the links where you can’t, and work on replacing these spammy links with good ones. Once you’ve fixed the link issues, you just have to wait.

As mentioned above, it can take up to a few weeks to see a recovery. In the meantime, you need to review the other areas of your site to see if they are in line with what Google defines as a quality site. “But I know the problem is links!” you say. Well, you might be right — but a site can receive multiple devaluations. You always want to check “all the things!”

Technical, content and user experience issues

This is where we have so much less guesswork than when we are looking at a link issue. Why? Because Google has provided webmasters with a wealth of information about what they think makes a good site. Study what is in their documentation, come as close to the Google site ideal as possible, and you can be pretty sure you are in good standing with Google.

Where do you find this information?

Following are some resources you can use to get a solid idea of what Google is looking for when it comes to a website. These resources cover everything from SEO best practices to guidelines for judging the quality of site content:

- Search Engine Optimization Starter Guide — This is a basic outline of best practices for helping Google to crawl, index and understand your website content. Even if you are an experienced SEO, it never hurts to review the basics.

- Google Webmaster Guidelines — These are Google’s “rules of the road” for site owners and webmasters. Stay on the right side of the Webmaster Guidelines to avoid incurring a manual action.

- Google Quality Raters Guide — This is the guide Google gives its quality raters to help them evaluate the quality of search results; in other words, the pages users land on after clicking a listing on a search results page. Quality raters use this guide to determine what is and what is not a good page/site, and you can garner a lot of helpful insights from this content.

- Bonus: Search Engine Land’s Periodic Table of SEO Factors — This isn’t a Google resource, but it’s helpful nonetheless.

Almost anything and everything you need to know about creating a good site for Google is in these documents.

One note of caution, however: The resources above are only meant as guides and are not the be-all and end-all of SEO. For instance, if you only acquired links in the ways Google recommends, you would never have any. However, these documents will give you a good blueprint for making your site as compliant with the algorithms as possible.

If you use these as guides to help make site improvements, you can be fairly certain you will do well in Google. And furthermore, you will be fairly well protected from most negative algorithm shifts — even without knowing what algorithm is doing what today.

The secret? It is all about distance from perfect, a term coined by Ian Lurie of Portent. In an SEO context, the idea is that although we can never know exactly how Google’s algorithms work, we still know quite a lot about what Google considers to be a “perfect” site or web page, and by focusing on these elements, we can in turn improve our site performance.

So, when your site has suffered a negative downturn, consult the available resources and ask yourself, What line(s) did I cross? What line(s) did I not come close to?

If you can move your site toward the Google ideal, you can stop worrying about every algorithm update. Next time you wake up in the morning and everyone is posting about their losses, you can be fairly confident you will be able to check your metrics, see that nothing bad happened and move on with your day.

The resources listed above tell you what Google wants in a site. Read them. Study them. Know them.

Quality Rater’s Guide caveat

It is important to note that the Quality Rater’s Guide is (as it says) for Quality Raters, not search marketers. While it contains a great deal of information about how you can create a quality site, remember it is not a guide to SEO.

That being said, if you adhere to the quality guidelines contained therein, you are more likely to be shortening that distance to perfect. By understanding what Google considers to be a high- (or low-) quality page, you can create content that is sure to satisfy users and search engines alike — and avoid creating content that might lead to an algorithmic penalty.

Get busy reading!

It’s important to educate yourself on what Google is looking for in a website. And it’s a good idea to read up on the major algorithm updates throughout the search engine’s history to get an idea of what issues Google has tackled in the past, as this can provide some insight into where they might be headed next.

However, you don’t need to know what every “Fred” update did or didn’t do. The algorithms are going to target links and/or site quality. They want to eliminate spam and poor usability from their results. So make sure your site keeps its links in check and does not violate the rules listed in the documents above, and you will likely be okay.

Read them. Know them. Apply them. Review often. Repeat for future proofing and site success.

[Article on Search Engine Land.]

Some opinions expressed in this article may be those of a guest author and not necessarily Marketing Land. Staff authors are listed here.

