Hyundai Preps Vehicle ‘Personal Assistant’

by Tanya Gazdik, December 26, 2017

Hyundai Motor Co. is collaborating with SoundHound to develop a voice-enabled virtual assistant system.

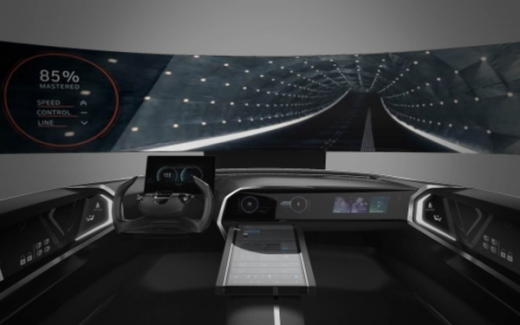

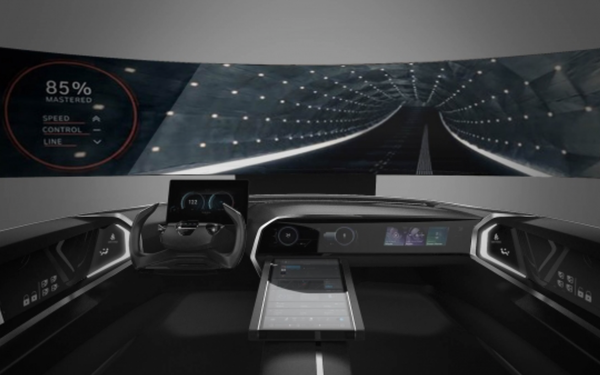

The automaker will unveil its “Intelligent Personal Agent” during CES 2018 in Las Vegas in January. It will be demonstrated via a connected car cockpit equipped with the feature.

The system is activated by the wake-up voice command: “Hi, Hyundai.” The assistant system will initially understand English only, but will expand to other major languages going forward.

Early next year, Hyundai plans to demonstrate a simplified version of the Intelligent Personal Agent in next-generation Fuel Cell Electric Vehicles (FCEVs) scheduled for test drives on South Korean public roads.

The system will appear in new models starting in 2019. It will let drivers use voice commands to carry out a range of operations and retrieve real-time data, capabilities that connected cars of the future will demand.

Silicon Valley-based SoundHound is the leading innovator in voice-enabled AI and conversational intelligence technologies.

The Intelligent Personal Agent is unique in that it acts as a proactive assistant system, predicting the driver’s needs and providing useful information. For instance, the system may give an early reminder of an upcoming meeting and suggest departure times that account for current traffic conditions.
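The departure-time suggestion comes down to simple arithmetic: subtract the expected travel time from the meeting start. The sketch below is purely illustrative and is not Hyundai's or SoundHound's implementation; the function name `suggest_departure`, the `buffer_minutes` margin and the sample figures are all assumptions, and the travel estimate is presumed to come from some traffic service that already accounts for current conditions.

```python
from datetime import datetime, timedelta


def suggest_departure(meeting_start, travel_minutes, buffer_minutes=10):
    """Return the latest suggested departure time for a meeting.

    travel_minutes is assumed to be a traffic-aware estimate supplied
    by an external source; buffer_minutes is a hypothetical safety margin.
    """
    return meeting_start - timedelta(minutes=travel_minutes + buffer_minutes)


# Hypothetical example: a 2:00 p.m. meeting with a 35-minute drive.
meeting = datetime(2018, 1, 9, 14, 0)
departure = suggest_departure(meeting, travel_minutes=35)
print(departure.strftime("%H:%M"))
```

A production system would refresh the travel estimate as traffic changes and trigger the reminder early enough for the driver to act on it.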

These personal features are combined with driver conveniences, such as the ability to make phone calls, send text messages, search for destinations and music, check the weather and manage schedules. The system also lets drivers use voice commands to control frequently used in-vehicle functions such as air-conditioning, sunroofs and door locks, and to retrieve various information about the vehicle. It will support a “Car-to-Home” service, enabling the driver to control electronic devices at home with voice commands.

The Intelligent Personal Agent sets itself apart from the competition with its “multiple-command recognition” function. When the user says, “Tell me what the weather will be like tomorrow and turn off the lights in our living room,” the system recognizes two separate commands in the same sentence and completes each task separately.
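To make the idea of multiple-command recognition concrete, here is a minimal sketch that splits one sentence into separate commands and labels each one. It is a toy illustration under stated assumptions, not the method Hyundai or SoundHound uses: the keyword table, the intent labels and the rule of splitting on the word "and" are all hypothetical, and a real assistant would rely on far more robust language understanding.

```python
import re

# Hypothetical keyword table: trigger word -> intent label (assumed names).
INTENT_KEYWORDS = {
    "weather": "weather_query",
    "lights": "home_lights",
}


def split_commands(utterance):
    """Split a compound utterance on the conjunction 'and' (naive rule)."""
    cleaned = utterance.strip().rstrip(".?!")
    parts = re.split(r"\s+and\s+", cleaned)
    return [part.strip() for part in parts if part.strip()]


def classify(command):
    """Return the first intent whose keyword appears in the command."""
    lowered = command.lower()
    for keyword, intent in INTENT_KEYWORDS.items():
        if keyword in lowered:
            return intent
    return "unknown"


def recognize(utterance):
    """Return a list of (intent, command text) pairs, one per command."""
    return [(classify(cmd), cmd) for cmd in split_commands(utterance)]


sentence = ("Tell me what the weather will be like tomorrow "
            "and turn off the lights in our living room.")
for intent, text in recognize(sentence):
    print(intent, "->", text)
```

Splitting on "and" breaks down on phrases such as "rock and roll," which is one reason a naive rule like this would not be enough in practice.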

MediaPost.com: Search Marketing Daily
